I. Introduction to Prometheus

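Prometheus is a monitoring system originally built at SoundCloud. It has been a community open-source project since 2012 and has a very active developer and user community. To emphasize its open-source nature and independent maintenance, Prometheus joined the CNCF in 2016 as the second hosted project after Kubernetes.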

Official site: https://prometheus.io/

Source code: https://github.com/prometheus

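Prometheus features:

- Multi-dimensional data model: time series identified by a metric name and key/value pairs.

- Built-in time series database: TSDB.

- PromQL: a flexible query language that uses this dimensionality to express complex queries.

- Time series are collected with a pull model over HTTP (from exporters).

- Pushing data is also supported through the Pushgateway component.

- Targets are found through service discovery or static configuration.

- Multiple graphing modes and dashboard support.

- Can be used as a data source for Grafana.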

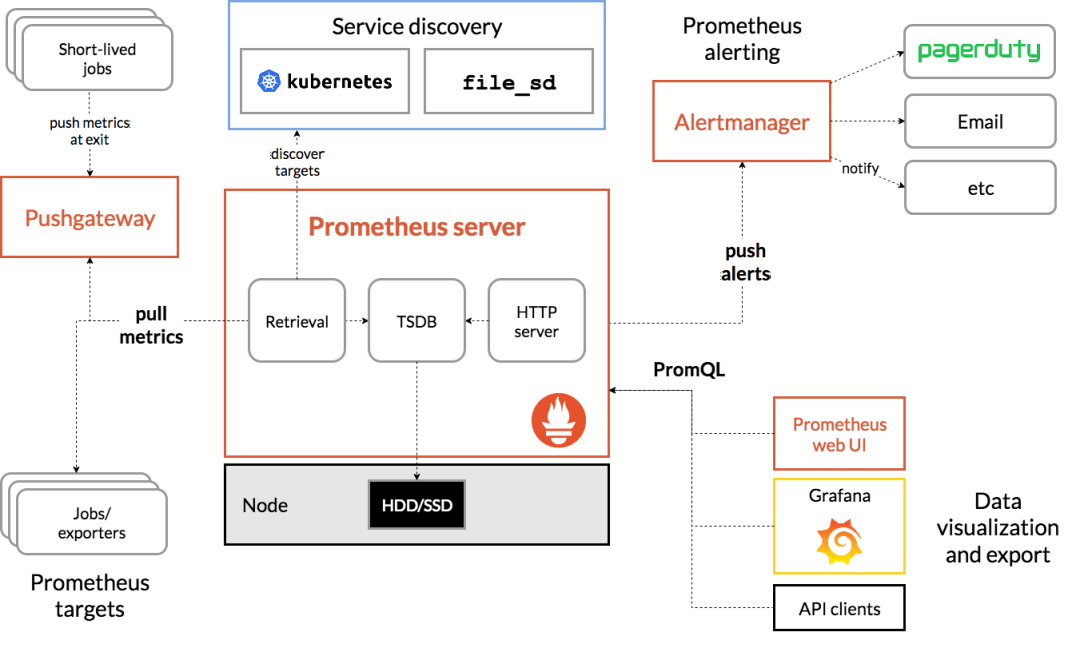

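Prometheus architecture:

- Service discovery: the key part. One mechanism is automatic discovery based on Kubernetes' own metadata, called kubernetes_sd (Kubernetes service discovery). The other is file-based discovery, where the discovery targets are written to a file; it is called file_sd.

- Retrieval: takes the targets found by service discovery and scrapes the metrics exposed by the exporters. The retrieval engine does all of the collecting and hands the data to the TSDB.

- TSDB: persists the data to disk (HDD/SSD).

- HTTP server: Prometheus also provides an HTTP server. It (1) serves alerting by pushing alerts, and (2) exposes a PromQL query interface used by the Prometheus web UI and by Grafana.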
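As a rough illustration of file-based discovery, the sketch below writes a target file in the JSON shape that file_sd reads: a list of objects, each with `targets` and optional `labels`. The file name, the `env` label and the helper name `write_file_sd` are made up for illustration and are not part of this deployment.

```python
import json
import os
import tempfile


def write_file_sd(path, groups):
    """Write target groups in the JSON layout read by file_sd.

    The data is written to a temporary file and then renamed, so a
    reader never sees a half-written file.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(groups, handle, indent=2)
    os.replace(tmp_path, path)


# Hypothetical targets and label, for illustration only.
example_groups = [
    {"targets": ["10.4.7.21:9100", "10.4.7.22:9100"], "labels": {"env": "demo"}},
]

if __name__ == "__main__":
    out = os.path.join(tempfile.mkdtemp(), "node_targets.json")
    write_file_sd(out, example_groups)
    with open(out) as handle:
        print(handle.read())
```

A scrape job would then point a `file_sd_configs` entry at the generated file; this tutorial itself relies on kubernetes_sd and static targets instead.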

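II. Setting Up Prometheus

1. Delivering the Exporters

Four exporters are normally needed: kube-state-metrics, node-exporter, cadvisor and blackbox-exporter.

1.1 Deploying kube-state-metrics

kube-state-metrics is a monitoring agent that collects basic state information about the Kubernetes cluster, for example how many nodes the cluster has, how many Deployments exist, and how many revisions a Deployment has gone through.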

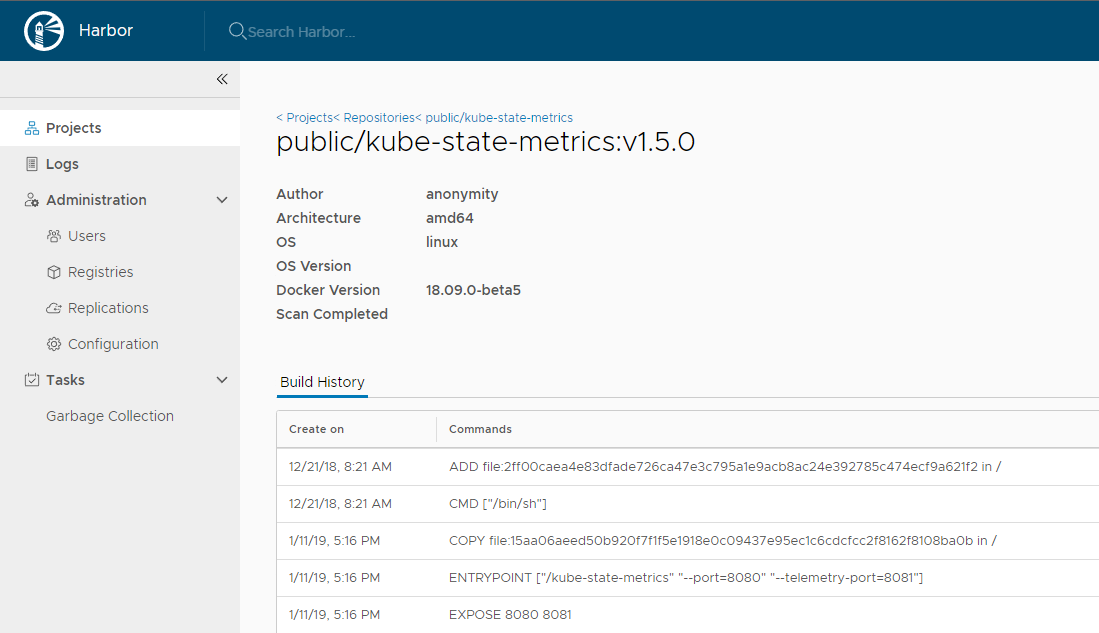

1.1.1 Preparing the image

Official kube-state-metrics repository on quay.io: https://quay.io/repository/coreos/kube-state-metrics?tab=tags

[root@k8s-7-200 ~]# docker pull quay.io/coreos/kube-state-metrics:v1.5.0 # if quay.io is unreachable, use the mirror below instead

[root@k8s-7-200 ~]# docker pull quay.mirrors.ustc.edu.cn/coreos/kube-state-metrics:v1.5.0

[root@k8s-7-200 ~]# docker images |grep kube-state

quay.io/coreos/kube-state-metrics v1.5.0 91599517197a 3 years ago 31.8MB

[root@k8s-7-200 ~]# docker tag 91599517197a harbor.itdo.top/public/kube-state-metrics:v1.5.0

[root@k8s-7-200 ~]# docker push harbor.itdo.top/public/kube-state-metrics:v1.5.0

1.1.2 Preparing the resource manifests

[root@k8s-7-200 ~]# mkdir -p /data/k8s-yaml/kube-state-metrics && cd /data/k8s-yaml/kube-state-metrics

[root@k8s-7-200 kube-state-metrics]# vi rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs:
  - list
  - watch
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - daemonsets
  - deployments
  - replicasets
  verbs:
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - statefulsets
  verbs:
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system

[root@k8s-7-200 kube-state-metrics]# vi dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "2"
  labels:
    grafanak8sapp: "true"
    app: kube-state-metrics
  name: kube-state-metrics
  namespace: kube-system
spec:
  selector:
    matchLabels:
      grafanak8sapp: "true"
      app: kube-state-metrics
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        grafanak8sapp: "true"
        app: kube-state-metrics
    spec:
      containers:
      - name: kube-state-metrics
        image: harbor.itdo.top/public/kube-state-metrics:v1.5.0  # change to your own registry address
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          name: http-metrics
          protocol: TCP
        readinessProbe:  # readiness probe: traffic is routed to the container only once it has finished starting
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 5
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
      serviceAccountName: kube-state-metrics

1.1.3 Applying the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/kube-state-metrics/rbac.yaml

serviceaccount/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/kube-state-metrics/dp.yaml

deployment.extensions/kube-state-metrics created

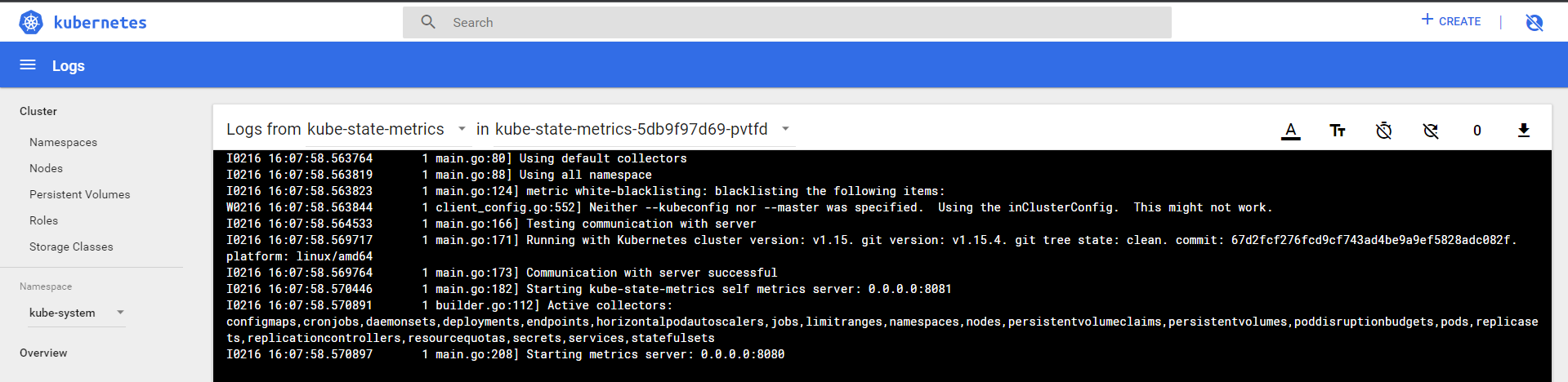

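1.1.4 Verifying the deployment

1 main.go:208] Starting metrics server: 0.0.0.0:8080    (the metrics server listens on port 8080 inside the container)

1 main.go:182] Starting kube-state-metrics self metrics server: 0.0.0.0:8081    (kube-state-metrics' own self metrics are served on port 8081)

Check that the deployment is healthy: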

[root@k8s-7-21 ~]# kubectl get pods -o wide -n kube-system |grep kube-state-metrics

kube-state-metrics-5db9f97d69-pvtfd 1/1 Running 0 21s 172.7.21.6 k8s-7-21.host.top <none> <none>

[root@k8s-7-21 ~]# curl 172.7.21.6:8080/healthz

ok

A response of ok means the service is healthy.

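1.2 Deploying node-exporter

node-exporter collects infrastructure-level information from the Kubernetes worker nodes, such as available memory, CPU and memory usage, and network I/O. It has to run on every worker node.

1.2.1 Preparing the image

Image tags: https://hub.docker.com/r/prom/node-exporter/tags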

[root@k8s-7-200 ~]# docker pull prom/node-exporter:v0.15.0

[root@k8s-7-200 ~]# docker images |grep node-exporter

prom/node-exporter v0.15.0 12d51ffa2b22 3 years ago 22.8MB

[root@k8s-7-200 ~]# docker image tag 12d51ffa2b22 harbor.itdo.top/public/node-exporter:v0.15.0

[root@k8s-7-200 ~]# docker image push harbor.itdo.top/public/node-exporter:v0.15.0

1.2.2 Preparing the resource manifests

[root@k8s-7-200 k8s-yaml]# mkdir -p /data/k8s-yaml/node-exporter && cd /data/k8s-yaml/node-exporter

[root@k8s-7-200 node-exporter]# vi ds.yaml

# node-exporter runs as a DaemonSet on every node and shares the host's network namespace
# it mounts the host's /proc and /sys directories to collect host system information
kind: DaemonSet
apiVersion: extensions/v1beta1
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    daemon: "node-exporter"
    grafanak8sapp: "true"
spec:
  selector:
    matchLabels:
      daemon: "node-exporter"
      grafanak8sapp: "true"
  template:
    metadata:
      name: node-exporter
      labels:
        daemon: "node-exporter"
        grafanak8sapp: "true"
    spec:
      volumes:
      - name: proc
        hostPath:
          path: /proc
          type: ""
      - name: sys
        hostPath:
          path: /sys
          type: ""
      containers:
      - name: node-exporter
        image: harbor.itdo.top/public/node-exporter:v0.15.0
        args:
        - --path.procfs=/host_proc
        - --path.sysfs=/host_sys
        ports:
        - name: node-exporter
          hostPort: 9100
          containerPort: 9100
          protocol: TCP
        volumeMounts:
        - name: sys
          readOnly: true
          mountPath: /host_sys
        - name: proc
          readOnly: true
          mountPath: /host_proc
      hostNetwork: true

1.2.3 Applying the resource manifests

Run this on any one node:

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/node-exporter/ds.yaml

daemonset.extensions/node-exporter created

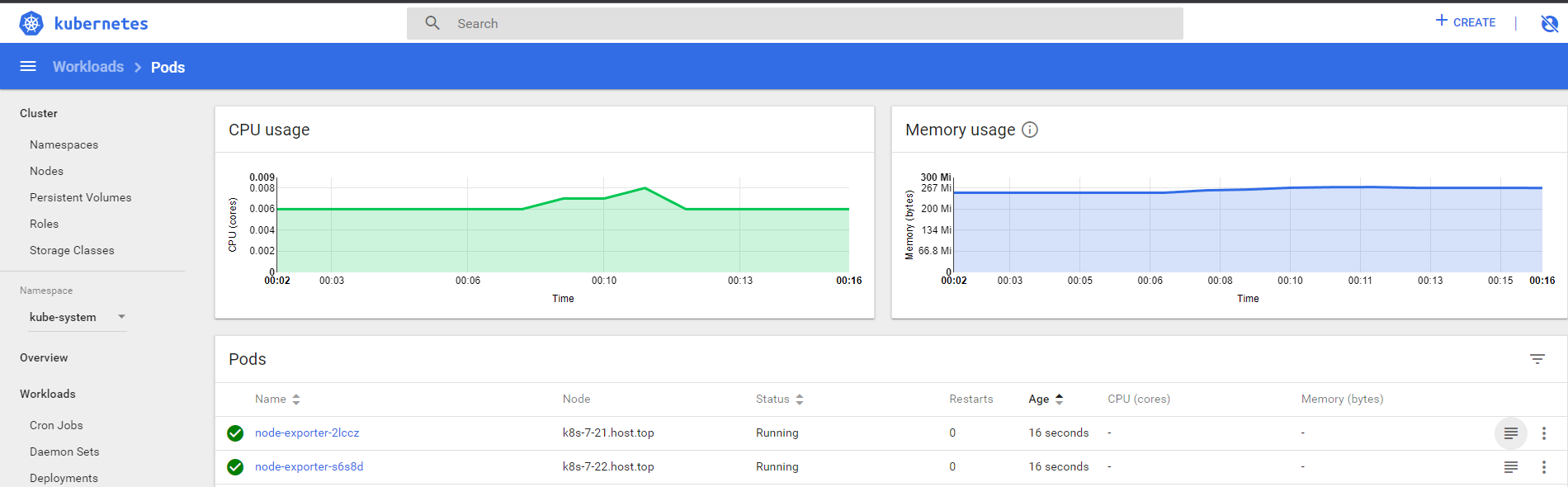

1.2.4 Verifying the deployment

Check that port 9100 is listening:

[root@k8s-7-21 ~]# netstat -tulpn |grep 9100

tcp6 0 0 :::9100 :::* LISTEN 27069/node_exporter

[root@k8s-7-21 ~]# kubectl get pods -o wide -n kube-system |grep node-exporter

node-exporter-2lccz 1/1 Running 0 2m13s 10.4.7.21 k8s-7-21.host.top <none> <none>

node-exporter-s6s8d 1/1 Running 0 2m13s 10.4.7.22 k8s-7-22.host.top <none> <none>

Because the pods share the host's network namespace, they have no IP of their own; the pod IP is the host IP.

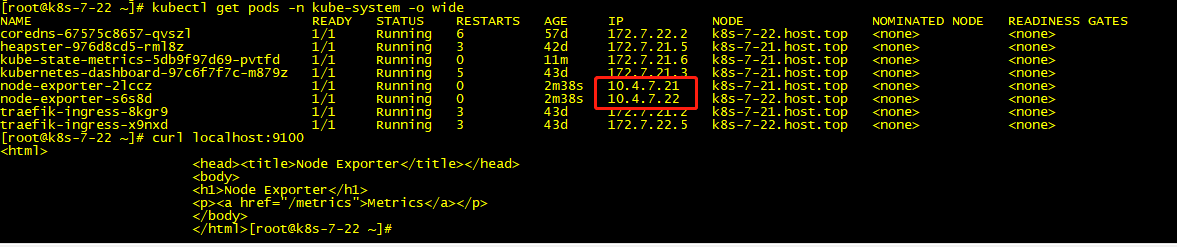

[root@k8s-7-21 ~]# curl localhost:9100

<html>

<head><title>Node Exporter</title></head>

<body>

<h1>Node Exporter</h1>

<p><a href="/metrics">Metrics</a></p>

</body>

</html>

Host metrics collected by the container: curl http://10.4.7.21:9100/metrics

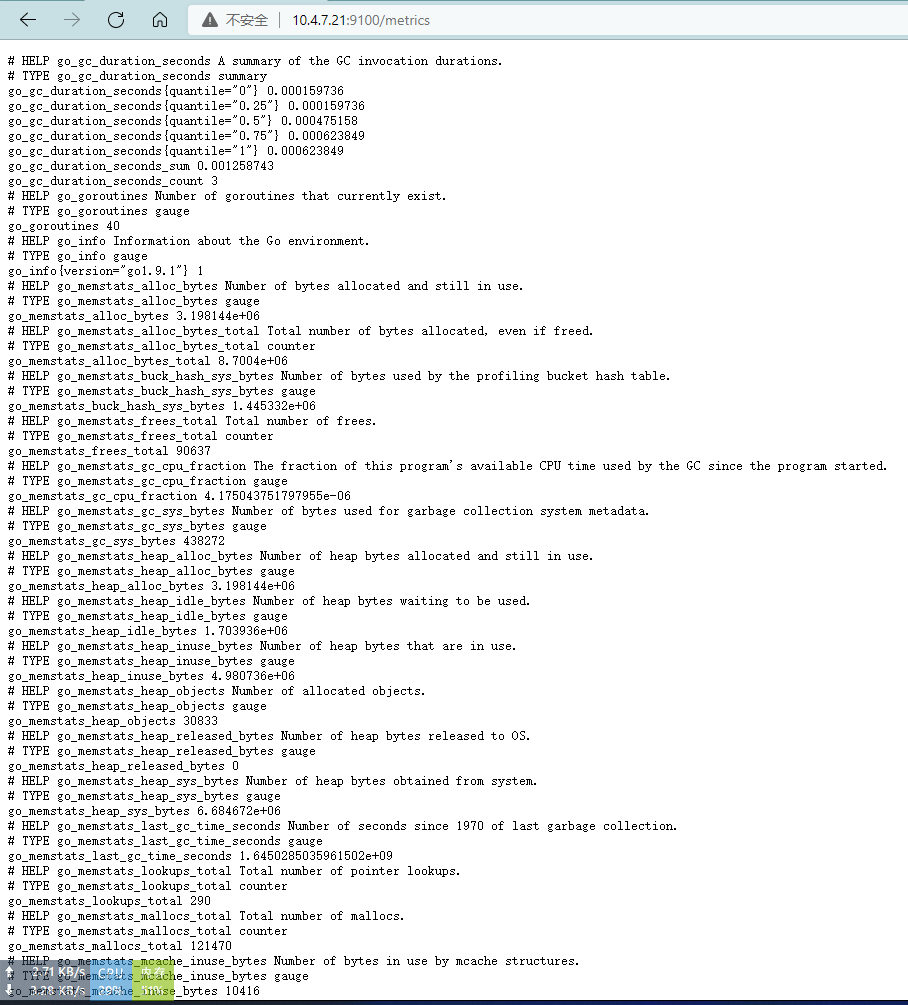

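1.3 Deploying cadvisor

So far nothing tells us how many resources the containers themselves consume. cadvisor is the key tool for monitoring resource usage inside containers, and it does so from outside the containers. Before Kubernetes 1.9, cadvisor was integrated with the kubelet and started together with it; since 1.9 it is deployed separately.

Once deployed, cadvisor asks the kubelet for data, and the kubelet in turn asks the Docker engine; this is how the data is collected. Monitoring of the resources consumed by the containers themselves therefore depends on cadvisor, not on kube-state-metrics.

Official image: https://hub.docker.com/r/google/cadvisor

1.3.1 Preparing the image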

[root@k8s-7-200 ~]# docker pull google/cadvisor:v0.28.3

[root@k8s-7-200 node-exporter]# docker images |grep cadvisor

google/cadvisor v0.28.3 75f88e3ec333 3 years ago 62.2MB

[root@k8s-7-200 node-exporter]# docker image tag 75f88e3ec333 harbor.itdo.top/public/cadvisor:v0.28.3

[root@k8s-7-200 node-exporter]# docker push harbor.itdo.top/public/cadvisor:v0.28.3

1.3.2 Preparing the resource manifests

[root@k8s-7-200 k8s-yaml]# mkdir -p /data/k8s-yaml/cadvisor && cd /data/k8s-yaml/cadvisor

[root@k8s-7-200 cadvisor]# vim ds.yaml

# cadvisor runs as a DaemonSet on the nodes; the toleration below matches the node-role.kubernetes.io/master:NoSchedule taint
# several host directories are mounted into the container, such as Docker's data directory
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: cadvisor
  namespace: kube-system
  labels:
    app: cadvisor
spec:
  selector:
    matchLabels:
      name: cadvisor
  template:
    metadata:
      labels:
        name: cadvisor
    spec:
      hostNetwork: true
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: cadvisor
        image: harbor.itdo.top/public/cadvisor:v0.28.3  # change to your own registry address
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: rootfs
          mountPath: /rootfs
          readOnly: true
        - name: var-run
          mountPath: /var/run
        - name: sys
          mountPath: /sys
          readOnly: true
        - name: docker
          mountPath: /var/lib/docker
          readOnly: true
        ports:
        - name: http
          containerPort: 4194
          protocol: TCP
        readinessProbe:
          tcpSocket:
            port: 4194
          initialDelaySeconds: 5
          periodSeconds: 10
        args:
        - --housekeeping_interval=10s
        - --port=4194
      terminationGracePeriodSeconds: 30
      volumes:
      - name: rootfs
        hostPath:
          path: /
      - name: var-run
        hostPath:
          path: /var/run
      - name: sys
        hostPath:
          path: /sys
      - name: docker
        hostPath:
          path: /data/docker

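Explanation:

effect defines how a taint repels pods:

- NoSchedule: affects scheduling only; pods already running on the node are not affected.

- NoExecute: affects scheduling and also running pods; pods that do not tolerate the taint are evicted from the node.

- PreferNoSchedule: a soft constraint; the scheduler tries to avoid placing pods on nodes with this taint, but may still do so when no other node is available.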
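To make the interplay between a taint and a toleration concrete, here is a simplified Python model of the matching rule as Kubernetes documents it. The function name and the dict layout are illustrative assumptions; the real check lives inside the scheduler and is not called like this.

```python
def tolerates(toleration, taint):
    """Return True if a toleration matches a taint (simplified sketch)."""
    # An empty effect on the toleration matches every effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    # An empty key (only meaningful together with Exists) matches every key.
    if toleration.get("key") and toleration["key"] != taint["key"]:
        return False
    operator = toleration.get("operator", "Equal")
    if operator == "Exists":
        return True
    # Equal is the default operator: the values must be identical.
    return toleration.get("value", "") == taint.get("value", "")


# The toleration from the cadvisor manifest, and a master taint with an
# empty value (the taint value is an assumption for this example).
cadvisor_toleration = {"key": "node-role.kubernetes.io/master", "effect": "NoSchedule"}
master_taint = {"key": "node-role.kubernetes.io/master", "value": "", "effect": "NoSchedule"}

if __name__ == "__main__":
    print(tolerates(cadvisor_toleration, master_taint))
```

Under this model the toleration in ds.yaml matches a NoSchedule master taint, so pods carrying it may be scheduled onto such a node; a NoExecute taint with the same key would not be tolerated.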

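Create a symlink on every worker node.

Reason: with this version, the container fails to start unless the symlink exists.

Both k8s-7-21 and k8s-7-22 need this change.

First remount /sys/fs/cgroup/ read-write:

[root@k8s-7-21 ~]# mount -o remount,rw /sys/fs/cgroup/

Check the current layout: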

[root@k8s-7-21 ~]# ll /sys/fs/cgroup/

drwxr-xr-x. 7 root root 0 2月 1 15:51 blkio

lrwxrwxrwx. 1 root root 11 2月 1 15:45 cpu -> cpu,cpuacct

lrwxrwxrwx. 1 root root 11 2月 1 15:45 cpuacct -> cpu,cpuacct

drwxr-xr-x. 7 root root 0 2月 1 15:51 cpu,cpuacct

The container looks for the path cpuacct,cpu, so create a symlink with that name:

[root@k8s-7-21 ~]# ln -s /sys/fs/cgroup/cpu,cpuacct/ /sys/fs/cgroup/cpuacct,cpu

[root@k8s-7-21 ~]# ll /sys/fs/cgroup/

lrwxrwxrwx. 1 root root 11 2月 1 15:45 cpu -> cpu,cpuacct

lrwxrwxrwx. 1 root root 11 2月 1 15:45 cpuacct -> cpu,cpuacct

lrwxrwxrwx. 1 root root 27 2月 2 10:35 cpuacct,cpu -> /sys/fs/cgroup/cpu,cpuacct/

drwxr-xr-x. 7 root root 0 2月 1 15:51 cpu,cpuacct

1.3.3 Applying the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/cadvisor/ds.yaml

daemonset.extensions/cadvisor created

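1.3.4 Checking the status

1.4 blackbox-exporter

blackbox-exporter probes whether business containers are alive. This mirrors a classic Zabbix scenario: Tomcat listens on port 8080, the port is monitored, and an alert fires if it goes down unexpectedly. Here blackbox-exporter is the dedicated tool for detecting containers that are down (planned releases and code updates do not count).

1.4.1 Preparing the image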

[root@k8s-7-200 cadvisor]# docker pull prom/blackbox-exporter:v0.15.1

[root@k8s-7-200 cadvisor]# docker images |grep blackbox-exporter

prom/blackbox-exporter v0.15.1 81b70b6158be 16 months ago 19.7MB

[root@k8s-7-200 cadvisor]# docker image tag 81b70b6158be harbor.itdo.top/public/blackbox-exporter:v0.15.1

[root@k8s-7-200 cadvisor]# docker image push harbor.itdo.top/public/blackbox-exporter:v0.15.1

1.4.2 Preparing the resource manifests

[root@k8s-7-200 cadvisor]# mkdir -p /data/k8s-yaml/blackbox-exporter && cd /data/k8s-yaml/blackbox-exporter

[root@k8s-7-200 blackbox-exporter]# vi cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: kube-system
data:
  blackbox.yml: |-
    modules:
      http_2xx:
        prober: http
        timeout: 2s
        http:
          valid_http_versions: ["HTTP/1.1", "HTTP/2"]
          valid_status_codes: [200,301,302]
          method: GET
          preferred_ip_protocol: "ip4"
      tcp_connect:
        prober: tcp
        timeout: 2s

[root@k8s-7-200 blackbox-exporter]# vi dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: blackbox-exporter
  namespace: kube-system
  labels:
    app: blackbox-exporter
  annotations:
    deployment.kubernetes.io/revision: "1"  # annotation values must be strings, hence the quotes
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      volumes:
      - name: config
        configMap:
          name: blackbox-exporter
          defaultMode: 420
      containers:
      - name: blackbox-exporter
        image: harbor.itdo.top/public/blackbox-exporter:v0.15.1
        imagePullPolicy: IfNotPresent
        args:
        - --config.file=/etc/blackbox_exporter/blackbox.yml
        - --log.level=info
        - --web.listen-address=:9115
        ports:
        - name: blackbox-port
          containerPort: 9115
          protocol: TCP
        resources:
          limits:
            cpu: 200m
            memory: 256Mi
          requests:
            cpu: 100m
            memory: 50Mi
        volumeMounts:
        - name: config
          mountPath: /etc/blackbox_exporter
        readinessProbe:
          tcpSocket:
            port: 9115
          initialDelaySeconds: 5
          timeoutSeconds: 5
          periodSeconds: 10
          successThreshold: 1
          failureThreshold: 3

[root@k8s-7-200 blackbox-exporter]# vi svc.yaml

# targetPort is omitted, so it defaults to the value of port (9115), the container port named blackbox-port in the pod
kind: Service
apiVersion: v1
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  selector:
    app: blackbox-exporter
  ports:
  - name: blackbox-port
    protocol: TCP
    port: 9115

[root@k8s-7-200 blackbox-exporter]# vi ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  rules:
  - host: blackbox.itdo.top
    http:
      paths:
      - path: /
        backend:
          serviceName: blackbox-exporter
          servicePort: blackbox-port

Configure DNS resolution for blackbox.itdo.top:

[root@k8s-7-11 ~]# vi /var/named/itdo.top.zone

blackbox A 10.4.7.10

[root@k8s-7-11 ~]# systemctl restart named

[root@k8s-7-21 ~]# dig -t A blackbox.itdo.top @192.168.0.2 +short

10.4.7.10

1.4.3 Applying the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/blackbox-exporter/cm.yaml

configmap/blackbox-exporter created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/blackbox-exporter/dp.yaml

deployment.extensions/blackbox-exporter created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/blackbox-exporter/svc.yaml

service/blackbox-exporter created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/blackbox-exporter/ingress.yaml

ingress.extensions/blackbox-exporter created

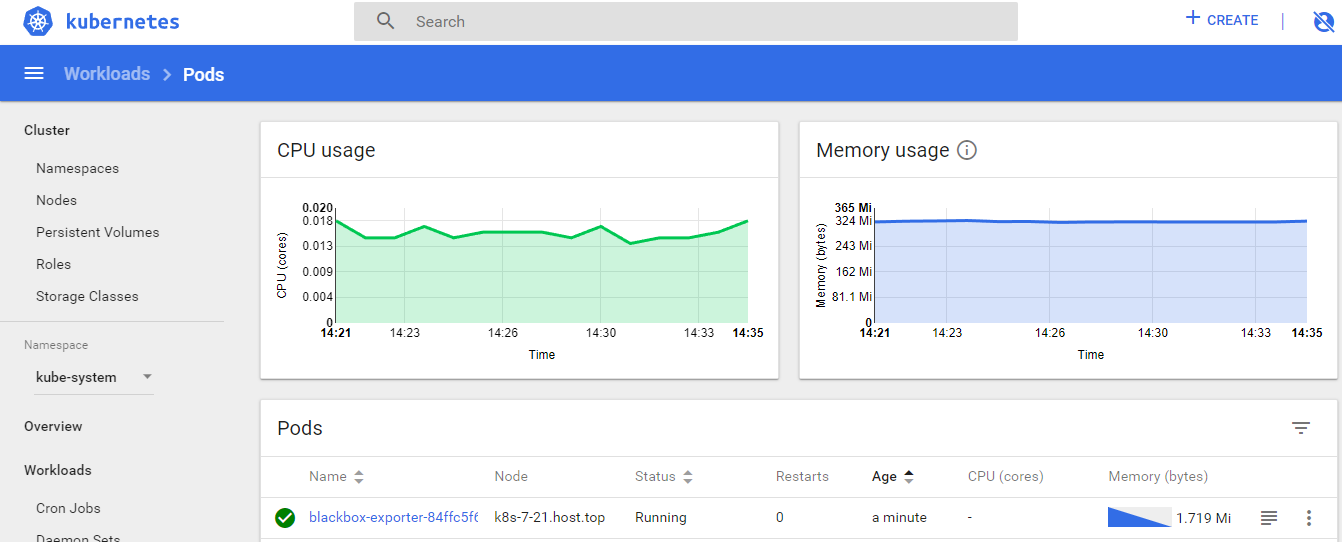

1.4.4 Checking the status

2. Delivering the Prometheus Server

2.1 Preparing the image

[root@k8s-7-200 ~]# docker pull prom/prometheus:v2.14.0

[root@k8s-7-200 ~]# docker image tag prom/prometheus:v2.14.0 harbor.itdo.top/infra/prometheus:v2.14.0

[root@k8s-7-200 ~]# docker image push harbor.itdo.top/infra/prometheus:v2.14.0

2.2 Preparing the resource manifests

[root@k8s-7-200 ~]# mkdir /data/k8s-yaml/prometheus && cd /data/k8s-yaml/prometheus

[root@k8s-7-200 prometheus]# vi rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
  namespace: infra
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/metrics
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: infra

[root@k8s-7-200 prometheus]# vi dp.yaml

# In production, Prometheus usually runs on a dedicated large-memory node, with a taint that keeps other pods off that node
# --storage.tsdb.min-block-duration: how many minutes of the most recent TSDB data are kept in memory; production setups cache more
# --storage.tsdb.retention: how long TSDB data is retained; production setups keep more
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "5"
  labels:
    name: prometheus
  name: prometheus
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      nodeName: k8s-7-21.host.top
      containers:
      - name: prometheus
        image: harbor.itdo.top/infra/prometheus:v2.14.0
        imagePullPolicy: IfNotPresent
        command:
        - /bin/prometheus
        args:
        - --config.file=/data/etc/prometheus.yml
        - --storage.tsdb.path=/data/prom-db
        - --storage.tsdb.min-block-duration=5m
        - --storage.tsdb.retention=24h
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /data
          name: data
        resources:
          requests:
            cpu: "500m"
            memory: "1Gi"
          limits:
            cpu: "1000m"
            memory: "2Gi"
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      serviceAccountName: prometheus
      volumes:
      - name: data
        nfs:
          server: k8s-7-200.itdo.top
          path: /data/nfs-volume/prometheus

[root@k8s-7-200 prometheus]# vi svc.yaml    # exposes port 9090

apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: infra
spec:
  ports:
  - port: 9090
    protocol: TCP
    targetPort: 9090
  selector:
    app: prometheus

[root@k8s-7-200 prometheus]# vi ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: traefik
  name: prometheus
  namespace: infra
spec:
  rules:
  - host: prometheus.itdo.top
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus
          servicePort: 9090

Configure DNS resolution:

[root@k8s-7-11 ~]# vi /var/named/itdo.top.zone

prometheus A 10.4.7.10

[root@k8s-7-11 ~]# systemctl restart named

[root@k8s-7-11 ~]# dig -t A prometheus.itdo.top @10.4.7.11 +short

10.4.7.10

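2.3 Preparing the Prometheus configuration

Copy the certificates:

[root@k8s-7-200 ~]# mkdir -p /data/nfs-volume/prometheus/{etc,prom-db}    # etc holds the configuration, prom-db holds the time series database

[root@k8s-7-200 prometheus]# cd /data/nfs-volume/prometheus/etc/    # the certificates copied here are used for TLS (the etcd job in prometheus.yml references them); Prometheus can auto-discover Kubernetes metadata because it talks to the apiserver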

[root@k8s-7-200 etc]# cp /opt/certs/ca.pem .

[root@k8s-7-200 etc]# cp -a /opt/certs/client.pem .

[root@k8s-7-200 etc]# cp -a /opt/certs/client-key.pem .

[root@k8s-7-200 etc]# ll

-rw-r--r--. 1 root root 1346 2月 5 16:12 ca.pem

-rw-------. 1 root root 1679 11月 30 12:22 client-key.pem

-rw-r--r--. 1 root root 1363 11月 30 12:22 client.pem

Prepare the Prometheus configuration file, prometheus.yml:

[root@k8s-7-200 etc]# vim /data/nfs-volume/prometheus/etc/prometheus.yml

global:
  scrape_interval: 15s
  evaluation_interval: 15s
scrape_configs:
- job_name: 'etcd'  # only the etcd job needs its parameters adjusted; every other job relies on service discovery and can be reused in production as-is
  tls_config:
    ca_file: /data/etc/ca.pem
    cert_file: /data/etc/client.pem
    key_file: /data/etc/client-key.pem
  scheme: https
  static_configs:  # change these to the IPs of your etcd members
  - targets:
    - '10.4.7.12:2379'
    - '10.4.7.21:2379'
    - '10.4.7.22:2379'
- job_name: 'kubernetes-apiservers'
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: default;kubernetes;https
- job_name: 'kubernetes-pods'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'kubernetes-kubelet'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:10255
- job_name: 'kubernetes-cadvisor'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:4194
- job_name: 'kubernetes-kube-state'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
  - source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]
    regex: .*true.*
    action: keep
  - source_labels: ['__meta_kubernetes_pod_label_daemon', '__meta_kubernetes_pod_node_name']
    regex: 'node-exporter;(.*)'
    action: replace
    target_label: nodename
- job_name: 'blackbox_http_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [http_2xx]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: http
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port, __meta_kubernetes_pod_annotation_blackbox_path]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+);(.+)
    replacement: $1:$2$3
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'blackbox_tcp_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [tcp_connect]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: tcp
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'traefik'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]
    action: keep
    regex: traefik
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name

2.4 Applying the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/prometheus/rbac.yaml

serviceaccount/prometheus created

clusterrole.rbac.authorization.k8s.io/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/prometheus/dp.yaml

deployment.extensions/prometheus created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/prometheus/svc.yaml

service/prometheus created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/prometheus/ingress.yaml

ingress.extensions/prometheus created

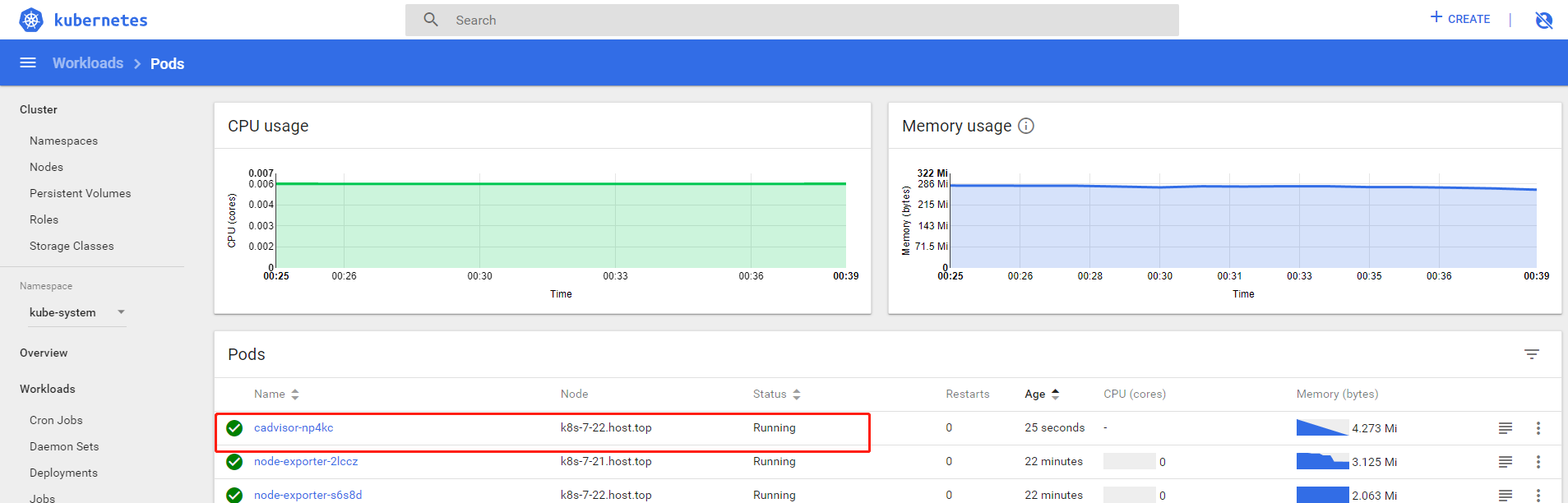

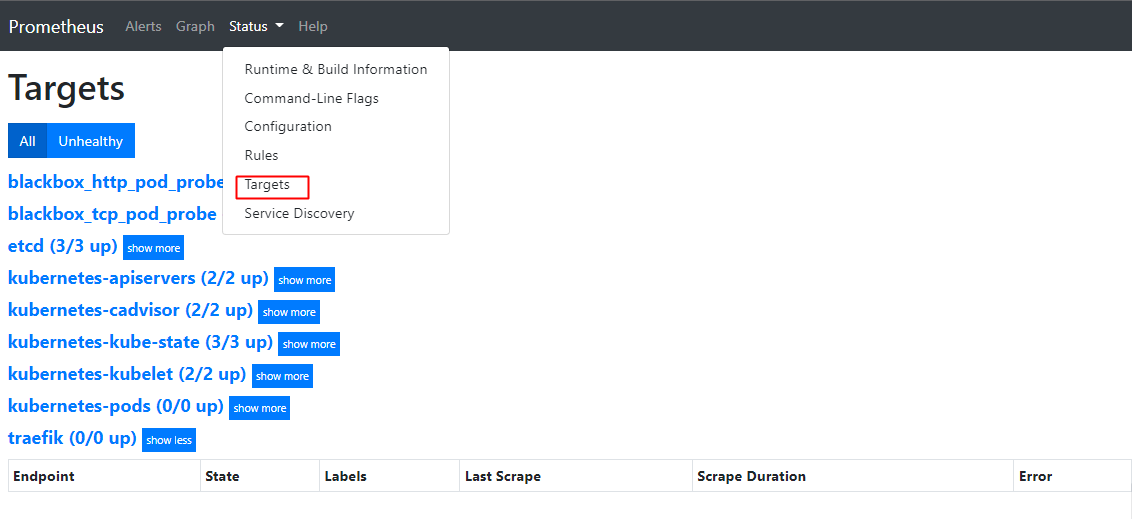

2.5 Checking the status

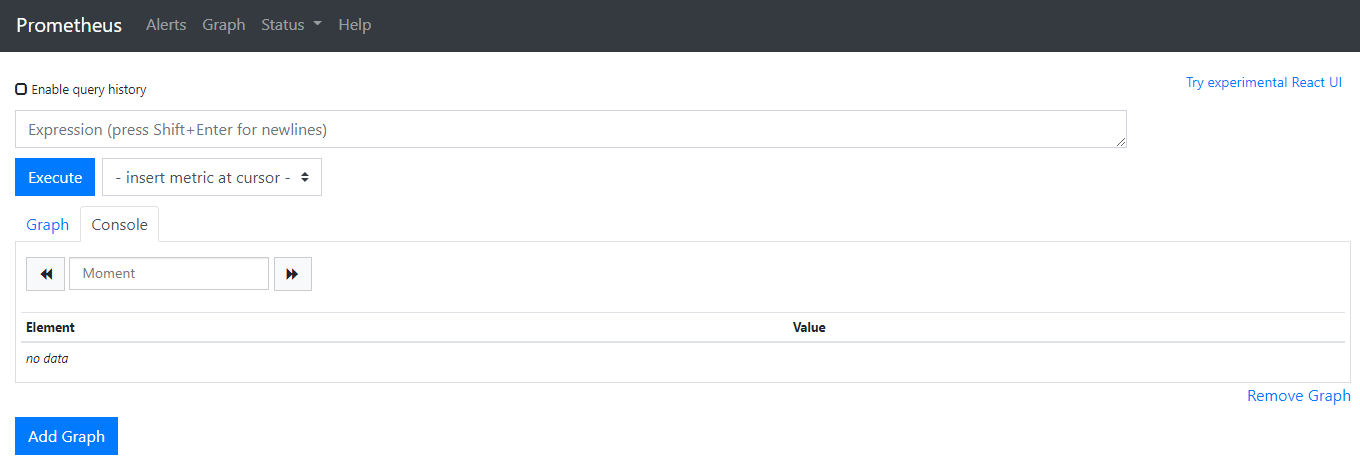

Opening http://prometheus.itdo.top redirects to http://prometheus.itdo.top/graph

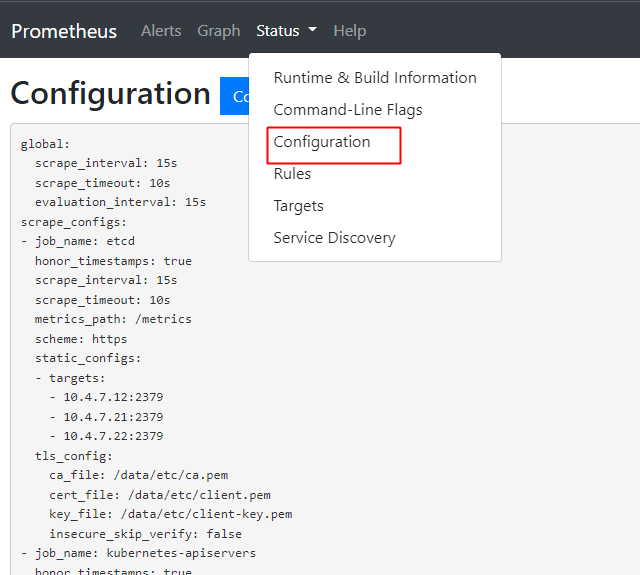

3. Configuration file walkthrough

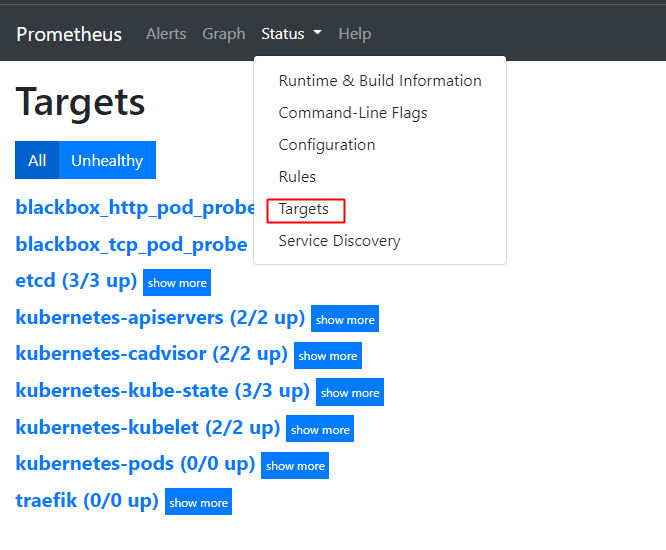

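Under normal conditions you will see the targets shown above. They come from the scrape jobs defined earlier in prometheus.yml.

The same information is available under Configuration. Each job_name in the configuration file appears as one group on the Targets page.

Explanation of the job_name: etcd configuration: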

- job_name: etcd
  honor_timestamps: true
  scrape_interval: 15s
  scrape_timeout: 10s
  metrics_path: /metrics  # the path scraped on each target; for the etcd member 10.4.7.12:2379 this is 10.4.7.12:2379/metrics
  scheme: https
  static_configs:  # static configuration; etcd is the only job with hard-coded targets, all other jobs use service discovery
  - targets:
    - 10.4.7.12:2379
    - 10.4.7.21:2379
    - 10.4.7.22:2379
  tls_config:  # etcd is scraped over TLS, verified with the CA and authenticated with the client certificate
    ca_file: /data/etc/ca.pem
    cert_file: /data/etc/client.pem
    key_file: /data/etc/client-key.pem
    insecure_skip_verify: false

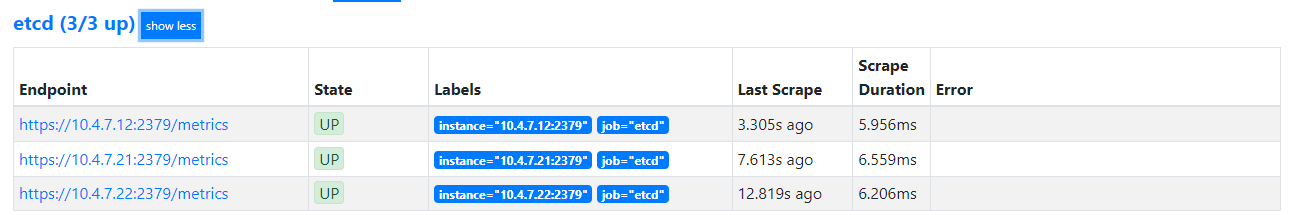

Endpoint is the address the data is scraped from. Labels are the dimensions by which the related metrics can be filtered.

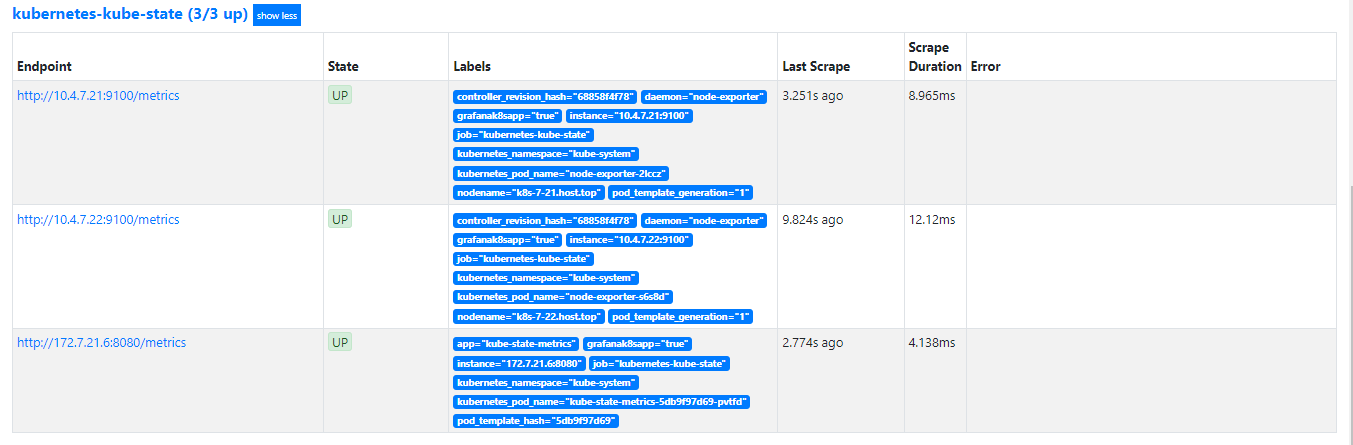

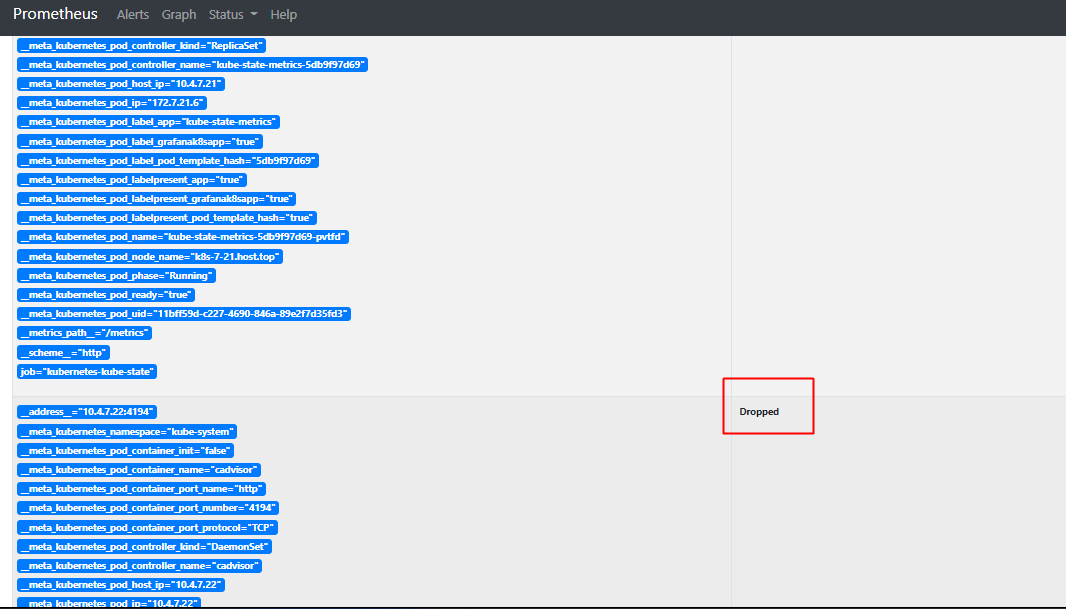

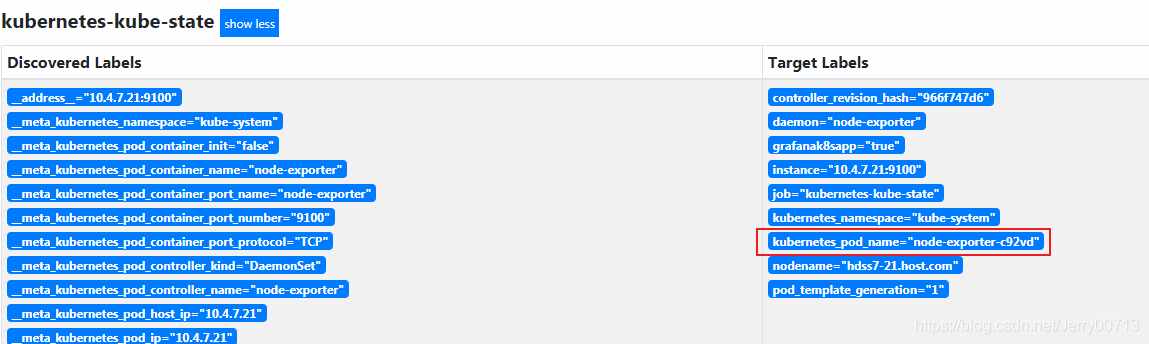

Explanation of the job_name: kubernetes-kube-state configuration:

This job uses service discovery.

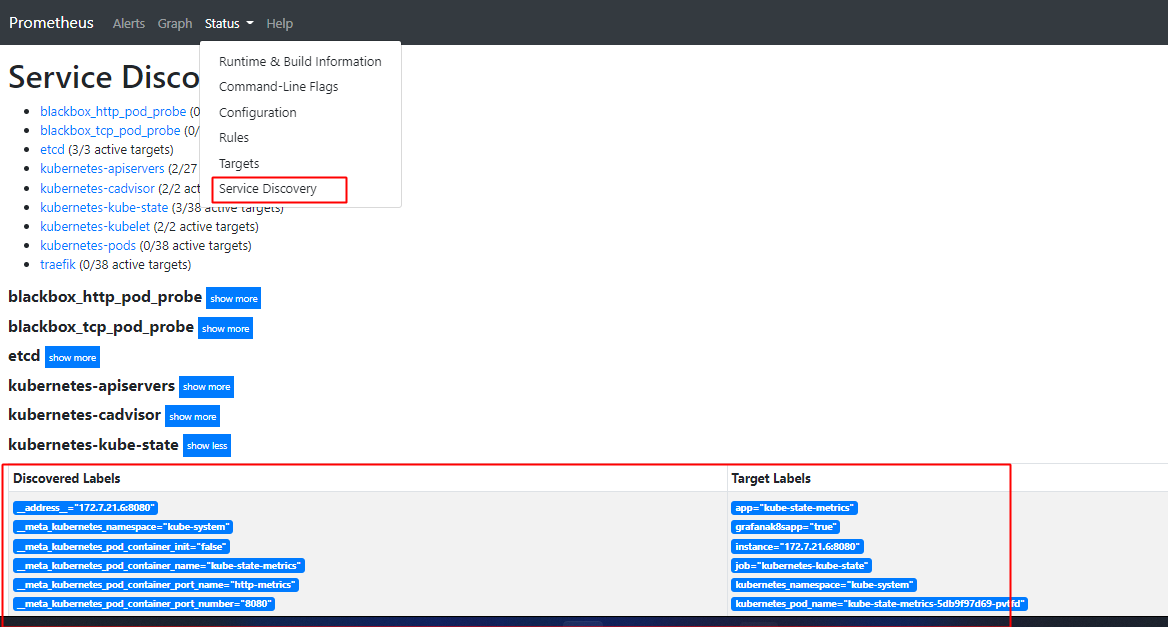

Service Discovery in detail:

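- job_name: kubernetes-kube-state
  honor_timestamps: true
  scrape_interval: 15s    # how often to scrape
  scrape_timeout: 10s     # scrape timeout
  metrics_path: /metrics  # the URL path that is requested
  scheme: http
  kubernetes_sd_configs:  # Kubernetes service discovery configuration, see the note that follows
  - role: pod             # discovers every pod in the cluster
  relabel_configs:        # not every pod should end up in this job, so the targets are filtered here

Note on kubernetes_sd_configs: the kubernetes-kube-state job is defined with Kubernetes service discovery. Prometheus can discover Kubernetes this way (kubernetes_sd) because it supports Kubernetes natively; once the role is declared, the retrieval engine discovers targets from the Kubernetes metadata automatically.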

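The value in the Endpoint column comes from the __address__ label shown under Service Discovery.

With this configuration all pods are discovered first and then filtered. What remains is http://10.4.7.21:9100/metrics and http://10.4.7.22:9100/metrics (node_exporter) and http://172.7.22.5:8080/metrics (kube-state-metrics). How does the filtering work?

Explanation of relabel_configs (keep):

relabel_configs:    # not every pod should end up in this job, so the targets are filtered here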

- separator: ;
  regex: __meta_kubernetes_pod_label_(.+)
  replacement: $1
  action: labelmap
- source_labels: [__meta_kubernetes_namespace]
  separator: ;
  regex: (.*)
  target_label: kubernetes_namespace
  replacement: $1
  action: replace
- source_labels: [__meta_kubernetes_pod_name]
  separator: ;
  regex: (.*)
  target_label: kubernetes_pod_name
  replacement: $1
  action: replace
- source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]
  separator: ;
  regex: .*true.*
  replacement: $1
  action: keep
- source_labels: [__meta_kubernetes_pod_label_daemon, __meta_kubernetes_pod_node_name]
  separator: ;
  regex: node-exporter;(.*)
  target_label: nodename
  replacement: $1
  action: replace

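The action field matters most. keep and drop are the main actions, so look at them first.

source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]    # source value: the pod label named grafanak8sapp
separator: ;
regex: .*true.*    # a pod whose grafanak8sapp label value matches this regex is kept
replacement: $1
action: keep    # pods without the label, or with a non-matching value, are dropped from the job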

[root@k8s-7-22 ~]# kubectl get pods -o wide -n kube-system |grep kube-state-metrics

kube-state-metrics-6bc667c8b9-z9dhd 1/1 Running 1 5d18h 172.7.22.5 k8s-7-22.host.com <none> <none>

[root@k8s-7-22 ~]# kubectl get pod kube-state-metrics-6bc667c8b9-z9dhd -o yaml -n kube-system |grep -A 5 labels

  labels:
    app: kube-state-metrics
    grafanak8sapp: "true"    # defined earlier in dp.yaml
    pod-template-hash: 6bc667c8b9
  name: kube-state-metrics-6bc667c8b9-z9dhd
  namespace: kube-system

[root@k8s-7-22 ~]#
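The keep behaviour described above can be approximated in a few lines of Python. This is a sketch of the idea, not Prometheus' own code: the `keep` helper and the reduced label sets are assumptions for illustration, and `re.fullmatch` stands in for the fact that relabeling regexes are anchored at both ends.

```python
import re


def keep(targets, source_label, pattern):
    """Keep only targets whose source label fully matches the pattern."""
    regex = re.compile(pattern)
    # A missing label is treated as an empty string, which does not
    # match .*true.* and is therefore dropped.
    return [t for t in targets if regex.fullmatch(t.get(source_label, ""))]


# Hypothetical discovery result, reduced to the labels that matter here.
discovered = [
    {"__meta_kubernetes_pod_name": "kube-state-metrics-6bc667c8b9-z9dhd",
     "__meta_kubernetes_pod_label_grafanak8sapp": "true"},
    {"__meta_kubernetes_pod_name": "nginx-ds-q5tmt"},
]

if __name__ == "__main__":
    kept = keep(discovered, "__meta_kubernetes_pod_label_grafanak8sapp", ".*true.*")
    print([t["__meta_kubernetes_pod_name"] for t in kept])
```

Only the pod carrying grafanak8sapp: "true" survives the filter, which is why the kube-state-metrics and node-exporter pods are the only targets listed under this job.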

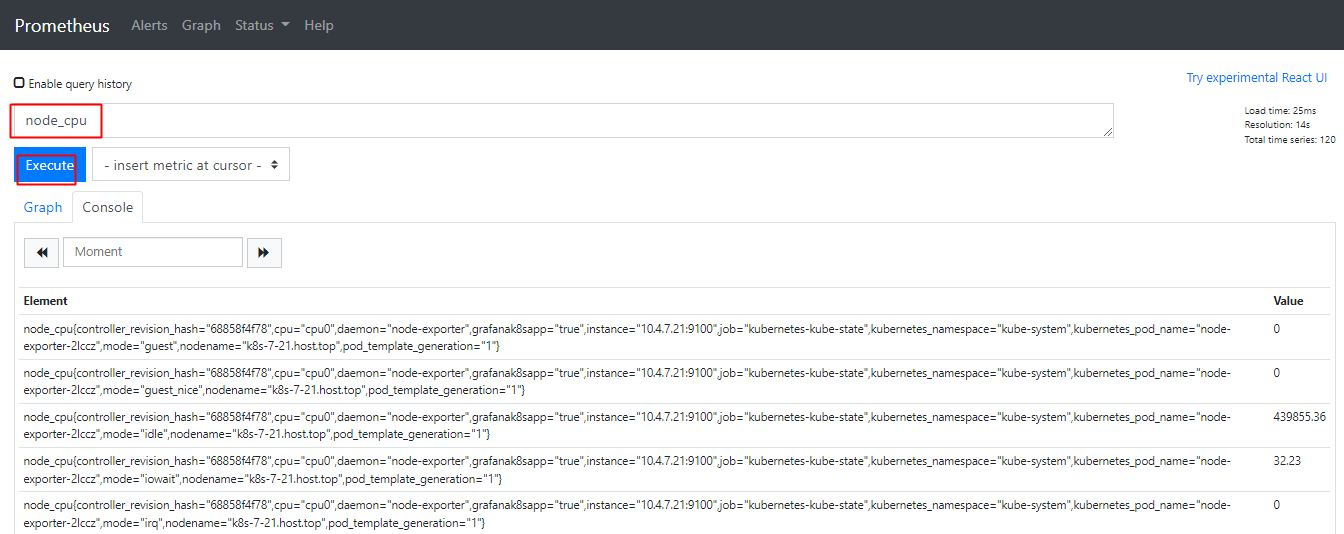

使用Prometheus 查询

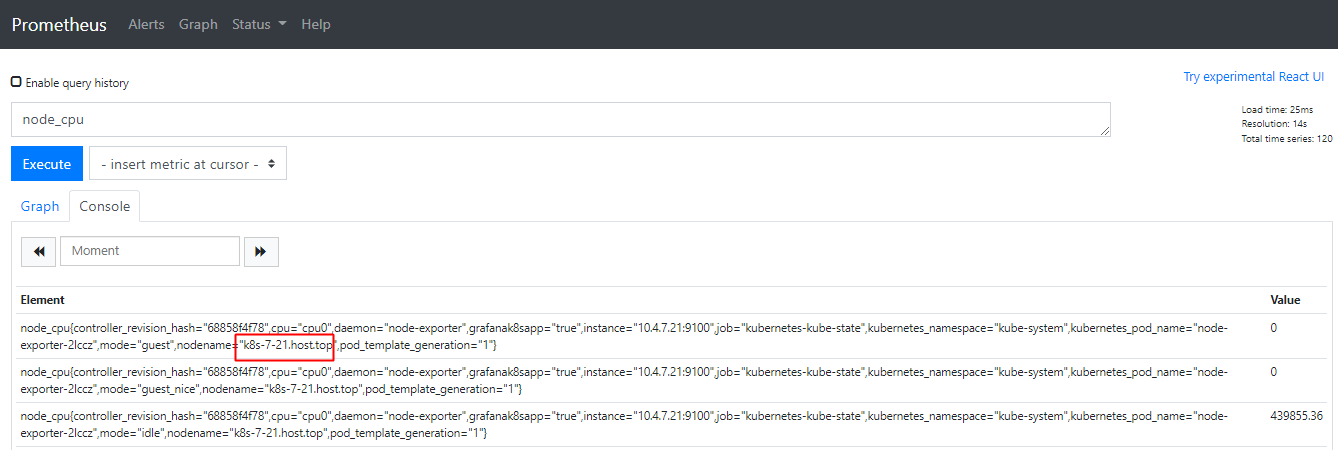

输入node_cpu (函数) ,点击Execute ,其中一条就是一个数据维度

搜索的都是监控指标,所以输出都是监控指标。如node_cpu{nodename=“k8s-7-21.host.top”}

总结:从拉取到的所有的数据中(拉取的都是函数),存到普罗米修斯,通过Execute(标签)过滤这些函数

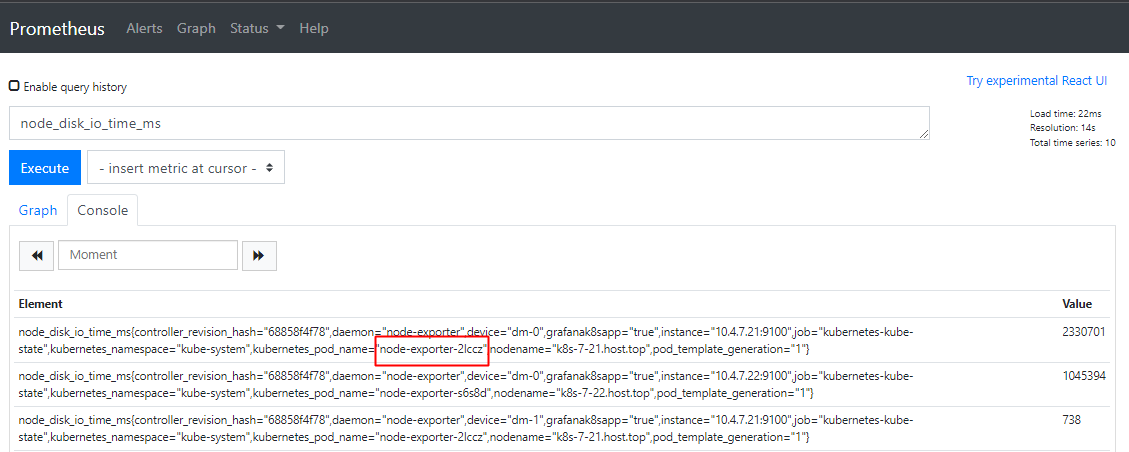

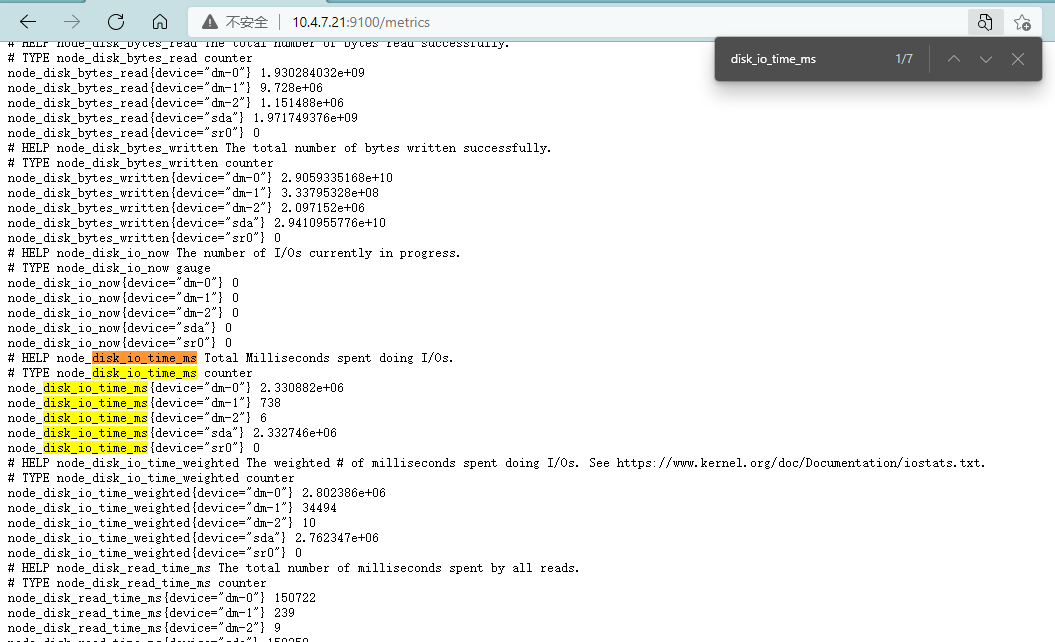

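For example, the series returned for node_disk_io_time_ms come from kubernetes_pod_name="node-exporter-2lccz".

On the Targets page, pick any endpoint under kubernetes-kube-state (3/3 up), such as http://10.4.7.21:9100/metrics; this shows that node-exporter collects the data. Every scrape_interval (15s), Prometheus requests http://10.4.7.21:9100/metrics, much like running curl, to fetch the latest data.

Question: a query for node_disk_io_time_ms returns series with many labels, yet at http://10.4.7.21:9100/metrics the metric node_disk_io_time_ms only carries {device="dm-0"}. Where do the other labels come from? They are determined by the rules under relabel_configs.

Explanation of relabel_configs (replace):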

- job_name: kubernetes-kube-state
  (omitted)
  relabel_configs:
  (omitted)
  - source_labels: [__meta_kubernetes_pod_name]
    separator: ;
    regex: (.*)
    target_label: kubernetes_pod_name
    replacement: $1
    action: replace
  (omitted)

This rule means: take the pod name of each retained pod discovered from Kubernetes (__meta_kubernetes_pod_name) and attach it to that target's metrics as the label kubernetes_pod_name. For example, in the kubernetes-kube-state job the data scraped from http://10.4.7.21:9100/metrics comes from the pod named node-exporter-c92vd, so every sample scraped from http://10.4.7.21:9100/metrics is labeled with that pod name,

that is, kubernetes_pod_name="node-exporter-c92vd"
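A small sketch of how the labels of a target end up on every sample scraped from it. The function name and the sample values are illustrative assumptions; the handling of clashes models the default behaviour (honor_labels: false), where a scraped label that collides with a target label is renamed with an exported_ prefix.

```python
def attach_target_labels(sample_labels, target_labels):
    """Merge a target's labels into the labels of one scraped sample."""
    merged = dict(target_labels)
    for name, value in sample_labels.items():
        if name in merged:
            # Clash: the target label wins, the scraped one is renamed.
            merged["exported_" + name] = value
        else:
            merged[name] = value
    return merged


# Labels the target carries after relabeling (values taken from the text above).
target = {
    "job": "kubernetes-kube-state",
    "instance": "10.4.7.21:9100",
    "kubernetes_pod_name": "node-exporter-c92vd",
    "nodename": "k8s-7-21.host.top",
}
# Labels exposed by node-exporter itself for one sample.
sample = {"device": "dm-0"}

if __name__ == "__main__":
    for name, value in sorted(attach_target_labels(sample, target).items()):
        print(f'{name}="{value}"')
```

This is why a series in the query result shows device="dm-0" together with kubernetes_pod_name, nodename and the other target labels, even though /metrics only exposes device.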

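-- action: the relabeling action to perform

-- replace: the default. Matches regex against the concatenated source_labels and, on a match, sets target_label to replacement, with the capture-group references in replacement substituted.

-- keep: drops targets for which regex does not match the concatenated source_labels.

-- drop: drops targets for which regex matches the concatenated source_labels.

-- hashmod: sets target_label to the modulus of a hash of the concatenated source_labels.

-- labelmap: matches regex against all label names, then copies the values of the matching labels to the label names given by replacement, with group references ($1, $2) substituted.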
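The replace and labelmap actions listed above can be sketched in Python as follows. This is an approximation for illustration only: the helper names are invented, labels are plain dicts, and `$1` / `${1}` references are translated into Python's `\g<1>` syntax before expansion.

```python
import re


def _to_python_template(replacement):
    """Turn $1 or ${1} style references into Python's \\g<1> form."""
    return re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)


def relabel_replace(labels, source_labels, regex, target_label,
                    replacement="$1", separator=";"):
    """Sketch of action: replace on a dict of labels."""
    value = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, value)
    if match is None:
        return labels  # no match: the labels are left untouched
    result = dict(labels)
    result[target_label] = match.expand(_to_python_template(replacement))
    return result


def relabel_labelmap(labels, regex, replacement="$1"):
    """Sketch of action: labelmap, copying matching labels to new names."""
    template = _to_python_template(replacement)
    result = dict(labels)
    for name, value in labels.items():
        match = re.fullmatch(regex, name)
        if match:
            result[match.expand(template)] = value
    return result


if __name__ == "__main__":
    pod = {
        "__meta_kubernetes_pod_name": "node-exporter-c92vd",
        "__meta_kubernetes_pod_label_daemon": "node-exporter",
    }
    step1 = relabel_labelmap(pod, r"__meta_kubernetes_pod_label_(.+)")
    step2 = relabel_replace(step1, ["__meta_kubernetes_pod_name"], r"(.*)",
                            "kubernetes_pod_name")
    print(step2["daemon"], step2["kubernetes_pod_name"])
```

The same `relabel_replace` helper also covers rules such as `replacement: ${1}:4194`, where the node name is turned into a scrape address.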

4、Prometheus的使用

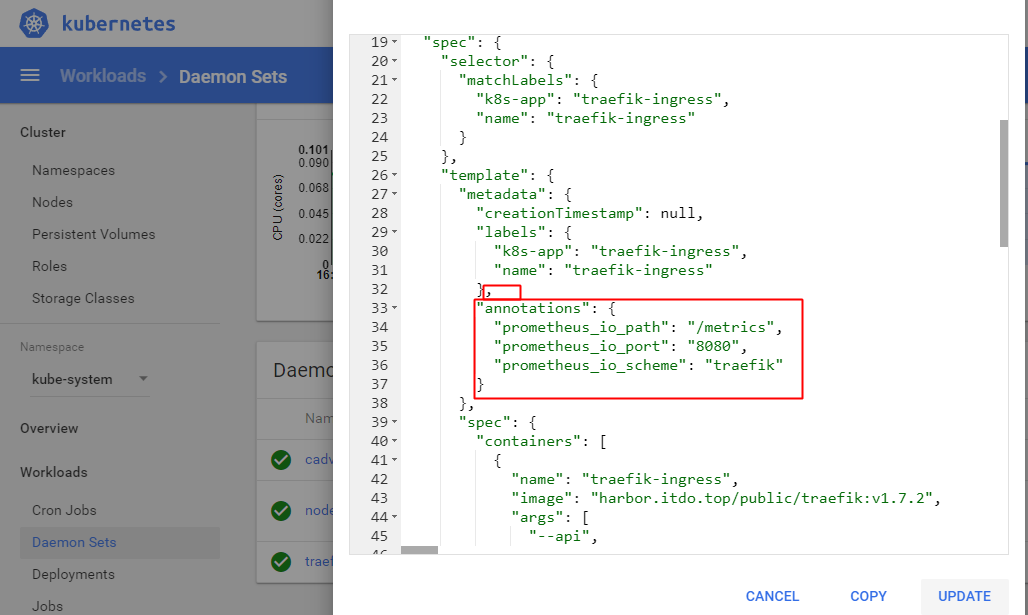

4.1、Traefik接入

查看一下配置

- job_name: traefik
  (omitted)
  # This rule matches an annotation. Add the annotation to traefik's pod controller and restart the pods, and monitoring takes effect.
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]
    separator: ;
    regex: traefik
    replacement: $1
    action: keep
  (omitted)

- job_name: traefik
  honor_timestamps: true
  scrape_interval: 15s
  scrape_timeout: 10s
  metrics_path: /metrics
  scheme: http
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]
    separator: ;
    regex: traefik
    replacement: $1
    action: keep
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    separator: ;
    regex: (.+)
    target_label: __metrics_path__
    replacement: $1
    action: replace
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    separator: ;
    regex: ([^:]+)(?::\d+)?;(\d+)
    target_label: __address__
    replacement: $1:$2
    action: replace
  - separator: ;
    regex: __meta_kubernetes_pod_label_(.+)
    replacement: $1
    action: labelmap
  - source_labels: [__meta_kubernetes_namespace]
    separator: ;
    regex: (.*)
    target_label: kubernetes_namespace
    replacement: $1
    action: replace
  - source_labels: [__meta_kubernetes_pod_name]
    separator: ;
    regex: (.*)
    target_label: kubernetes_pod_name
    replacement: $1
    action: replace

Add the annotations to traefik's pod controller and restart the pods.

# Add the annotations under spec.template.metadata in traefik's daemonset.yaml, then restart the pods

Tip: there is no need to align the statements manually.

"annotations": {
  "prometheus_io_scheme": "traefik",
  "prometheus_io_path": "/metrics",
  "prometheus_io_port": "8080"
}
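To show what the prometheus_io_port annotation does to the scrape address, here is a hedged Python sketch of the `__address__` rule from the traefik job. The pod IP 172.7.21.5 and the function name are made up for illustration; the regular expression is the one from the configuration.

```python
import re

# Same pattern as in the job: host, optional old port, ';', annotated port.
ADDRESS_RULE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def rewrite_address(address, port_annotation):
    """Keep the host part of __address__ and swap in the annotated port."""
    joined = f"{address};{port_annotation}"  # source labels joined by ';'
    match = ADDRESS_RULE.fullmatch(joined)
    if match is None:
        # For example when the annotation is missing: nothing is rewritten.
        return address
    return f"{match.group(1)}:{match.group(2)}"


if __name__ == "__main__":
    print(rewrite_address("172.7.21.5:80", "8080"))
    print(rewrite_address("172.7.21.5", "8080"))
    print(rewrite_address("172.7.21.5:80", ""))
```

With the annotations shown above, a traefik pod discovered on any port is therefore scraped on port 8080 at /metrics.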

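An Annotation is similar to a Label and is also defined as a key/value pair.

Label: follows strict naming rules, defines metadata of Kubernetes objects, and is used by Label Selectors.

Annotation: arbitrary "additional" information defined by the user, so that external tools can look it up.

Delete the traefik pods on both nodes.

If a pod gets stuck in Terminating, it can be force-deleted:

kubectl delete pods <pod-name> -n kube-system --force --grace-period=0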

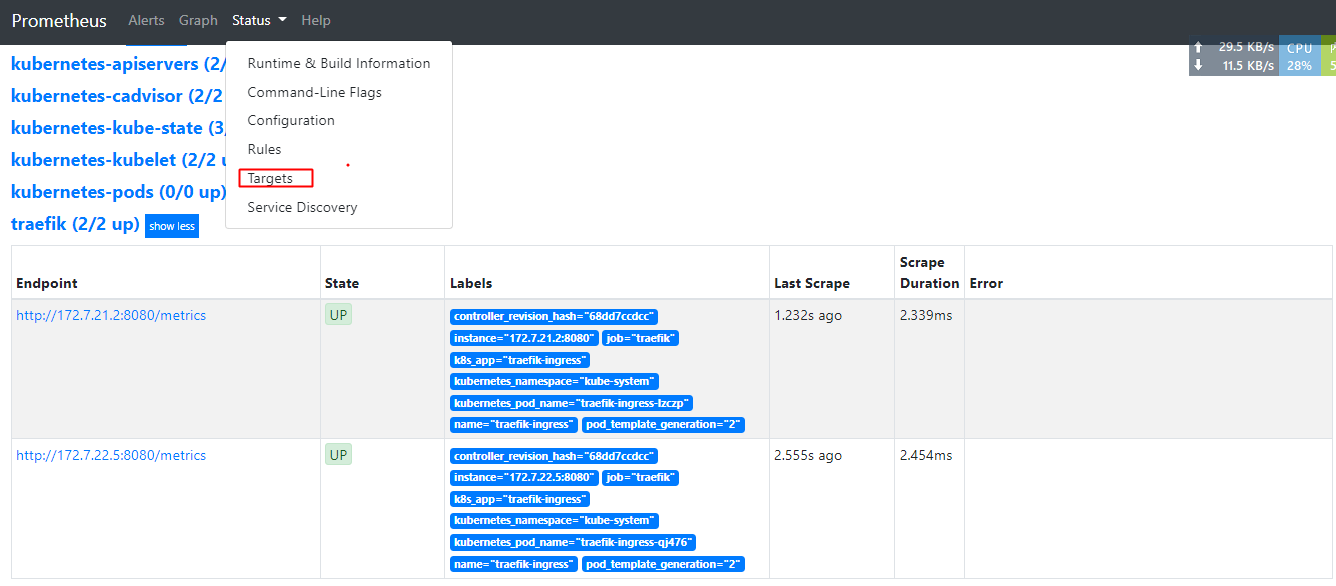

After the pods have restarted, check Prometheus:

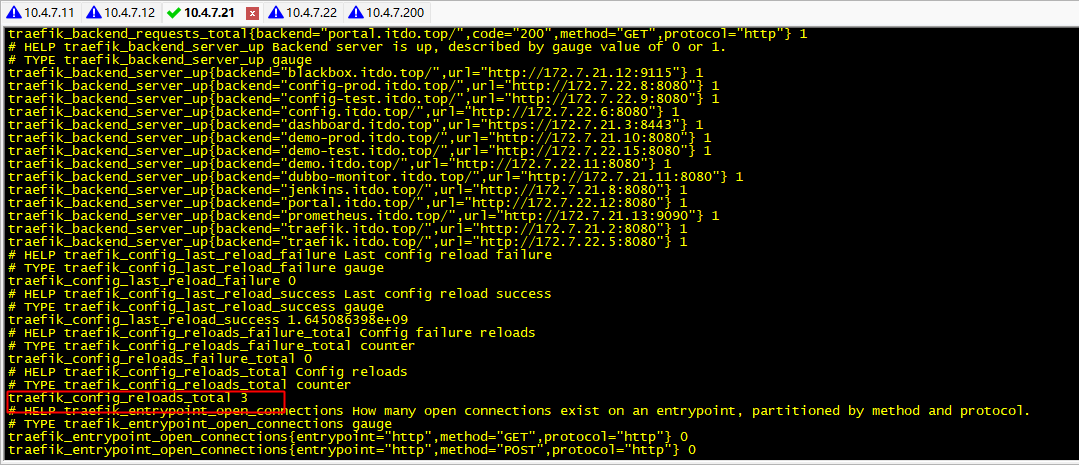

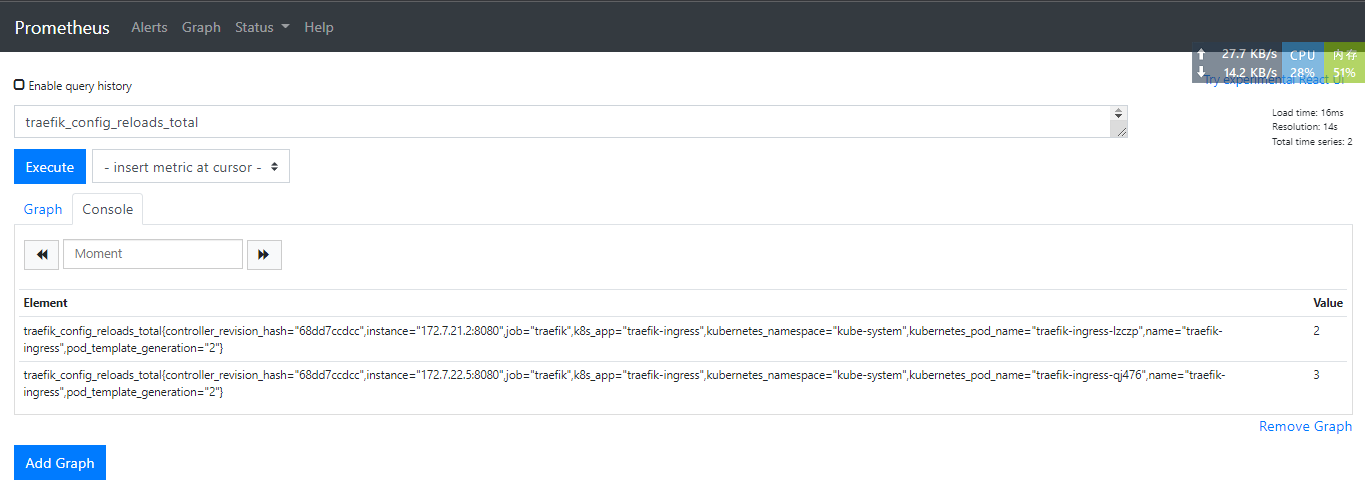

Check the corresponding metrics:

curl http://172.7.22.5:8080/metrics

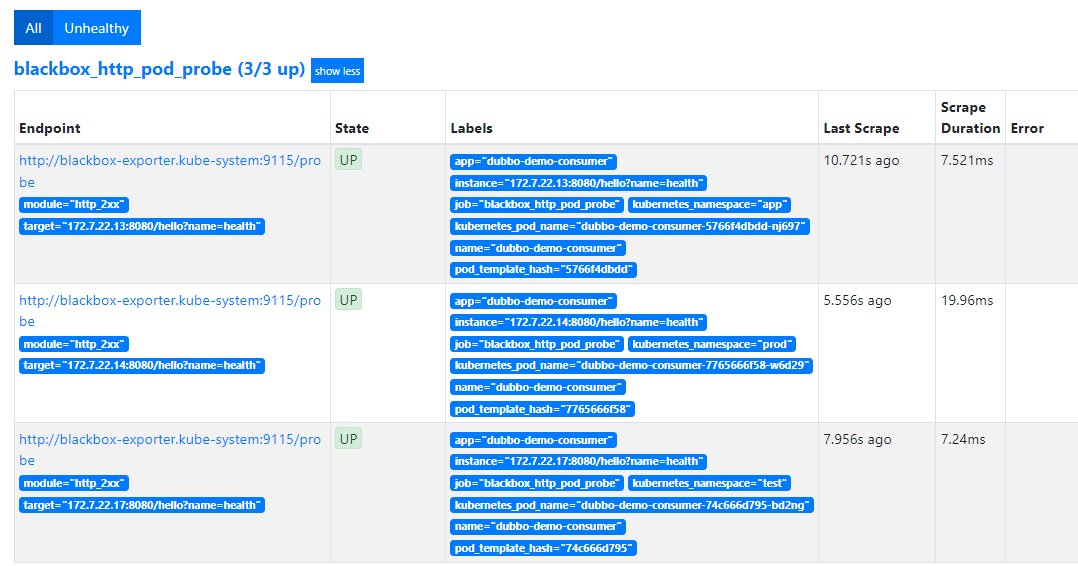

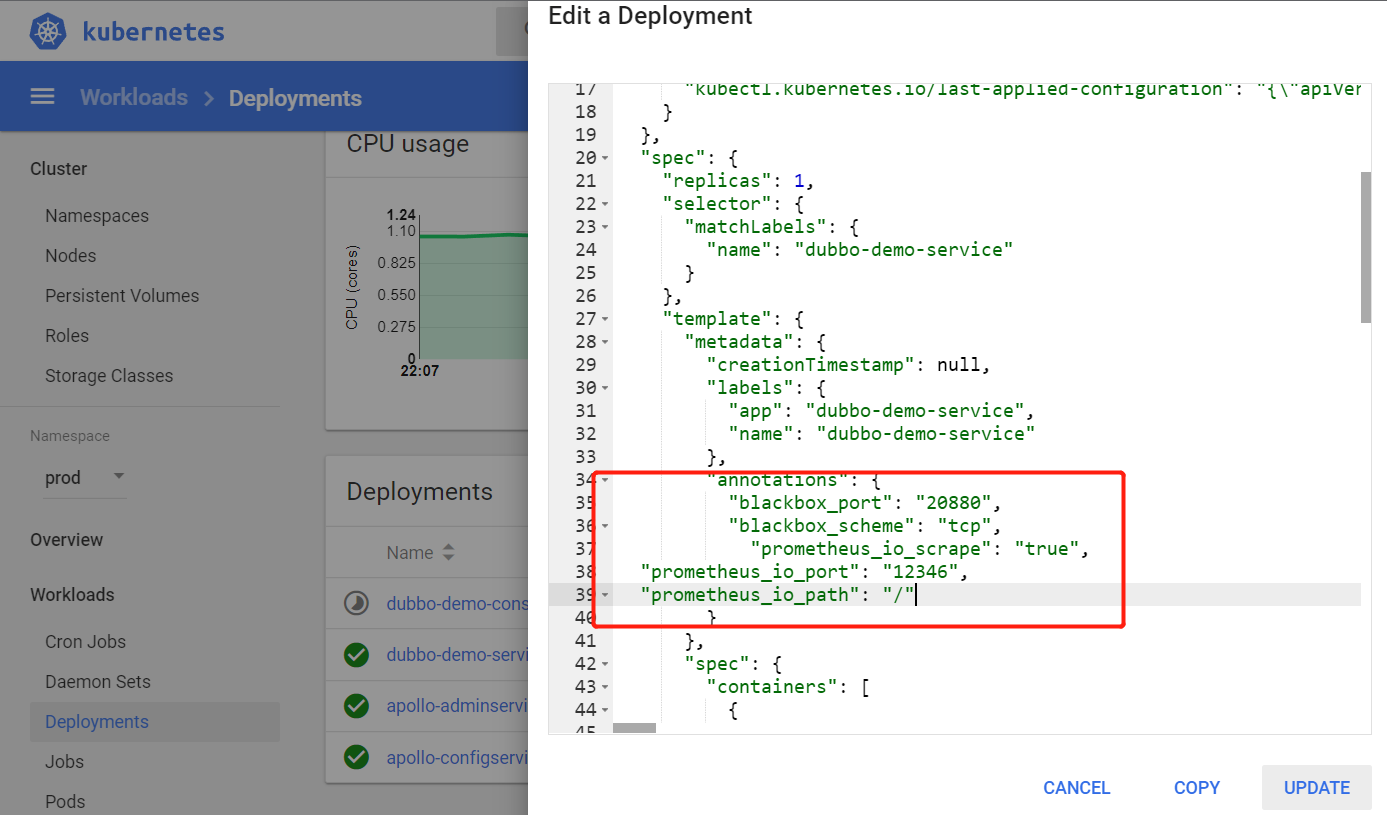

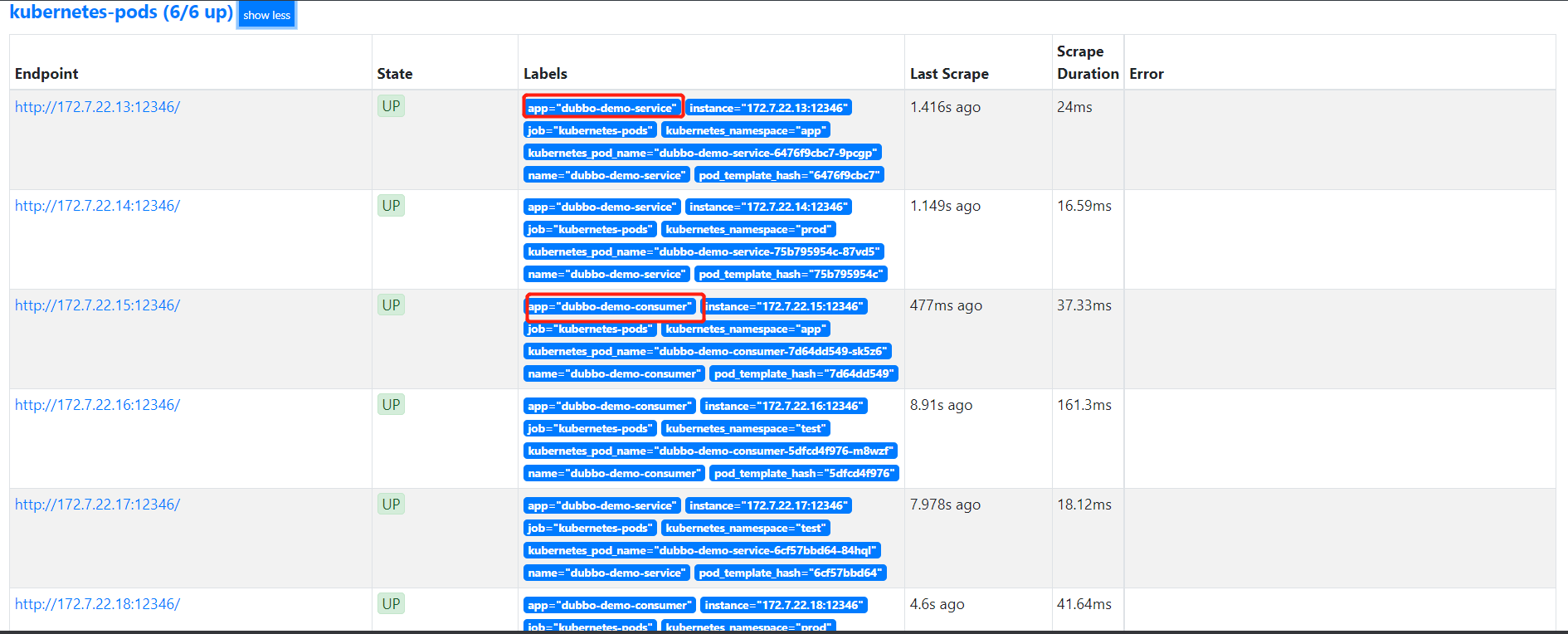

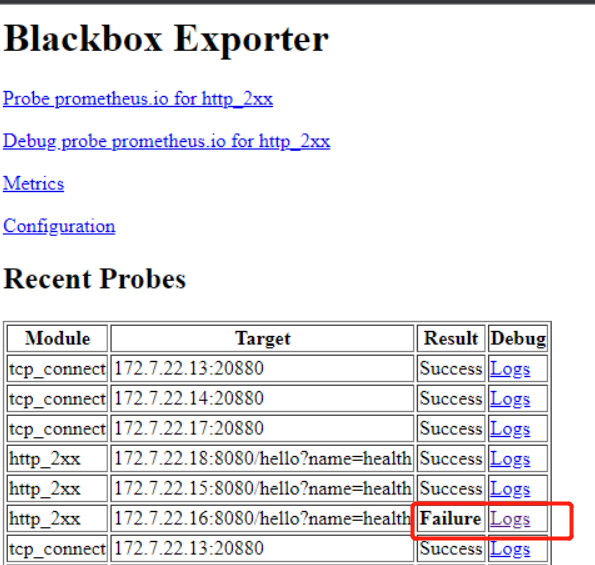

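4.2 Integrating Blackbox monitoring

To monitor whether a service is alive, first determine what kind of service it is, TCP or HTTP. Its port is then probed periodically to decide whether the service is alive.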

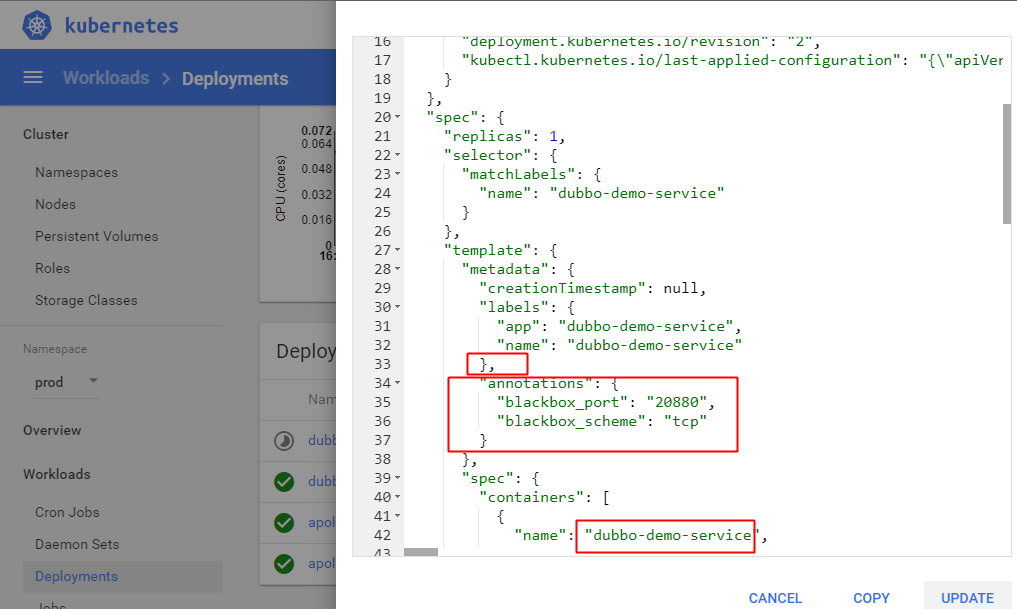

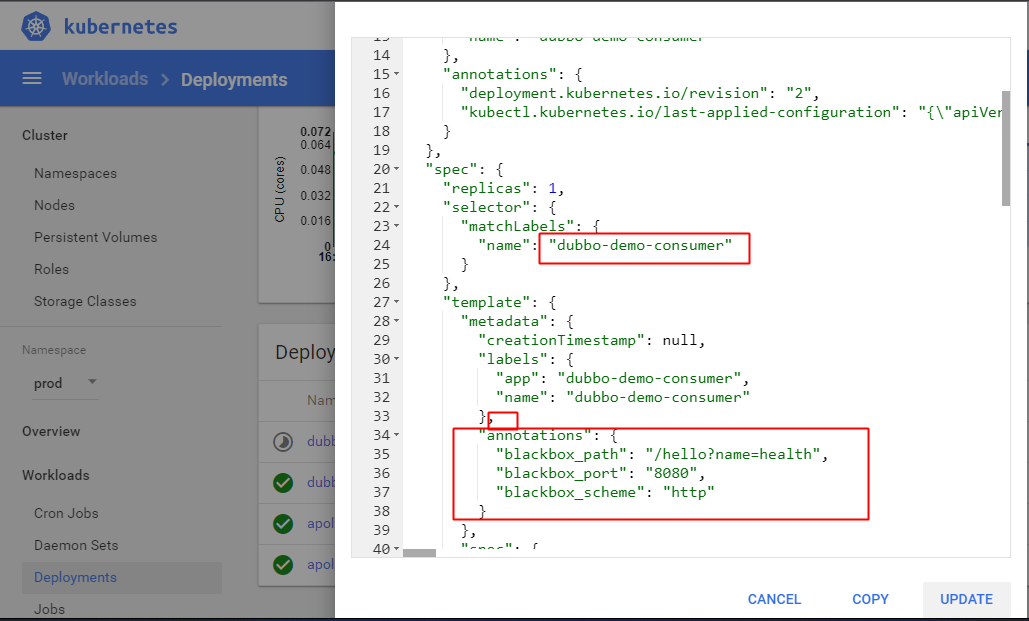

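# Add the following to the annotations of the corresponding pod. The two blocks are for TCP probing and HTTP probing respectively; this Prometheus configuration defines no probes for other protocols.

"annotations": {
  "blackbox_port": "20880",
  "blackbox_scheme": "tcp"
}

"annotations": {
  "blackbox_port": "8080",
  "blackbox_scheme": "http",
  "blackbox_path": "/hello?name=health"
}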
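The sketch below traces how the blackbox_http_pod_probe job could turn these annotations into a probe request: the relabel rule builds `__param_target` from the pod address, port and path, and `__address__` is replaced by the blackbox-exporter service. The pod IP and the function name are assumptions for illustration; the regex, module name and exporter address come from prometheus.yml.

```python
import re
from urllib.parse import urlencode

# Pattern from the job: host, optional old port, ';', port, ';', path.
HTTP_RULE = re.compile(r"([^:]+)(?::\d+)?;(\d+);(.+)")


def probe_url(address, port, path, module="http_2xx",
              exporter="blackbox-exporter.kube-system:9115"):
    """Build the URL Prometheus would scrape for one annotated pod."""
    joined = ";".join([address, port, path])  # source labels joined by ';'
    match = HTTP_RULE.fullmatch(joined)
    if match is None:
        return None  # an annotation is missing, so no target is produced
    # replacement: $1:$2$3 becomes the value of __param_target
    target = f"{match.group(1)}:{match.group(2)}{match.group(3)}"
    query = urlencode({"module": module, "target": target})
    return f"http://{exporter}/probe?{query}"


if __name__ == "__main__":
    # Hypothetical pod IP, with the HTTP annotations shown above.
    print(probe_url("172.7.22.17:8080", "8080", "/hello?name=health"))
```

The TCP job works the same way, except that its rule has no path component and the module is tcp_connect.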

测试TCP:

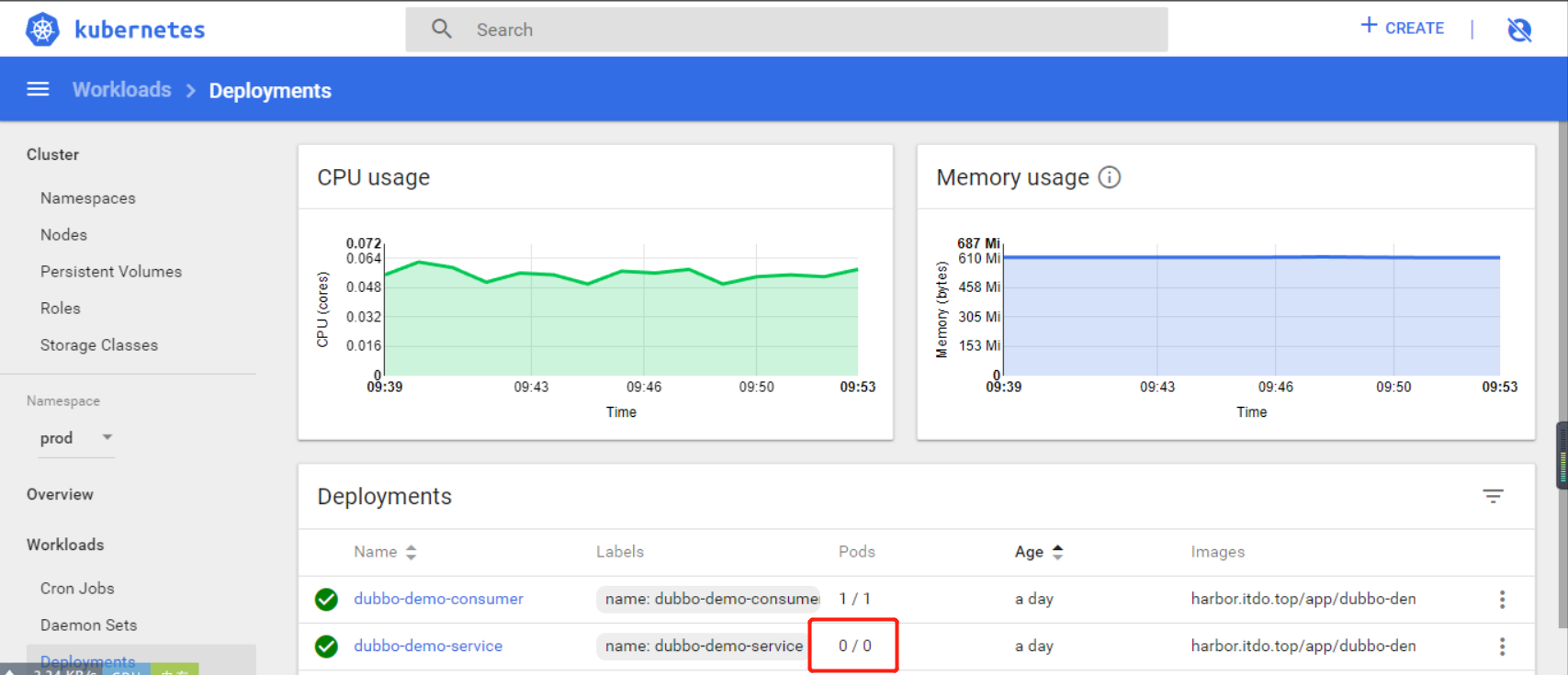

这里我们监控dubbo-demo-server为例子,dubbo-demo-server是TCP协议,修改dp.yaml启动容器

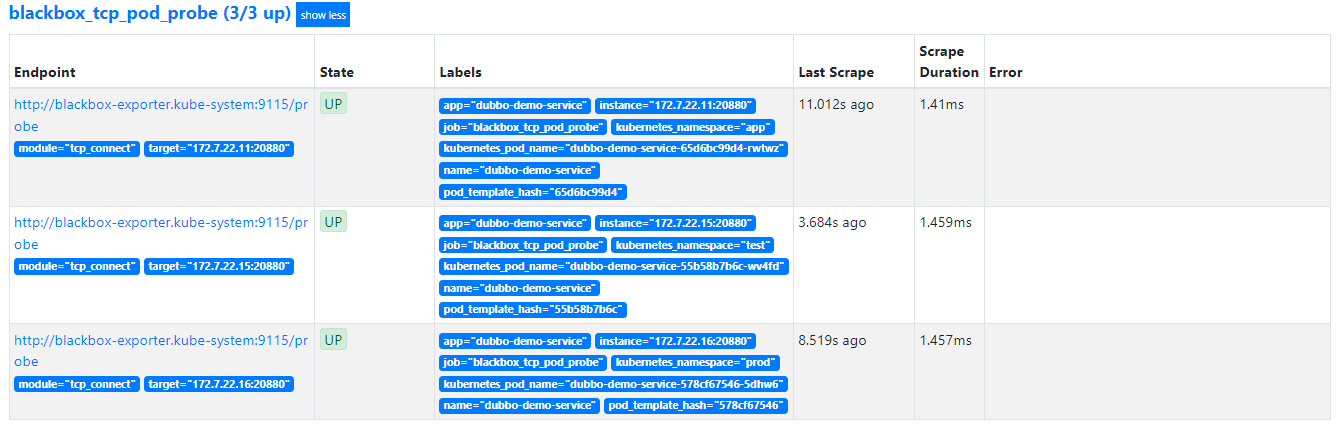

查看状态

显示的结果在

blackbox_http_pod_probe (0/0 up) show more http监控结果项目

blackbox_tcp_pod_probe (1/1 up) TCP监控结果项目

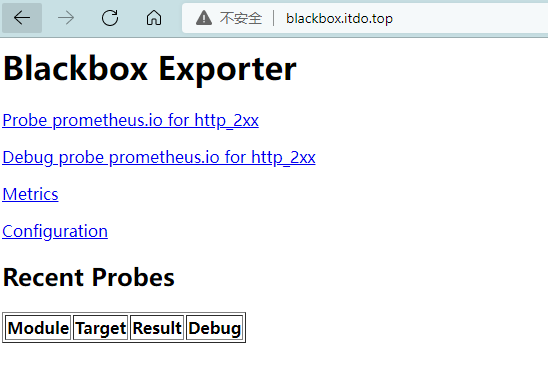

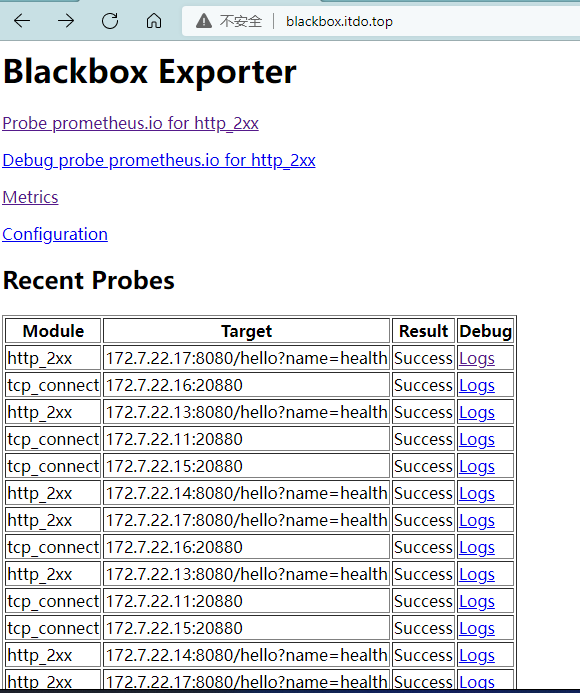

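Visit http://blackbox.itdo.top. The probing mechanism is that blackbox-exporter connects to 172.7.22.16:20880 on Prometheus' behalf and thereby determines whether the service is healthy.

Check the logs.

On the Targets page the scraped URL is http://blackbox-exporter.kube-system:9115/probe, with the GET parameters module="tcp_connect" and target="172.7.22.16:20880".

blackbox-exporter is reached through its cluster IP, 192.168.20.237:9115: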

[root@k8s-7-21 ~]# kubectl get svc -o wide -n kube-system |grep blackbox-exporter

blackbox-exporter ClusterIP 192.168.20.237 <none> 9115/TCP 7d20h app=blackbox-exporter

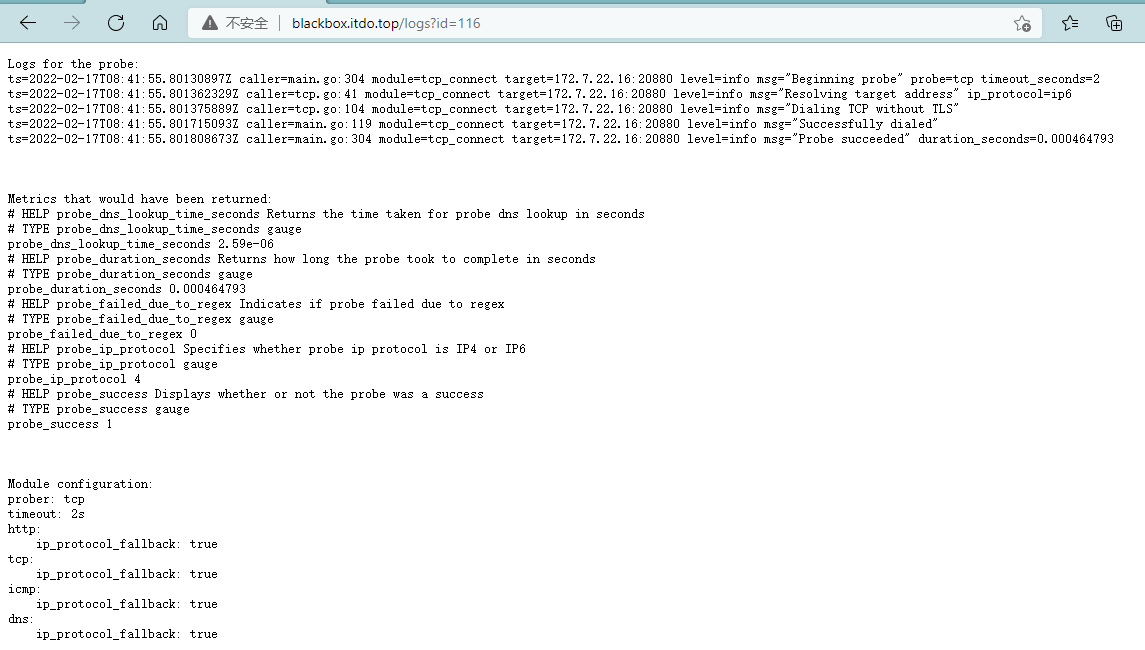

Enter any container in the cluster and curl port 9115 of blackbox-exporter, passing module=tcp_connect&target=172.7.22.16:20880

[root@k8s-7-21 ~]# kubectl exec -it nginx-ds-q5tmt /bin/bash

root@nginx-ds-q5tmt:/# curl 'http://blackbox-exporter.kube-system:9115/probe?module=tcp_connect&target=172.7.22.16:20880'

# HELP probe_dns_lookup_time_seconds Returns the time taken for probe dns lookup in seconds

# TYPE probe_dns_lookup_time_seconds gauge

probe_dns_lookup_time_seconds 0.357904555

# HELP probe_duration_seconds Returns how long the probe took to complete in seconds

# TYPE probe_duration_seconds gauge

probe_duration_seconds 0.35803019

# HELP probe_failed_due_to_regex Indicates if probe failed due to regex

# TYPE probe_failed_due_to_regex gauge

probe_failed_due_to_regex 0

# HELP probe_ip_protocol Specifies whether probe ip protocol is IP4 or IP6

# TYPE probe_ip_protocol gauge

probe_ip_protocol 0

# HELP probe_success Displays whether or not the probe was a success

# TYPE probe_success gauge

probe_success 0

root@nginx-ds-q5tmt:/#
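The curl call above can be reproduced programmatically. The sketch below builds the same `/probe` URL and reads `probe_success` out of the text response. It is a minimal illustration, not an official client: the helper names `probe_url` and `parse_metrics` are hypothetical, the parser only handles samples without labels, and the sample text is an excerpt of the output shown above rather than a live response.

```python
from urllib.parse import urlencode


def probe_url(module, target,
              exporter="http://blackbox-exporter.kube-system:9115"):
    """Build the /probe URL that is requested from blackbox-exporter."""
    query = urlencode({"module": module, "target": target}, safe=":")
    return f"{exporter}/probe?{query}"


def parse_metrics(text):
    """Parse label-free samples of the Prometheus text format into a dict."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks and HELP/TYPE lines
            continue
        name, value = line.split()[:2]
        samples[name] = float(value)
    return samples


# Excerpt of the exporter output shown in the transcript above.
sample_output = """\
# HELP probe_duration_seconds Returns how long the probe took to complete in seconds
# TYPE probe_duration_seconds gauge
probe_duration_seconds 0.35803019
# HELP probe_success Displays whether or not the probe was a success
# TYPE probe_success gauge
probe_success 0
"""

url = probe_url("tcp_connect", "172.7.22.16:20880")
metrics = parse_metrics(sample_output)
print(url)
print("probe succeeded" if metrics["probe_success"] == 1 else "probe failed")
```

A `probe_success` value of 1 means the TCP connection was established, and 0 means the probe failed, so this is the value to look at first when a target shows as down.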

Testing HTTP:

Here we use dubbo-demo-consumer as the example. It speaks HTTP, so add the annotations to its dp.yaml and restart the container.

5. Deploying Grafana

5.1. Installing Grafana

[root@k8s-7-200 ~]# docker pull grafana/grafana:5.4.2

[root@k8s-7-200 ~]# docker image ls |grep grafana

grafana/grafana 5.4.2 6f18ddf9e552 2 years ago 243MB

[root@k8s-7-200 ~]# docker image tag 6f18ddf9e552 harbor.itdo.top/infra/grafana:v5.4.2

[root@k8s-7-200 ~]# docker image push harbor.itdo.top/infra/grafana:v5.4.2

5.2. Preparing the resource manifests

[root@k8s-7-200 ~]# mkdir /data/k8s-yaml/grafana && cd /data/k8s-yaml/grafana

[root@k8s-7-200 grafana]# vi rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/cluster-service: "true"

name: grafana

rules:

- apiGroups:

- "*"

resources:

- namespaces

- deployments

- pods

verbs:

- get

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/cluster-service: "true"

name: grafana

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: grafana

subjects:

- kind: User

name: k8s-node

[root@k8s-7-200 grafana]# vi dp.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

labels:

app: grafana

name: grafana

name: grafana

namespace: infra

spec:

progressDeadlineSeconds: 600

replicas: 1

revisionHistoryLimit: 7

selector:

matchLabels:

name: grafana

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

labels:

app: grafana

name: grafana

spec:

containers:

- name: grafana

image: harbor.itdo.top/infra/grafana:v5.4.2 # change to your own image address

imagePullPolicy: IfNotPresent

ports:

- containerPort: 3000

protocol: TCP

volumeMounts:

- mountPath: /var/lib/grafana

name: data

imagePullSecrets:

- name: harbor

securityContext:

runAsUser: 0

volumes:

- nfs:

server: k8s-7-200.host.top

path: /data/nfs-volume/grafana

name: data

Create the grafana directory

[root@k8s-7-200 grafana]# mkdir /data/nfs-volume/grafana

[root@k8s-7-200 grafana]# vi svc.yaml

apiVersion: v1

kind: Service

metadata:

name: grafana

namespace: infra

spec:

ports:

- port: 3000

protocol: TCP

targetPort: 3000

selector:

app: grafana

[root@k8s-7-200 grafana]# vi ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: grafana

namespace: infra

spec:

rules:

- host: grafana.itdo.top

http:

paths:

- path: /

backend:

serviceName: grafana

servicePort: 3000

Configure DNS resolution

[root@k8s-7-11 ~]# vi /var/named/itdo.top.zone

grafana A 10.4.7.10

[root@k8s-7-11 ~]# systemctl restart named

[root@k8s-7-11 ~]# dig -t A grafana.itdo.top @10.4.7.11 +short

10.4.7.10

5.3. Applying the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/grafana/rbac.yaml

clusterrole.rbac.authorization.k8s.io/grafana created

clusterrolebinding.rbac.authorization.k8s.io/grafana created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/grafana/dp.yaml

deployment.extensions/grafana created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/grafana/svc.yaml

service/grafana created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/grafana/ingress.yaml

ingress.extensions/grafana created

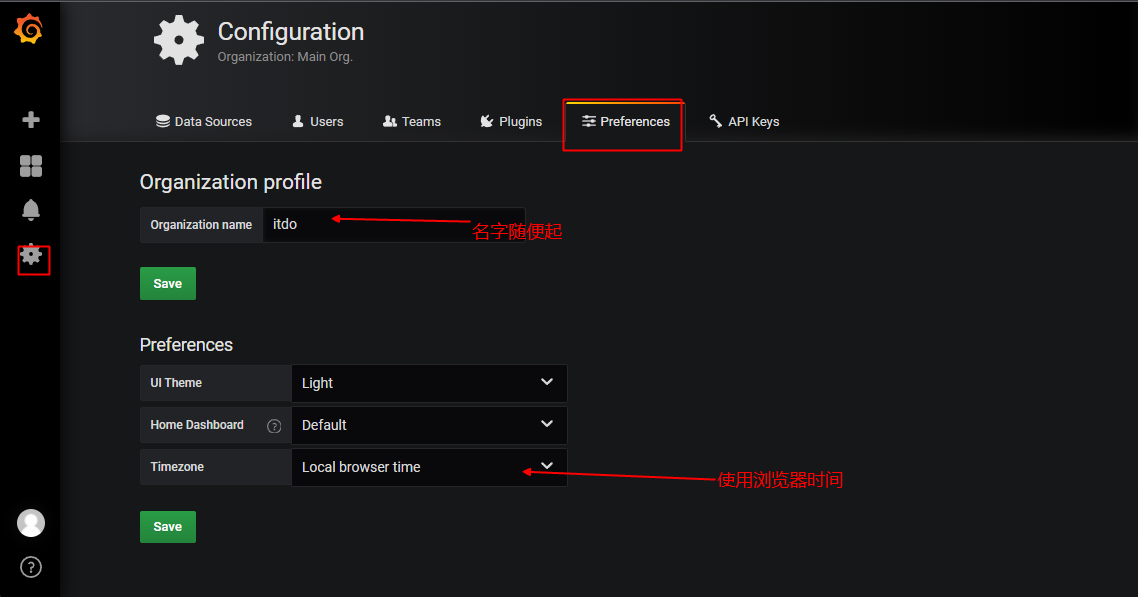

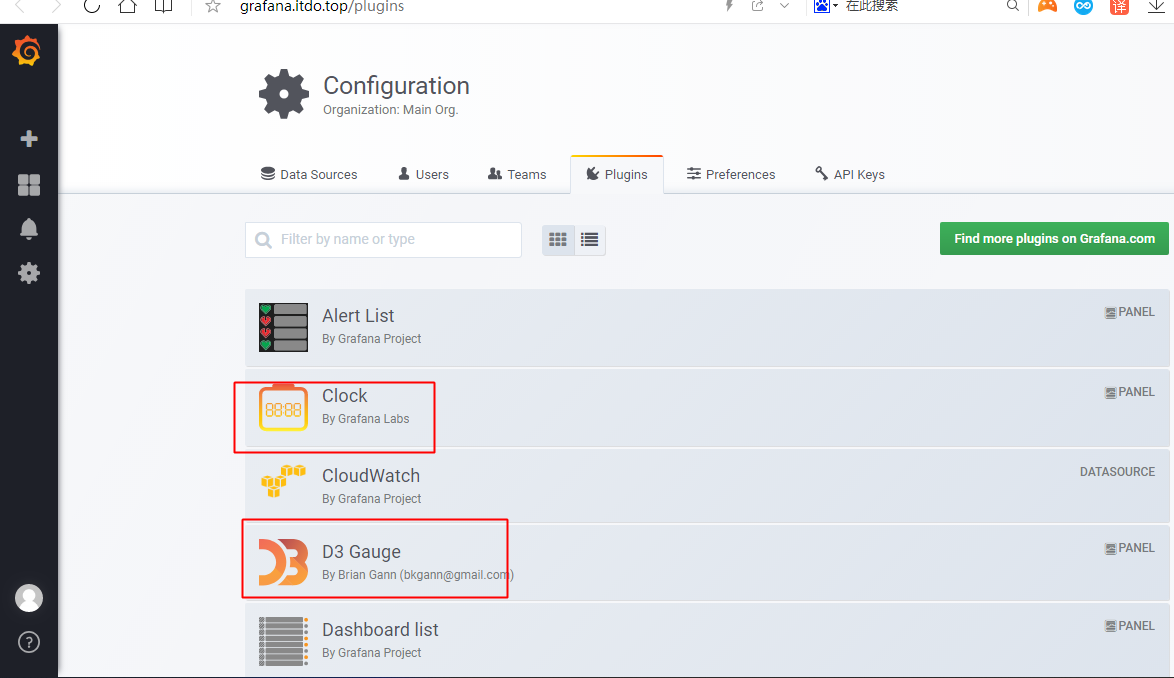

5.4. Installing plugins

Visit http://grafana.itdo.top. The default username and password are admin / admin.

On the configuration page, remember to save.

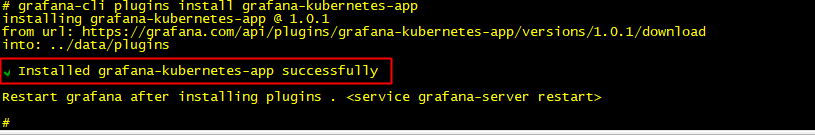

# Plugins to install

grafana-kubernetes-app

grafana-clock-panel (Clock)

grafana-piechart-panel (Pie Chart)

briangann-gauge-panel (D3 Gauge)

natel-discrete-panel (Discrete)

# The plugins above can be installed in two ways:

Method 1: enter the container and run each install command

[root@k8s-7-21 ~]# kubectl get pod -n infra -l name=grafana

NAME READY STATUS RESTARTS AGE

grafana-6d45bd5f75-x8pxr 1/1 Running 0 79m

[root@k8s-7-21 ~]# kubectl exec -it grafana-6d45bd5f75-x8pxr -n infra /bin/bash

root@grafana-596d8dbcd5-l2466:/usr/share/grafana# grafana-cli plugins install grafana-kubernetes-app

root@grafana-596d8dbcd5-l2466:/usr/share/grafana# grafana-cli plugins install grafana-clock-panel

root@grafana-596d8dbcd5-l2466:/usr/share/grafana# grafana-cli plugins install grafana-piechart-panel

root@grafana-596d8dbcd5-l2466:/usr/share/grafana# grafana-cli plugins install briangann-gauge-panel

root@grafana-596d8dbcd5-l2466:/usr/share/grafana# grafana-cli plugins install natel-discrete-panel

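A message like the following indicates success:

✔ Installed $name successfully

Method 2: download the plugin zip packages manually. Query https://grafana.com/api/plugins/repo/$plugin_name to find the plugin version $version

# Download the zip package from https://grafana.com/api/plugins/$plugin_name/versions/$version/download

# Unpack the zip package into /data/nfs-volume/grafana/plugins

# After the plugins are installed, restart the Grafana pod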

[root@k8s-7-200 plugins]# cd /data/nfs-volume/grafana/plugins

[root@k8s-7-200 plugins]# wget -O grafana-kubernetes-app.zip https://grafana.com/api/plugins/grafana-kubernetes-app/versions/1.0.1/download

[root@k8s-7-200 plugins]# wget -O grafana-clock-panel.zip https://grafana.com/api/plugins/grafana-clock-panel/versions/1.0.1/download

[root@k8s-7-200 plugins]# wget -O grafana-piechart-panel.zip https://grafana.com/api/plugins/grafana-piechart-panel/versions/1.0.1/download

[root@k8s-7-200 plugins]# wget -O briangann-gauge-panel.zip https://grafana.com/api/plugins/briangann-gauge-panel/versions/0.0.9/download

[root@k8s-7-200 plugins]# wget -O natel-discrete-panel.zip https://grafana.com/api/plugins/natel-discrete-panel/versions/0.1.1/download

[root@k8s-7-200 plugins]# ls *.zip | xargs -I {} unzip -q {}
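The five wget commands above all follow the same URL pattern, so they can be generated from a table of plugin names and versions. The sketch below only prints the commands and performs no download. The versions are the ones used in the commands above, and the names `PLUGINS` and `download_url` are hypothetical.

```python
# Plugin name -> version, taken from the wget commands above.
PLUGINS = {
    "grafana-kubernetes-app": "1.0.1",
    "grafana-clock-panel": "1.0.1",
    "grafana-piechart-panel": "1.0.1",
    "briangann-gauge-panel": "0.0.9",
    "natel-discrete-panel": "0.1.1",
}


def download_url(name, version):
    """Fill the plugin name and version into the download URL pattern."""
    return f"https://grafana.com/api/plugins/{name}/versions/{version}/download"


commands = [
    f"wget -O {name}.zip {download_url(name, version)}"
    for name, version in PLUGINS.items()
]
for command in commands:
    print(command)
```

Keeping the versions in one table makes it easier to update a single plugin later without retyping the whole command.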

With either method, Grafana must be restarted before the plugins take effect.

[root@k8s-7-21 ~]# kubectl delete pod grafana-6d45bd5f75-x8pxr -n infra

pod "grafana-6d45bd5f75-x8pxr" deleted

[root@k8s-7-21 ~]# kubectl get pod -o wide -n infra

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

grafana-6d45bd5f75-wlm95 1/1 Running 0 42s 172.7.21.10 k8s-7-21.host.top <none> <none>

Check that the plugins were added successfully

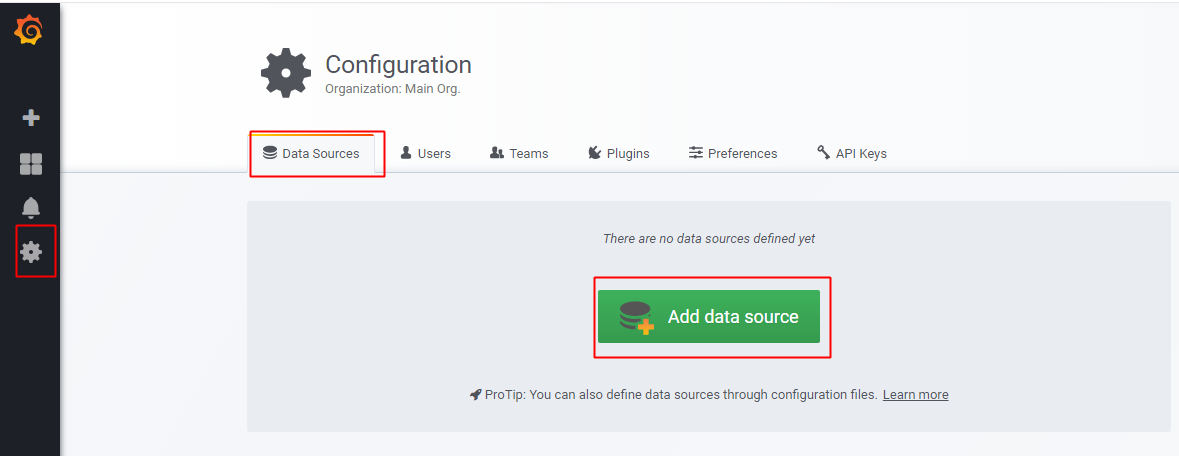

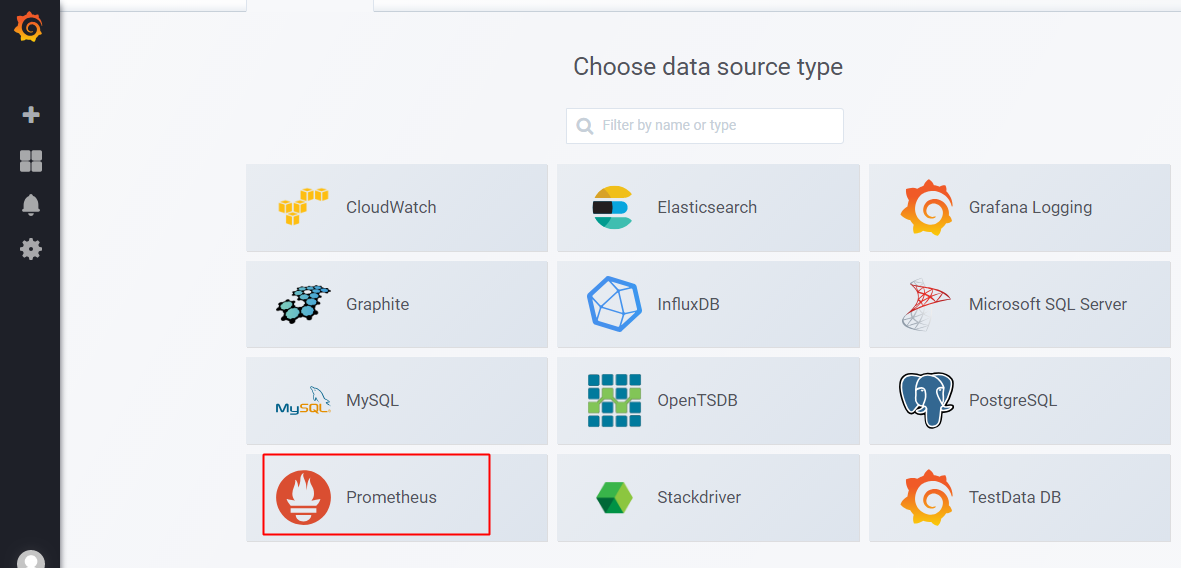

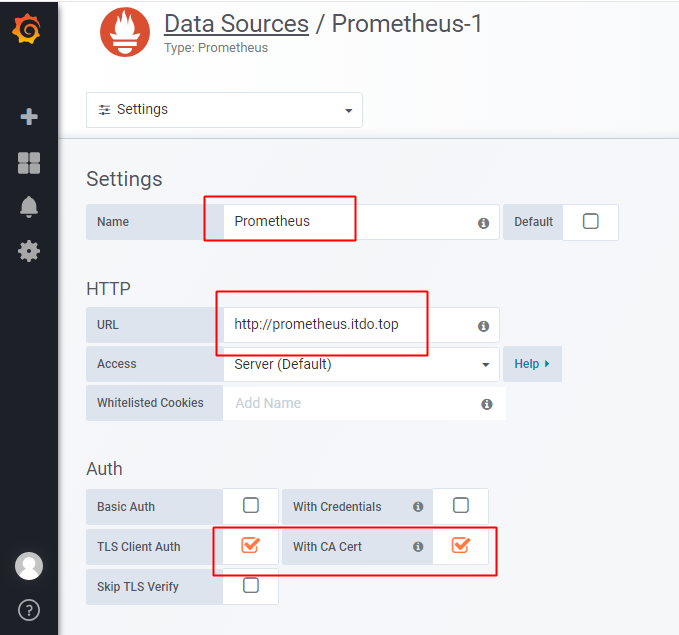

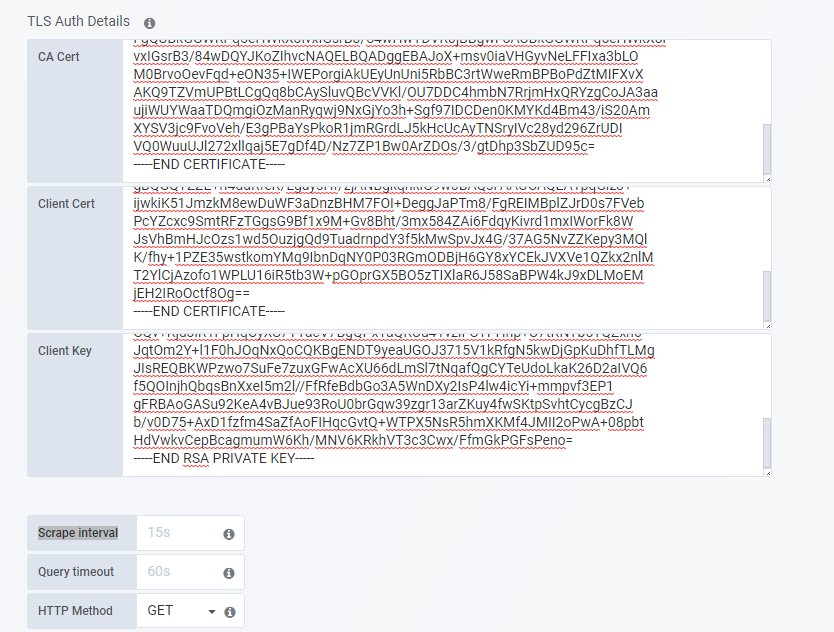

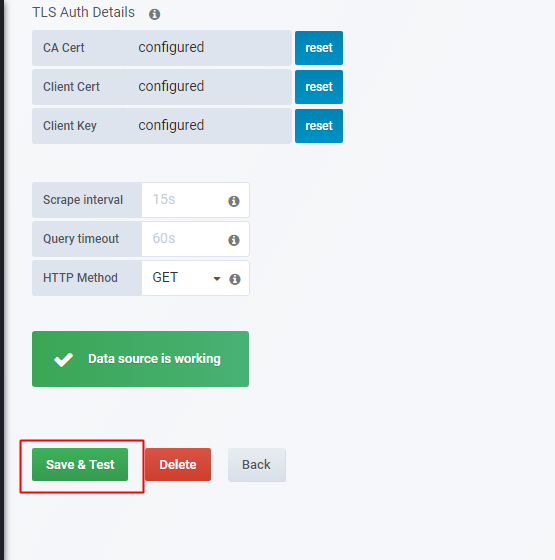

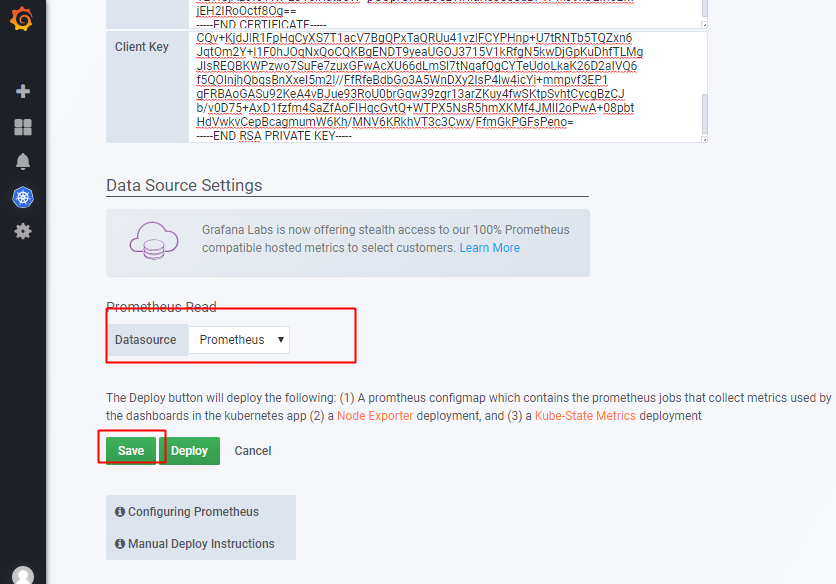

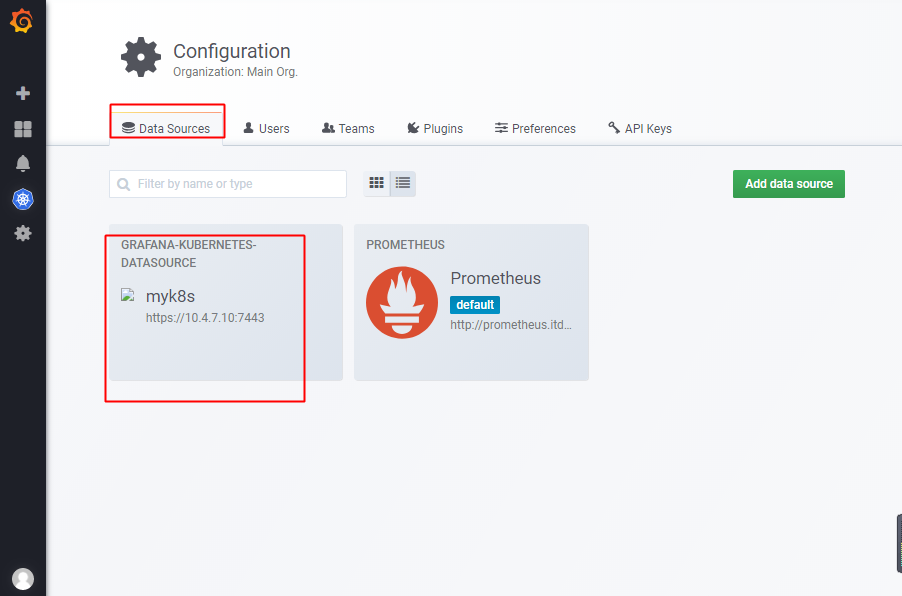

5.5. Adding the data source

Choose Prometheus.

Set URL to http://prometheus.itdo.top

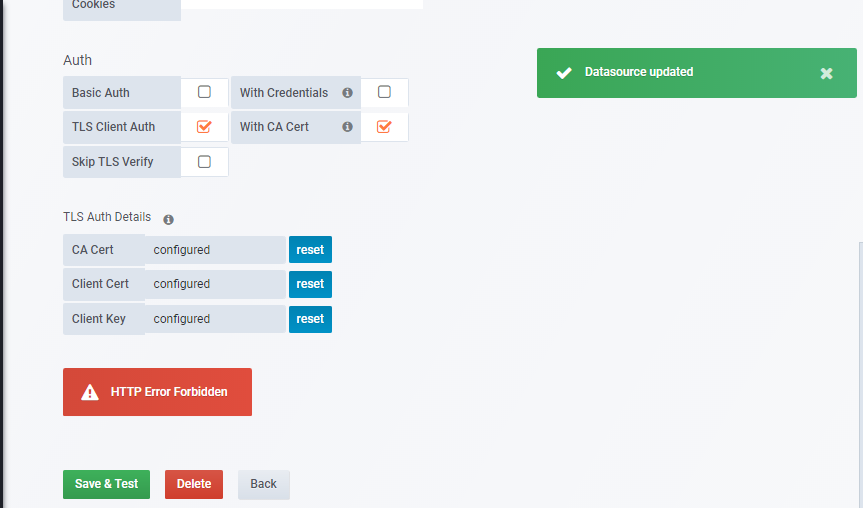

Set Access to Server.

Leave Whitelisted Cookies empty.

Copy the output of [root@k8s-7-200 ~]# cat /opt/certs/ca.pem into CA Cert

Copy the output of [root@k8s-7-200 ~]# cat /opt/certs/client.pem into Client Cert

Copy the output of [root@k8s-7-200 ~]# cat /opt/certs/client-key.pem into Client Key

Scrape interval is the collection interval.

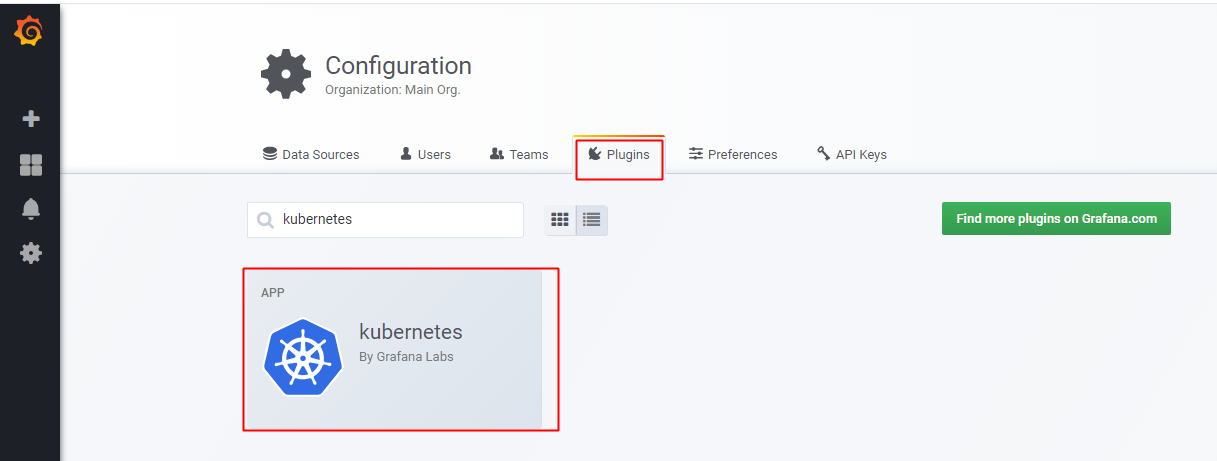

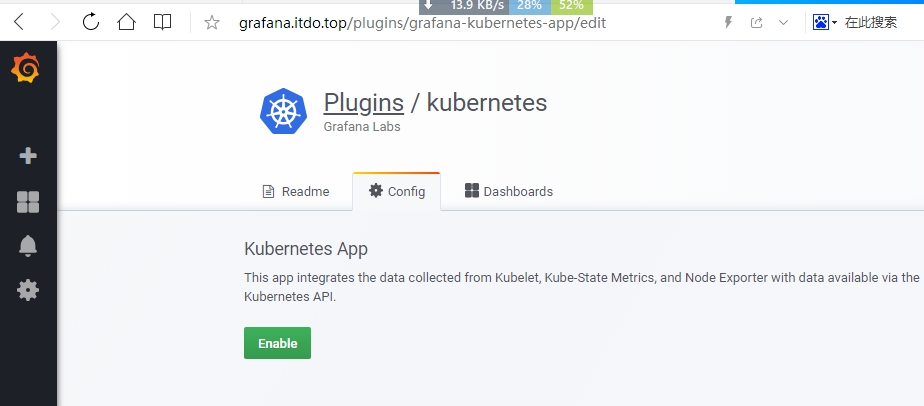

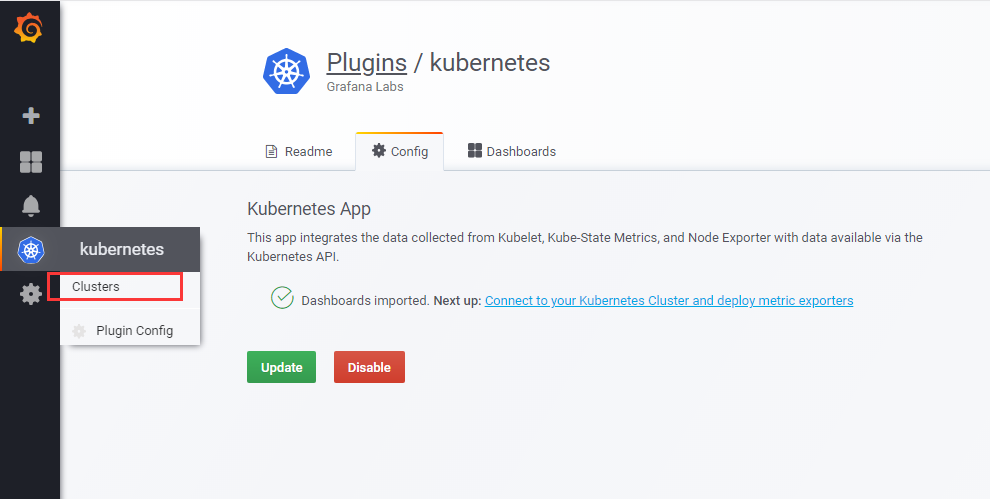

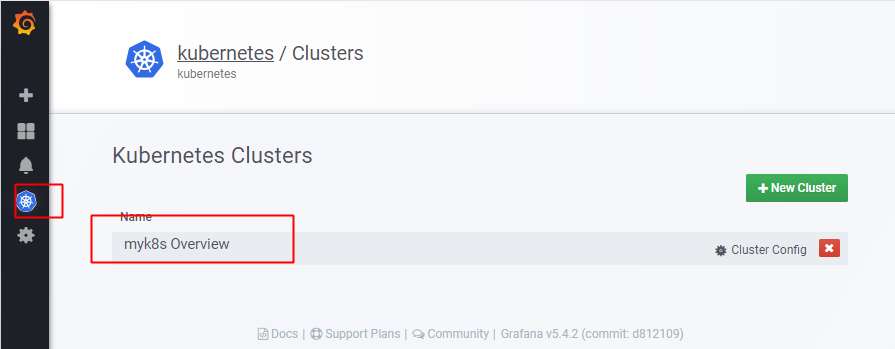

Click Plugins, then kubernetes

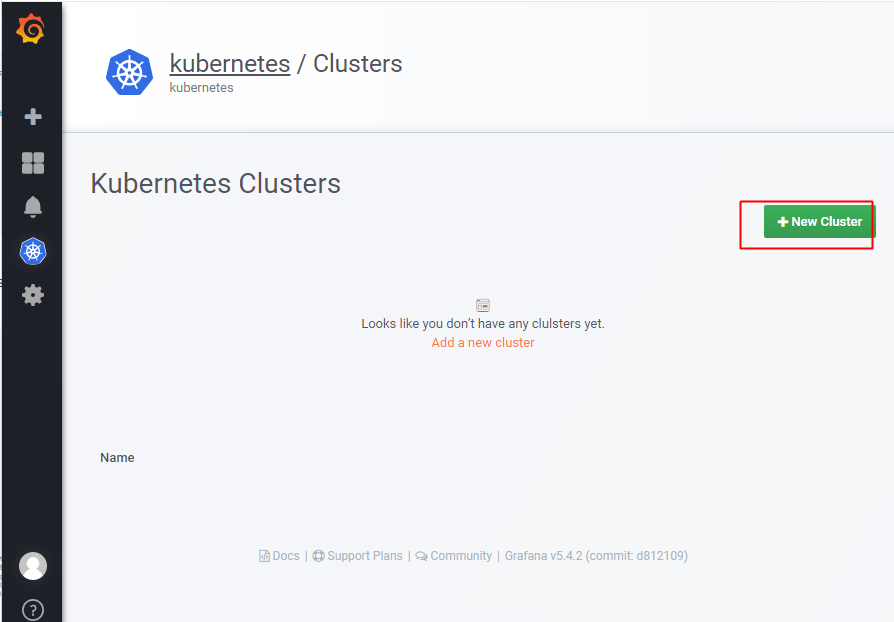

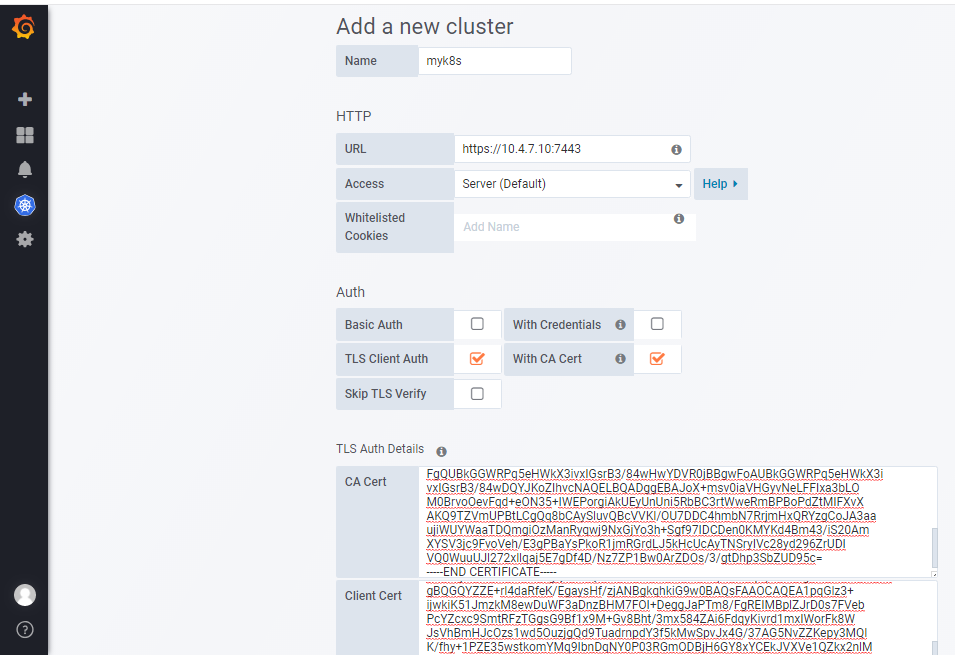

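After clicking Enable, click kubernetes in the left-hand menu, then Clusters, then New.

Name: any name will do.

URL: the apiserver address. kube-apiserver on 10.4.7.21 and 10.4.7.22 listens on port 6443 and is proxied through port 7443 on 10.4.7.10.

Fill in the relevant CA certificates.

Save.

Testing is optional.

Click Save & Test.

An error is reported; ignore it here.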

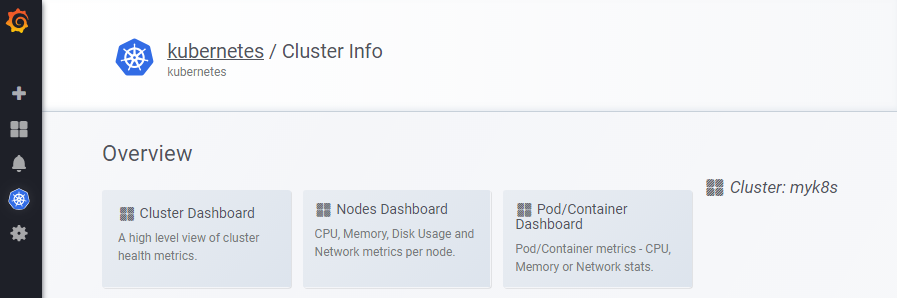

After clicking myk8s,

the dashboards appear.

Click one of the dashboards to see the data.

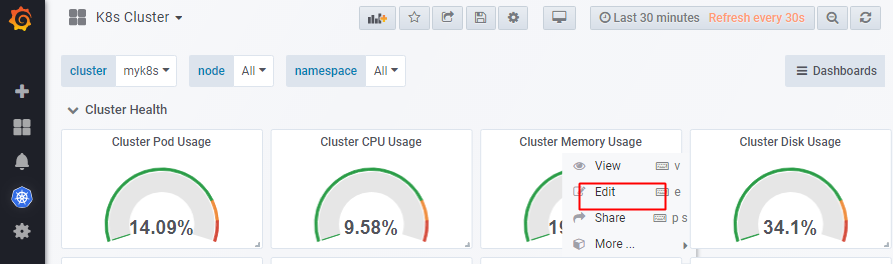

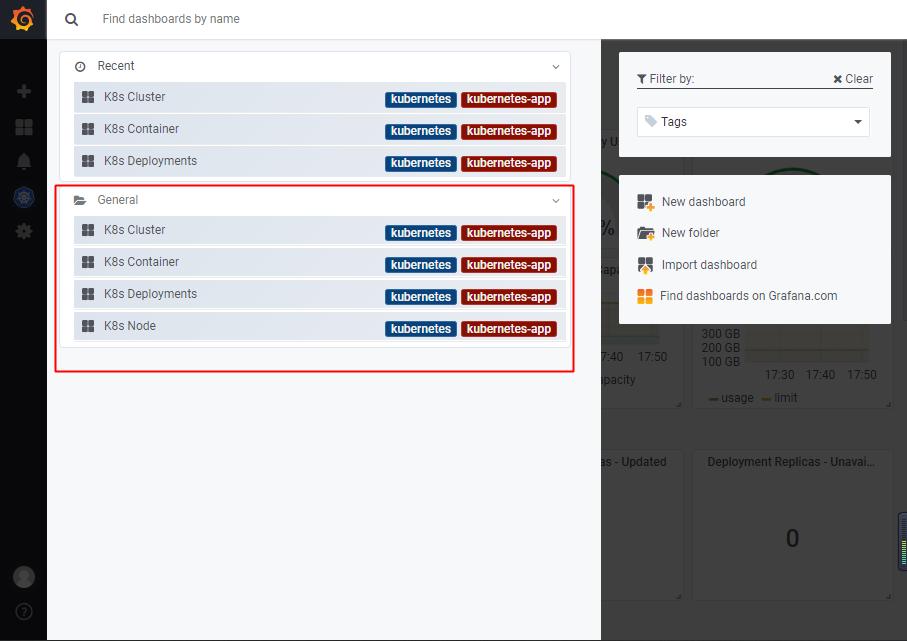

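Click K8s Cluster in the top-left corner. The plugin generates four overview dashboards, for example K8s Deployments, which shows how many Deployment revisions exist, and K8s Container, which shows memory and CPU usage.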

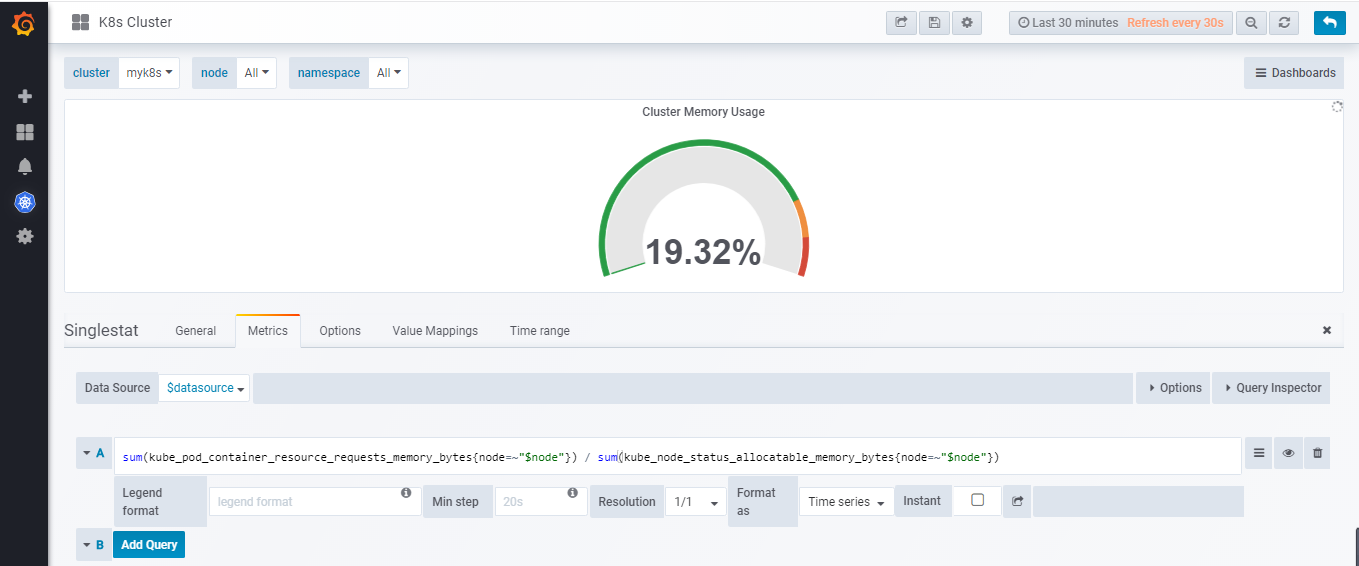

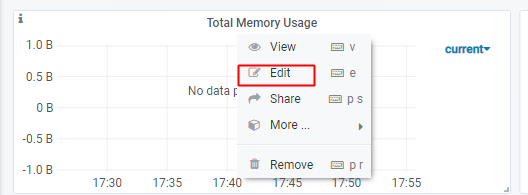

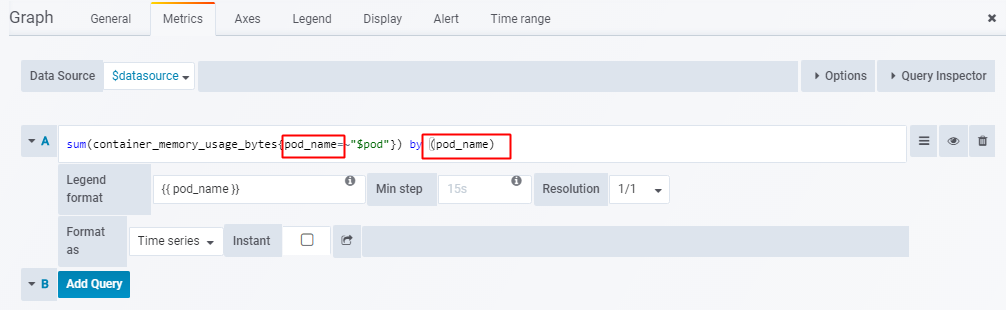

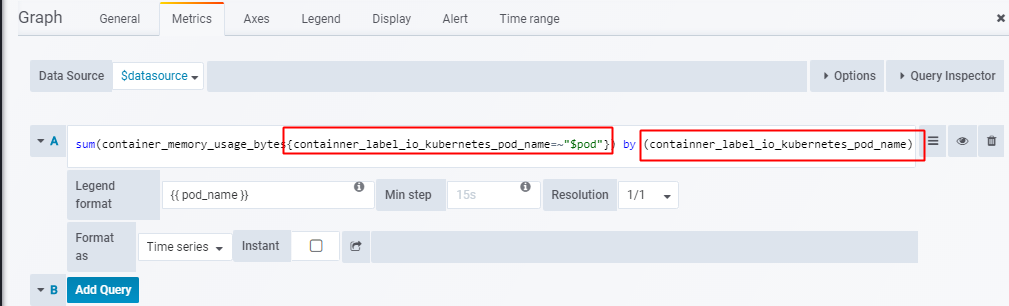

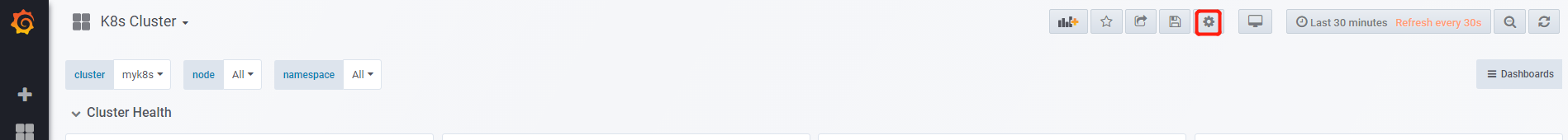

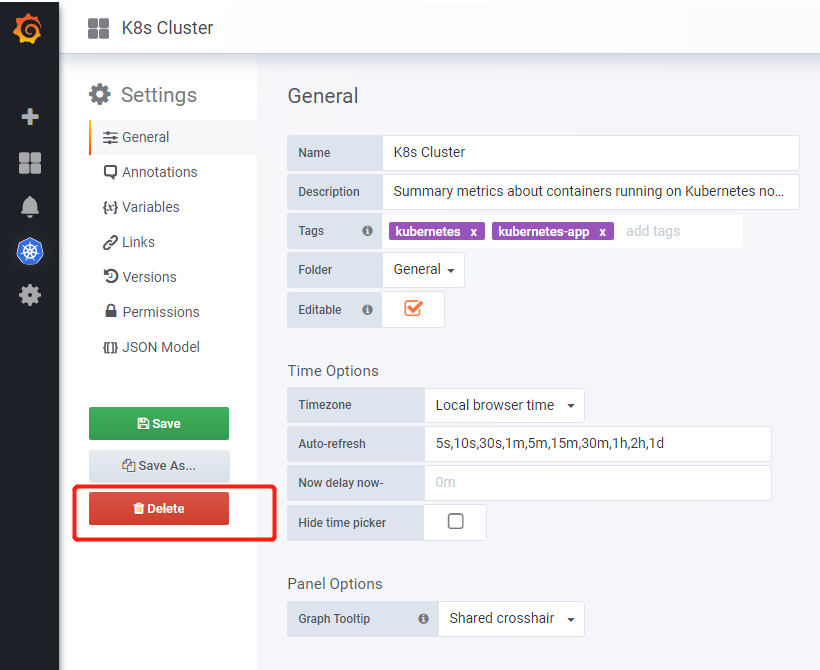

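Note: the K8s Container dashboard has a bug and shows no data by default. To fix it:

Click K8s Container in the screenshot above, click Edit, and change

pod_name --> container_label_io_kubernetes_pod_name

Before the change

After the change

Because the bundled dashboards are buggy and their figures are inaccurate, delete all four and import new dashboards from the official site.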

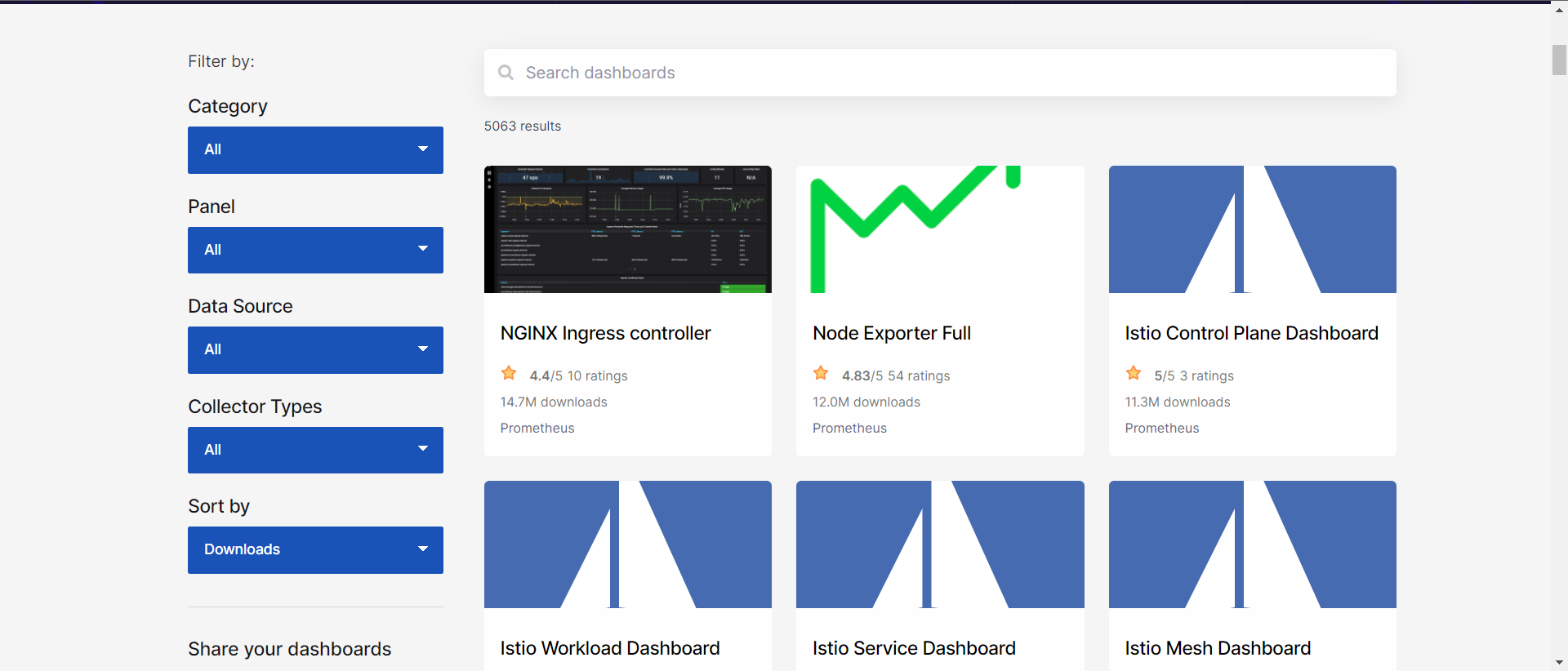

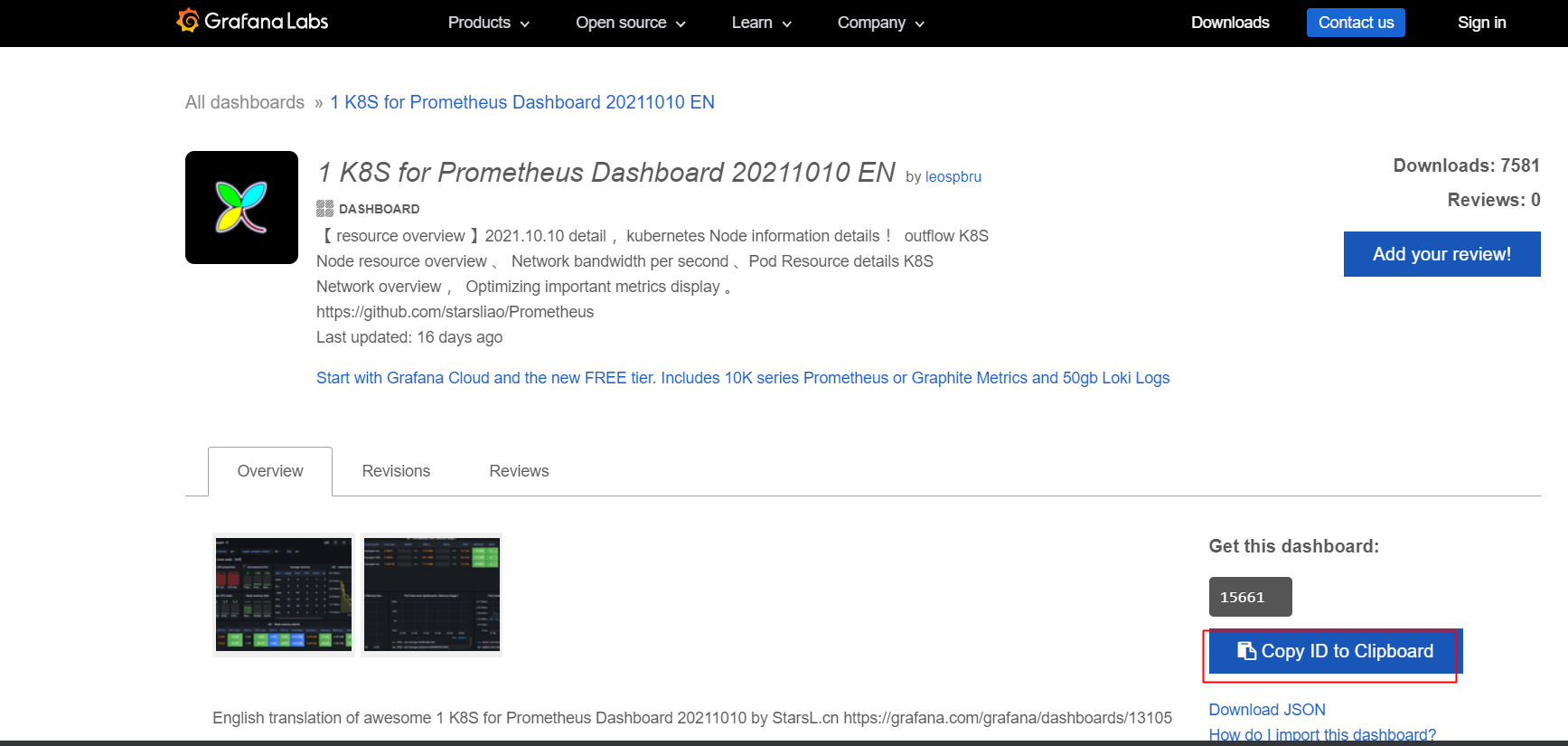

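Importing new dashboards from the official site:

Method 1:

Look for a dashboard on the official site.

Open https://grafana.com/grafana/dashboards and pick the most downloaded ones

(the search only works properly in Google Chrome)

Find the one with ID 15661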

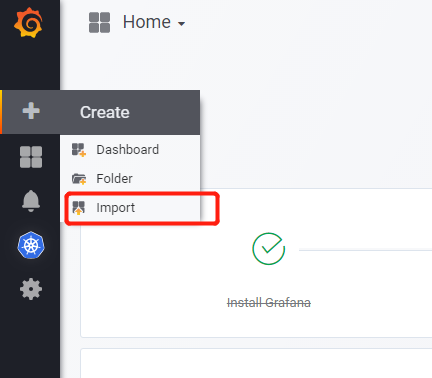

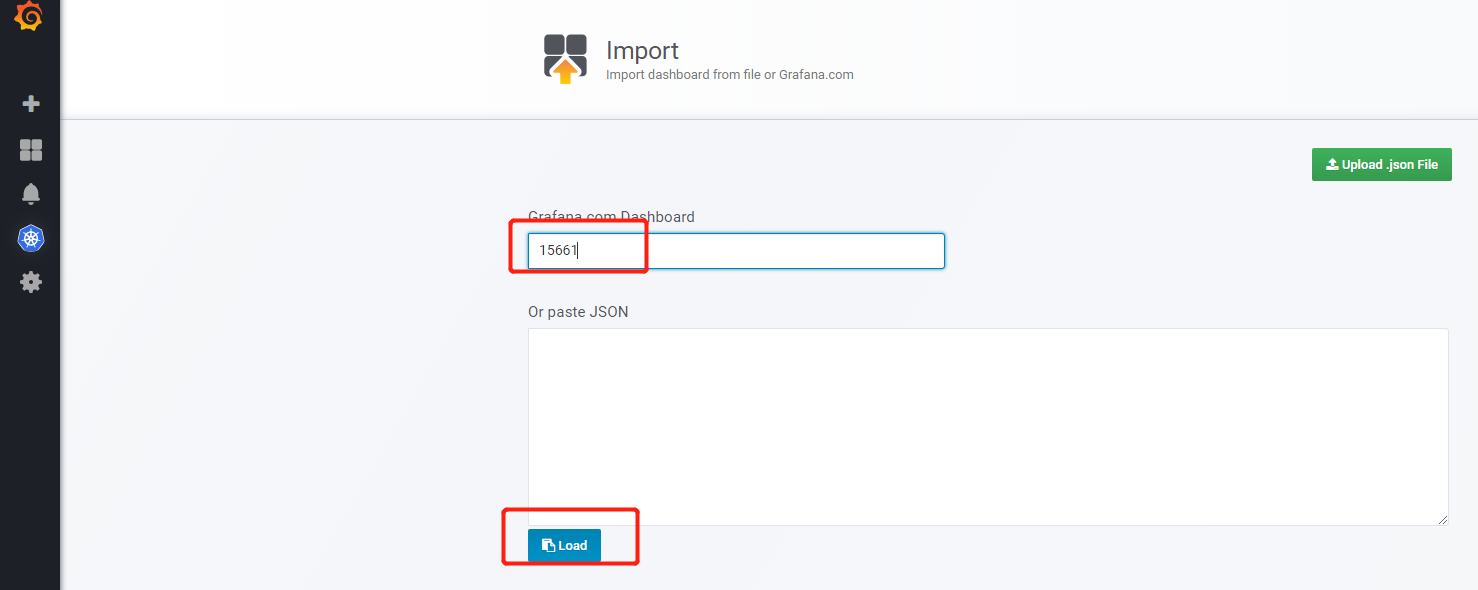

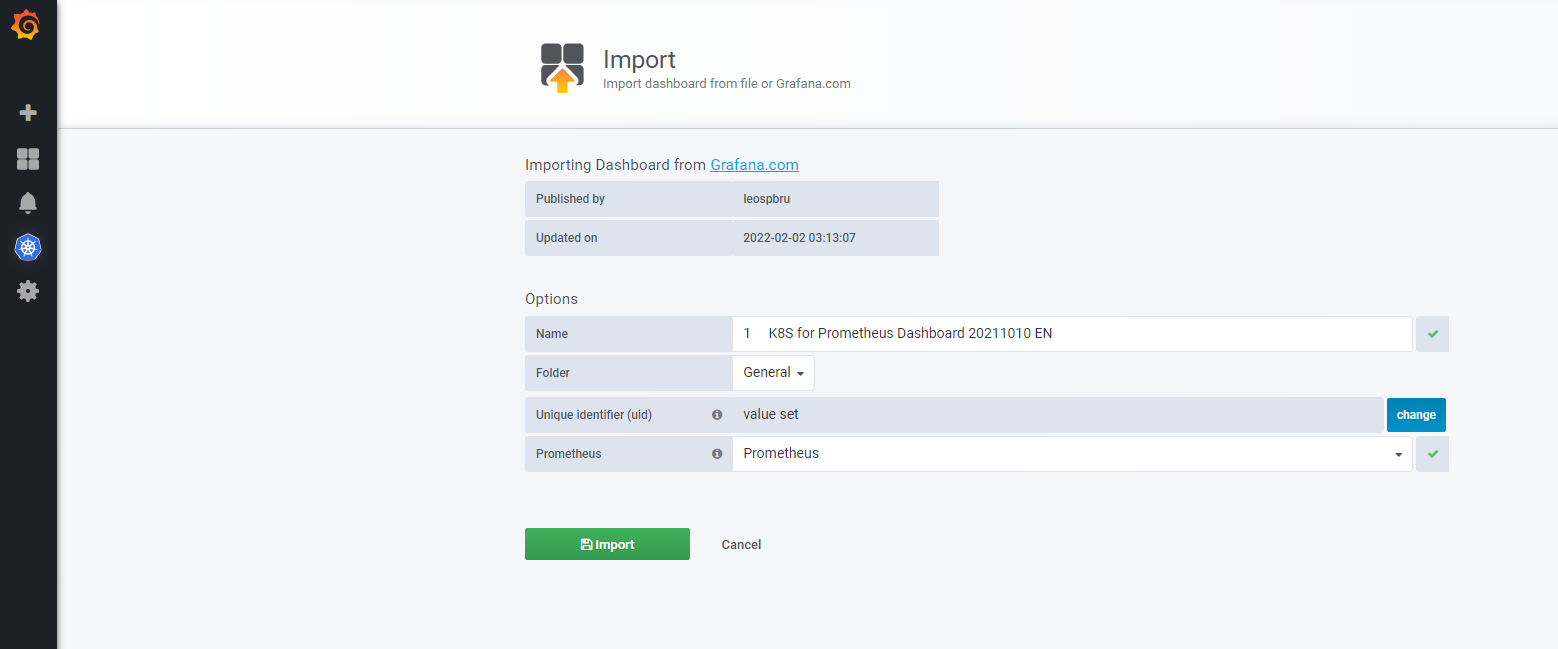

In your own Grafana, click Import dashboard,

enter the ID, then click Load.

Click Import to finish the import.

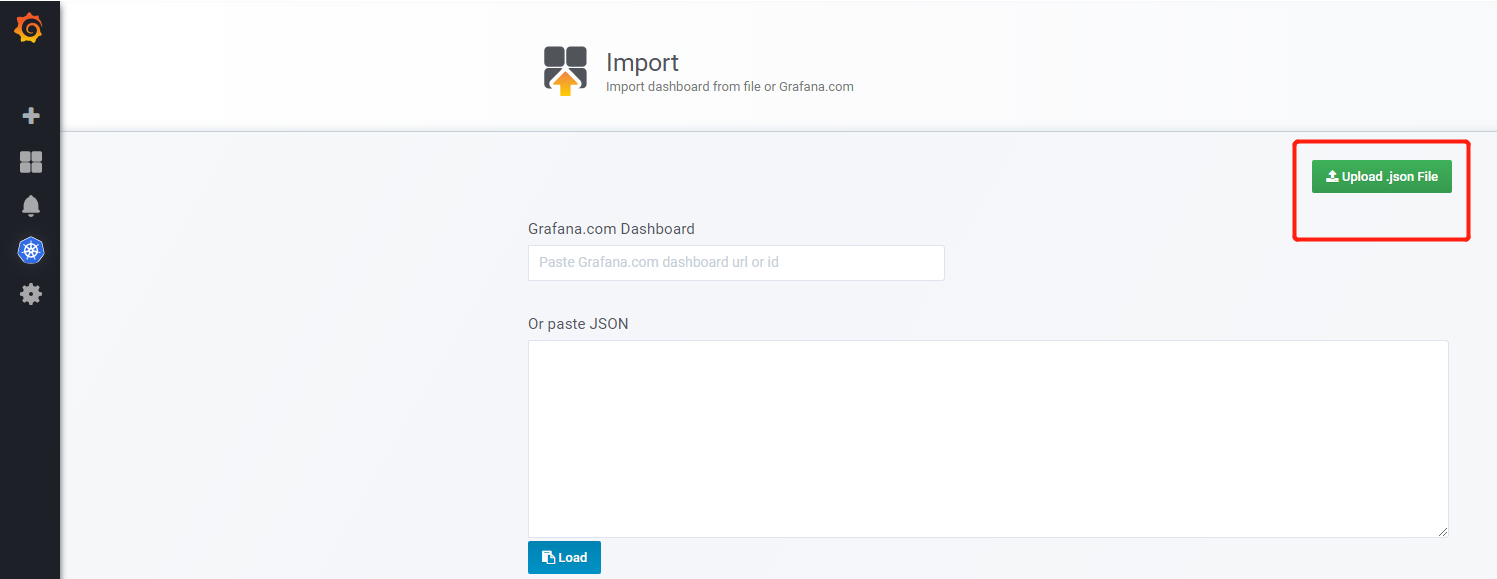

方式二:

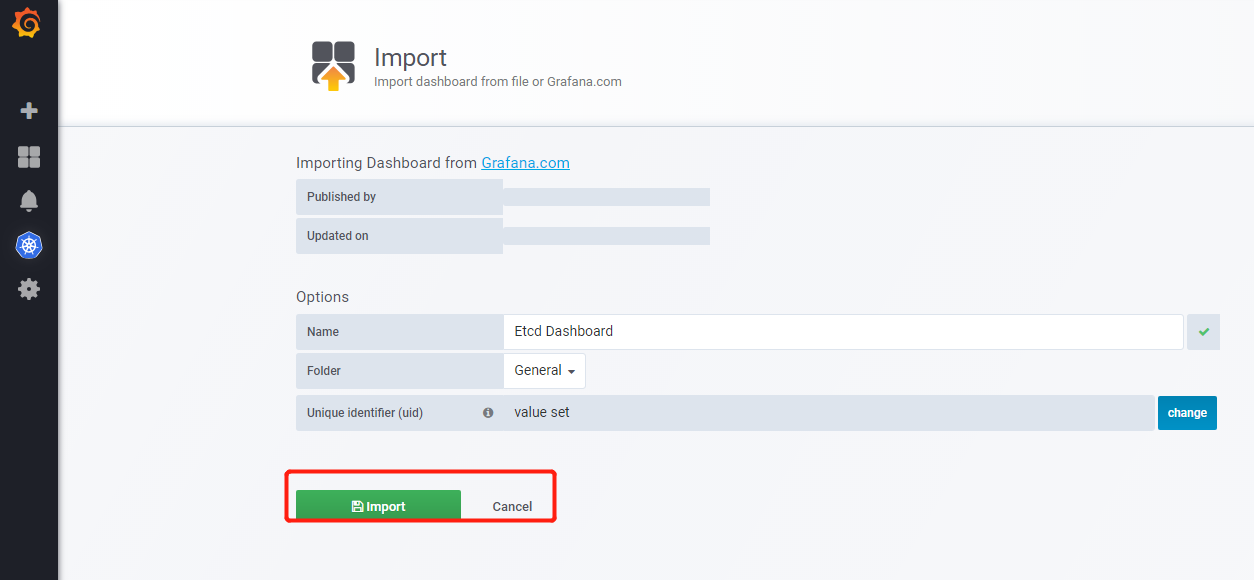

在导入的时候点击Upload .json File 会load本地的

需要导入刚才删除的4个dashboard

选中后import

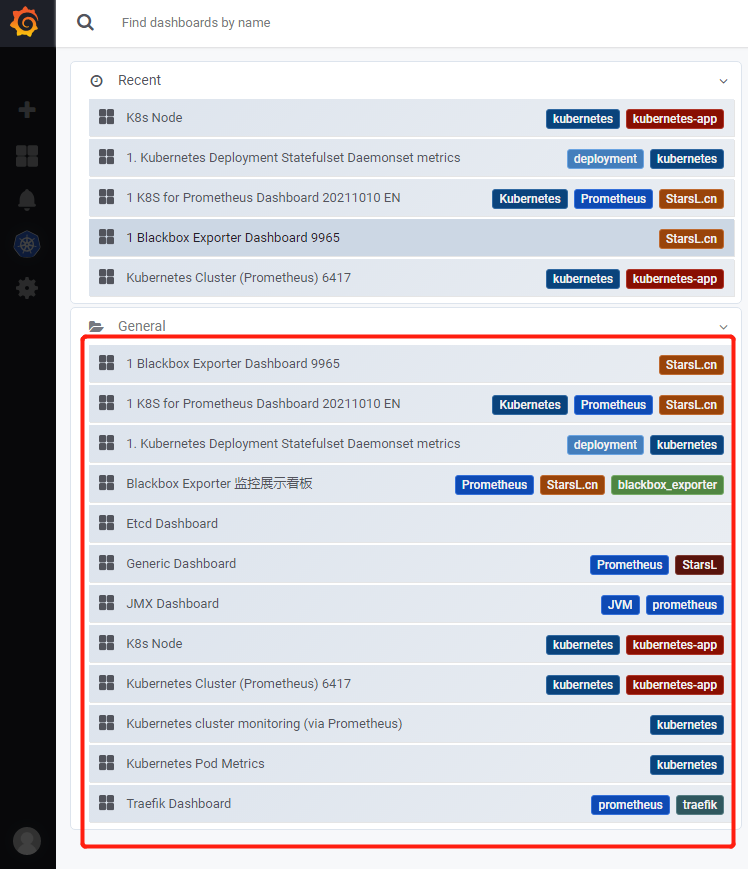

The dashboards after import

Note: the data queried by each panel comes from what was defined beforehand in the prometheus.yml configuration file.

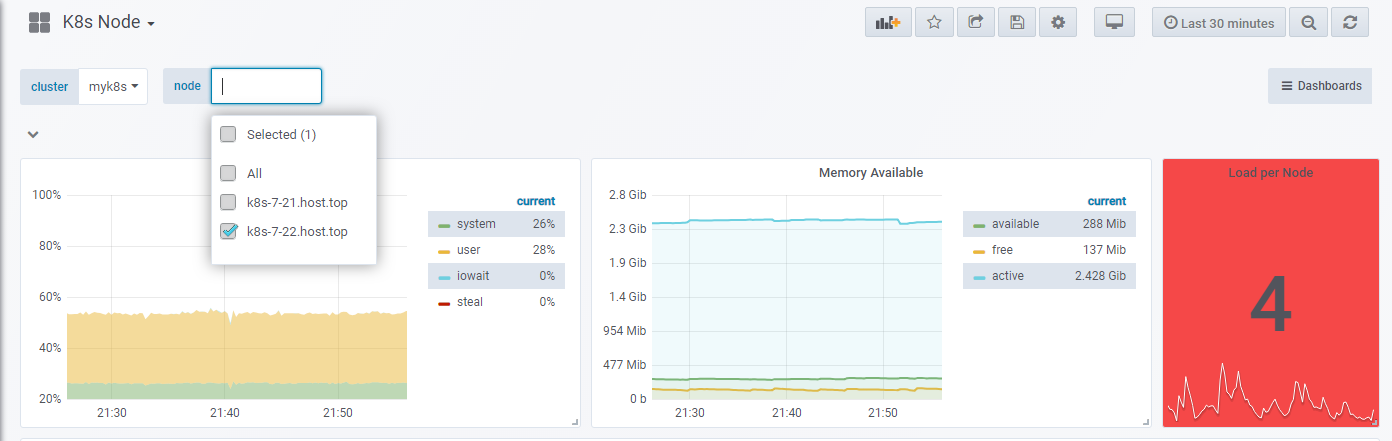

K8s Node

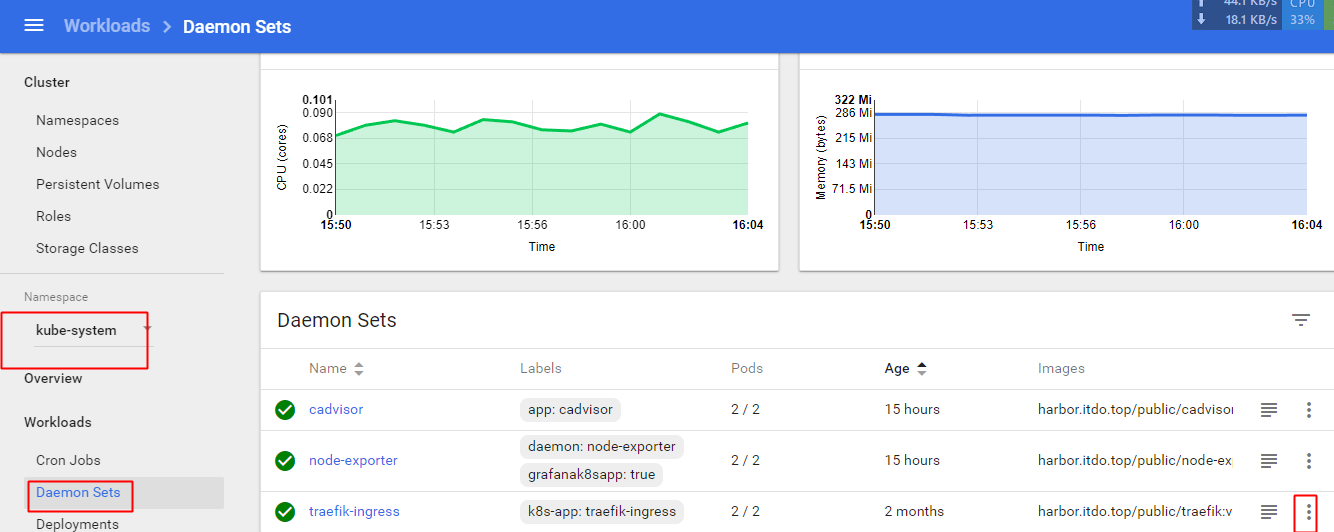

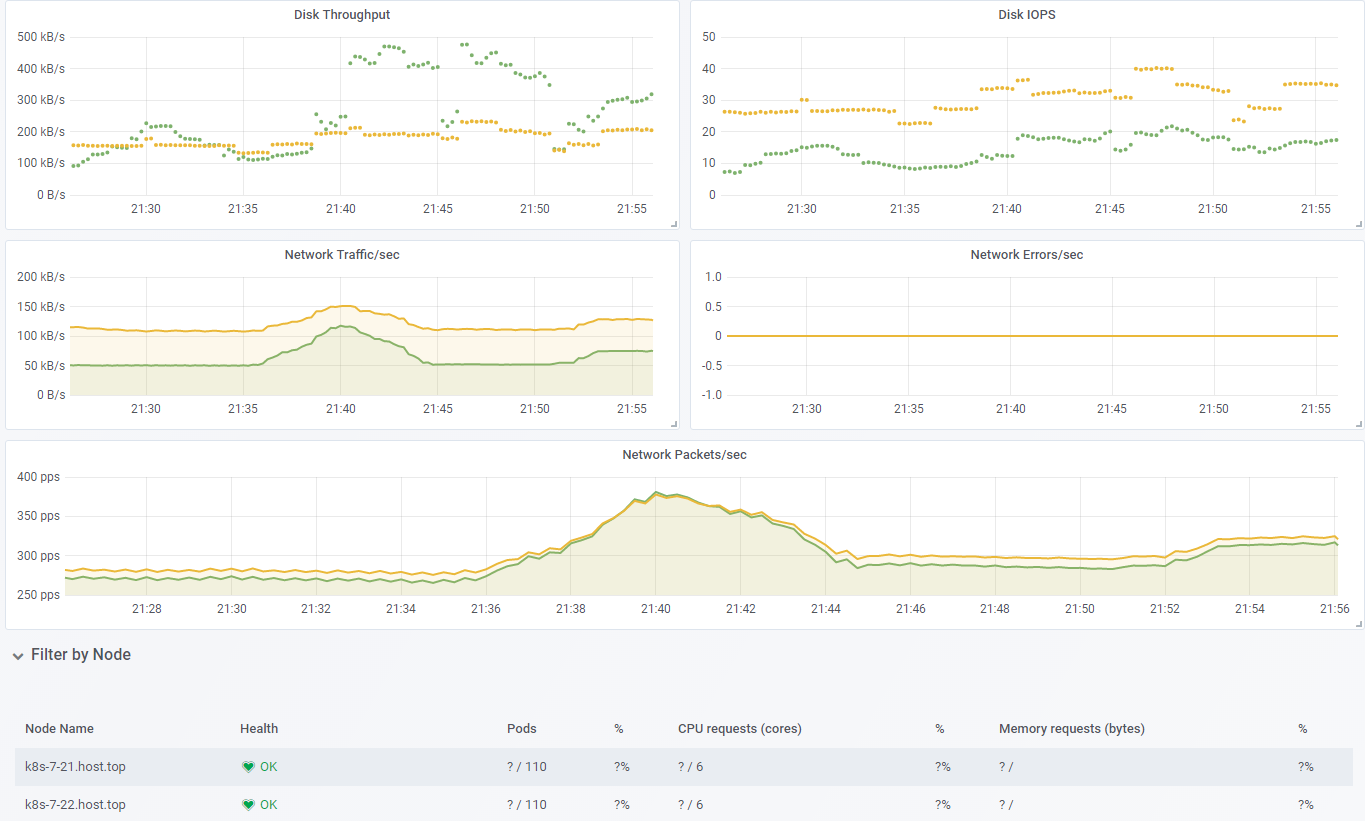

Both nodes are listed, so you can see how many nodes are running

as well as disk, I/O and network figures.

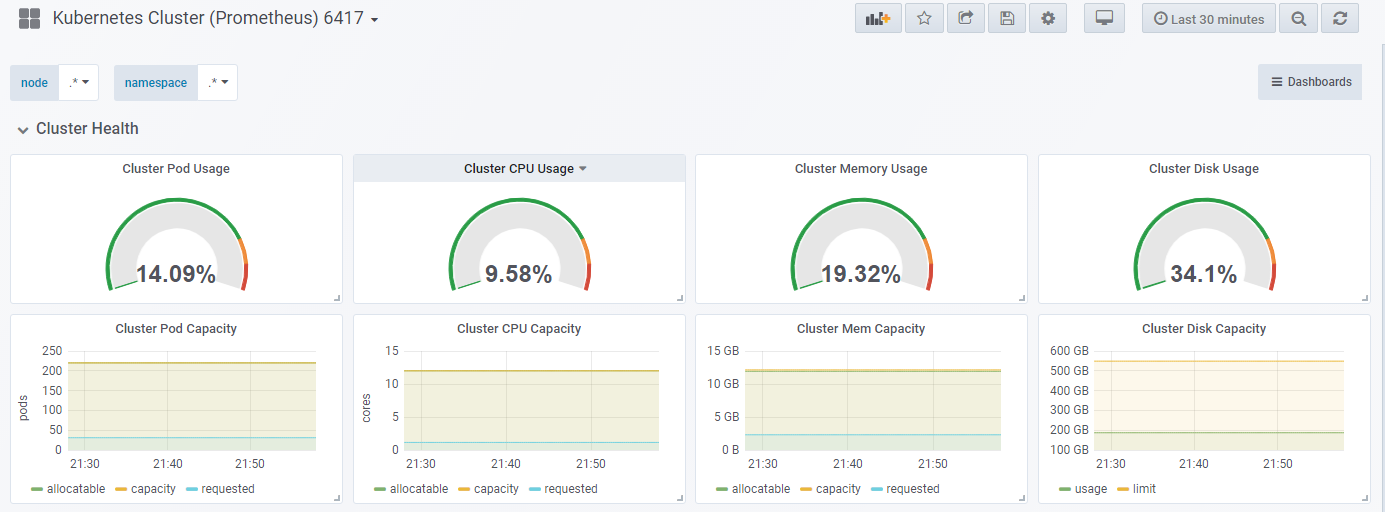

K8s Cluster

Shows an overview of the cluster: Cluster CPU Usage is the CPU percentage and Cluster Memory Usage is the memory percentage.

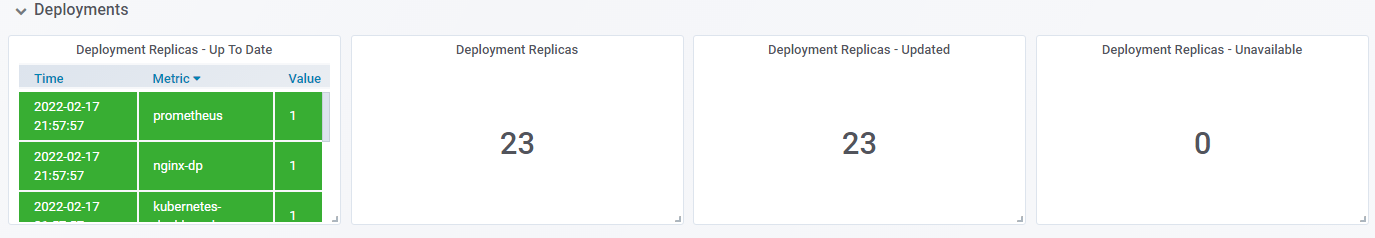

Deployment Replicas: after importing, shows how many replicas there are.

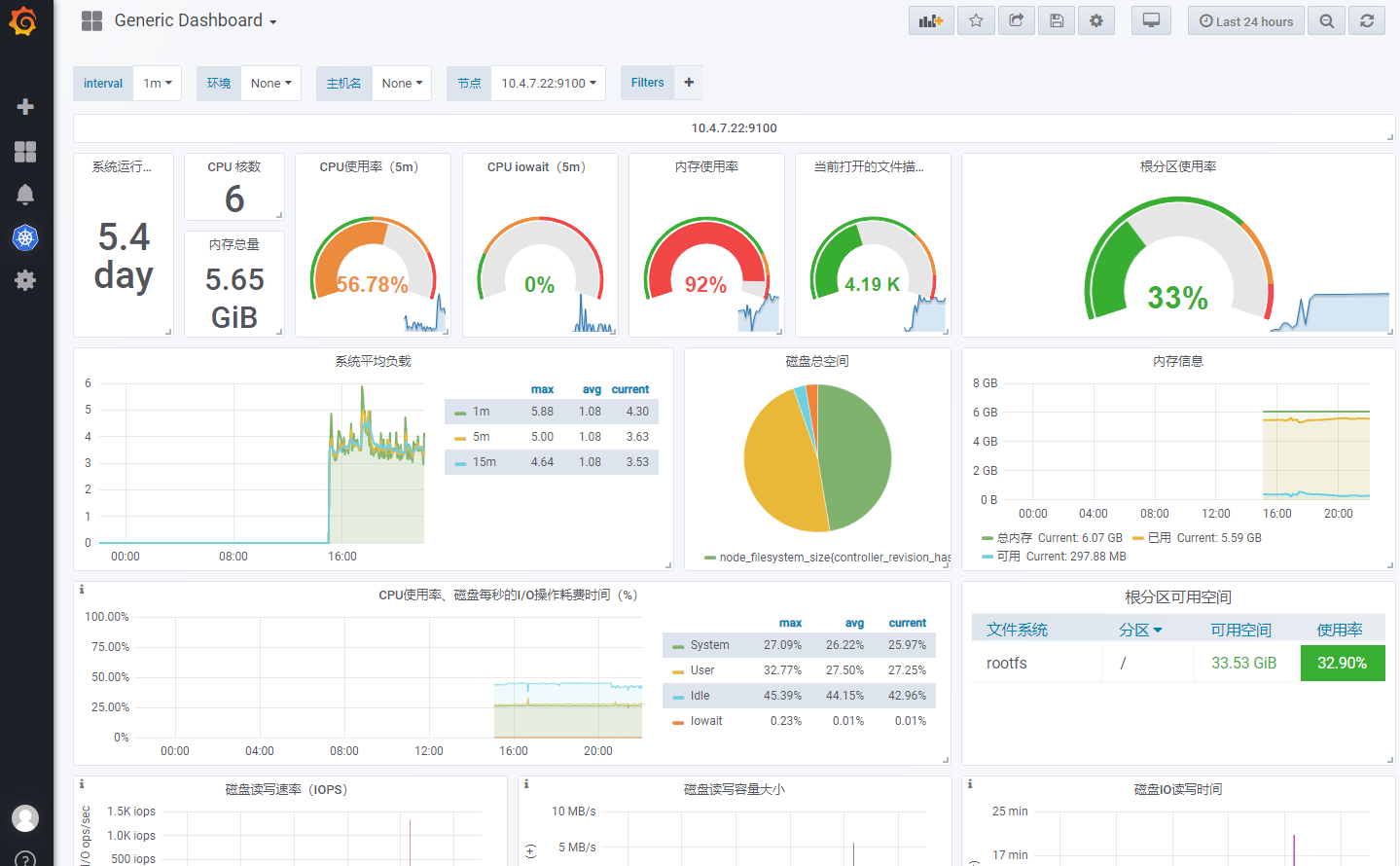

Generic Dasghboard

查看监控宿主机

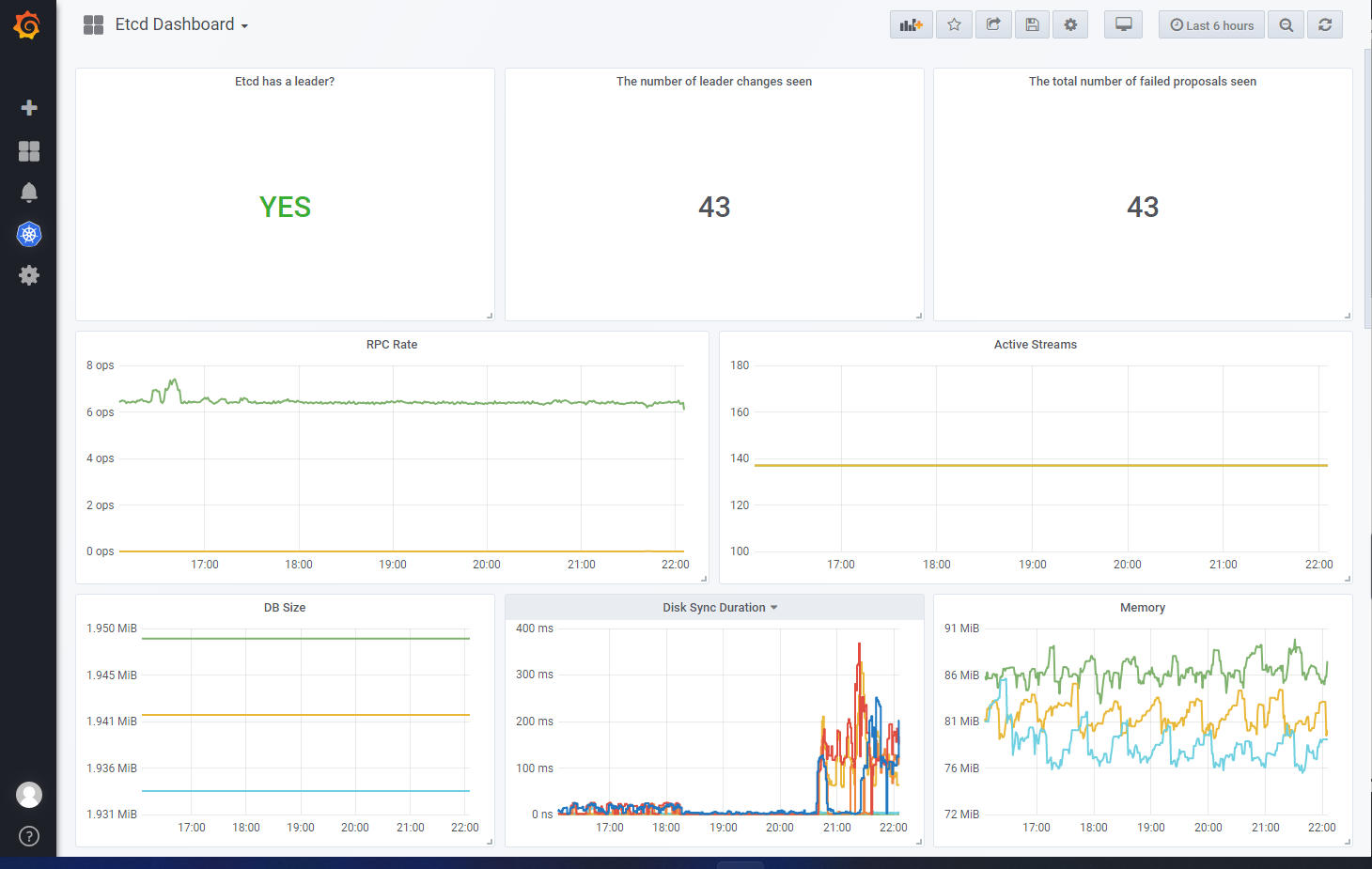

etcd dashborad

先导入Etcd Dashboard.json

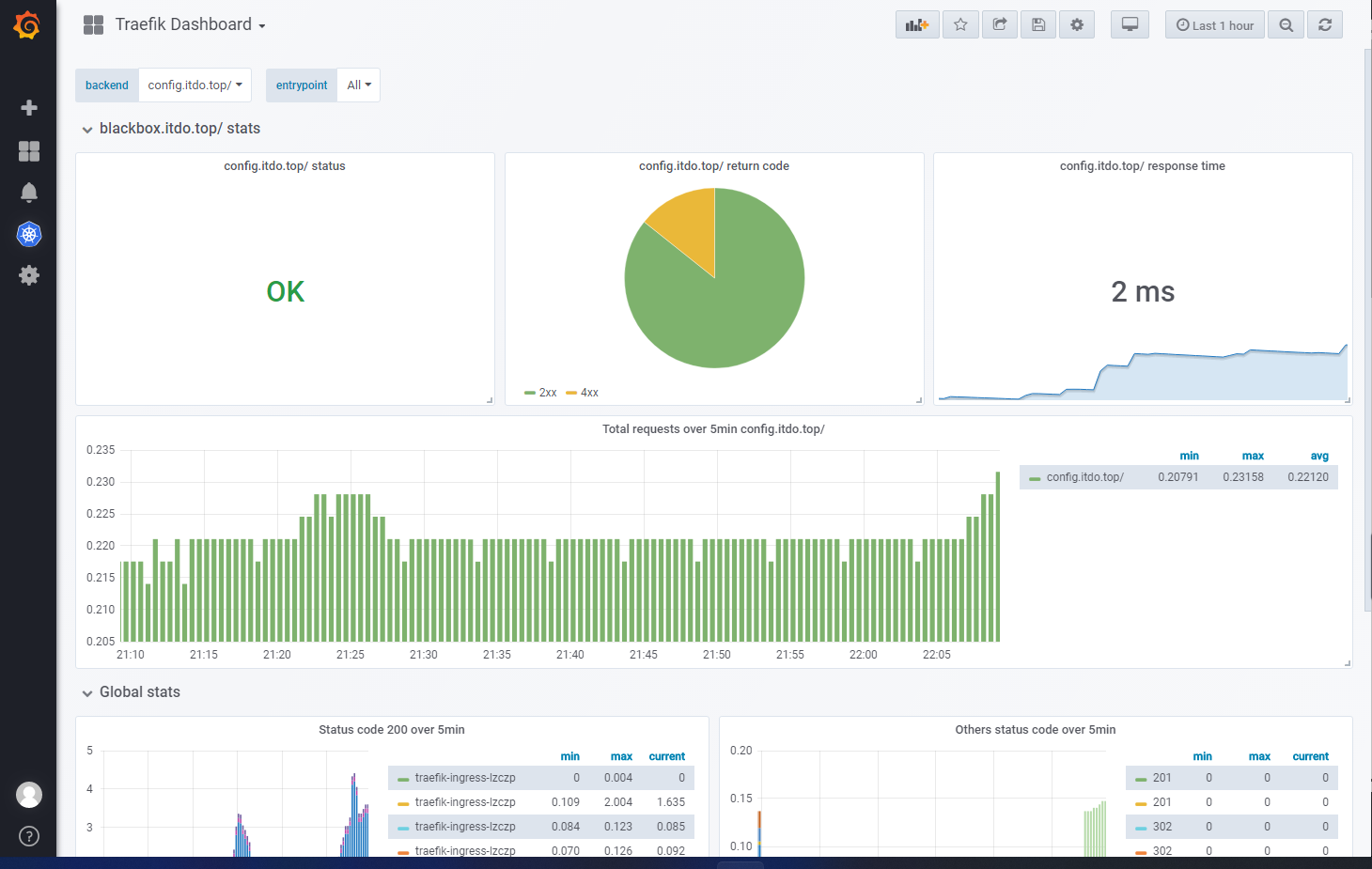

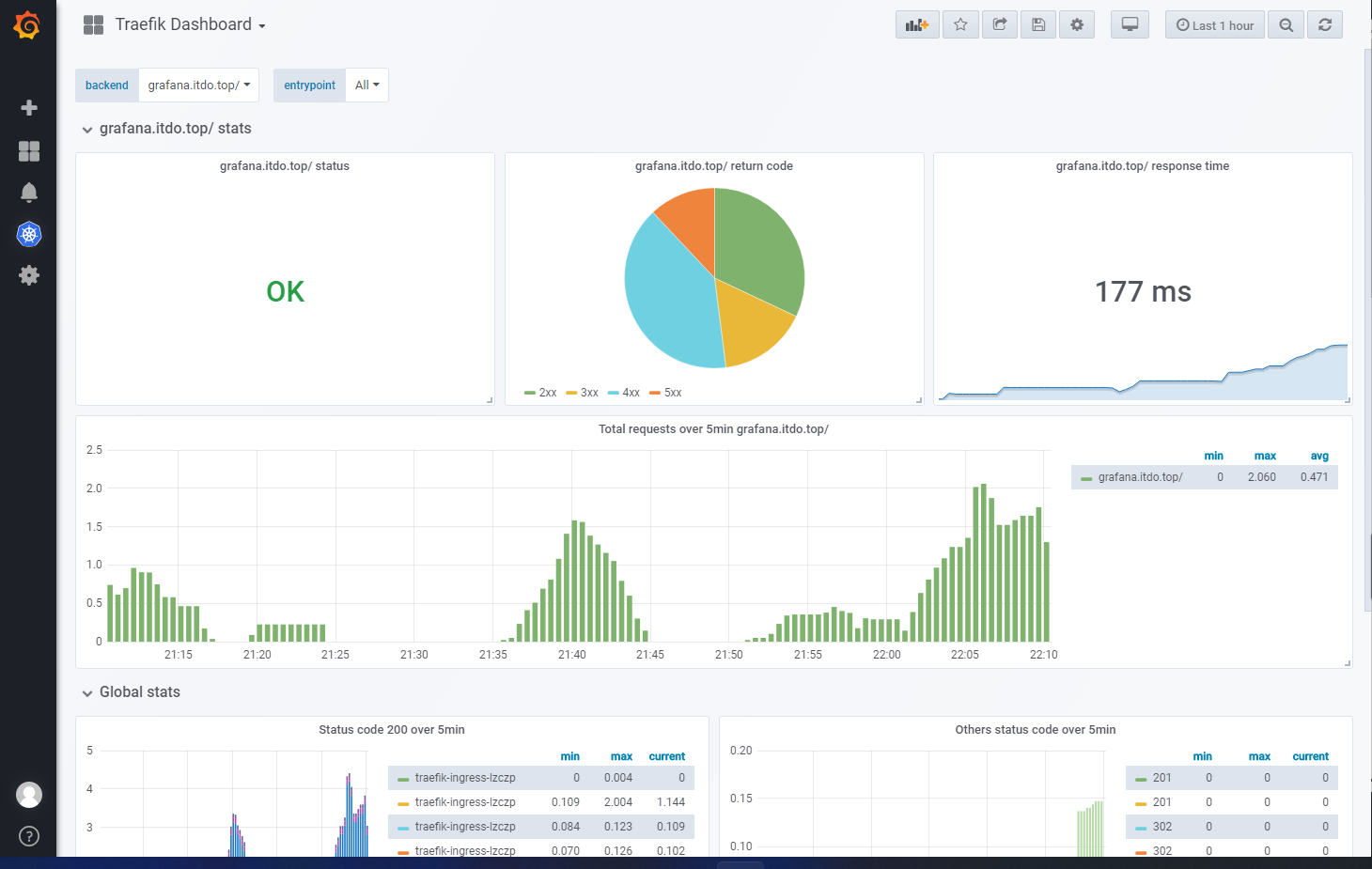

Traefik Dashboard

Shows what traefik-ingress exposes.

Tip: the annotations below must be added.

"annotations": {

"prometheus_io_scheme": "traefik",

"prometheus_io_path": "/metrics",

"prometheus_io_port": "8080"

}

For example, dashboard.itdo.top shows 177ms, meaning its latency is 177ms; anything under 3s is acceptable.

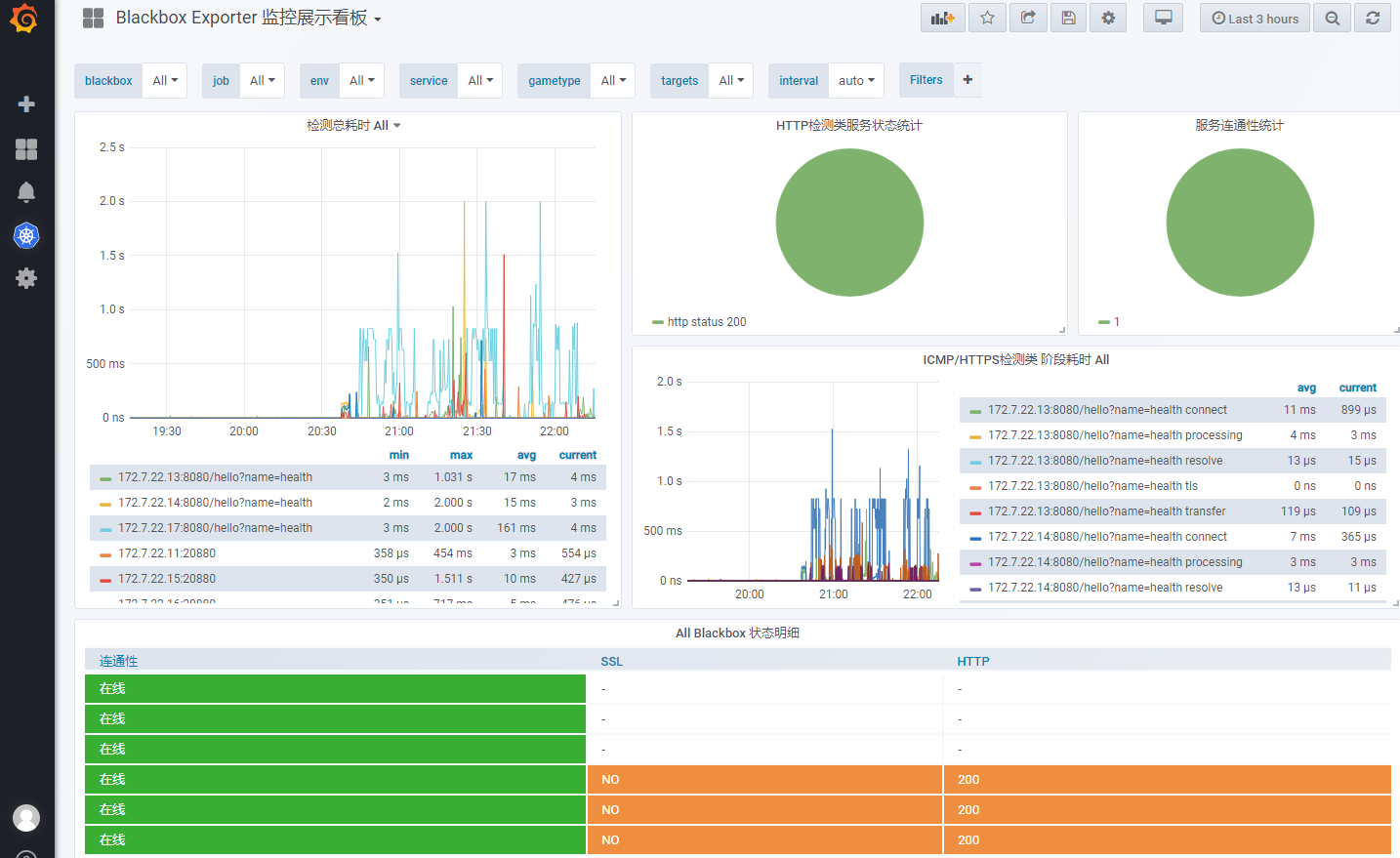

Blackbox Dashboard

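After importing, check the monitored hosts.

Tip: there are two dashboards, IDs 9965 and 7587. Both work and differ only in style; import whichever you prefer.

The dashboard monitors blackbox-exporter itself, and blackbox-exporter in turn monitors dubbo-demo-server and similar services.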

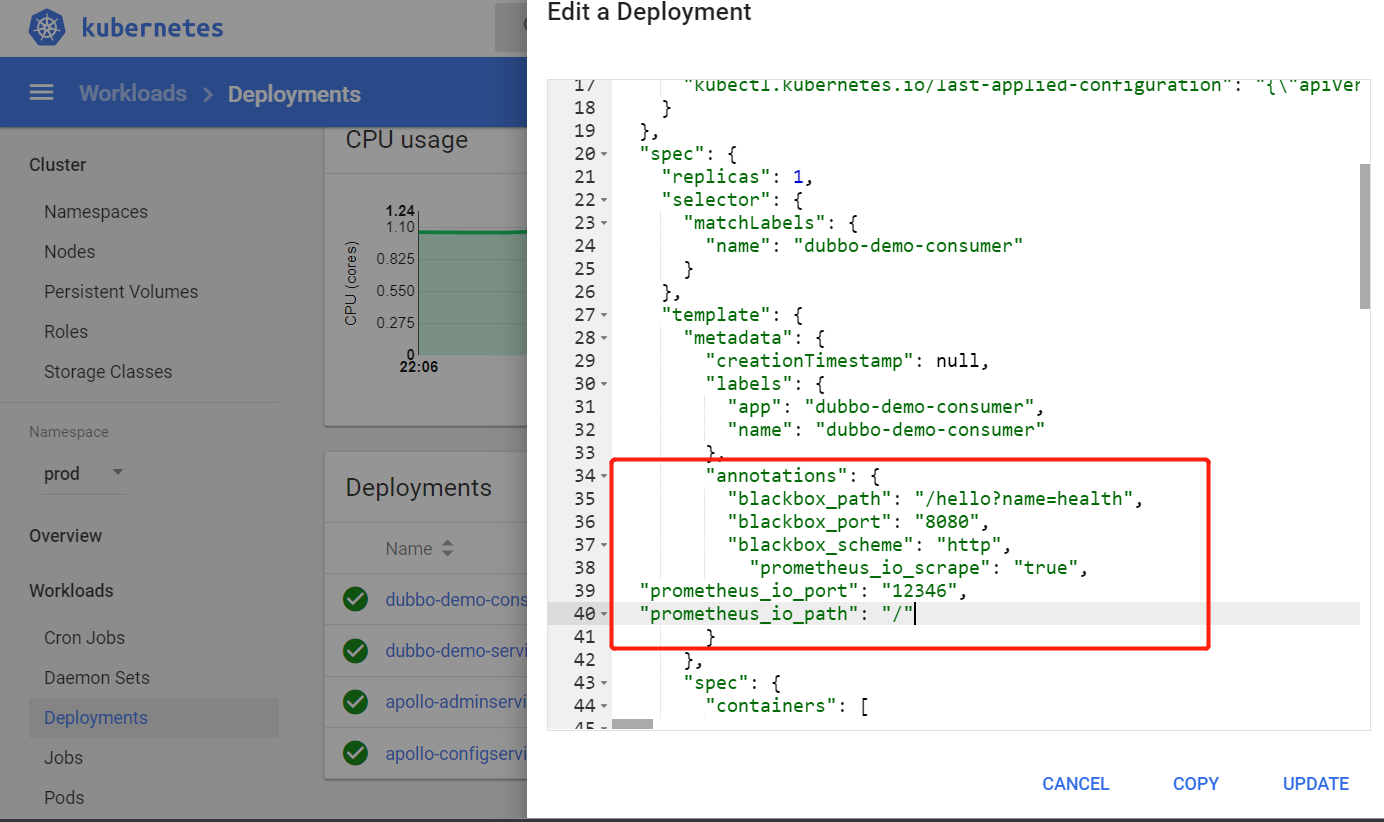

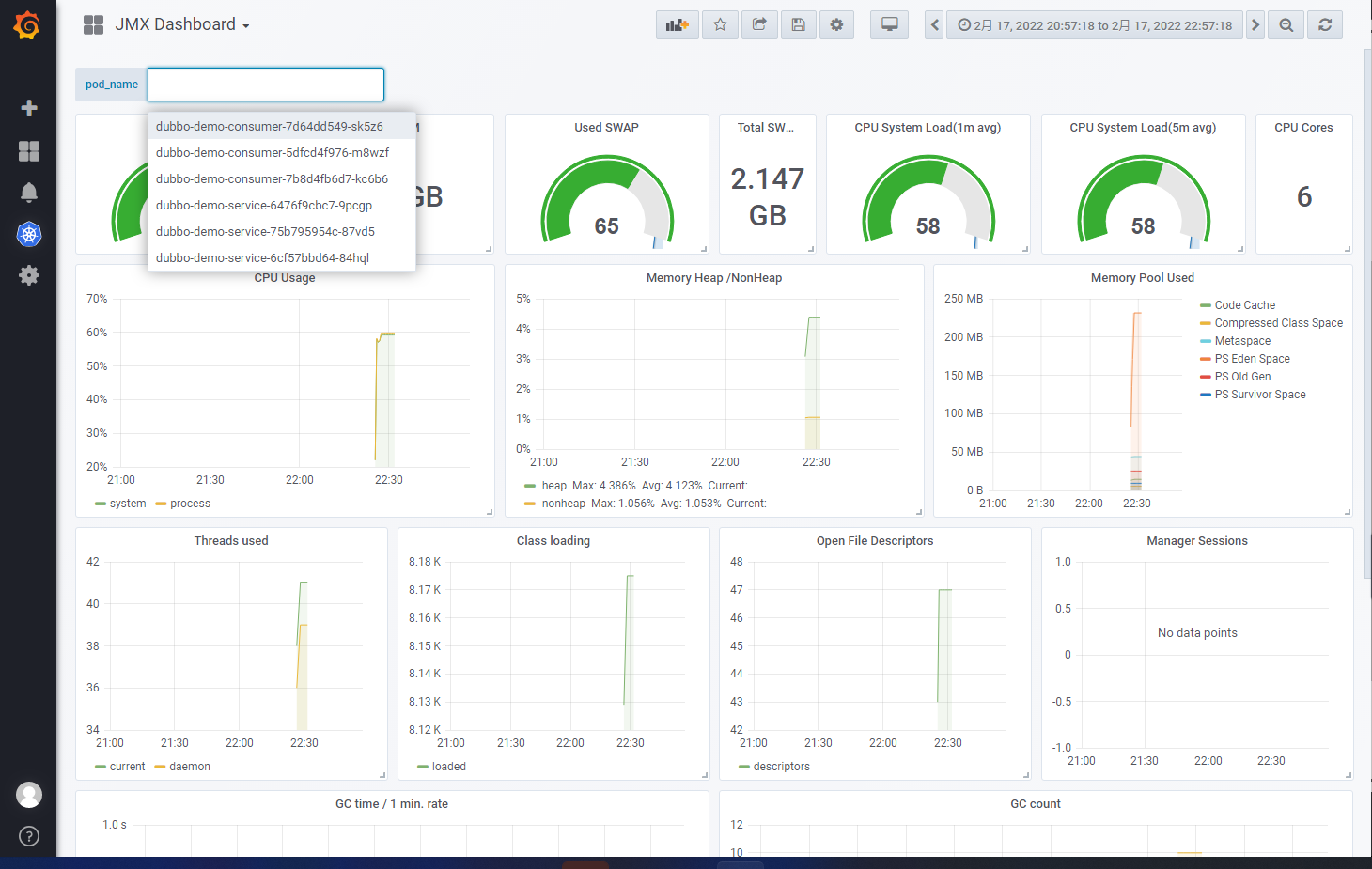

JMX dashboard

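After importing, it monitors the JVM.

Tips:

- The annotations must be added to the business container's dp.yaml

- The business image was built with the monitoring client jmx_javaagent-0.3.1.jar, and the service is started with the argument java -jar -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=

- This corresponds to the rule job_name: 'kubernetes-pods' in the prometheus.yml configuration file

Here we edit dp.yaml and add the annotations: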

"annotations": {

"prometheus_io_scrape": "true",

"prometheus_io_port": "12346",

"prometheus_io_path": "/"

}

Note: 12346 is the JVM's remote port.

Summary:

Monitoring traefik:

"annotations": {

"prometheus_io_scheme": "traefik",

"prometheus_io_path": "/metrics",

"prometheus_io_port": "8080"

}

Monitoring blackbox (TCP):

"annotations": {

"blackbox_port": "20880",

"blackbox_scheme": "tcp"

}

Monitoring blackbox (HTTP):

"annotations": {

"blackbox_path": "/hello?name=health",

"blackbox_port": "8080",

"blackbox_scheme": "http"

}

Monitoring the JVM:

"annotations": {

"prometheus_io_scrape": "true",

"prometheus_io_port": "12346",

"prometheus_io_path": "/"

}
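To make the four annotation patterns easier to compare, the sketch below shows how a pod's annotations could translate into scrape or probe targets. It is an illustration under stated assumptions, not the logic of prometheus.yml itself: the job names blackbox_tcp_pod_probe, blackbox_http_pod_probe and kubernetes-pods appear in this document, while the job name `traefik`, the function `scrape_targets` and the default paths are assumptions made for the example.

```python
def scrape_targets(pod_ip, annotations):
    """Return (job, target) pairs that a pod's annotations opt it into."""
    targets = []
    port = annotations.get("prometheus_io_port")
    path = annotations.get("prometheus_io_path", "/metrics")  # assumed default

    # Metrics scraped directly from the pod.
    if annotations.get("prometheus_io_scheme") == "traefik":
        targets.append(("traefik", f"http://{pod_ip}:{port}{path}"))  # assumed job name
    if annotations.get("prometheus_io_scrape") == "true":
        targets.append(("kubernetes-pods", f"http://{pod_ip}:{port}{path}"))

    # Liveness probes carried out by blackbox-exporter.
    scheme = annotations.get("blackbox_scheme")
    if scheme == "tcp":
        targets.append(("blackbox_tcp_pod_probe",
                        f"{pod_ip}:{annotations['blackbox_port']}"))
    elif scheme == "http":
        probe_path = annotations.get("blackbox_path", "/")  # assumed default
        targets.append(("blackbox_http_pod_probe",
                        f"{pod_ip}:{annotations['blackbox_port']}{probe_path}"))
    return targets


traefik_pod = scrape_targets("172.7.22.5", {
    "prometheus_io_scheme": "traefik",
    "prometheus_io_path": "/metrics",
    "prometheus_io_port": "8080",
})
dubbo_server = scrape_targets("172.7.22.16", {
    "blackbox_port": "20880",
    "blackbox_scheme": "tcp",
})
print(traefik_pod)
print(dubbo_server)
```

The two sample calls reuse the pod IPs that appear earlier in this document: the traefik metrics endpoint and the dubbo-demo-server TCP port.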

6. Deploying Alertmanager

6.1. Preparing the image

[root@k8s-7-200 ]# docker pull docker.io/prom/alertmanager:v0.19.0

[root@k8s-7-200 ]# docker images |grep alert

prom/alertmanager v0.19.0 30594e96cbe8 17 months ago 53.2MB

[root@k8s-7-200 ]# docker image tag 30594e96cbe8 harbor.itdo.top/infra/alertmanager:v0.19.0

[root@k8s-7-200 ]# docker push harbor.itdo.top/infra/alertmanager:v0.19.0

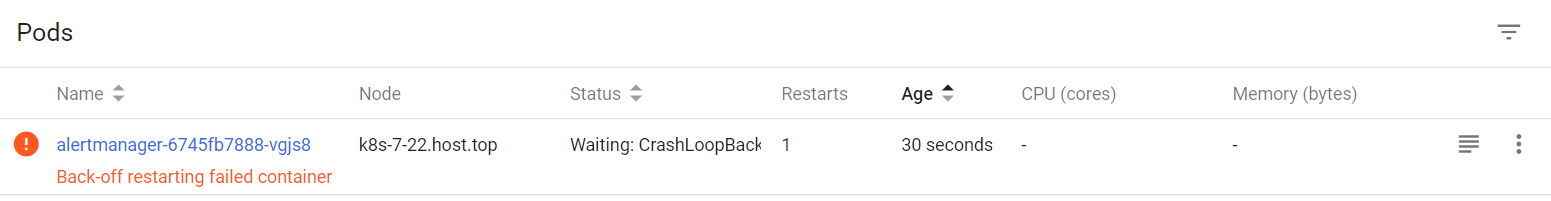

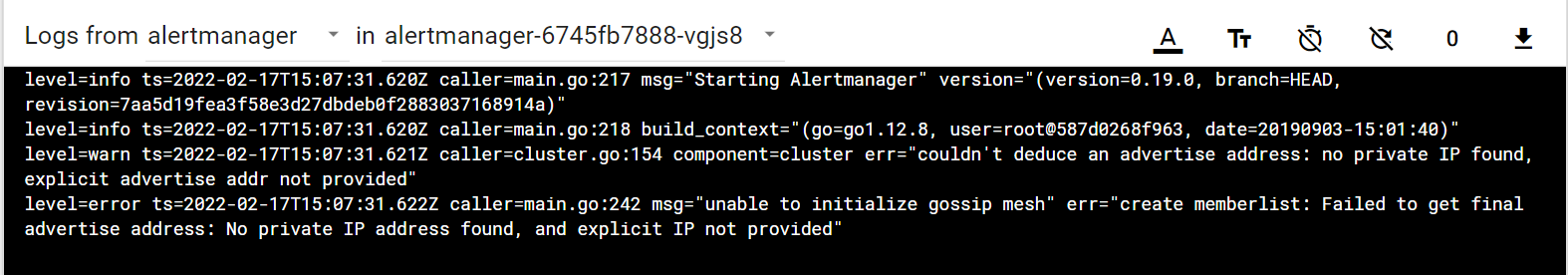

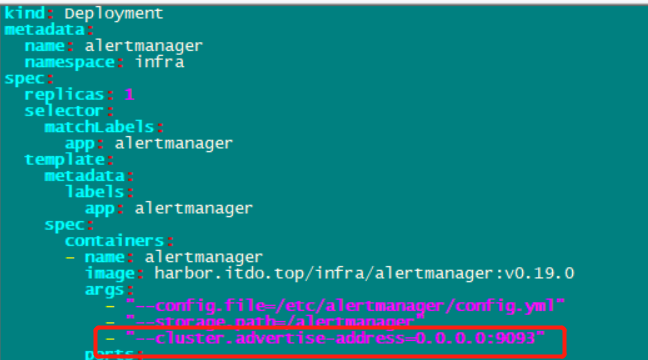

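Note: after the resource manifests are applied, containers running newer versions may fail to start.

The pod event reads: Back-off restarting failed container

The pod log reads: # couldn't deduce an advertise address: no private IP found, explicit advertise addr not provided

Solution 1: downgrade to alertmanager:v0.14.0

Solution 2: add the startup argument --cluster.advertise-address=0.0.0.0:9093

6.2. Preparing the resource manifests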

[root@k8s-7-200 ~]# mkdir /data/k8s-yaml/alertmanager && cd /data/k8s-yaml/alertmanager

[root@k8s-7-200 alertmanager]# vi cm.yaml # note: remove the comments

apiVersion: v1

kind: ConfigMap

metadata:

name: alertmanager-config

namespace: infra

data:

config.yml: |-

global:

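# Time after which an alert is declared resolved if it is no longer reported

resolve_timeout: 5m

# Mail delivery settings

smtp_smarthost: 'smtp.itdo.top:465'

smtp_from: '[email protected]'

smtp_auth_username: '[email protected]'

smtp_auth_password: '×××××××'

smtp_require_tls: false

# Root route that every incoming alert enters; it defines how alerts are dispatched

route:

# Labels by which incoming alerts are regrouped. For example, many alerts carrying cluster=A and alertname=LatencyHigh are batched into a single group

group_by: ['alertname', 'cluster']

# After a new alert group is created, wait at least group_wait before sending the first notification, so that several alerts of the same group can be collected and sent together

group_wait: 30s

# After the first notification has been sent, wait group_interval before notifying about new alerts added to the group

group_interval: 5m

# If a notification has already been sent successfully, wait repeat_interval before sending it again

repeat_interval: 5m

# Default receiver: an alert that matches no route is sent to this receiver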

receiver: default

receivers:

- name: 'default'

email_configs:

- to: '[email protected]' # recipient

send_resolved: true
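The `group_by` setting in the configuration above decides which alerts end up in the same notification. The following sketch illustrates only that grouping step, using made-up alerts. The function `group_alerts` and the sample label values are hypothetical, and the timing settings (group_wait, group_interval, repeat_interval) are not modelled.

```python
from collections import defaultdict


def group_alerts(alerts, group_by=("alertname", "cluster")):
    """Bucket alerts by the values of their group_by labels."""
    groups = defaultdict(list)
    for alert in alerts:
        # Alerts sharing the same values for every group_by label form one group.
        key = tuple(alert["labels"].get(name, "") for name in group_by)
        groups[key].append(alert)
    return dict(groups)


# Made-up alerts for illustration.
alerts = [
    {"labels": {"alertname": "LatencyHigh", "cluster": "A", "instance": "node1"}},
    {"labels": {"alertname": "LatencyHigh", "cluster": "A", "instance": "node2"}},
    {"labels": {"alertname": "ProbeFailed", "cluster": "A", "instance": "node1"}},
]

groups = group_alerts(alerts)
for key, members in groups.items():
    print(key, len(members))
```

Alerts that differ only in labels outside `group_by`, such as `instance` here, are delivered together in one notification instead of one email each.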

[root@k8s-7-200 alertmanager]# vi dp.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: alertmanager

namespace: infra

spec:

replicas: 1

selector:

matchLabels:

app: alertmanager

template:

metadata:

labels:

app: alertmanager

spec:

containers:

- name: alertmanager

image: harbor.itdo.top/infra/alertmanager:v0.19.0 # change to your own image address

args:

- "--config.file=/etc/alertmanager/config.yml"

- "--storage.path=/alertmanager"

ports:

- name: alertmanager

containerPort: 9093

volumeMounts:

- name: alertmanager-cm

mountPath: /etc/alertmanager

volumes:

- name: alertmanager-cm

configMap:

name: alertmanager-config

imagePullSecrets:

- name: harbor

[root@k8s-7-200 alertmanager]# vi svc.yaml

# Prometheus calls Alertmanager by its service name rather than through an ingress domain

apiVersion: v1

kind: Service

metadata:

name: alertmanager

namespace: infra

spec:

selector:

app: alertmanager

ports:

- port: 80

targetPort: 9093

6.3. Applying the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/alertmanager/cm.yaml

configmap/alertmanager-config created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/alertmanager/dp.yaml

deployment.extensions/alertmanager created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/alertmanager/svc.yaml

service/alertmanager created

6.4. Connecting Alertmanager to Prometheus

Add the alerting rules (they can be used as they are; to go deeper, study PromQL)

[root@k8s-7-200 ~]# vi /data/nfs-volume/prometheus/etc/rules.yml # placed under the prometheus directory

groups:

- name: hostStatsAlert

rules:

- alert: hostCpuUsageAlert

expr: sum(avg without (cpu)(irate(node_cpu{mode!='idle'}[5m]))) by (instance) > 0.85

for: 5m

labels:

severity: warning

annotations:

summary: "{{ $labels.instance }} CPU usage above 85% (current value: {{ $value }}%)"

- alert: hostMemUsageAlert

expr: (node_memory_MemTotal - node_memory_MemAvailable)/node_memory_MemTotal > 0.85

for: 5m

labels:

severity: warning

annotations:

summary: "{{ $labels.instance }} MEM usage above 85% (current value: {{ $value }}%)"

- alert: OutOfInodes

expr: node_filesystem_files_free{fstype="overlay",mountpoint ="/"} / node_filesystem_files{fstype="overlay",mountpoint ="/"} * 100 < 10

for: 5m

labels:

severity: warning

annotations:

summary: "Out of inodes (instance {{ $labels.instance }})"

description: "Disk is almost running out of available inodes (< 10% left) (current value: {{ $value }})"

- alert: OutOfDiskSpace

expr: node_filesystem_free{fstype="overlay",mountpoint ="/rootfs"} / node_filesystem_size{fstype="overlay",mountpoint ="/rootfs"} * 100 < 10

for: 5m

labels:

severity: warning

annotations:

summary: "Out of disk space (instance {{ $labels.instance }})"

description: "Disk is almost full (< 10% left) (current value: {{ $value }})"

- alert: UnusualNetworkThroughputIn

expr: sum by (instance) (irate(node_network_receive_bytes[2m])) / 1024 / 1024 > 100

for: 5m

labels:

severity: warning

annotations:

summary: "Unusual network throughput in (instance {{ $labels.instance }})"

description: "Host network interfaces are probably receiving too much data (> 100 MB/s) (current value: {{ $value }})"

- alert: UnusualNetworkThroughputOut

expr: sum by (instance) (irate(node_network_transmit_bytes[2m])) / 1024 / 1024 > 100

for: 5m

labels:

severity: warning

annotations:

summary: "Unusual network throughput out (instance {{ $labels.instance }})"

description: "Host network interfaces are probably sending too much data (> 100 MB/s) (current value: {{ $value }})"

- alert: UnusualDiskReadRate

expr: sum by (instance) (irate(node_disk_bytes_read[2m])) / 1024 / 1024 > 50

for: 5m

labels:

severity: warning

annotations:

summary: "Unusual disk read rate (instance {{ $labels.instance }})"

description: "Disk is probably reading too much data (> 50 MB/s) (current value: {{ $value }})"

- alert: UnusualDiskWriteRate

expr: sum by (instance) (irate(node_disk_bytes_written[2m])) / 1024 / 1024 > 50

for: 5m

labels:

severity: warning

annotations:

summary: "Unusual disk write rate (instance {{ $labels.instance }})"

description: "Disk is probably writing too much data (> 50 MB/s) (current value: {{ $value }})"

- alert: UnusualDiskReadLatency

expr: rate(node_disk_read_time_ms[1m]) / rate(node_disk_reads_completed[1m]) > 100

for: 5m

labels:

severity: warning

annotations:

summary: "Unusual disk read latency (instance {{ $labels.instance }})"

description: "Disk latency is growing (read operations > 100ms) (current value: {{ $value }})"

- alert: UnusualDiskWriteLatency

expr: rate(node_disk_write_time_ms[1m]) / rate(node_disk_writes_completed[1m]) > 100

for: 5m

labels:

severity: warning

annotations:

summary: "Unusual disk write latency (instance {{ $labels.instance }})"

description: "Disk latency is growing (write operations > 100ms) (current value: {{ $value }})"

- name: http_status

rules:

- alert: ProbeFailed

expr: probe_success == 0

for: 1m

labels:

severity: error

annotations:

summary: "Probe failed (instance {{ $labels.instance }})"

description: "Probe failed (current value: {{ $value }})"

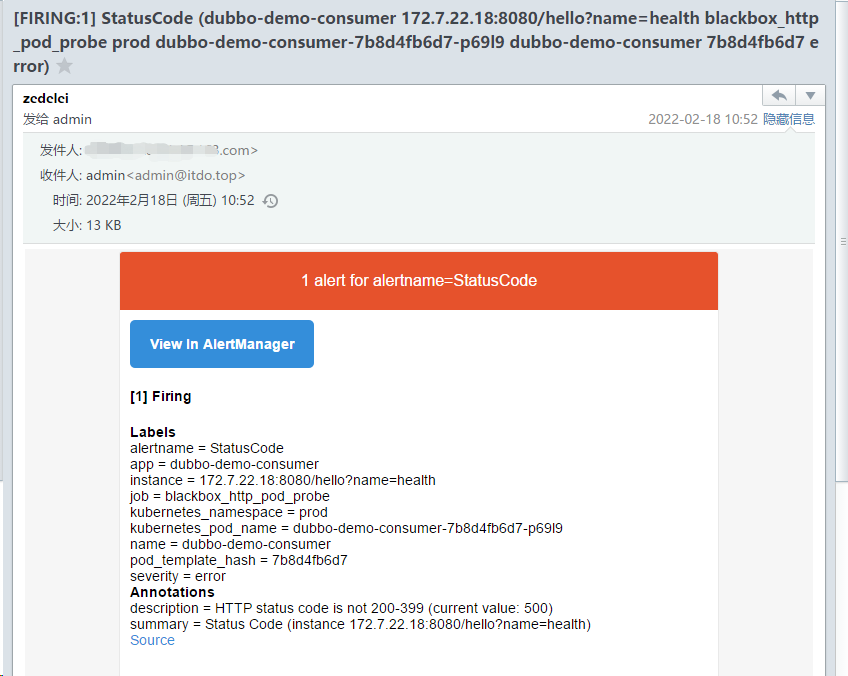

- alert: StatusCode

expr: probe_http_status_code <= 199 OR probe_http_status_code >= 400

for: 1m

labels:

severity: error

annotations:

summary: "Status Code (instance {{ $labels.instance }})"

description: "HTTP status code is not 200-399 (current value: {{ $value }})"

- alert: SslCertificateWillExpireSoon

expr: probe_ssl_earliest_cert_expiry - time() < 86400 * 30

for: 5m

labels:

severity: warning

annotations:

summary: "SSL certificate will expire soon (instance {{ $labels.instance }})"

description: "SSL certificate expires in 30 days (current value: {{ $value }})"

- alert: SslCertificateHasExpired

expr: probe_ssl_earliest_cert_expiry - time() <= 0

for: 5m

labels:

severity: error

annotations:

summary: "SSL certificate has expired (instance {{ $labels.instance }})"

description: "SSL certificate has expired already (current value: {{ $value }})"

- alert: BlackboxSlowPing

expr: probe_icmp_duration_seconds > 2

for: 5m

labels:

severity: warning

annotations:

summary: "Blackbox slow ping (instance {{ $labels.instance }})"

description: "Blackbox ping took more than 2s (current value: {{ $value }})"

- alert: BlackboxSlowRequests

expr: probe_http_duration_seconds > 2

for: 5m

labels:

severity: warning

annotations:

summary: "Blackbox slow requests (instance {{ $labels.instance }})"

description: "Blackbox request took more than 2s (current value: {{ $value }})"

- alert: PodCpuUsagePercent

expr: sum(sum(label_replace(irate(container_cpu_usage_seconds_total[1m]),"pod","$1","container_label_io_kubernetes_pod_name", "(.*)"))by(pod) / on(pod) group_right kube_pod_container_resource_limits_cpu_cores *100 )by(container,namespace,node,pod,severity) > 80

for: 5m

labels:

severity: warning

annotations:

summary: "Pod cpu usage percent has exceeded 80% (current value: {{ $value }}%)"
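Every rule above pairs a threshold expression with a `for` duration, so an alert is first pending and only fires after the condition has held for that long. The sketch below models this with one sample per evaluation. It is a simplification under stated assumptions: the function `alert_states` is hypothetical, evaluations are assumed to be one minute apart, and the memory ratios are made up.

```python
def alert_states(samples, threshold, for_steps):
    """Return the alert state after each evaluation of `value > threshold`."""
    states = []
    active_for = 0  # number of consecutive evaluations where the condition held
    for value in samples:
        if value > threshold:
            active_for += 1
            # The alert fires once the condition has held for `for_steps`
            # evaluation intervals after it first became true.
            states.append("firing" if active_for > for_steps else "pending")
        else:
            active_for = 0
            states.append("inactive")
    return states


# Made-up memory usage ratios, one per minute, checked against the 0.85
# threshold of hostMemUsageAlert with `for: 5m`.
memory_ratio = [0.80, 0.90, 0.91, 0.92, 0.93, 0.94, 0.95, 0.70]
states = alert_states(memory_ratio, threshold=0.85, for_steps=5)
for ratio, state in zip(memory_ratio, states):
    print(ratio, state)
```

A single dip below the threshold resets the alert to inactive, which is why short spikes do not produce notifications.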

Modify the Prometheus configuration so that it calls Alertmanager

[root@k8s-7-200 ~]# vim /data/nfs-volume/prometheus/etc/prometheus.yml # append at the end to link the alerting rules

......

alerting:

alertmanagers:

- static_configs:

- targets: ["alertmanager"] # the Alertmanager service name

rule_files:

- "/data/etc/rules.yml"

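Then reload Prometheus. In production, do not delete the Prometheus pod: the data set is large and startup takes too long. Prometheus supports reloading its configuration without a restart. Since we configured Prometheus to run on k8s-7-21 earlier, find its PID there (4468) and send it the SIGHUP signal with kill -SIGHUP, which triggers a graceful reload.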

[root@k8s-7-21 ~]# ps aux |grep prometheus

root 2576 0.3 0.9 169012 38264 ? Ssl 08:34 0:20 traefik traefik --api --kubernetes --logLevel=INFO --insecureskipverify=true --kubernetes.endpoint=https://10.4.7.10:7443 --accesslog --accesslog.filepath=/var/log/traefik_access.log --traefiklog --traefiklog.filepath=/var/log/traefik.log --metrics.prometheus

root 4468 83.3 26.5 1842404 1024864 ? Ssl 08:38 86:25 /bin/prometheus --config.file=/data/etc/prometheus.yml --storage.tsdb.path=/data/prom-db --storage.tsdb.min-block-duration=5m --storage.tsdb.retention=24h

root 35460 0.0 0.0 112832 976 pts/0 S+ 10:22 0:00 grep --color=auto prometheus

[root@k8s-7-21 ~]# kill -SIGHUP 4468
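Note that `ps aux | grep prometheus` above also matches the traefik process, because its command line contains `--metrics.prometheus`. The sketch below picks the PID by the executable instead. It is a minimal illustration: the helper names `find_pids` and `reload_config` are hypothetical, the sample text is shortened from the transcript above, and `reload_config` is defined but deliberately not called.

```python
import os
import signal


def find_pids(ps_output, executable):
    """Return PIDs from `ps aux` output whose command starts with `executable`."""
    pids = []
    for line in ps_output.splitlines():
        fields = line.split(None, 10)  # ps aux prints 10 columns before COMMAND
        if len(fields) < 11:
            continue
        if fields[10].split()[0] == executable:
            pids.append(int(fields[1]))
    return pids


def reload_config(pid):
    """Send SIGHUP to the process, the same signal as `kill -SIGHUP`."""
    os.kill(pid, signal.SIGHUP)


# Shortened from the `ps aux` transcript above.
ps_output = """\
root 2576 0.3 0.9 169012 38264 ? Ssl 08:34 0:20 traefik traefik --api --kubernetes --metrics.prometheus
root 4468 83.3 26.5 1842404 1024864 ? Ssl 08:38 86:25 /bin/prometheus --config.file=/data/etc/prometheus.yml
root 35460 0.0 0.0 112832 976 pts/0 S+ 10:22 0:00 grep --color=auto prometheus
"""

pids = find_pids(ps_output, "/bin/prometheus")
print(pids)
```

Matching on the executable avoids sending the signal to traefik or to the grep process itself.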

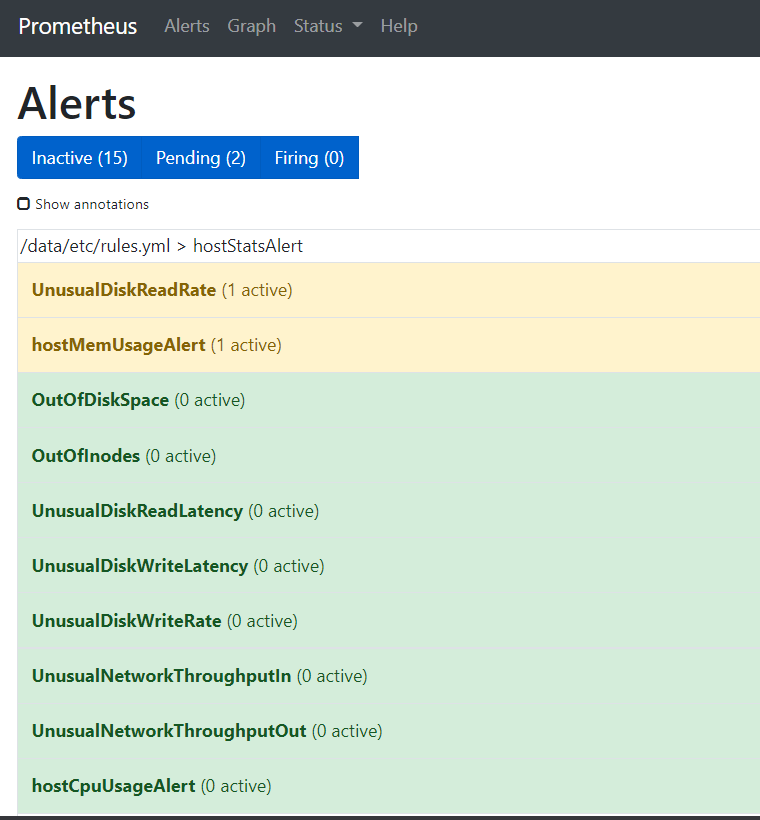

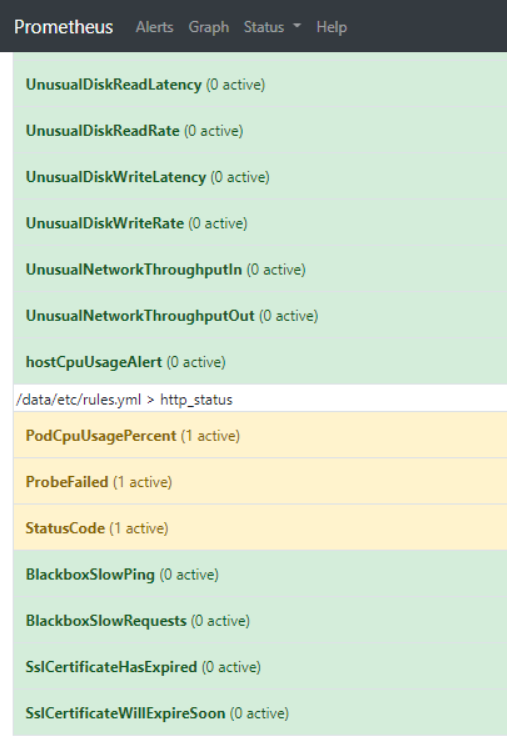

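Open Alerts to see the alerting rules.

Testing an alert:

Stop dubbo-demo-service; dubbo-demo-consumer is then bound to fail, which triggers the alert.

Blackbox already reports the failure.

In Alerts, yellow marks the alert as pending. Once it turns red, the email is sent.

Check the alert email.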