Lab: configuring dubbo-monitor with a ConfigMap

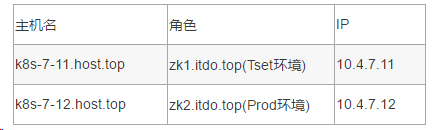

1.1 Environment setup

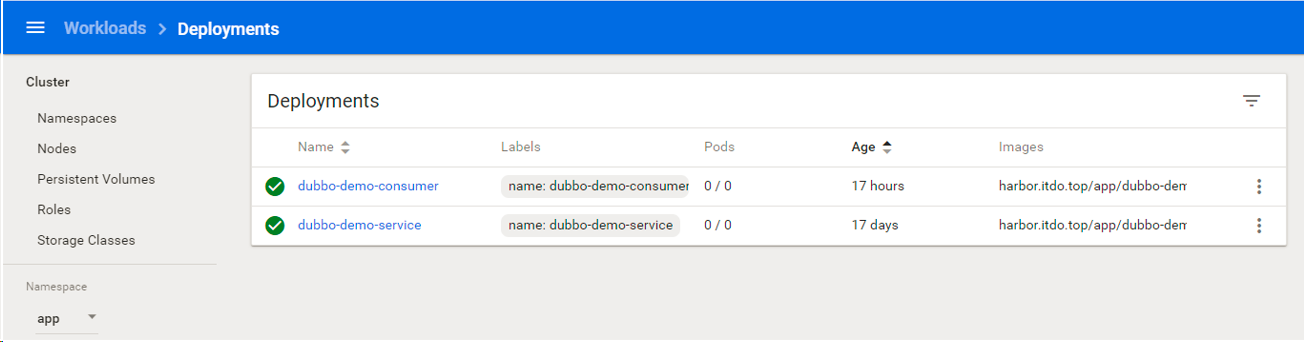

1.1.1 Shut down the three services

Scale dubbo-demo-consumer, dubbo-demo-service and dubbo-monitor down to 0 replicas to shut down all three services.

1.1.2 Split the zk cluster and reconfigure each zk as a single node

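We are about to introduce a configuration center, whose purpose is to separate environments (test and production). This mimics, as closely as possible, how the engineering department of an Internet company works: the same application is managed across different environments without modifying its configuration files.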

Procedure:

1. Stop zk on k8s-7-11, k8s-7-12 and k8s-7-21:

[root@k8s-7-11 ~]# /opt/zookeeper/bin/zkServer.sh stop

[root@k8s-7-12 ~]# /opt/zookeeper/bin/zkServer.sh stop

[root@k8s-7-21 ~]# /opt/zookeeper/bin/zkServer.sh stop

[root@k8s-7-11 ~]# ps aux |grep zoo

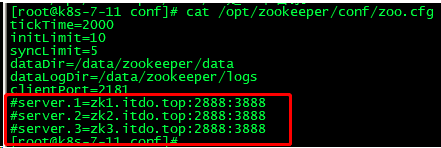

2. On k8s-7-11 and k8s-7-12, clear the zk logs and data so that zk1 and zk2 do not form a cluster again when they come back up:

[root@k8s-7-12 bin]# cd /data/zookeeper/

[root@k8s-7-12 zookeeper]# ll

drwxr-xr-x. 3 root root 35 Jan 12 11:01 data

drwxr-xr-x. 3 root root 23 Dec 25 16:17 logs

[root@k8s-7-12 zookeeper]# rm -rf data/*

[root@k8s-7-12 zookeeper]# rm -rf logs/*

3. On k8s-7-11 and k8s-7-12, remove the cluster settings from zoo.cfg so that each node runs on its own:

[root@k8s-7-12 logs]# cd /opt/zookeeper/conf/

[root@k8s-7-12 conf]# vim zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/logs

clientPort=2181

4. Start zk on k8s-7-11 and k8s-7-12:

[root@k8s-7-11 ~]# /opt/zookeeper/bin/zkServer.sh start

[root@k8s-7-12 ~]# /opt/zookeeper/bin/zkServer.sh start

[root@k8s-7-11 conf]# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: standalone
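Why the edited zoo.cfg yields `Mode: standalone` can be sketched as a small Python check. It assumes a simplified rule: a node whose zoo.cfg declares no `server.N` entries runs standalone, and any `server.N` entry is treated as ensemble configuration (real ZooKeeper versions differ in how they handle a single entry). The function name `zk_mode` is hypothetical.

```python
def zk_mode(cfg_text: str) -> str:
    """Classify a zoo.cfg as 'standalone' or 'ensemble' (simplified rule)."""
    servers = []
    for raw in cfg_text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        # Ensemble members are declared as server.<id>=host:peerPort:electionPort
        if key.strip().startswith("server."):
            servers.append(value.strip())
    # Simplification: no server.N entries means the node runs on its own
    return "ensemble" if servers else "standalone"


# The zoo.cfg written in step 3 contains no server.N lines
single_node_cfg = """\
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/zookeeper/data
dataLogDir=/data/zookeeper/logs
clientPort=2181
"""
print(zk_mode(single_node_cfg))  # standalone
```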

1.2 Prepare the resource manifests

Goal: make dubbo-monitor connect to zk1.itdo.top.

On the ops host k8s-7-200.host.top:

[root@k8s-7-200 ~]# cd /data/k8s-yaml/dubbo-monitor/

[root@k8s-7-200 dubbo-monitor]# ll

-rw-r--r--. 1 root root 936 Jan 10 12:45 deployment.yaml

-rw-r--r--. 1 root root 265 Jan 10 10:02 ingress.yaml

-rw-r--r--. 1 root root 186 Jan 10 09:56 service.yaml

configmap.yaml

[root@k8s-7-200 dubbo-monitor]# vim /data/k8s-yaml/dubbo-monitor/configmap.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: dubbo-monitor-cm
  namespace: infra
data:
  dubbo.properties: |
    dubbo.container=log4j,spring,registry,jetty
    dubbo.application.name=simple-monitor
    dubbo.application.owner=evan
    # single-node ZooKeeper
    dubbo.registry.address=zookeeper://zk1.itdo.top:2181
    dubbo.protocol.port=20880
    dubbo.jetty.port=8080
    dubbo.jetty.directory=/dubbo-monitor-simple/monitor
    dubbo.charts.directory=/dubbo-monitor-simple/charts
    dubbo.statistics.directory=/dubbo-monitor-simple/statistics
    dubbo.log4j.file=/dubbo-monitor-simple/logs/dubbo-monitor.log
    dubbo.log4j.level=WARN
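In a Java properties file, `#` (or `!`) starts a comment only when it is the first non-blank character of a line; an inline `# ...` after a value becomes part of that value, so comments belong on their own lines. The minimal Python sketch below mimics just that rule. `parse_properties` is a hypothetical helper that handles only `key=value` lines, with no escapes or line continuations.

```python
def parse_properties(text: str) -> dict:
    """Parse key=value lines using the java.util.Properties comment rule.

    Simplified sketch: '=' separators only, no escapes or line continuations.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.lstrip()
        # A comment is a line whose first non-blank character is '#' or '!'
        if not line or line[0] in "#!":
            continue
        key, _, value = line.partition("=")
        # Whitespace after '=' is skipped; everything else, including '#', is kept
        props[key.strip()] = value.lstrip()
    return props


inline = "dubbo.registry.address=zookeeper://zk1.itdo.top:2181 #single node\n"
separate = "# single node\ndubbo.registry.address=zookeeper://zk1.itdo.top:2181\n"
print(parse_properties(inline)["dubbo.registry.address"])
# zookeeper://zk1.itdo.top:2181 #single node
print(parse_properties(separate)["dubbo.registry.address"])
# zookeeper://zk1.itdo.top:2181
```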

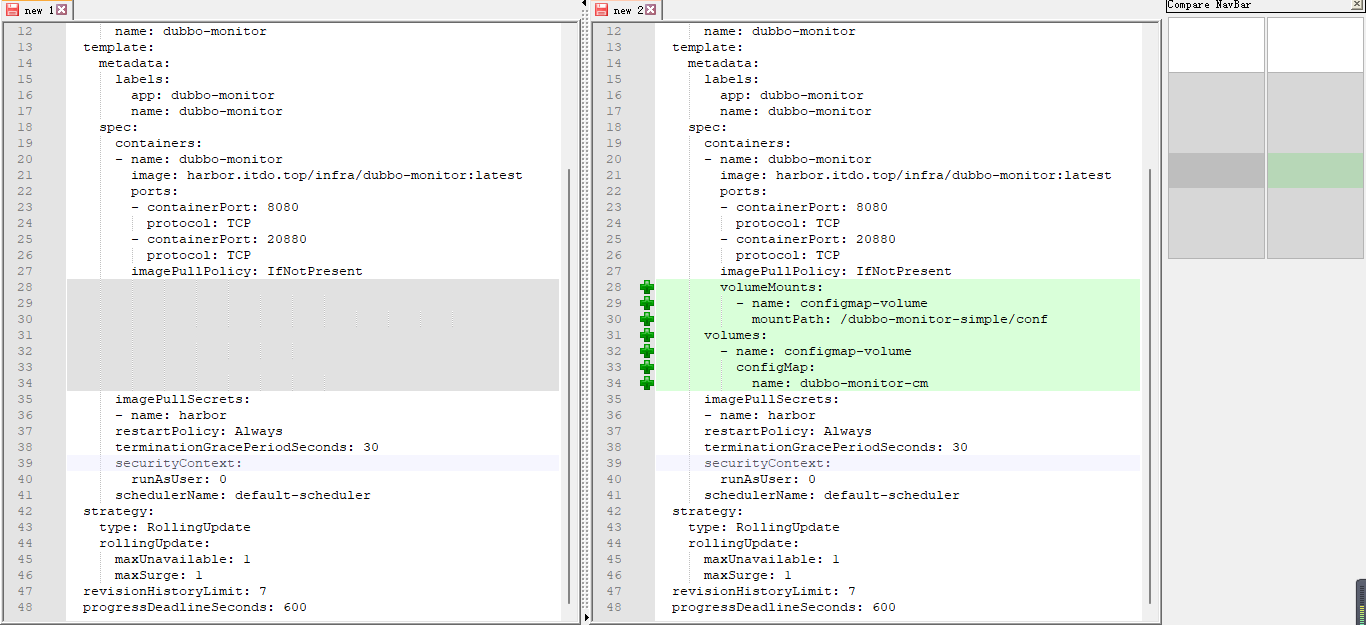

deployment-cm.yaml

[root@k8s-7-200 dubbo-monitor]# vim /data/k8s-yaml/dubbo-monitor/deployment-cm.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
  labels:
    name: dubbo-monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-monitor
  template:
    metadata:
      labels:
        app: dubbo-monitor
        name: dubbo-monitor
    spec:
      containers:
      - name: dubbo-monitor
        image: harbor.itdo.top/infra/dubbo-monitor:latest
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: configmap-volume
          mountPath: /dubbo-monitor-simple/conf
      volumes:
      - name: configmap-volume
        configMap:
          name: dubbo-monitor-cm
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600
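Mounting the `dubbo-monitor-cm` volume at `/dubbo-monitor-simple/conf` turns each key under the ConfigMap's `data` into a file of the same name in that directory, and whatever the image originally shipped in that directory is hidden by the mount. The Python sketch below models this with plain dicts standing in for file systems. `container_files` is a hypothetical helper, and it ignores the optional `items` field that can remap keys to other paths.

```python
def container_files(image_files: dict, mount_path: str, cm_data: dict) -> dict:
    """Files seen in the container after a ConfigMap volume is mounted at mount_path.

    image_files and the result map absolute paths to file contents.
    """
    prefix = mount_path.rstrip("/") + "/"
    # The volume mount hides whatever the image had under mount_path
    files = {p: c for p, c in image_files.items() if not p.startswith(prefix)}
    # Each key in the ConfigMap's data becomes a file named after the key
    for key, value in cm_data.items():
        files[prefix + key] = value
    return files


cm_data = {"dubbo.properties": "dubbo.registry.address=zookeeper://zk1.itdo.top:2181\n"}
seen = container_files({}, "/dubbo-monitor-simple/conf", cm_data)
print(sorted(seen))  # ['/dubbo-monitor-simple/conf/dubbo.properties']
```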

[root@k8s-7-200 dubbo-monitor]# ll

total 20

-rw-r--r-- 1 root root 618 Jan 29 10:05 configmap.yaml

-rw-r--r-- 1 root root 1145 Jan 29 10:09 deployment-cm.yaml

-rw-r--r-- 1 root root 934 Jan 28 14:57 deployment.yaml

-rw-r--r-- 1 root root 267 Jan 28 14:58 ingress.yaml

-rw-r--r-- 1 root root 246 Jan 28 14:57 service.yaml

1.3 Apply the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dubbo-monitor/configmap.yaml

configmap/dubbo-monitor-cm created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dubbo-monitor/deployment-cm.yaml

deployment.extensions/dubbo-monitor configured

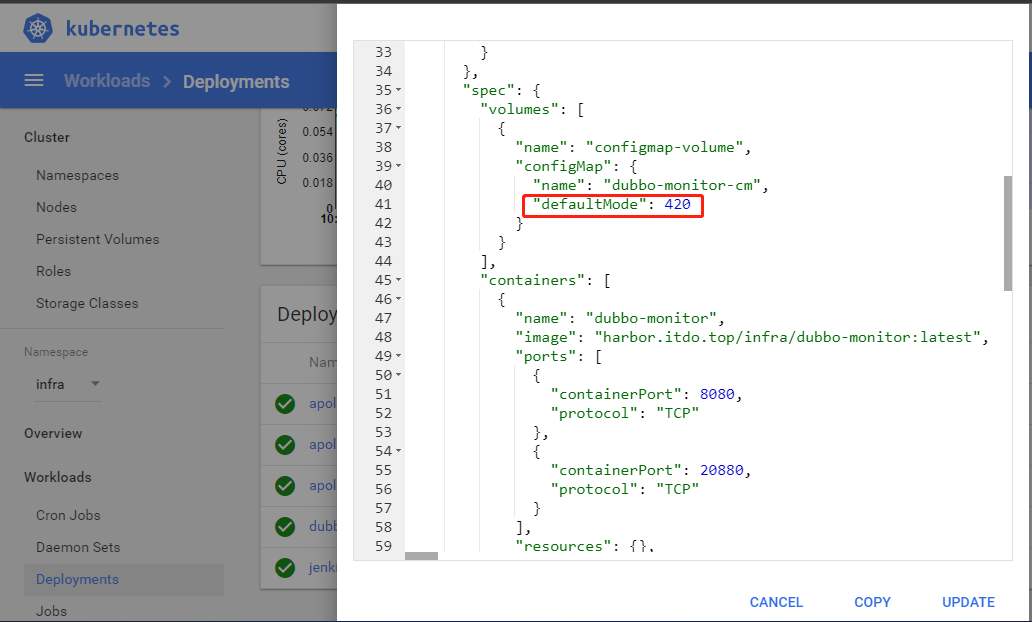

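Inspect the dubbo-monitor Deployment. `defaultMode: 420` is the default permission of the files projected from the ConfigMap. It is not a umask: 420 is the decimal form of octal 0644 (rw-r--r--). In addition, Kubernetes mounts ConfigMap volumes read-only, so the files cannot be modified from inside the container.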

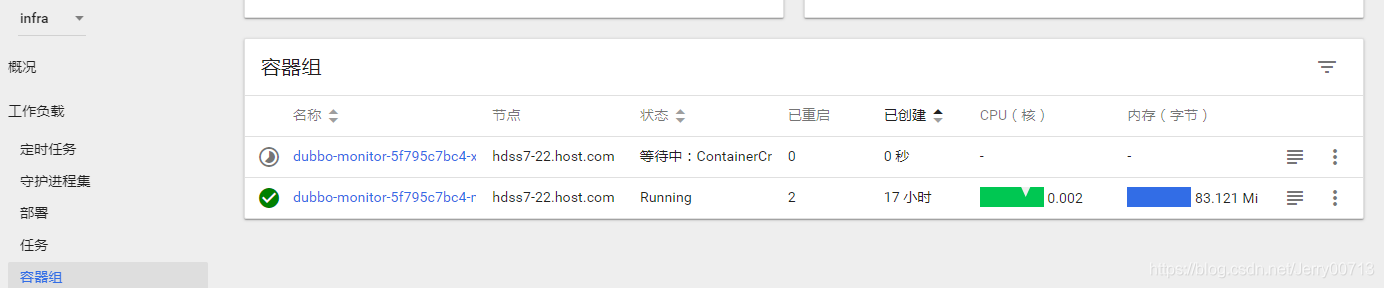
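The Deployment manifest sets no `defaultMode`, so the API fills in the default 0644 and stores it as a plain integer, which is why it reads back as decimal 420. A quick Python check of that conversion; the helper `describe_mode` is only for illustration:

```python
import stat


def describe_mode(default_mode: int) -> str:
    """Show a volume defaultMode integer as octal plus an ls -l style string."""
    # S_IFREG marks a regular file so filemode() prints a leading '-'
    return f"{oct(default_mode)} {stat.filemode(stat.S_IFREG | default_mode)}"


print(420 == 0o644)         # True
print(describe_mode(420))   # 0o644 -rw-r--r--
```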

1.4 Check the status

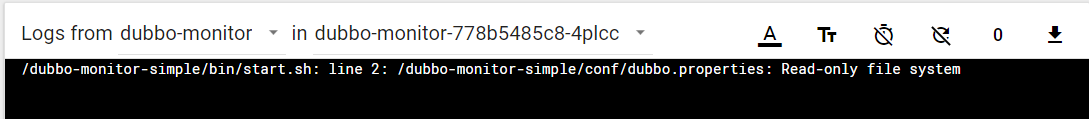

Check the logs:

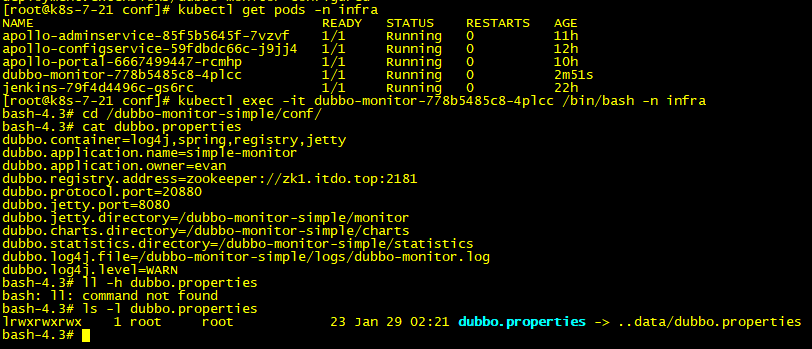

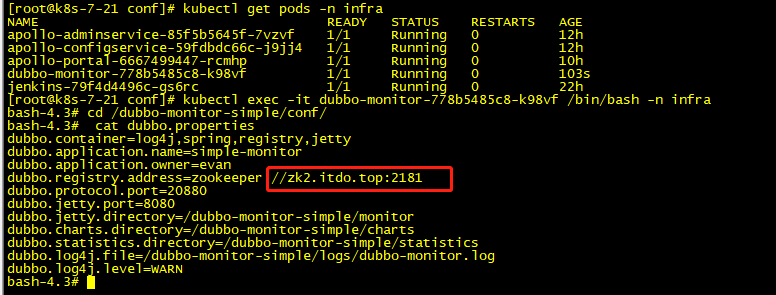

Check dubbo.properties inside the container:

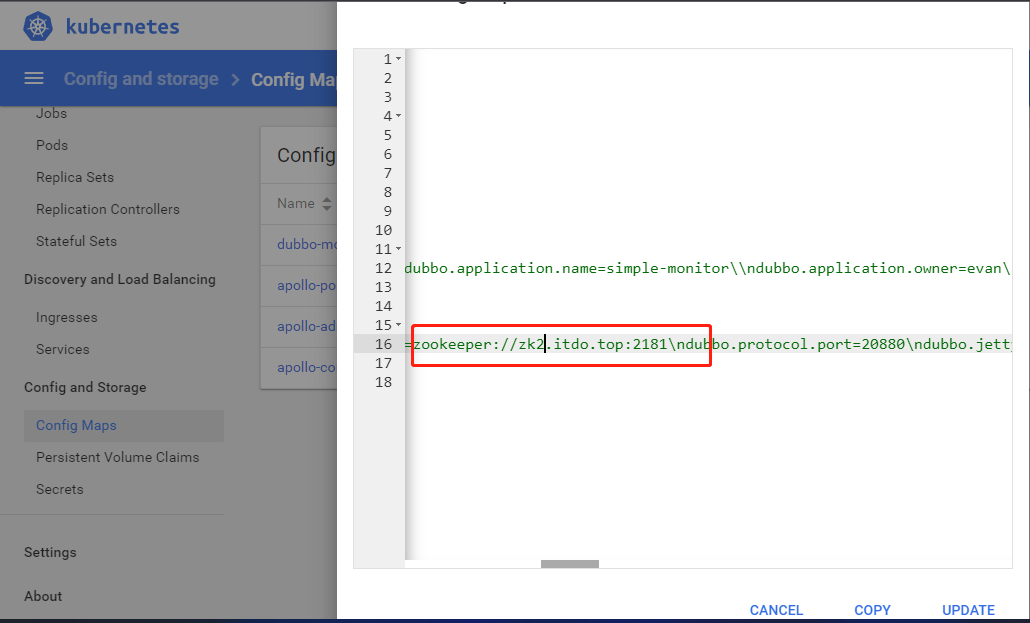

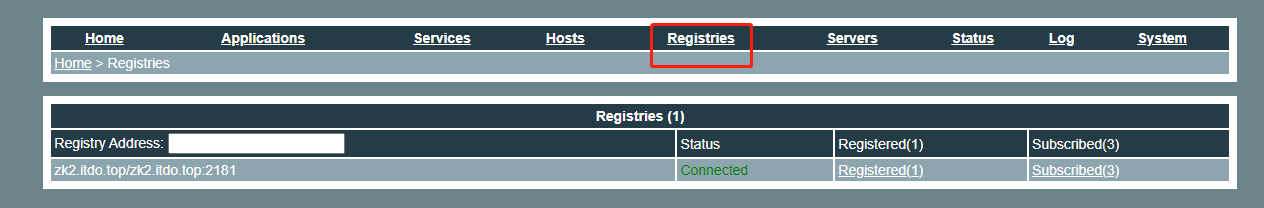

1.5 Connect dubbo-monitor to zk2.itdo.top

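Change zk1 to zk2 in the ConfigMap and update it.

Restart the monitor pod: the configuration file inside the container now points to zk2. dubbo-monitor reads dubbo.properties when it starts, so the restart is what makes the new registry address take effect.

Refresh http://dubbo-monitor.itdo.top/registries.html to check which registry the monitor is connected to.

Alternative: edit /data/k8s-yaml/dubbo-monitor/configmap.yaml on the ops host and apply it again with kubectl apply -f http://k8s-yaml.itdo.top/dubbo-monitor/configmap.yaml.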
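The "change zk1 to zk2" edit touches only one line of the `dubbo.properties` key. A minimal Python sketch of that edit, assuming the properties text is held as a string; the helper `set_registry` is hypothetical, and in practice the change is made in the ConfigMap manifest or the ConfigMap itself, followed by a pod restart:

```python
import re

# Matches only an uncommented registry line at the start of a line
REGISTRY_LINE = re.compile(r"^(dubbo\.registry\.address=).*$", re.MULTILINE)


def set_registry(props_text: str, host: str, port: int = 2181) -> str:
    """Rewrite the dubbo.registry.address line, leaving every other line as-is."""
    new_text, count = REGISTRY_LINE.subn(
        lambda m: f"{m.group(1)}zookeeper://{host}:{port}", props_text
    )
    if count == 0:
        raise ValueError("no dubbo.registry.address line found")
    return new_text


before = (
    "dubbo.application.name=simple-monitor\n"
    "dubbo.registry.address=zookeeper://zk1.itdo.top:2181\n"
    "dubbo.protocol.port=20880\n"
)
print(set_registry(before, "zk2.itdo.top"))
```

Using a function as the replacement keeps any backslashes in `host` from being interpreted as regex escape sequences.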