1 Spinnaker overview and deployment options

1.1 Overview

1.1.1 Main features

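Spinnaker is an open-source, multi-cloud continuous delivery platform for releasing software changes quickly, reliably and safely. It offers two main groups of features: cluster management and deployment management.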

1.1.2 Cluster management

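Cluster management covers cloud resources. The "cloud" Spinnaker refers to can be thought of as AWS, i.e. mainly IaaS resources, along with OpenStack, Google Cloud, Microsoft Azure and others. Support for containers and Kubernetes was added later, but it is still designed around the way infrastructure is managed.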

Spinnaker manages the following resources:

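- Server Group: the most basic logical resource. It consists of a number of virtual machines that share the same configuration and image, plus load balancers and security groups.

- Security Group: the same as a security group in AWS; think of it as a firewall.

- Load Balancer: an ELB in AWS, or a load balancer installed on virtual machines.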

1.1.3 Deployment management

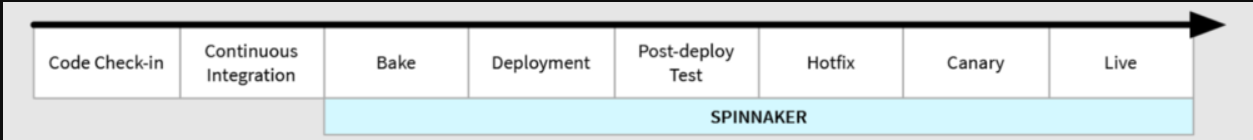

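Managing the deployment process is Spinnaker's core function. In this setup, Spinnaker uses MinIO as its persistence layer. It picks up the images built by Jenkins pipelines and deploys them to the Kubernetes cluster so the services actually run.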

pipeline

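In Spinnaker a deployment process is called a pipeline. A pipeline is made up of a number of operations, and each operation is called a stage. Pipelines can be triggered in many ways: manually, from Jenkins, on a CRON schedule, and so on. A pipeline can also be configured to send notifications, for example when it starts, finishes or fails.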

stage

A stage is one operation in a pipeline. Stages can run in sequence or in parallel. Spinnaker predefines a number of frequently used stage types:

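Bake: builds a virtual machine image in a given region. Netflix follows the immutable-infrastructure philosophy, so it deploys services by packaging software into machine images. Image creation is based on Packer, HashiCorp's open-source image-building tool (HashiCorp also makes Vagrant). When deploying with Docker, docker build does the packaging instead.

Deploy: deploys the image created by Bake to virtual machines.

Jenkins: runs a Jenkins job.

Manual Judgment: pauses and waits for a user's approval before continuing.

Pipeline: runs another pipeline.

Script: runs an arbitrary script.

Wait: waits for a period of time.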
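Spinnaker stores pipelines as JSON. In that JSON, each stage has a `refId` and lists the stages it waits for in `requisiteStageRefIds`. Any stage whose prerequisites have all finished can run in parallel with the others that are ready. The following is a simplified Python model of that ordering, not Orca's actual scheduler. It groups stages into "waves", and the example pipeline is hypothetical:

```python
from typing import Dict, List, Set


def execution_waves(stages: List[dict]) -> List[List[str]]:
    """Group stages into "waves" that could run in parallel.

    Each stage is a dict with a "refId" and an optional list
    "requisiteStageRefIds" naming the stages it waits for. Every stage in a
    wave depends only on stages from earlier waves.
    """
    deps: Dict[str, Set[str]] = {
        s["refId"]: set(s.get("requisiteStageRefIds", [])) for s in stages
    }
    done: Set[str] = set()
    waves: List[List[str]] = []
    while len(done) < len(deps):
        # A stage is ready once all of its prerequisites have completed.
        ready = sorted(ref for ref, req in deps.items()
                       if ref not in done and req <= done)
        if not ready:
            raise ValueError("cycle or unknown prerequisite in pipeline")
        waves.append(ready)
        done.update(ready)
    return waves


# Hypothetical pipeline: bake, deploy to two targets in parallel,
# then wait for a manual judgment.
pipeline = [
    {"refId": "1", "type": "bake"},
    {"refId": "2", "type": "deploy", "requisiteStageRefIds": ["1"]},
    {"refId": "3", "type": "deploy", "requisiteStageRefIds": ["1"]},
    {"refId": "4", "type": "manualJudgment", "requisiteStageRefIds": ["2", "3"]},
]
print(execution_waves(pipeline))  # [['1'], ['2', '3'], ['4']]
```

Both deploy stages land in the same wave because they depend only on the bake stage.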

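Judging by the pipeline definition, Spinnaker looks somewhat like Jenkins, but the two were designed with different goals (there is a related discussion on Stack Overflow). In short, Jenkins leans toward CI and its output is a software package. Spinnaker is CD: it distributes packages to servers or virtual machines and keeps the software running, and its only goal is to make deployment easier and more scalable. One example shows how the two relate. Some people inside Netflix do not use Spinnaker pipelines at all: they treat Spinnaker purely as a deployment tool and call its API directly from Jenkins to deploy services.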
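Calling Spinnaker from Jenkins, as described above, goes through Gate's REST API. The following is a minimal Python sketch of building such a call. It assumes Gate exposes `POST /pipelines/{application}/{pipelineName}` and accepts a manual-trigger body of the form shown; verify both against your Gate version. The application name, pipeline name and parameters are hypothetical. The sketch only builds the request and does not send it:

```python
import json
import urllib.request
from urllib.parse import quote


def build_trigger_request(api_host: str, application: str, pipeline: str,
                          parameters: dict) -> urllib.request.Request:
    """Build (but do not send) a POST asking Gate to start a pipeline.

    Assumption: Gate's POST /pipelines/{application}/{pipelineName} endpoint
    with a manual-trigger body; check it against your Gate version.
    """
    url = "{}/pipelines/{}/{}".format(
        api_host.rstrip("/"), quote(application, safe=""), quote(pipeline, safe=""))
    body = json.dumps({"type": "manual", "parameters": parameters}).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST",
                                  headers={"Content-Type": "application/json"})


# API_HOST as configured later in init-env; the names below are hypothetical.
req = build_trigger_request("http://spinnaker.itdo.top/api", "demo-app",
                            "deploy-to-test", {"version": "v1.0.0"})
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req, timeout=10)
```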

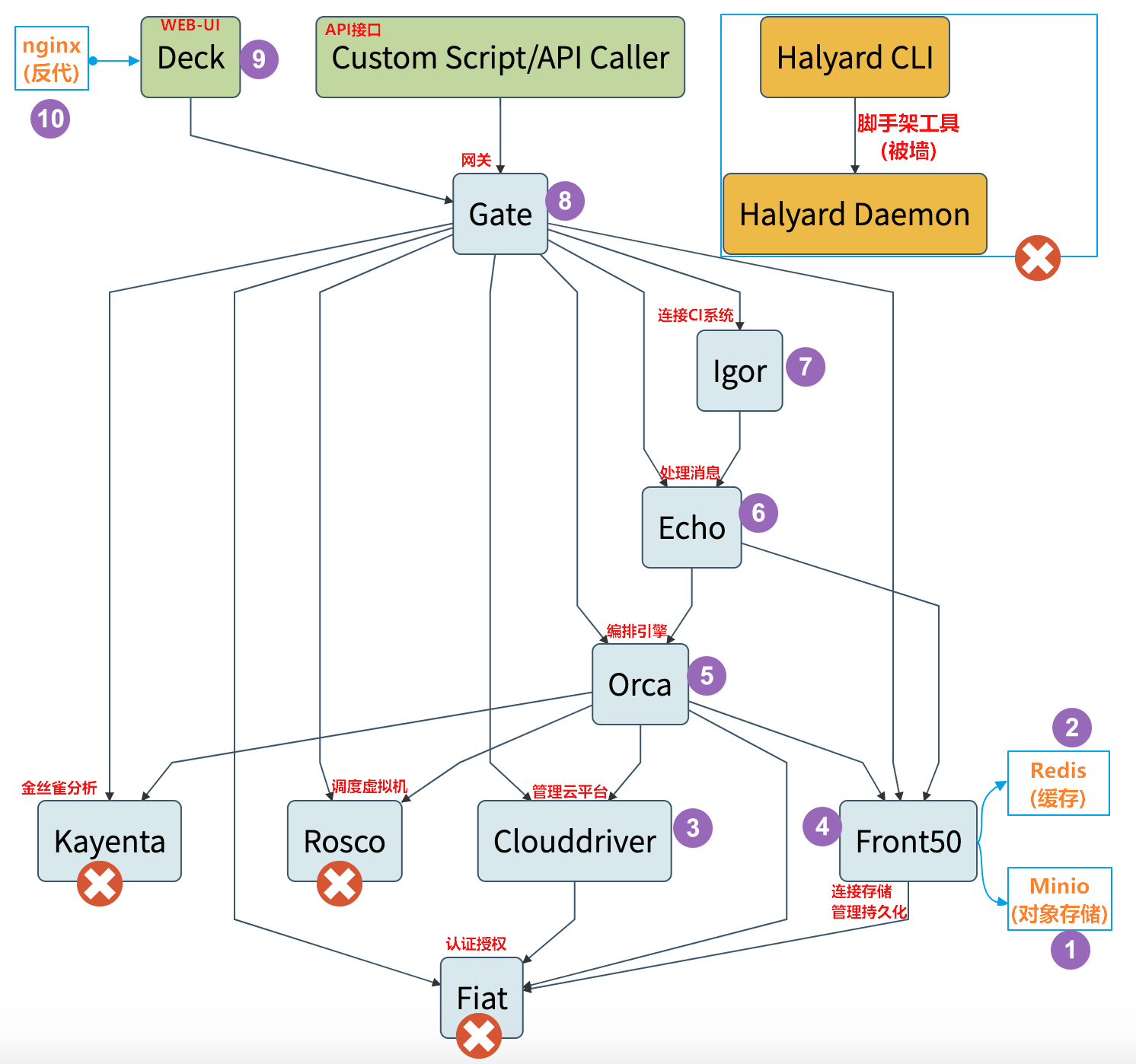

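1.1.4 Logical architecture

Spinnaker is itself a microservice application made up of several components. Its logical architecture is shown below:

- Deck is the browser-based UI.

- Gate is the API gateway.

- The Spinnaker UI and all API callers communicate with Spinnaker through Gate.

- Clouddriver manages the cloud platforms and indexes/caches all deployed resources.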

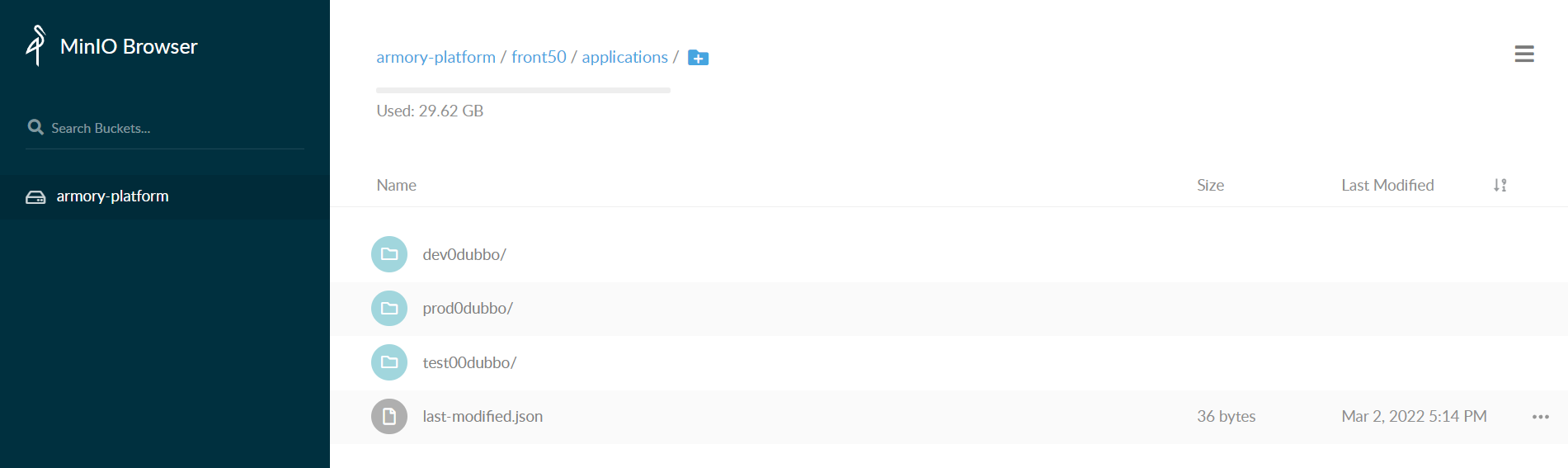

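- Front50 handles persistence. It stores the metadata for applications, pipelines, projects and notifications. It connects to AWS S3 by default; here we use MinIO instead.

- Igor triggers pipelines from continuous integration jobs in systems such as Jenkins and Travis CI. It also allows Jenkins/Travis stages to be used in pipelines.

- Orca is the orchestration engine. It handles all ad-hoc operations and pipelines.

- Rosco is the bakery: it produces machine images (using Packer) for deployments.

- Kayenta provides automated canary analysis for Spinnaker.

- Fiat is Spinnaker's authorization service.

- Echo is the eventing and notification service. It sends notifications (for example Slack, email, SMS) and handles incoming webhooks from services such as GitHub.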

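1.1.5 How Spinnaker itself is operated

Spinnaker is itself a fairly complex microservice architecture made up of many services, so it follows a set of operating rules of its own:

1. Every Spinnaker service (such as Deck, Gate, Orca) runs in its own cluster.

2. Every service pushes its metrics to Atlas, which drives dashboards and alerting. Atlas is Netflix's in-memory time-series database.

3. Every service ships its logs to an ELK cluster.

4. Every internal service except Deck and Gate must use mutual TLS, with certificates managed through Lemur. No external traffic may reach internal services; every API call must go through Gate.

5. Every externally facing service (apart from Gate) must support mTLS or SSO.

6. A service that needs to store data may keep it only in its own database; services do not share data stores.

To preserve compatibility, Spinnaker development also follows some rules:

1. Keep sufficient unit tests and coverage.

2. During code review, pay particular attention to whether a change breaks API compatibility.

3. Run integration tests continuously, 24/7. There are two kinds:
   - a Jenkins job that keeps calling the API to check that it behaves as expected;
   - a Spinnaker pipeline that performs everyday tasks (such as creating images and deploying environments).

4. When an unknown failure is found, roll back first and stay on the previous version until the problem is fixed.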

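1.2 Choosing a deployment method

Spinnaker

Spinnaker has many components and is relatively complex to deploy, so the project provides a bootstrap tool, Halyard. Unfortunately, some of the image addresses it uses are blocked in mainland China.

Armory distribution

Several companies have built third-party distributions on top of Spinnaker to simplify deployment. This guide uses the Armory distribution.

Armory also has its own bootstrap tool. It is simpler than Halyard, but parts of it are still blocked.

We therefore deploy the Armory distribution of Spinnaker by hand.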

2 Deploying Spinnaker, part 1

2.1 Spinnaker object storage component: deploying MinIO

2.1.1 Prepare the MinIO image

[root@k8s-7-200 ~]# docker pull minio/minio:RELEASE.2019-12-24T23-04-45Z

[root@k8s-7-200 ~]# docker tag e31e0721a96b harbor.itdo.top/armory/minio:RELEASE.2019-12-24T23-04-45Z

[root@k8s-7-200 ~]# docker push harbor.itdo.top/armory/minio:RELEASE.2019-12-24T23-04-45Z

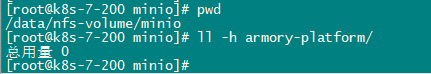

Prepare the directories

[root@k8s-7-200 ~]# mkdir -p /data/nfs-volume/minio

[root@k8s-7-200 ~]# mkdir -p /data/k8s-yaml/armory/minio

[root@k8s-7-200 ~]# cd /data/k8s-yaml/armory/minio

2.1.2 Prepare the Deployment manifest

[root@k8s-7-200 ~]# vim dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: minio
  name: minio
  namespace: armory
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      name: minio
  template:
    metadata:
      labels:
        app: minio
        name: minio
    spec:
      containers:
      - name: minio
        image: harbor.itdo.top/armory/minio:RELEASE.2019-12-24T23-04-45Z
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9000
          protocol: TCP
        args:
        - server
        - /data
        env:
        - name: MINIO_ACCESS_KEY  # login user
          value: admin
        - name: MINIO_SECRET_KEY  # login password
          value: admin123
        readinessProbe:  # readiness probe
          failureThreshold: 3
          httpGet:
            path: /minio/health/ready
            port: 9000
            scheme: HTTP
          initialDelaySeconds: 10  # delays when the pod can become Ready, so keep it short
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        volumeMounts:
        - mountPath: /data
          name: data
      imagePullSecrets:
      - name: harbor
      volumes:
      - nfs:
          server: k8s-7-200.host.top
          path: /data/nfs-volume/minio  # NFS path
        name: data

2.1.3 Prepare the Service manifest

[root@k8s-7-200 ~]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: minio
  namespace: armory
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 9000
  selector:
    app: minio

2.1.4 Prepare the Ingress manifest

[root@k8s-7-200 ~]# vim ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: minio
  namespace: armory
spec:
  rules:
  - host: minio.itdo.top
    http:
      paths:
      - path: /
        backend:
          serviceName: minio
          servicePort: 80

2.1.5 应用资源配置清单

任意node节点

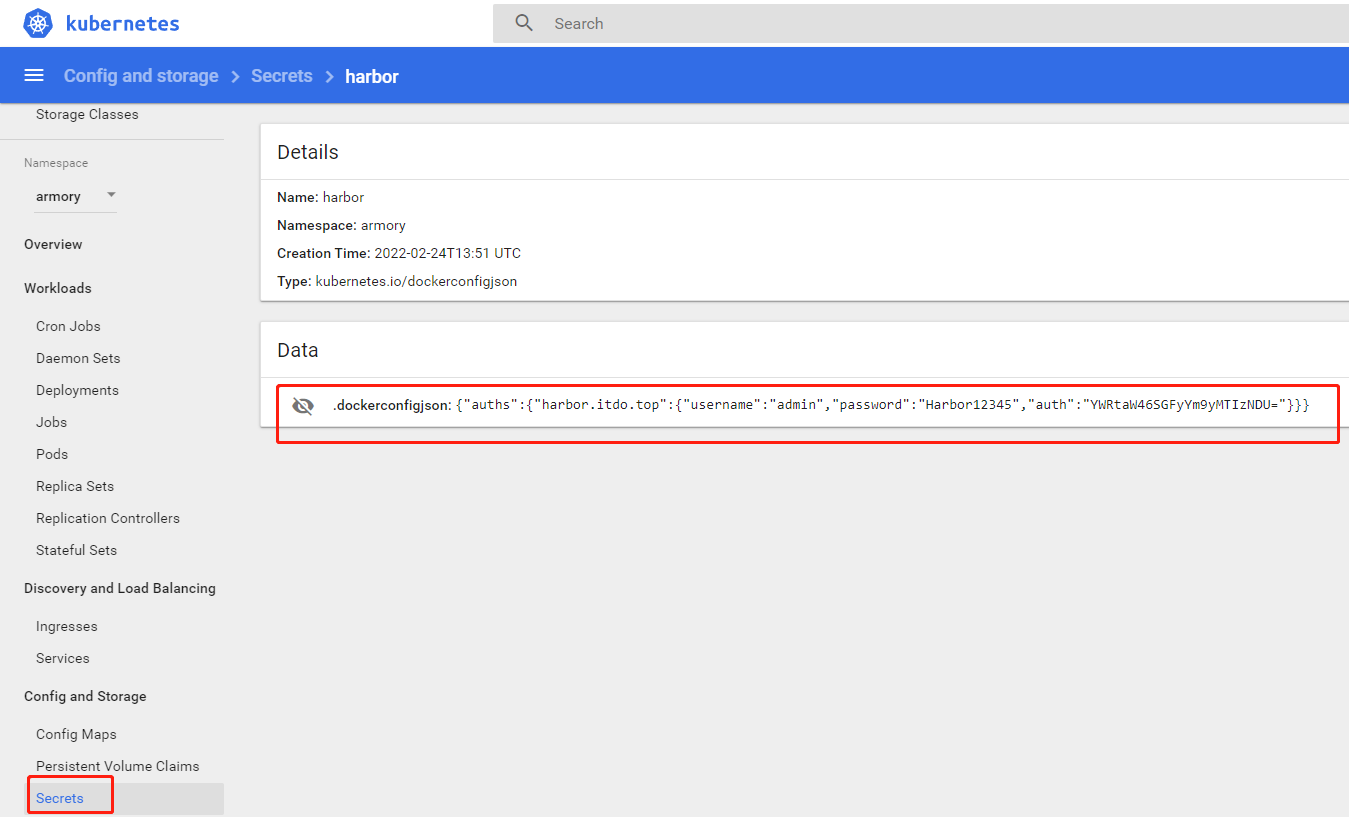

创建namespace和secret(用于连接私有仓库)

[root@k8s-7-21 ~]# kubectl create namespace armory

[root@k8s-7-21 ~]# kubectl create secret docker-registry harbor \

--docker-server=harbor.itdo.top \

--docker-username=admin \

--docker-password=Harbor.itdo12345 \

-n armory

应用清单

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/minio/dp.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/minio/svc.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/minio/ingress.yaml

2.1.6 DNS resolution

Add an A record for minio to the itdo.top zone on the DNS server (k8s-7-11):

minio A 10.4.7.10

[root@k8s-7-11 ~]# systemctl restart named

[root@k8s-7-11 ~]# dig -t A minio.itdo.top @10.4.7.11 +short

10.4.7.10

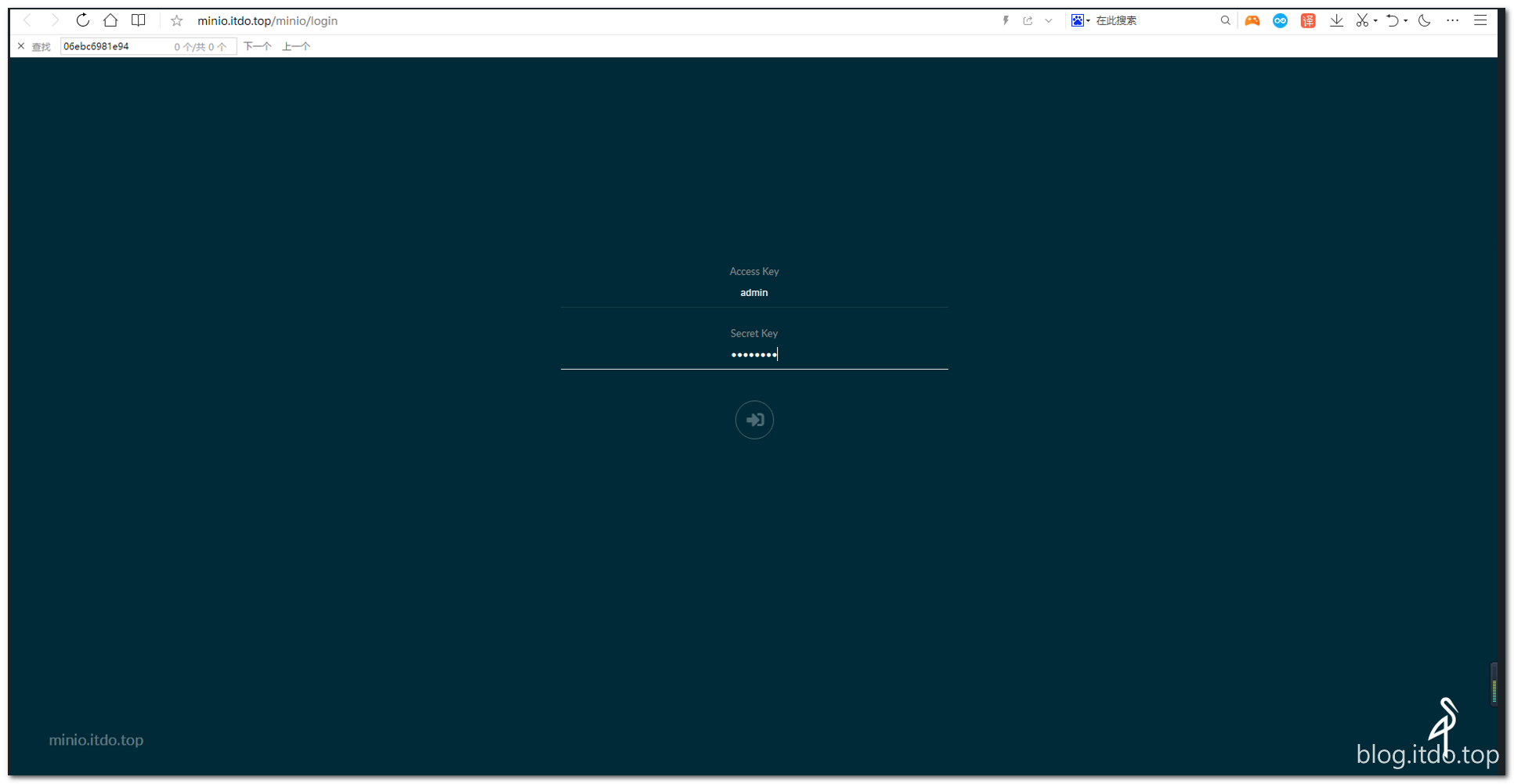

2.1.7 访问验证

访问http://minio.itdo.top,用户名密码为:admin/admin123

如果访问并登陆成功,表示minio部署成功

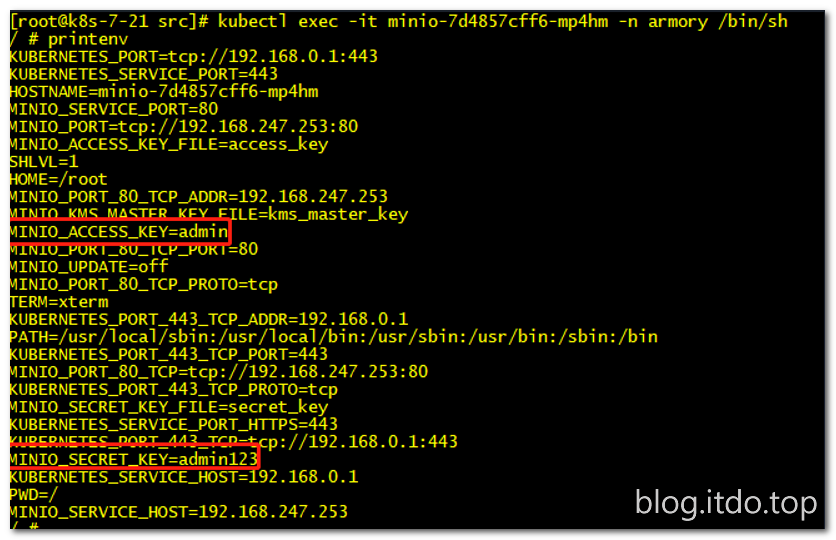

查看环境变量

[root@k8s-7-21 ~]# kubectl exec -it minio-7d4857cff6-mp4hm -n armory /bin/sh

/ # printenv

可以看到定义用户和密码变量

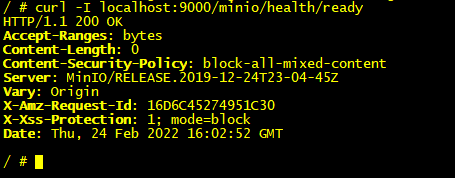

#curl -I localhost:9000/minio/health/ready
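The readiness probe in dp.yaml performs the same check as the curl command above: it requests /minio/health/ready until the endpoint returns HTTP 200. The following Python 3 sketch is an equivalent retry loop. It could, for example, wait for MinIO before the Spinnaker components are deployed. `fetch` and `sleep` are injectable parameters, so the loop can be tested without a cluster. The default interval mirrors the probe's `periodSeconds: 10`:

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code for url, or 0 if it could not be reached."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # reached the server, non-2xx answer
        return err.code
    except (urllib.error.URLError, OSError):  # DNS failure, refused, timeout
        return 0


def wait_until_ready(url: str, attempts: int = 30, interval: float = 10.0,
                     fetch: Callable[[str], int] = http_status,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll url like a readiness probe until it returns HTTP 200."""
    for i in range(attempts):
        if fetch(url) == 200:
            return True
        if i < attempts - 1:
            sleep(interval)
    return False


# Example, through the Ingress defined above:
# wait_until_ready("http://minio.itdo.top/minio/health/ready")
```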

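2.2 Spinnaker cache component: deploying Redis

Note: Redis is not persisted, because it only stores task history, which is not critical data.

2.2.1 Prepare the image and directory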

[root@k8s-7-200 ~]# docker pull redis:4.0.14

[root@k8s-7-200 ~]# docker tag 191c4017dcdd harbor.itdo.top/armory/redis:v4.0.14

[root@k8s-7-200 ~]# docker push harbor.itdo.top/armory/redis:v4.0.14

Prepare the directory

[root@k8s-7-200 ~]# mkdir -p /data/k8s-yaml/armory/redis

[root@k8s-7-200 ~]# cd /data/k8s-yaml/armory/redis

2.2.2 Prepare the Deployment manifest

[root@k8s-7-200 ~]# vim dp.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    name: redis
  name: redis
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      name: redis
  template:
    metadata:
      labels:
        app: redis
        name: redis
    spec:
      containers:
      - name: redis
        image: harbor.itdo.top/armory/redis:v4.0.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 6379
          protocol: TCP
      imagePullSecrets:
      - name: harbor

2.2.3 Prepare the Service manifest

[root@k8s-7-200 ~]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: redis
  namespace: armory
spec:
  ports:
  - port: 6379
    protocol: TCP
    targetPort: 6379
  selector:
    app: redis

2.2.4 Apply the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/redis/dp.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/redis/svc.yaml

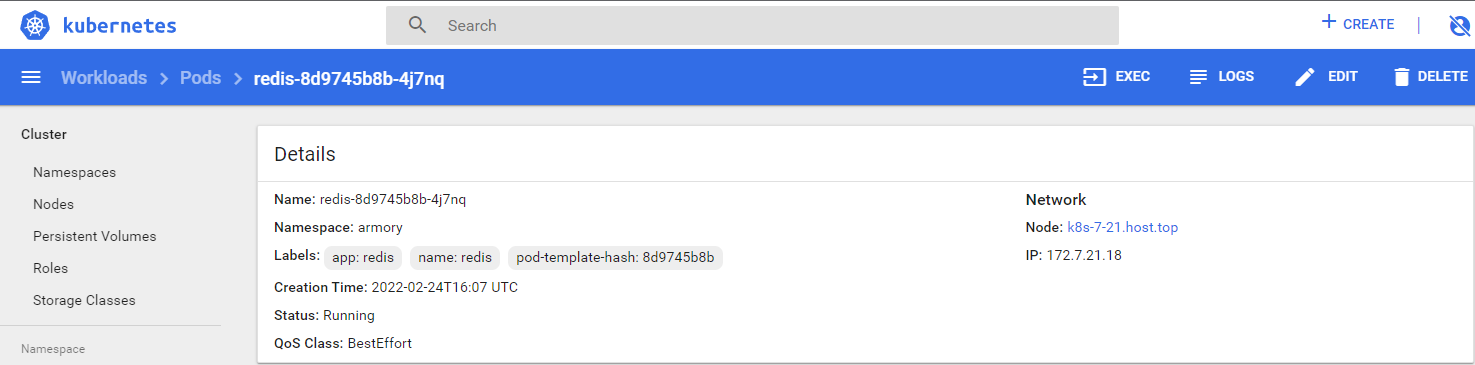

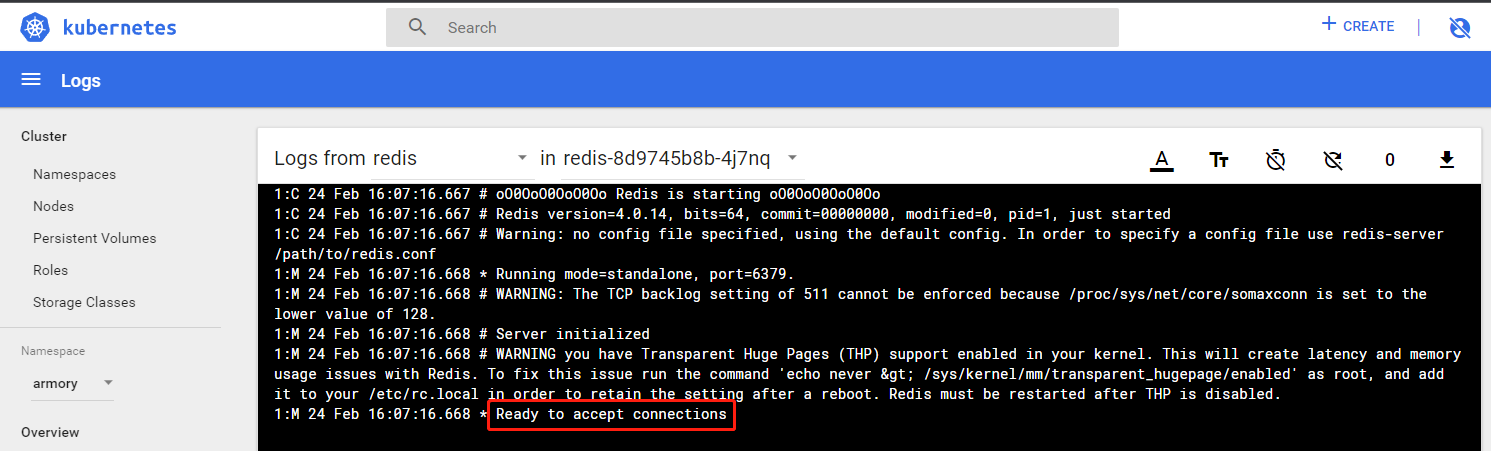

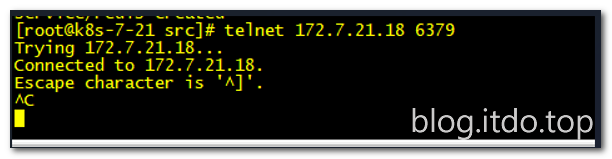

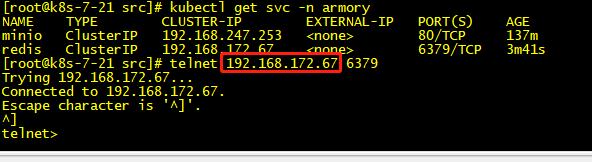

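2.2.5 Check and verify

3 Deploying Spinnaker: the cloud driver component CloudDriver

CloudDriver is the hardest part of the whole Spinnaker deployment, so it gets a chapter of its own.

3.1 Preparation

3.1.1 Prepare the image and directory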

[root@k8s-7-200 ~]#docker pull armory/spinnaker-clouddriver-slim:release-1.11.x-bee52673a

[root@k8s-7-200 ~]#docker tag f1d52d01e28d harbor.itdo.top/armory/clouddriver:v1.11.x

[root@k8s-7-200 ~]#docker push harbor.itdo.top/armory/clouddriver:v1.11.x

Note: pulls from inside China often fail. Mine failed several times, and I only got the image by going through a proxy.

Prepare the directory

[root@k8s-7-200 ~]# mkdir /data/k8s-yaml/armory/clouddriver

[root@k8s-7-200 ~]# cd /data/k8s-yaml/armory/clouddriver

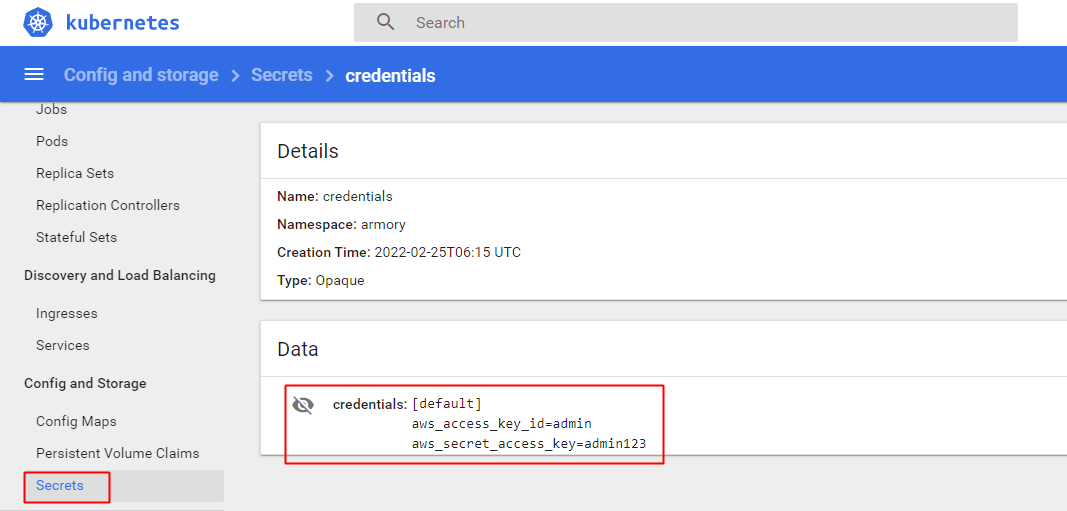

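3.1.2 Prepare the MinIO Secret

Method 1:

Prepare the credentials file:

[root@k8s-7-200 clouddriver]# vim credentials

[default]
aws_access_key_id=admin
aws_secret_access_key=admin123

Create the Secret on a node:

[root@k8s-7-21 ~]# wget http://k8s-yaml.itdo.top/armory/clouddriver/credentials

[root@k8s-7-21 ~]# kubectl create secret generic credentials \
--from-file=./credentials \
-n armory

Method 2:

Alternatively, create the Secret directly on the command line without a file. The data key must still be named credentials, because the Deployments mount the Secret at /home/spinnaker/.aws and the key becomes the file name there. The bash $'...' quoting supplies the newlines:

[root@k8s-7-21 ~]# kubectl create secret generic credentials \
--from-literal=credentials=$'[default]\naws_access_key_id=admin\naws_secret_access_key=admin123\n' \
-n armory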
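Both methods should produce the same result: a Secret with a single data key, `credentials`, that holds the INI file. The Deployments below mount this Secret at `/home/spinnaker/.aws`, so the key turns into the file `/home/spinnaker/.aws/credentials`. The following Python sketch builds the equivalent manifest; Secret values are base64-encoded. Its output could be piped to `kubectl apply -f -` as a third option:

```python
import base64
import json


def aws_credentials_secret(access_key: str, secret_key: str,
                           name: str = "credentials",
                           namespace: str = "armory") -> dict:
    """Build a Secret equivalent to `kubectl create secret generic ... --from-file=./credentials`."""
    ini = (
        "[default]\n"
        f"aws_access_key_id={access_key}\n"
        f"aws_secret_access_key={secret_key}\n"
    )
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        # The data key becomes the file name when the Secret is mounted.
        "data": {"credentials": base64.b64encode(ini.encode()).decode()},
    }


manifest = aws_credentials_secret("admin", "admin123")
print(json.dumps(manifest, indent=2))  # e.g. python3 secret.py | kubectl apply -f -
```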

Prepare the Kubernetes user configuration

3.1.3 Issue the certificate and private key

[root@k8s-7-200 ~]# cd /opt/certs

[root@k8s-7-200 ~]# cp client-csr.json admin-csr.json

[root@k8s-7-200 ~]# sed -i 's#k8s-node#cluster-admin#g' admin-csr.json

[root@k8s-7-200 ~]# cat admin-csr.json

{
    "CN": "cluster-admin",
    "hosts": [
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "itdo",
            "OU": "ops"
        }
    ]
}
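With x509 client-certificate authentication, kube-apiserver takes the certificate's CN as the username and each O as a group. This is why the cluster role binding later targets `--user=cluster-admin`: that name matches the CN in this CSR. The following Python sketch only reads the CSR JSON to show the mapping. The group `itdo` carries no permissions of its own; the permissions come from the binding:

```python
import json


def k8s_identity(csr_json: str) -> dict:
    """Return the Kubernetes user and groups a certificate from this CSR maps to.

    With x509 client-certificate auth, the API server uses the subject CN
    as the username and every O (organization) value as a group.
    """
    csr = json.loads(csr_json)
    groups = [n["O"] for n in csr.get("names", []) if "O" in n]
    return {"user": csr["CN"], "groups": groups}


admin_csr = """{
  "CN": "cluster-admin",
  "hosts": [],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "ST": "beijing", "L": "beijing", "O": "itdo", "OU": "ops"}]
}"""
print(k8s_identity(admin_csr))  # {'user': 'cluster-admin', 'groups': ['itdo']}
```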

Sign the certificate:

[root@k8s-7-200 ~]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client admin-csr.json |cfssl-json -bare admin

2022/02/25 14:17:18 [INFO] generate received request

2022/02/25 14:17:18 [INFO] received CSR

2022/02/25 14:17:18 [INFO] generating key: rsa-2048

2022/02/25 14:17:19 [INFO] encoded CSR

2022/02/25 14:17:19 [INFO] signed certificate with serial number 448517386500808635238148743975816066852509882741

2022/02/25 14:17:19 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-7-200 certs]# ls -l admin*

-rw-r--r-- 1 root root 1001 2月 25 14:17 admin.csr

-rw-r--r-- 1 root root 287 2月 25 14:16 admin-csr.json

-rw------- 1 root root 1675 2月 25 14:17 admin-key.pem

-rw-r--r-- 1 root root 1375 2月 25 14:17 admin.pem

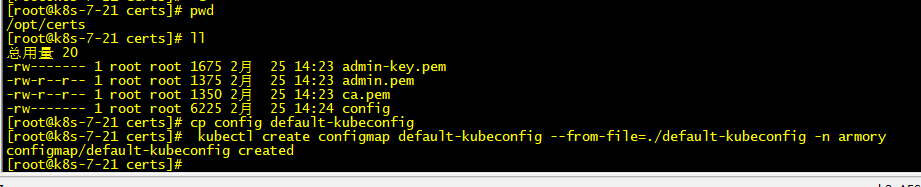

Distribute the certificates

On any node:

[root@k8s-7-21 ~]# mkdir /opt/certs && cd /opt/certs

[root@k8s-7-21 ~]#scp k8s-7-200.host.top:/opt/certs/ca.pem .

[root@k8s-7-21 ~]#scp k8s-7-200.host.top:/opt/certs/admin.pem .

[root@k8s-7-21 ~]#scp k8s-7-200.host.top:/opt/certs/admin-key.pem .

3.1.4 Create the user

# Create the user in four steps; this produces a kubeconfig file named config

[root@k8s-7-21 ~]# kubectl config set-cluster myk8s --certificate-authority=./ca.pem --embed-certs=true --server=https://10.4.7.10:7443 --kubeconfig=config

Cluster "myk8s" set.

[root@k8s-7-21 ~]# kubectl config set-credentials cluster-admin --client-certificate=./admin.pem --client-key=./admin-key.pem --embed-certs=true --kubeconfig=config

User "cluster-admin" set.

[root@k8s-7-21 ~]# kubectl config set-context myk8s-context --cluster=myk8s --user=cluster-admin --kubeconfig=config

Context "myk8s-context" created.

[root@k8s-7-21 ~]# kubectl config use-context myk8s-context --kubeconfig=config

Switched to context "myk8s-context".

# Bind the cluster role

[root@k8s-7-21 ~]# kubectl create clusterrolebinding myk8s-admin --clusterrole=cluster-admin --user=cluster-admin

clusterrolebinding.rbac.authorization.k8s.io/myk8s-admin created
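The four `kubectl config` commands above only write entries into the `config` file. `--embed-certs=true` stores each PEM file base64-encoded in a `*-data` field; `kubectl config view` later displays these fields as DATA+OMITTED or REDACTED. The following Python sketch shows the resulting structure. JSON is valid YAML, so kubectl can read this form too. The byte strings are placeholders for the real certificate files:

```python
import base64
import json


def _b64(data: bytes) -> str:
    """--embed-certs=true stores file contents base64-encoded in *-data fields."""
    return base64.b64encode(data).decode("ascii")


def build_kubeconfig(server: str, ca_pem: bytes, cert_pem: bytes, key_pem: bytes,
                     cluster: str = "myk8s", user: str = "cluster-admin",
                     context: str = "myk8s-context") -> dict:
    """Mirror the file written by set-cluster, set-credentials, set-context and use-context."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "preferences": {},
        # kubectl config set-cluster
        "clusters": [{"name": cluster, "cluster": {
            "server": server,
            "certificate-authority-data": _b64(ca_pem)}}],
        # kubectl config set-credentials
        "users": [{"name": user, "user": {
            "client-certificate-data": _b64(cert_pem),
            "client-key-data": _b64(key_pem)}}],
        # kubectl config set-context
        "contexts": [{"name": context, "context": {"cluster": cluster, "user": user}}],
        # kubectl config use-context
        "current-context": context,
    }


# Placeholder bytes stand in for ca.pem, admin.pem and admin-key.pem.
cfg = build_kubeconfig("https://10.4.7.10:7443", b"CA", b"CERT", b"KEY")
print(json.dumps(cfg, indent=2))
```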

验证cluster-admin用户

说明:/root/config就是上面创建的config文件

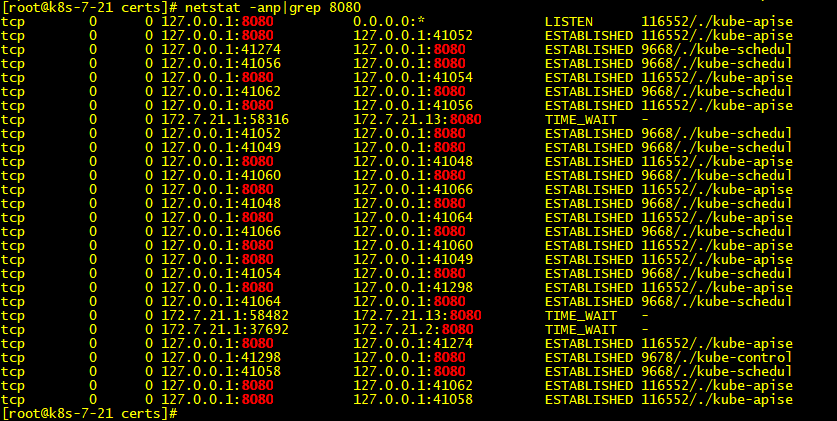

[root@k8s-7-21 ~]# kubectl config view #查看config

apiVersion: v1

clusters: []

contexts: []

current-context: ""

kind: Config

preferences: {}

users: []

#可以看到是空的配置,因为主控(master)节点kubelet使用http://127.0.0.1:8080跟kube-apiserver通讯,没有走6443端口

[root@k8s-7-21 ~]# cd /root/.kube/

[root@k8s-7-21 ~/.kube]# ll

total 4

drwxr-x--- 3 root root 23 Aug 5 18:56 cache

drwxr-x--- 3 root root 4096 Sep 5 22:18 http-cache

[root@k8s-7-21 ~/.kube]# cp /root/config .

[root@k8s-7-21 ~/.kube]# kubectl config view
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://10.4.7.10:7443
  name: myk8s
contexts:
- context:
    cluster: myk8s
    user: cluster-admin
  name: myk8s-context
current-context: myk8s-context
kind: Config
preferences: {}
users:
- name: cluster-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

# Viewing the config again shows server: https://10.4.7.10:7443, so kubectl now reaches kube-apiserver at https://10.4.7.10:7443

So, to let the ops host manage the Kubernetes cluster, do the following:

[root@k8s-7-200 ~]# mkdir /root/.kube

[root@k8s-7-200 ~]# cd /root/.kube/

[root@k8s-7-200 ~/.kube]# scp -rp k8s-7-21.host.top:/root/config .

root@k8s-7-21's password:

config 100% 6206 4.4MB/s 00:00

[root@k8s-7-200 .kube]# ll

总用量 8

-rw-r--r-- 1 root root 6225 2月 25 14:24 config

[root@k8s-7-200 ~]# scp -rp k8s-7-21.host.top:/opt/kubernetes/server/bin/kubectl /usr/bin/

root@k8s-7-21's password:

kubectl 100% 41MB 47.8MB/s 00:00

[root@k8s-7-200 ~]# which kubectl

/usr/bin/kubectl

[root@k8s-7-200 ~/.kube]# kubectl config view
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://10.4.7.10:7443
  name: myk8s
contexts:
- context:
    cluster: myk8s
    user: cluster-admin
  name: myk8s-context
current-context: myk8s-context
kind: Config
preferences: {}
users:
- name: cluster-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

[root@k8s-7-200 ~/.kube]# kubectl get pods -n infra  # this also confirms that the cluster-admin user has administrator rights

NAME READY STATUS RESTARTS AGE

alertmanager-6bd5b9fb86-tdrcx 1/1 Running 0 2d20h

apollo-adminservice-85f5b5645f-5bfsf 1/1 Running 0 2d21h

apollo-configservice-59fdbdc66c-z2bfz 1/1 Running 0 2d21h

apollo-portal-6667499447-fc9sm 1/1 Running 0 2d21h

dubbo-monitor-778b5485c8-mcqpr 1/1 Running 0 2d21h

grafana-5fbf4fddc-pjkzb 1/1 Running 0 2d21h

jenkins-79f4d4496c-rrpr8 1/1 Running 0 2d22h

kafka-manager-84db686cb7-vwnmp 1/1 Running 0 45h

kibana-7d9f979864-j2lmz 1/1 Running 0 27h

prometheus-69f5cc4988-v4gb5 1/1 Running 0 2d21h

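Troubleshooting: if kubectl on k8s-7-200 reports the error below, point KUBECONFIG at the config file explicitly: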

[root@k8s-7-200~]# kubectl get pods

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@k8s-7-200 ~]# echo "export KUBECONFIG=/root/.kube/config" >> ~/.bash_profile

[root@k8s-7-200 ~]# source ~/.bash_profile
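The localhost:8080 error appears when kubectl finds no usable kubeconfig and falls back to its default server. kubectl picks its kubeconfig in this order:

1. the `--kubeconfig` flag;
2. the `KUBECONFIG` environment variable (a list of paths whose files are merged);
3. `~/.kube/config`.

The following Python sketch reproduces only that lookup order, for illustration:

```python
import os
from typing import List, Mapping, Optional


def kubeconfig_candidates(flag: Optional[str], env: Mapping[str, str],
                          home: str) -> List[str]:
    """Return the kubeconfig file(s) kubectl would consult, in priority order.

    1. --kubeconfig wins outright.
    2. Otherwise KUBECONFIG is split on os.pathsep (":" on Linux); the files are merged.
    3. Otherwise ~/.kube/config is used.
    """
    if flag:
        return [flag]
    paths = [p for p in env.get("KUBECONFIG", "").split(os.pathsep) if p]
    if paths:
        return paths
    return [os.path.join(home, ".kube", "config")]


print(kubeconfig_candidates(None, {"KUBECONFIG": "/root/.kube/config"}, "/root"))
```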

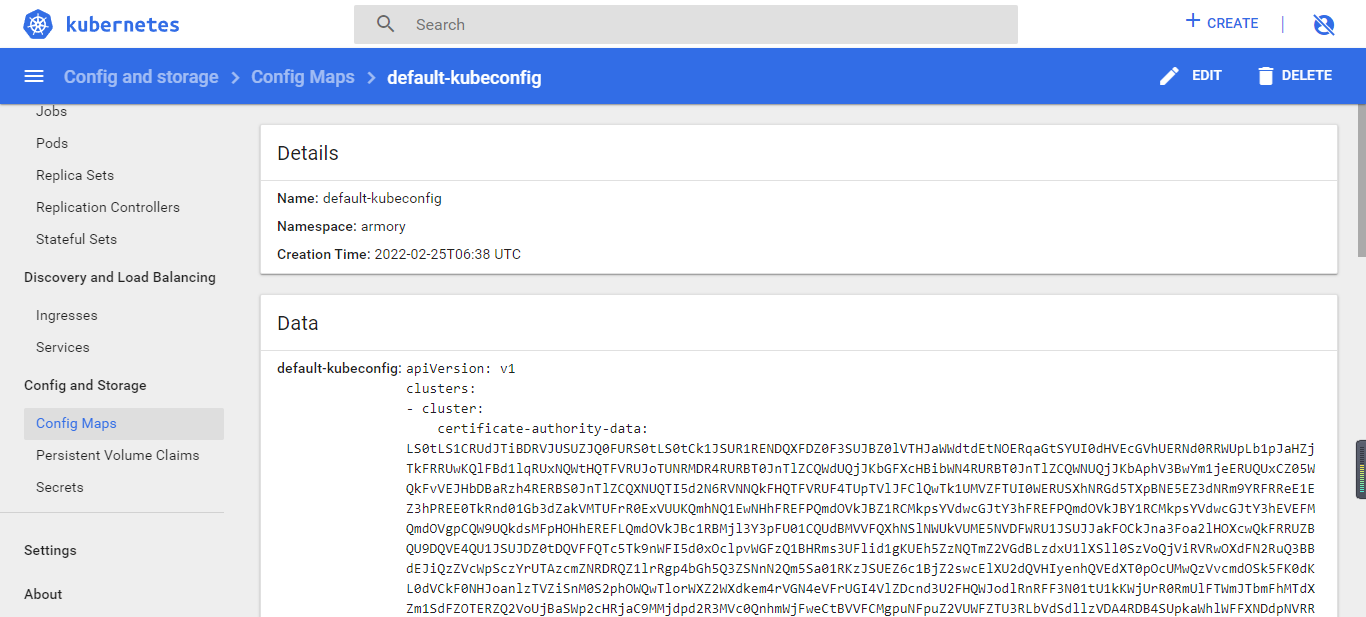

3.1.5 Create a ConfigMap from the config file

[root@k8s-7-21 ~]#cp config default-kubeconfig

[root@k8s-7-21 ~]# kubectl create configmap default-kubeconfig --from-file=./default-kubeconfig -n armory

configmap/default-kubeconfig created

Check the ConfigMap:

[root@k8s-7-21 ~]# kubectl get configmap default-kubeconfig -n armory

3.2 Create and apply the resource manifests

Go back to the ops host k8s-7-200:

[root@k8s-7-200 ~]# cd /data/k8s-yaml/armory/clouddriver

3.2.1 Create the environment variable ConfigMap

[root@k8s-7-200 ~]# vim init-env.yaml

# Includes the Redis address, the externally exposed API domain, etc.
kind: ConfigMap
apiVersion: v1
metadata:
  name: init-env
  namespace: armory
data:
  API_HOST: http://spinnaker.itdo.top/api  # change to your own domain
  ARMORY_ID: c02f0781-92f5-4e80-86db-0ba8fe7b8544  # random ID; can be left unchanged
  ARMORYSPINNAKER_CONF_STORE_BUCKET: armory-platform  # MinIO bucket name
  ARMORYSPINNAKER_CONF_STORE_PREFIX: front50
  ARMORYSPINNAKER_GCS_ENABLED: "false"
  ARMORYSPINNAKER_S3_ENABLED: "true"
  AUTH_ENABLED: "false"
  AWS_REGION: us-east-1
  BASE_IP: 127.0.0.1
  CLOUDDRIVER_OPTS: -Dspring.profiles.active=armory,configurator,local
  CONFIGURATOR_ENABLED: "false"
  DECK_HOST: http://spinnaker.itdo.top  # change to your own domain
  ECHO_OPTS: -Dspring.profiles.active=armory,configurator,local
  GATE_OPTS: -Dspring.profiles.active=armory,configurator,local
  IGOR_OPTS: -Dspring.profiles.active=armory,configurator,local
  PLATFORM_ARCHITECTURE: k8s
  REDIS_HOST: redis://redis:6379  # connects via the Service name
  SERVER_ADDRESS: 0.0.0.0
  SPINNAKER_AWS_DEFAULT_REGION: us-east-1
  SPINNAKER_AWS_ENABLED: "false"
  SPINNAKER_CONFIG_DIR: /home/spinnaker/config
  SPINNAKER_GOOGLE_PROJECT_CREDENTIALS_PATH: ""
  SPINNAKER_HOME: /home/spinnaker
  SPRING_PROFILES_ACTIVE: armory,configurator,local
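`REDIS_HOST` can use the bare Service name `redis` because the Spinnaker pods and the Redis Service are both in the `armory` namespace. A Service in another namespace needs at least `name.namespace`, as with `jenkins.infra` in custom-config.yaml. The following Python sketch models how a pod's resolver expands such names. It assumes the default cluster domain `cluster.local` and the usual pod `resolv.conf` (`ndots:5`, search list `<ns>.svc.cluster.local svc.cluster.local cluster.local`). It simplifies glibc search-list behaviour and is not a real resolver:

```python
from typing import List


def dns_candidates(name: str, namespace: str,
                   cluster_domain: str = "cluster.local", ndots: int = 5) -> List[str]:
    """Names a pod's resolver tries for `name`, in order (simplified glibc search).

    Names with fewer than `ndots` dots get the search suffixes tried first,
    then the name as-is; a trailing dot means "fully qualified, no search".
    """
    if name.endswith("."):
        return [name.rstrip(".")]
    search = [f"{namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    expanded = [f"{name}.{suffix}" for suffix in search]
    if name.count(".") >= ndots:
        return [name] + expanded
    return expanded + [name]


# From a pod in the armory namespace:
print(dns_candidates("redis", "armory")[0])          # redis.armory.svc.cluster.local
print(dns_candidates("jenkins.infra", "armory")[:2])
```

For `jenkins.infra`, the first candidate does not exist, so the lookup succeeds on the second one, `jenkins.infra.svc.cluster.local`.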

3.2.2 Create the component configuration

[root@k8s-7-200 ~]# vim custom-config.yaml

# This file defines how Spinnaker reaches Kubernetes, Harbor, MinIO and Jenkins.
# Some addresses can use short names. Whether a short name works depends on whether the target is inside the cluster and in the same namespace.
kind: ConfigMap
apiVersion: v1
metadata:
  name: custom-config
  namespace: armory
data:
  clouddriver-local.yml: |
    kubernetes:
      enabled: true
      accounts:
        - name: cluster-admin
          serviceAccount: false
          dockerRegistries:
            - accountName: harbor
              namespace: []
          namespaces:  # namespaces managed by Spinnaker (test, prod and app here); add others as needed
            - test
            - prod
            - app
          kubeconfigFile: /opt/spinnaker/credentials/custom/default-kubeconfig
      primaryAccount: cluster-admin  # the user created in the previous step
    dockerRegistry:
      enabled: true
      accounts:
        - name: harbor
          requiredGroupMembership: []
          providerVersion: V1
          insecureRegistry: true
          address: http://harbor.itdo.top  # connection to Harbor
          username: admin
          password: Harbor.itdo12345
      primaryAccount: harbor
    artifacts:
      s3:
        enabled: true
        accounts:
        - name: armory-config-s3-account
          apiEndpoint: http://minio
          apiRegion: us-east-1
      gcs:
        enabled: false
        accounts:
        - name: armory-config-gcs-account
  custom-config.json: ""
  echo-configurator.yml: |
    diagnostics:
      enabled: true
  front50-local.yml: |
    spinnaker:
      s3:
        endpoint: http://minio
  igor-local.yml: |
    jenkins:
      enabled: true
      masters:
        - name: jenkins-admin  # Jenkins connection settings
          address: http://jenkins.infra
          username: admin
          password: admin123
      primaryAccount: jenkins-admin
  nginx.conf: |
    gzip on;
    gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript application/vnd.ms-fontobject application/x-font-ttf font/opentype image/svg+xml image/x-icon;

    server {
        listen 80;

        location / {
            proxy_pass http://armory-deck/;
        }

        location /api/ {
            proxy_pass http://armory-gate:8084/;
        }

        rewrite ^/login(.*)$ /api/login$1 last;
        rewrite ^/auth(.*)$ /api/auth$1 last;
    }
  spinnaker-local.yml: |
    services:
      igor:
        enabled: true
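The `nginx.conf` entry above decides which backend receives each browser request. Two nginx rules matter here:

- Server-level `rewrite ... last` rules run before location matching.
- A `proxy_pass` URL that ends in a URI (here `/`) replaces the matched location prefix.

The following Python sketch is a simplified model of those two rules. It handles prefix locations only and ignores query strings. It shows which path Deck or Gate actually receives:

```python
import re
from typing import Tuple

# Server-level rewrite rules from nginx.conf (applied before location matching).
REWRITES = [
    (re.compile(r"^/login(.*)$"), r"/api/login\1"),
    (re.compile(r"^/auth(.*)$"), r"/api/auth\1"),
]

# location prefix -> (upstream, URI part of proxy_pass); longest prefix wins.
LOCATIONS = {
    "/api/": ("http://armory-gate:8084", "/"),
    "/": ("http://armory-deck", "/"),
}


def route(path: str) -> Tuple[str, str]:
    """Return (upstream, upstream_path) for a request path, emulating nginx.conf."""
    for pattern, repl in REWRITES:
        if pattern.match(path):
            path = pattern.sub(repl, path)
            break  # `last`: stop rewriting and go to location matching
    prefix = max((p for p in LOCATIONS if path.startswith(p)), key=len)
    upstream, uri = LOCATIONS[prefix]
    # proxy_pass with a URI replaces the matched location prefix with that URI.
    return upstream, uri + path[len(prefix):]


print(route("/login"))             # ('http://armory-gate:8084', '/login')
print(route("/api/applications"))  # ('http://armory-gate:8084', '/applications')
print(route("/index.html"))        # ('http://armory-deck', '/index.html')
```

Gate therefore sees paths without the `/api` prefix. This matches `API_HOST: http://spinnaker.itdo.top/api` in init-env: the browser calls `/api/...`, and nginx strips the prefix before forwarding.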

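3.2.3 Create the default configuration

Note: this file is very long (about 1,600 lines). It was produced with the Armory installer and generally needs no changes.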

default-config.yaml

3.2.5 Create the Deployment manifest

[root@k8s-7-200 ~]# vim dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-clouddriver
  name: armory-clouddriver
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-clouddriver
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-clouddriver"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"clouddriver"'
      labels:
        app: armory-clouddriver
    spec:
      containers:
      - name: armory-clouddriver
        image: harbor.itdo.top/armory/clouddriver:v1.11.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        # fetch.sh is defined in default-config.yaml
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/clouddriver/bin/clouddriver
        ports:
        - containerPort: 7002
          protocol: TCP
        env:
        - name: JAVA_OPTS
          # raise to 2048-4096M in production
          value: -Xmx1024M
        envFrom:  # take variables from init-env.yaml created earlier
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 7002
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 7002
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        securityContext:
          runAsUser: 0
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /home/spinnaker/.aws
          name: credentials
        - mountPath: /opt/spinnaker/credentials/custom
          name: default-kubeconfig
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: default-kubeconfig
        name: default-kubeconfig
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - name: credentials
        secret:
          defaultMode: 420
          secretName: credentials
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo
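CloudDriver's probe settings give it a long time to start. Kubernetes runs the first probe after `initialDelaySeconds` and then one probe every `periodSeconds`. The pod is marked Ready after `successThreshold` consecutive successes. The container is restarted after `failureThreshold` consecutive liveness failures. The following Python sketch is a rough calculation that ignores probe timeouts and scheduling jitter:

```python
def earliest_ready(initial_delay: int, period: int, success_threshold: int) -> int:
    """Seconds after container start before the readiness probe can mark the pod Ready."""
    # First probe at initial_delay, then one every `period` seconds;
    # success_threshold consecutive successes are required.
    return initial_delay + (success_threshold - 1) * period


def earliest_restart(initial_delay: int, period: int, failure_threshold: int) -> int:
    """Seconds after container start before a failing liveness probe triggers a restart."""
    return initial_delay + (failure_threshold - 1) * period


# Probe settings from the armory-clouddriver dp.yaml above.
print(earliest_ready(180, 3, 5))    # 192
print(earliest_restart(600, 3, 5))  # 612
```

So the pod cannot become Ready in much under about 3 minutes. If CloudDriver is still unhealthy after roughly 10 minutes, the kubelet restarts it. Shortening the liveness delay therefore risks a restart loop on a slow start.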

3.2.6 Create the Service manifest

[root@k8s-7-200 ~]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-clouddriver
  namespace: armory
spec:
  ports:
  - port: 7002
    protocol: TCP
    targetPort: 7002
  selector:
    app: armory-clouddriver

3.2.7 Apply the resource manifests

Run on any node.

Now that k8s-7-200 has a kubeconfig, you can also run kubectl apply directly on it.

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/clouddriver/init-env.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/clouddriver/default-config.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/clouddriver/custom-config.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/clouddriver/dp.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/clouddriver/svc.yaml

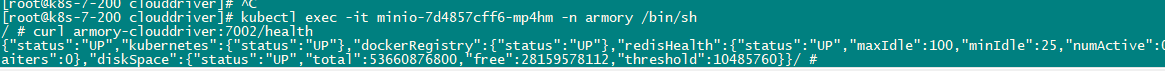

3.2.8 Check

[root@k8s-7-21 ~]# kubectl get pod -n armory|grep minio

[root@k8s-7-21 ~]# kubectl exec -it minio-7d4857cff6-mp4hm -n armory -- /bin/sh

/ # curl armory-clouddriver:7002/health
{
  "status": "UP",
  "kubernetes": {
    "status": "UP"
  },
  "dockerRegistry": {
    "status": "UP"
  },
  "redisHealth": {
    "status": "UP",
    "maxIdle": 100,
    "minIdle": 25,
    "numActive": 0,
    "numIdle": 5,
    "numWaiters": 0
  },
  "diskSpace": {
    "status": "UP",
    "total": 21250441216,
    "free": 15657390080,
    "threshold": 10485760
  }
}
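The /health response contains one status per indicator (kubernetes, dockerRegistry, redisHealth, diskSpace). When the top-level status is not UP, a small helper like the following hypothetical Python 3 sketch points at the failing indicator. Pass it the JSON that curl returned:

```python
import json
from typing import List


def unhealthy(health_json: str) -> List[str]:
    """Return the names of /health indicators that are not UP; [] means healthy."""
    report = json.loads(health_json)
    bad = [] if report.get("status") == "UP" else ["status"]
    for name, detail in report.items():
        # Each sub-indicator (kubernetes, redisHealth, ...) carries its own status.
        if isinstance(detail, dict) and detail.get("status") != "UP":
            bad.append(name)
    return bad


sample = ('{"status": "DOWN", "kubernetes": {"status": "UP"},'
          ' "dockerRegistry": {"status": "DOWN"}}')
print(unhealthy(sample))  # ['status', 'dockerRegistry']
```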

4 Deploying Spinnaker, part 3

4.1 Spinnaker persistence component: deploying Front50

[root@k8s-7-200 ~]#mkdir /data/k8s-yaml/armory/front50

[root@k8s-7-200 ~]#cd /data/k8s-yaml/armory/front50

4.1.1 Prepare the image

[root@k8s-7-200 ~]# docker pull armory/spinnaker-front50-slim:release-1.8.x-93febf2

[root@k8s-7-200 ~]# docker tag 0d353788f4f2 harbor.itdo.top/armory/front50:v1.8.x

[root@k8s-7-200 ~]# docker push harbor.itdo.top/armory/front50:v1.8.x

4.1.2 Prepare the Deployment manifest

[root@k8s-7-200 ~]# vim dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-front50
  name: armory-front50
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-front50
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-front50"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"front50"'
      labels:
        app: armory-front50
    spec:
      containers:
      - name: armory-front50
        image: harbor.itdo.top/armory/front50:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/front50/bin/front50
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: JAVA_OPTS
          # give it more memory in production; this lab started with 512M and the pod died shortly after starting
          value: -javaagent:/opt/front50/lib/jamm-0.2.5.jar -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 5
          successThreshold: 8
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /home/spinnaker/.aws
          name: credentials
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - name: credentials
        secret:
          defaultMode: 420
          secretName: credentials
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

4.1.3 Create the Service manifest

[root@k8s-7-200 ~]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-front50
  namespace: armory
spec:
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: armory-front50

4.1.4 Apply the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/front50/dp.yaml

deployment.apps/armory-front50 created

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/front50/svc.yaml

service/armory-front50 created

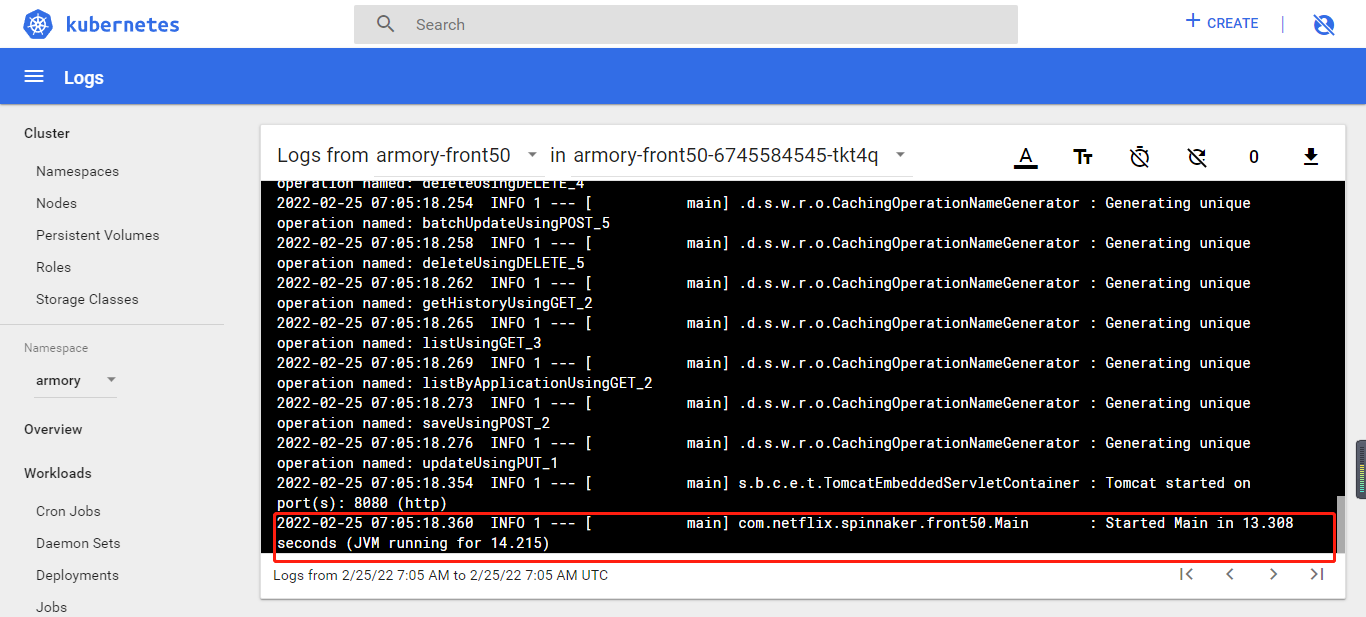

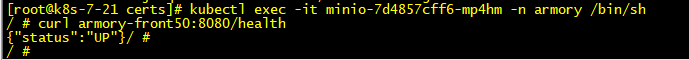

验证

[root@k8s-7-21 certs]# kubectl exec -it minio-7d4857cff6-mp4hm -n armory /bin/sh

/ # curl armory-front50:8080/health

{"status":"UP"}/ #

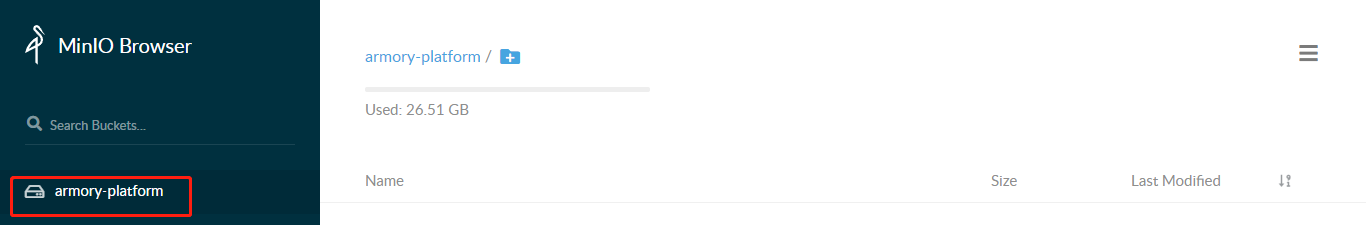

可以看到自动生成了一个bucket

数据存放在/data/nfs-volume/minio/下,如果备份数据也是备份这里
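The following is a minimal Python sketch of such a backup, meant to run on the NFS server k8s-7-200. The destination `/backup/minio` is a hypothetical path. MinIO keeps running while the files are copied, so for a consistent copy, take the archive while nothing is being written (for example after scaling the minio Deployment to 0):

```python
import tarfile
import time
from pathlib import Path


def backup_dir(src: str, dest_dir: str) -> Path:
    """Archive `src` into a timestamped .tar.gz under dest_dir and return its path."""
    src_path = Path(src)
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    archive = dest / f"{src_path.name}-{stamp}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        # arcname keeps paths inside the archive relative (minio/...).
        tar.add(src_path, arcname=src_path.name)
    return archive


# On k8s-7-200 (destination path is hypothetical):
# backup_dir("/data/nfs-volume/minio", "/backup/minio")
```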

4.2 Spinnaker task orchestration component: deploying Orca

[root@k8s-7-200 ~]# mkdir /data/k8s-yaml/armory/orca

[root@k8s-7-200 ~]# cd /data/k8s-yaml/armory/orca

4.2.1 Prepare the Docker image

[root@k8s-7-200 ~]# docker pull docker.io/armory/spinnaker-orca-slim:release-1.8.x-de4ab55

[root@k8s-7-200 ~]# docker tag 5103b1f73e04 harbor.itdo.top/armory/orca:v1.8.x

[root@k8s-7-200 ~]# docker push harbor.itdo.top/armory/orca:v1.8.x

4.2.2 Prepare the Deployment manifest

[root@k8s-7-200 ~]# vim dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-orca
  name: armory-orca
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-orca
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-orca"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"orca"'
      labels:
        app: armory-orca
    spec:
      containers:
      - name: armory-orca
        image: harbor.itdo.top/armory/orca:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/orca/bin/orca
        ports:
        - containerPort: 8083
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /health
            port: 8083
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 5
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8083
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

4.2.3 Prepare the Service manifest

[root@k8s-7-200 ~]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-orca
  namespace: armory
spec:
  ports:
  - port: 8083
    protocol: TCP
    targetPort: 8083
  selector:
    app: armory-orca

4.2.4 Apply the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/orca/dp.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/orca/svc.yaml

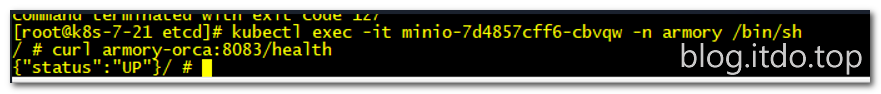

Check:

[root@k8s-7-21 etcd]# kubectl exec -it minio-7d4857cff6-cbvqw -n armory -- /bin/sh

/ # curl armory-orca:8083/health

{"status":"UP"}

4.3 Spinnaker notification component: deploying Echo

[root@k8s-7-200 ~]#mkdir /data/k8s-yaml/armory/echo

[root@k8s-7-200 ~]#cd /data/k8s-yaml/armory/echo

4.3.1 Prepare the Docker image

[root@k8s-7-200 ~]#docker pull docker.io/armory/echo-armory:c36d576-release-1.8.x-617c567

[root@k8s-7-200 ~]#docker tag 415efd46f474 harbor.itdo.top/armory/echo:v1.8.x

[root@k8s-7-200 ~]#docker push harbor.itdo.top/armory/echo:v1.8.x

4.3.2 Prepare the Deployment manifest

[root@k8s-7-200 ~]# vim dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-echo
  name: armory-echo
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-echo
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-echo"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"echo"'
      labels:
        app: armory-echo
    spec:
      containers:
      - name: armory-echo
        image: harbor.itdo.top/armory/echo:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/echo/bin/echo
        ports:
        - containerPort: 8089
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -javaagent:/opt/echo/lib/jamm-0.2.5.jar -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8089
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8089
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

4.3.3 Prepare the Service manifest

[root@k8s-7-200 ~]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-echo
  namespace: armory
spec:
  ports:
  - port: 8089
    protocol: TCP
    targetPort: 8089
  selector:
    app: armory-echo

4.3.4 Apply the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/echo/dp.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/echo/svc.yaml

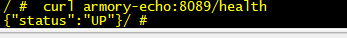

Check:

[root@k8s-7-21 ~]# kubectl exec -it minio-7d4857cff6-cbvqw -n armory -- /bin/sh

/ # curl armory-echo:8089/health

{"status":"UP"}

4.4 Spinnaker pipeline integration component: deploying Igor

[root@k8s-7-200 ~]# mkdir /data/k8s-yaml/armory/igor

[root@k8s-7-200 ~]# cd /data/k8s-yaml/armory/igor

4.4.1 Prepare the Docker image

[root@k8s-7-200 ~]# docker pull docker.io/armory/spinnaker-igor-slim:release-1.8-x-new-install-healthy-ae2b329

[root@k8s-7-200 ~]# docker tag 23984f5b43f6 harbor.itdo.top/armory/igor:v1.8.x

[root@k8s-7-200 ~]# docker push harbor.itdo.top/armory/igor:v1.8.x

4.4.2 Prepare the Deployment manifest

[root@k8s-7-200 ~]# vim dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-igor
  name: armory-igor
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-igor
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-igor"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"igor"'
      labels:
        app: armory-igor
    spec:
      containers:
      - name: armory-igor
        image: harbor.itdo.top/armory/igor:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && cd /home/spinnaker/config
          && /opt/igor/bin/igor
        ports:
        - containerPort: 8088
          protocol: TCP
        env:
        - name: IGOR_PORT_MAPPING
          value: -8088:8088
        - name: JAVA_OPTS
          value: -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8088
            scheme: HTTP
          initialDelaySeconds: 600
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 8088
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 5
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

4.4.3 Prepare the Service manifest (svc.yaml)

[root@k8s-7-200 ~]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-igor
  namespace: armory
spec:
  ports:
  - port: 8088
    protocol: TCP
    targetPort: 8088
  selector:
    app: armory-igor

4.4.4 Apply the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/igor/dp.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/igor/svc.yaml

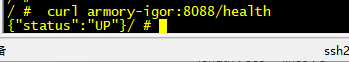

Verify

[root@k8s-7-21 ~]# kubectl exec -it minio-7d4857cff6-cbvqw -n armory -- /bin/sh

/ # curl armory-igor:8088/health

{"status":"UP"}
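The check above is a one-off curl, and the readiness probe only starts checking 180 seconds after the container starts, so a component may need several minutes before it reports UP. Below is a minimal polling sketch. It assumes bash and curl are available wherever it runs, so it suits a host or pod that has bash and can resolve the armory Service names better than the minio pod's /bin/sh. `is_up` and `wait_healthy` are hypothetical names. The function checks only the top-level status and assumes `status` is the first key, as in the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: poll a Spinnaker component's /health endpoint until it reports UP.

# Succeed if the JSON body starts with a top-level "status":"UP".
# Anchoring at the start avoids matching a nested component that is UP
# while the overall status is DOWN.
is_up() {
  printf '%s' "$1" | grep -Eq '^\{[[:space:]]*"status"[[:space:]]*:[[:space:]]*"UP"'
}

# Poll URL up to TRIES times, sleeping INTERVAL seconds between attempts.
wait_healthy() {
  local url="$1" tries="${2:-60}" interval="${3:-10}" body i
  for ((i = 1; i <= tries; i++)); do
    body="$(curl -s --max-time 5 "$url")"
    if is_up "$body"; then
      echo "$url is UP"
      return 0
    fi
    sleep "$interval"
  done
  echo "$url not healthy after $tries attempts" >&2
  return 1
}
```

For example, `wait_healthy http://armory-igor:8088/health 30 10` waits up to about five minutes for igor.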

4.5 Deploying gate, the Spinnaker component that provides the API

[root@k8s-7-200 ~]# mkdir /data/k8s-yaml/armory/gate

[root@k8s-7-200 ~]# cd /data/k8s-yaml/armory/gate

4.5.1 Prepare the Docker image

[root@k8s-7-200 ~]# docker pull docker.io/armory/gate-armory:dfafe73-release-1.8.x-5d505ca

[root@k8s-7-200 ~]# docker tag b092d4665301 harbor.itdo.top/armory/gate:v1.8.x

[root@k8s-7-200 ~]# docker push harbor.itdo.top/armory/gate:v1.8.x

4.5.2 Prepare the Deployment manifest (dp.yaml)

[root@k8s-7-200 ~]# vim dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-gate
  name: armory-gate
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-gate
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-gate"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"gate"'
      labels:
        app: armory-gate
    spec:
      containers:
      - name: armory-gate
        image: harbor.itdo.top/armory/gate:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh gate && cd /home/spinnaker/config && /opt/gate/bin/gate
        ports:
        - containerPort: 8084
          name: gate-port
          protocol: TCP
        - containerPort: 8085
          name: gate-api-port
          protocol: TCP
        env:
        - name: GATE_PORT_MAPPING
          value: -8084:8084
        - name: GATE_API_PORT_MAPPING
          value: -8085:8085
        - name: JAVA_OPTS
          value: -Xmx1000M
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - wget -O - http://localhost:8084/health || wget -O - https://localhost:8084/health
          failureThreshold: 5
          initialDelaySeconds: 600
          periodSeconds: 5
          successThreshold: 1
          timeoutSeconds: 1
        # Note: the URLs below are unquoted, so bash treats each "&" as the background
        # operator and the exit status comes from the final assignment
        # "downstreamServices=true"; as written, this probe likely always succeeds.
        # Wrap each URL in single quotes if readiness should depend on the downstream check.
        readinessProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - wget -O - http://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true || wget -O - https://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true
          failureThreshold: 3
          initialDelaySeconds: 180
          periodSeconds: 5
          successThreshold: 10
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo
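The readinessProbe command above is run through `bash -c`, and its URLs are not quoted. Bash therefore splits the command at each `&` and backgrounds the pieces before it. The last foreground command is then the bare assignment `downstreamServices=true`, which always succeeds. The sketch below reproduces that parsing. `false` stands in for a wget call that fails, which is an assumption made to avoid needing a running gate, and `probe_status` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Compare how `bash -c` evaluates the probe command with and without quoting.
# `false` plays the role of a wget that fails (e.g. gate not ready yet).

# As in the manifest: each "&" ends a background job, so the final foreground
# command is the assignment `downstreamServices=true`, which exits 0.
unquoted='false http://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true || false https://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true'

# Quoted: each URL is a single argument, so the exit status is that of the wget calls.
quoted="false 'http://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true' || false 'https://localhost:8084/health?checkDownstreamServices=true&downstreamServices=true'"

# Run a probe command the way the kubelet does (bash -c) and print its exit status.
probe_status() {
  bash -c "$1"
  echo $?
}
```

`probe_status "$unquoted"` prints 0 even though both "wget" calls fail, while `probe_status "$quoted"` prints 1.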

4.5.3 Prepare the Service manifest (svc.yaml)

[root@k8s-7-200 ~]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-gate
  namespace: armory
spec:
  ports:
  - name: gate-port
    port: 8084
    protocol: TCP
    targetPort: 8084
  - name: gate-api-port
    port: 8085
    protocol: TCP
    targetPort: 8085
  selector:
    app: armory-gate

4.5.4 Apply the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/gate/dp.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/gate/svc.yaml

Verify

[root@k8s-7-21 ~]# kubectl exec -it minio-7d4857cff6-cbvqw -n armory -- /bin/sh

/ # curl armory-gate:8084/health

{"status":"UP"}

4.6 Deploying deck, the Spinnaker front-end web UI

[root@k8s-7-200 ~]# mkdir /data/k8s-yaml/armory/deck

[root@k8s-7-200 ~]# cd /data/k8s-yaml/armory/deck

4.6.1 Prepare the Docker image

[root@k8s-7-200 ~]# docker pull docker.io/armory/deck-armory:d4bf0cf-release-1.8.x-0a33f94

[root@k8s-7-200 ~]# docker tag 9a87ba3b319f harbor.itdo.top/armory/deck:v1.8.x

[root@k8s-7-200 ~]# docker push harbor.itdo.top/armory/deck:v1.8.x

4.6.2 Prepare the Deployment manifest (dp.yaml)

[root@k8s-7-200 ~]# vim dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-deck
  name: armory-deck
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-deck
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-deck"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"deck"'
      labels:
        app: armory-deck
    spec:
      containers:
      - name: armory-deck
        image: harbor.itdo.top/armory/deck:v1.8.x
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh && /entrypoint.sh
        ports:
        - containerPort: 9000
          protocol: TCP
        envFrom:
        - configMapRef:
            name: init-env
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 9000
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /
            port: 9000
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /etc/podinfo
          name: podinfo
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /opt/spinnaker/config/custom
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config
      - downwardAPI:
          defaultMode: 420
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.labels
            path: labels
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.annotations
            path: annotations
        name: podinfo

4.6.3 Prepare the Service manifest (svc.yaml)

[root@k8s-7-200 ~]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-deck
  namespace: armory
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 9000
  selector:
    app: armory-deck

4.6.4 Apply the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/deck/dp.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/deck/svc.yaml

Verify

[root@k8s-7-21 ~]# kubectl exec -it minio-7d4857cff6-cbvqw -n armory -- /bin/sh

/ # curl armory-deck:80

4.7 Deploying nginx, the front-end proxy for Spinnaker

[root@k8s-7-200 ~]# mkdir /data/k8s-yaml/armory/nginx

[root@k8s-7-200 ~]# cd /data/k8s-yaml/armory/nginx

4.7.1 Prepare the Docker image

[root@k8s-7-200 ~]# docker pull nginx:1.12.2

[root@k8s-7-200 ~]# docker tag 4037a5562b03 harbor.itdo.top/armory/nginx:v1.12.2

[root@k8s-7-200 ~]# docker push harbor.itdo.top/armory/nginx:v1.12.2

4.7.2 Prepare the Deployment manifest (dp.yaml)

[root@k8s-7-200 ~]# vim dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: armory-nginx
  name: armory-nginx
  namespace: armory
spec:
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: armory-nginx
  template:
    metadata:
      annotations:
        artifact.spinnaker.io/location: '"armory"'
        artifact.spinnaker.io/name: '"armory-nginx"'
        artifact.spinnaker.io/type: '"kubernetes/deployment"'
        moniker.spinnaker.io/application: '"armory"'
        moniker.spinnaker.io/cluster: '"nginx"'
      labels:
        app: armory-nginx
    spec:
      containers:
      - name: armory-nginx
        image: harbor.itdo.top/armory/nginx:v1.12.2
        imagePullPolicy: Always
        command:
        - bash
        - -c
        args:
        - bash /opt/spinnaker/config/default/fetch.sh nginx && nginx -g 'daemon off;'
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 8085
          name: api
          protocol: TCP
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          initialDelaySeconds: 180
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 3
          successThreshold: 5
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /opt/spinnaker/config/default
          name: default-config
        - mountPath: /etc/nginx/conf.d
          name: custom-config
      imagePullSecrets:
      - name: harbor
      volumes:
      - configMap:
          defaultMode: 420
          name: custom-config
        name: custom-config
      - configMap:
          defaultMode: 420
          name: default-config
        name: default-config

4.7.3 Prepare the Service manifest (svc.yaml)

[root@k8s-7-200 ~]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: armory-nginx
  namespace: armory
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
  - name: api
    port: 8085
    protocol: TCP
    targetPort: 8085
  selector:
    app: armory-nginx

4.7.4 Prepare the ingress manifest (ingress.yaml)

[root@k8s-7-200 ~]# vim ingress.yaml

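# apiVersion assumed for Traefik v2 (traefik.containo.us/v1alpha1);
# newer Traefik releases use traefik.io/v1alpha1 instead.
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  labels:
    app: spinnaker
    web: spinnaker.itdo.top
  name: spinnaker-route
  namespace: armory
spec:
  entryPoints:
  - web
  routes:
  - match: Host(`spinnaker.itdo.top`)
    kind: Rule
    services:
    - name: armory-nginx
      port: 80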

4.7.5 Apply the resource manifests

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/nginx/dp.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/nginx/svc.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/armory/nginx/ingress.yaml
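Every component in this chapter is applied with one `kubectl apply -f` per manifest from the k8s-yaml HTTP server. Below is a small sketch that applies a component's manifests in order. It assumes they are served under http://k8s-yaml.itdo.top/armory/<component>/ as above. `apply_component` is a hypothetical helper, and with `DRY_RUN=1` it only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply a component's manifests from the k8s-yaml server, in order.
K8S_YAML_BASE="${K8S_YAML_BASE:-http://k8s-yaml.itdo.top/armory}"

# Usage: apply_component COMPONENT FILE...   (file names without .yaml)
apply_component() {
  local component="$1" f run=""
  shift
  [ "${DRY_RUN:-0}" = "1" ] && run="echo"
  for f in "$@"; do
    # Stop at the first manifest that fails to apply.
    $run kubectl apply -f "$K8S_YAML_BASE/$component/$f.yaml" || return 1
  done
}
```

For example, `apply_component nginx dp svc ingress` repeats the three commands above.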

DNS record (add it to the itdo.top zone on the DNS server at 10.4.7.11):

spinnaker A 10.4.7.10

Restart named and check that the name resolves:

systemctl restart named

dig -t A spinnaker.itdo.top @10.4.7.11 +short

10.4.7.10

Open http://spinnaker.itdo.top in a browser to verify:

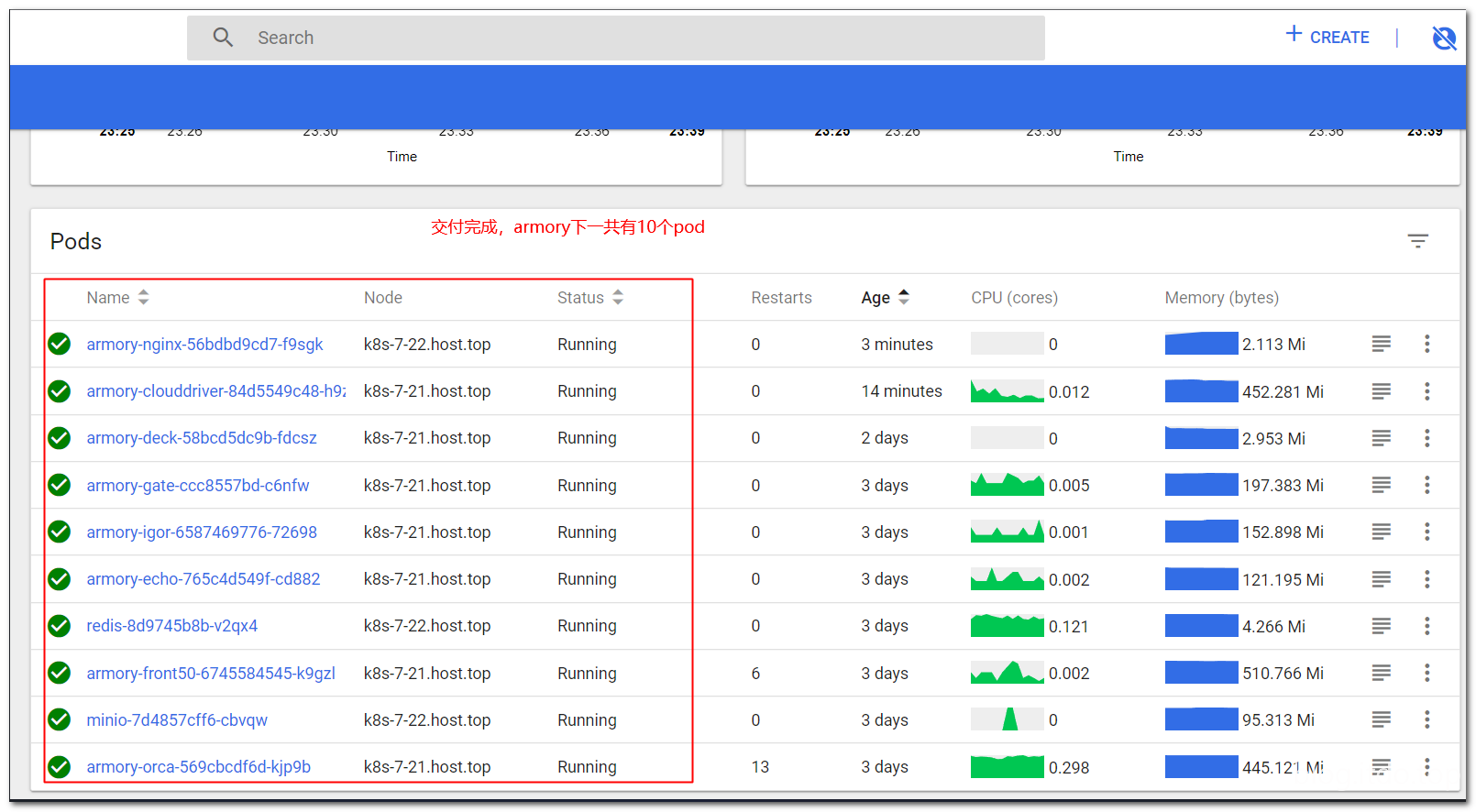

Summary

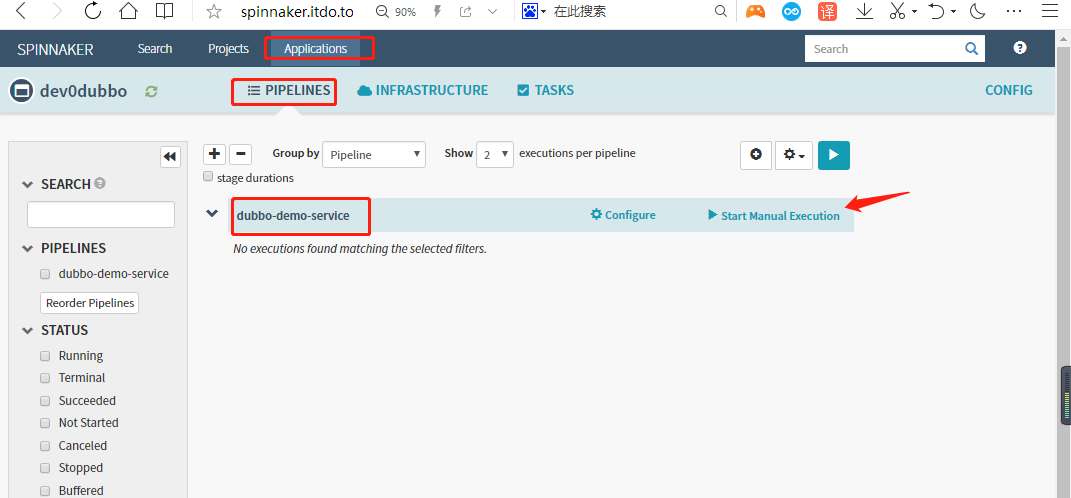

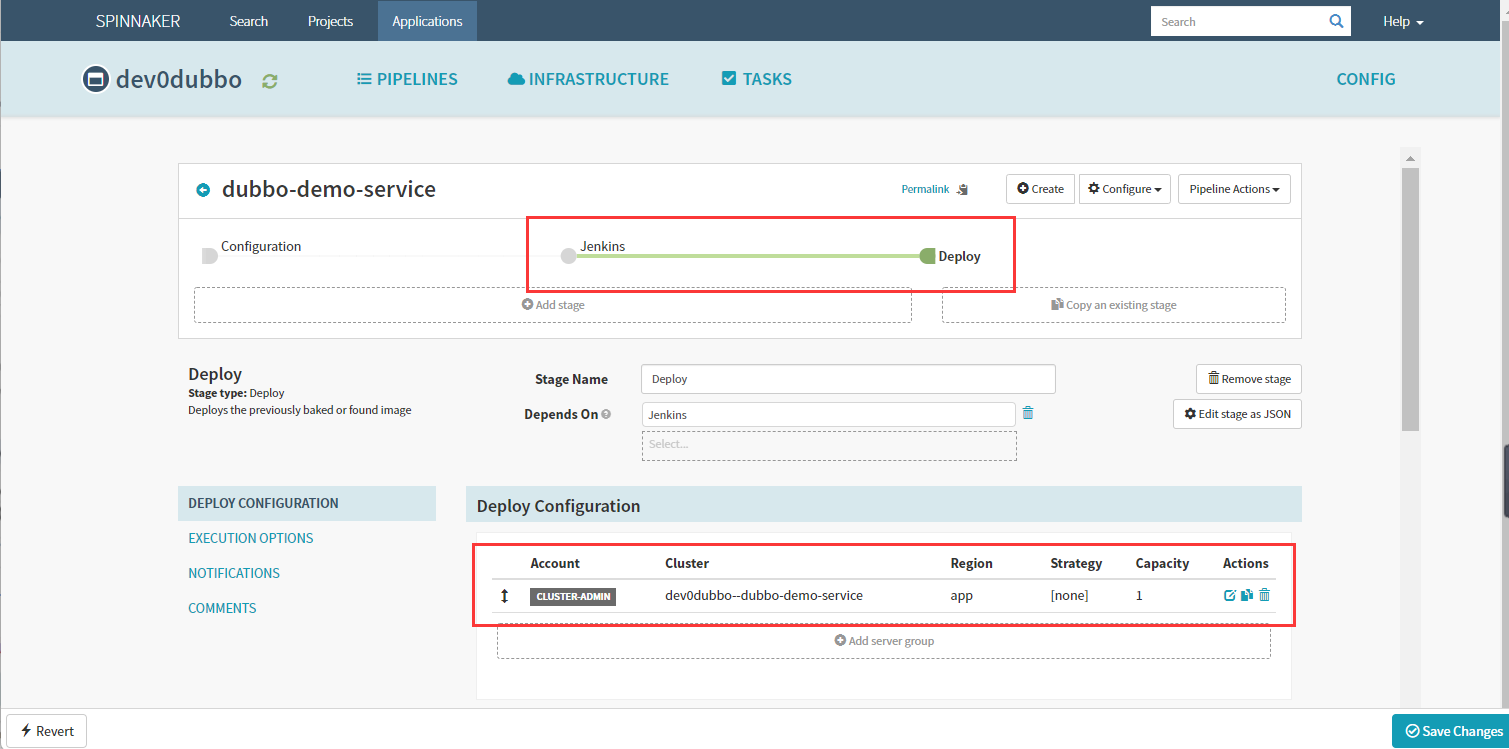

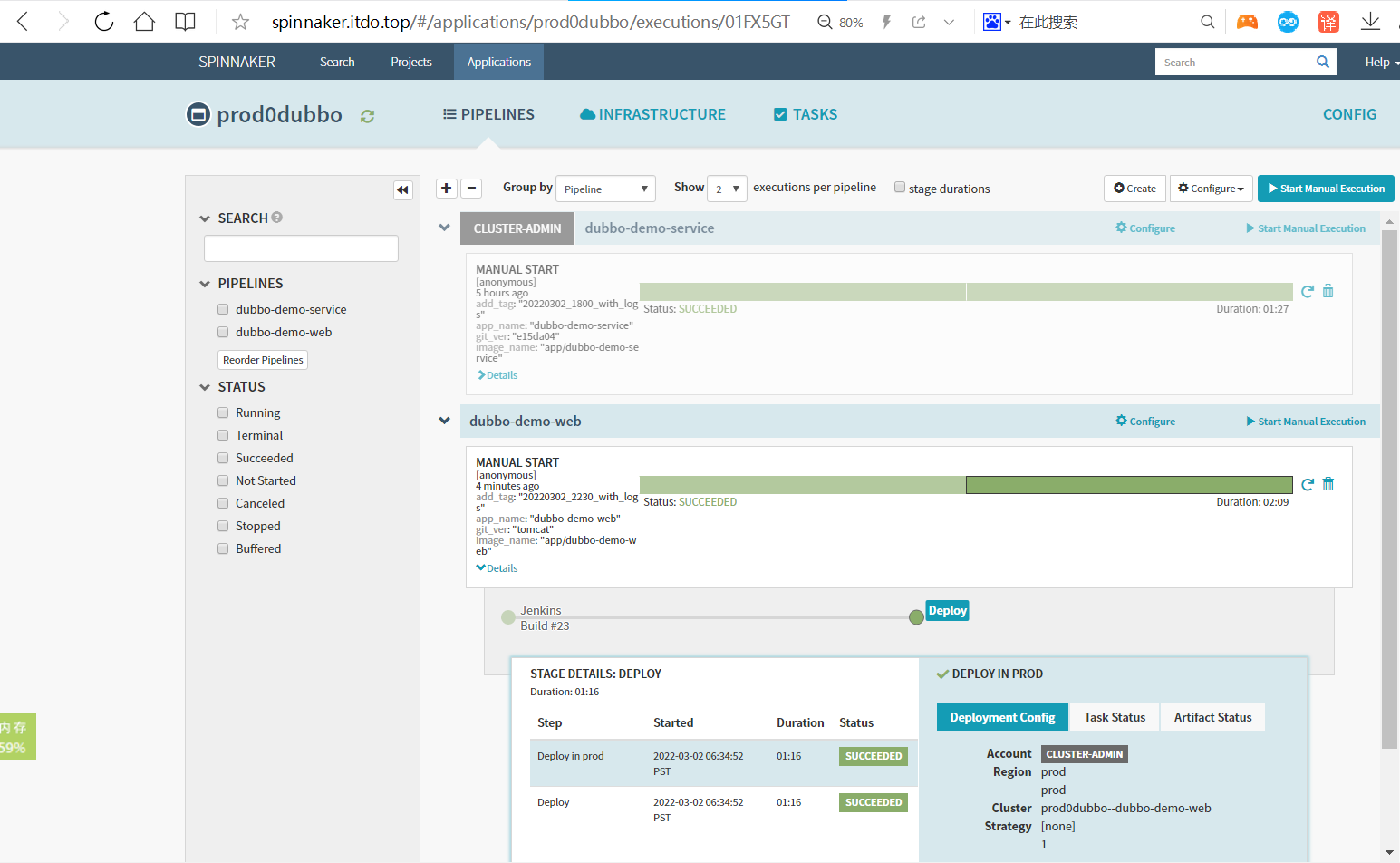

5. Continuous builds with Spinnaker and Jenkins

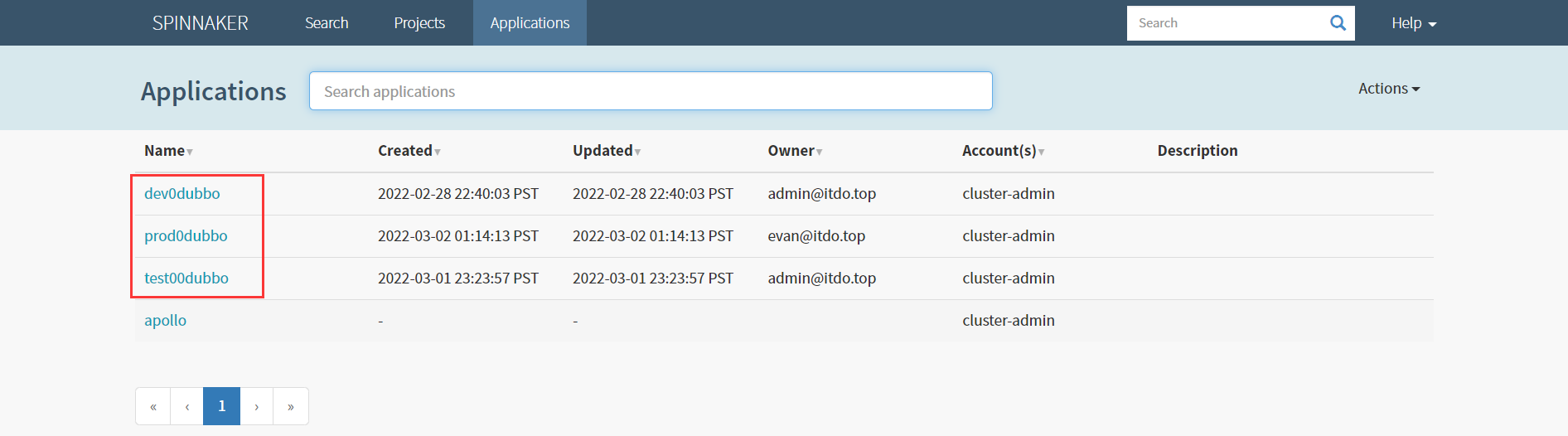

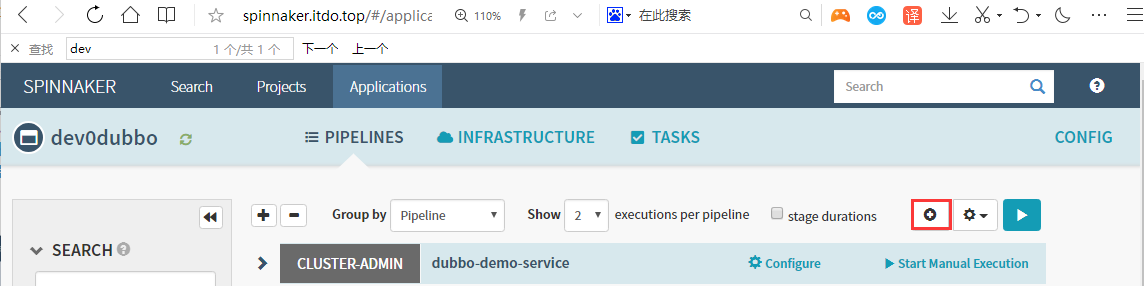

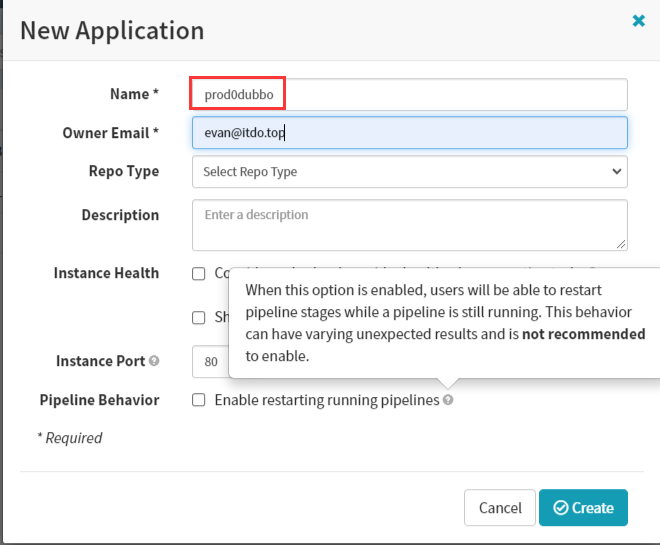

5.1 Create an application in Spinnaker

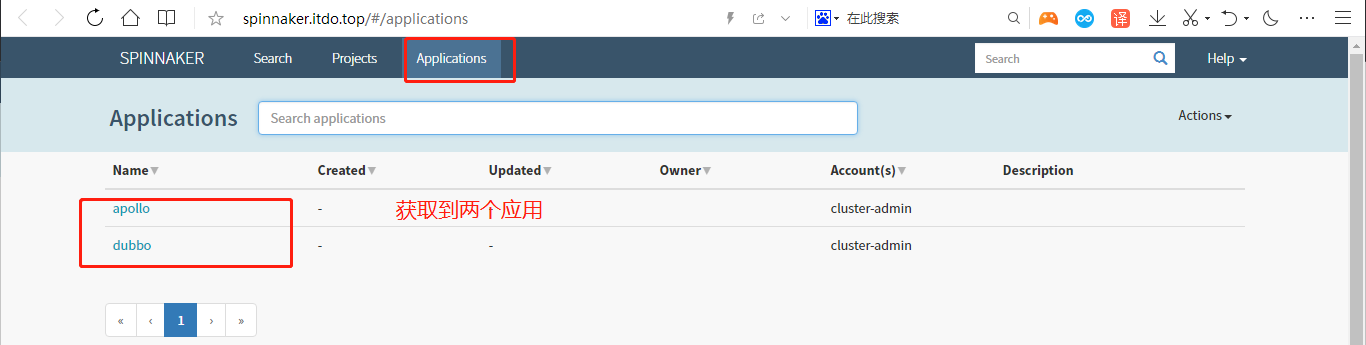

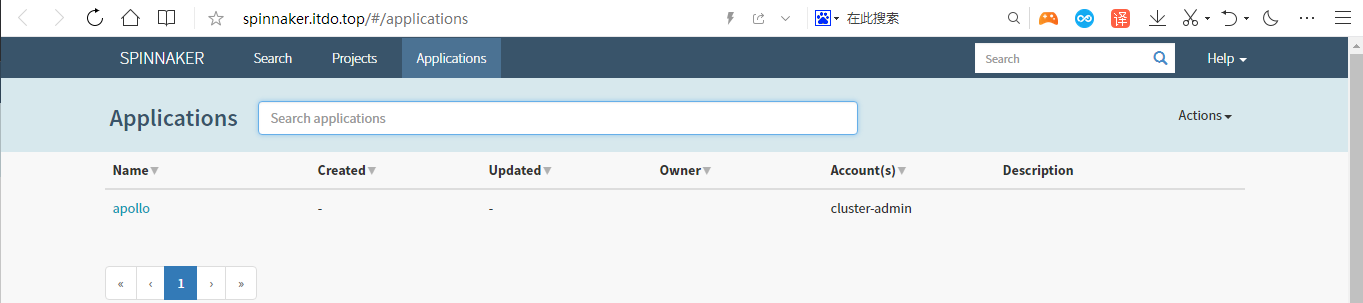

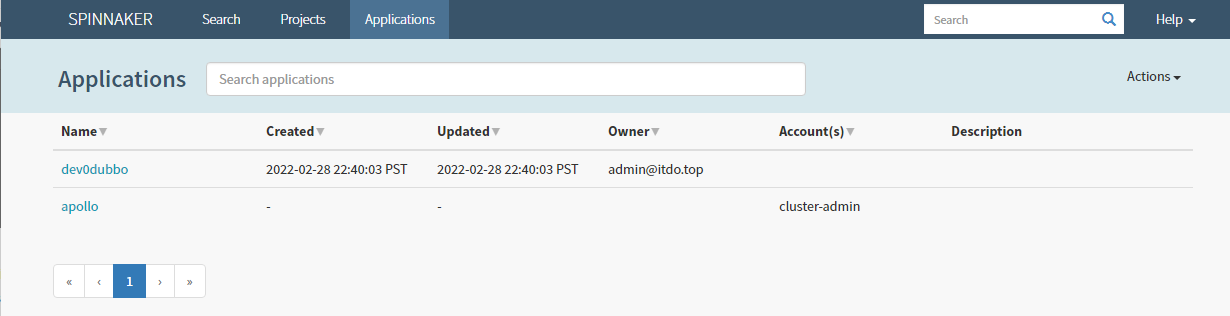

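For convenience, start Apollo in the Test environment and delete the resource manifests of the dubbo-demo provider and consumer services in the dev, test, and prod environments. Then try to run the complete flow, from build to release, through Spinnaker.

Only apollo remains under Applications in Spinnaker.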

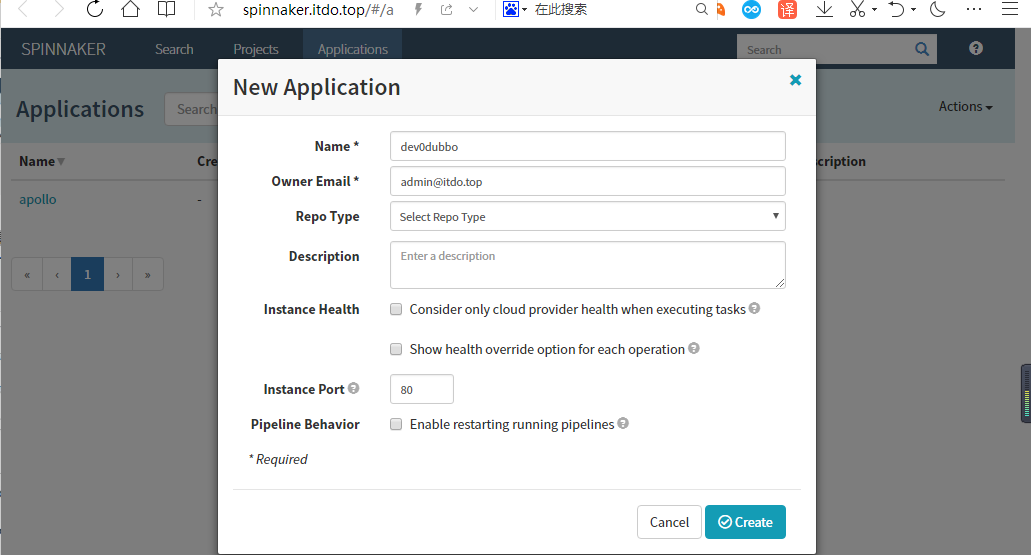

Application–>Actions–>Create Application

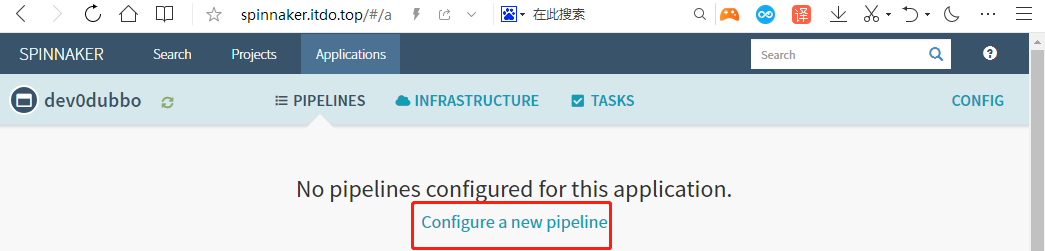

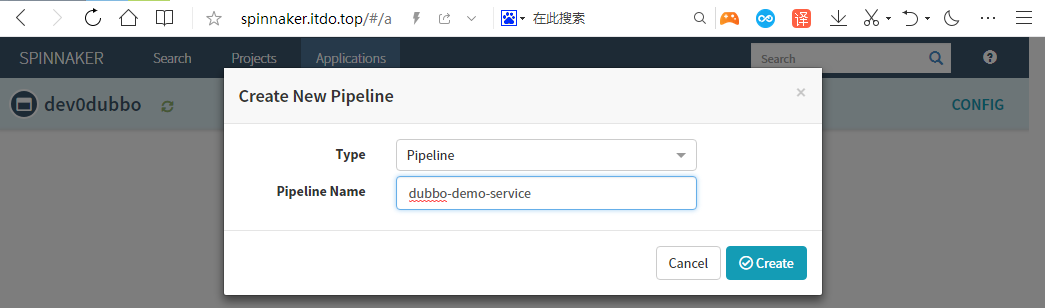

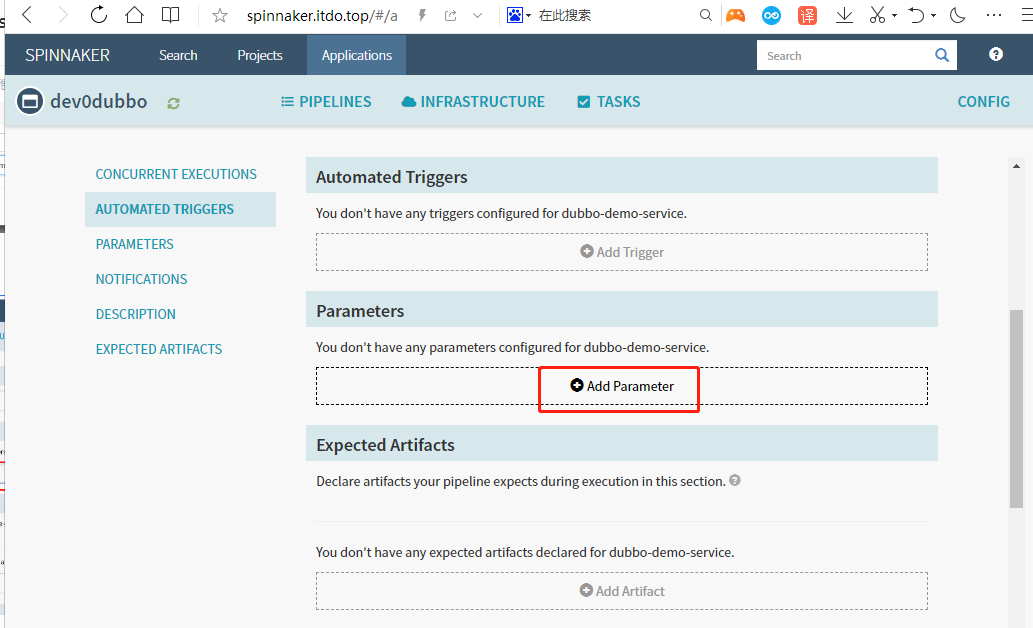

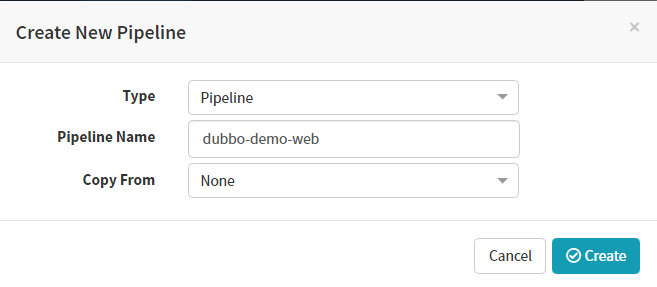

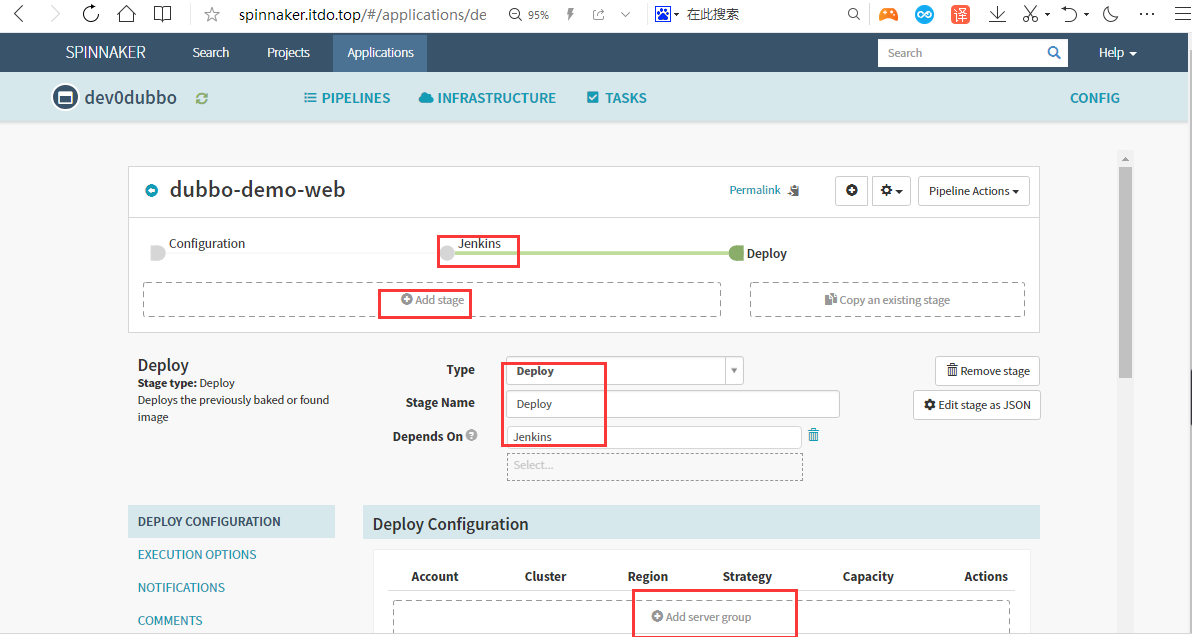

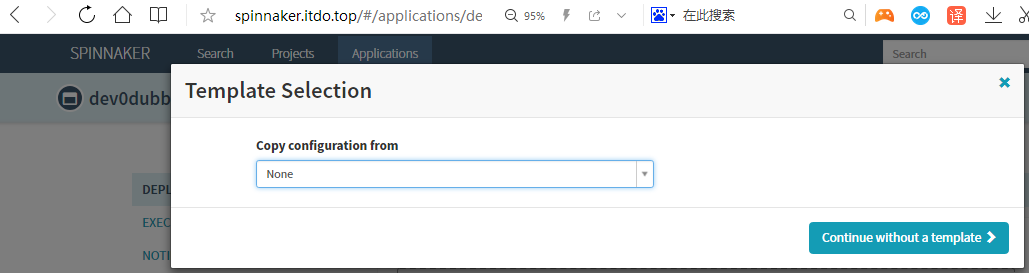

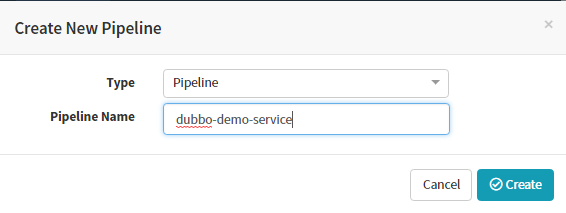

5.2 Create a pipeline

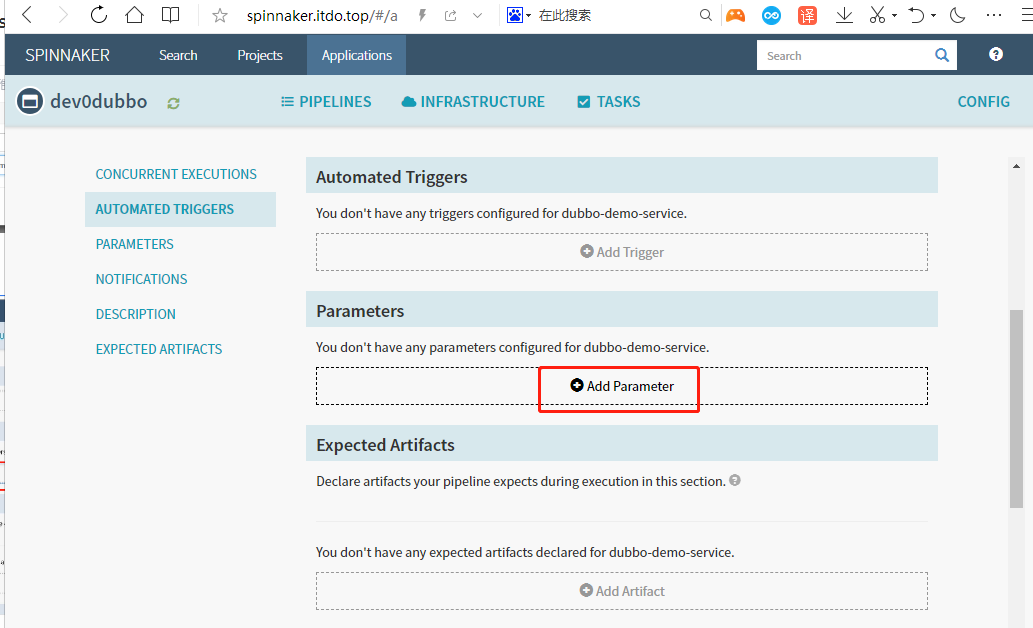

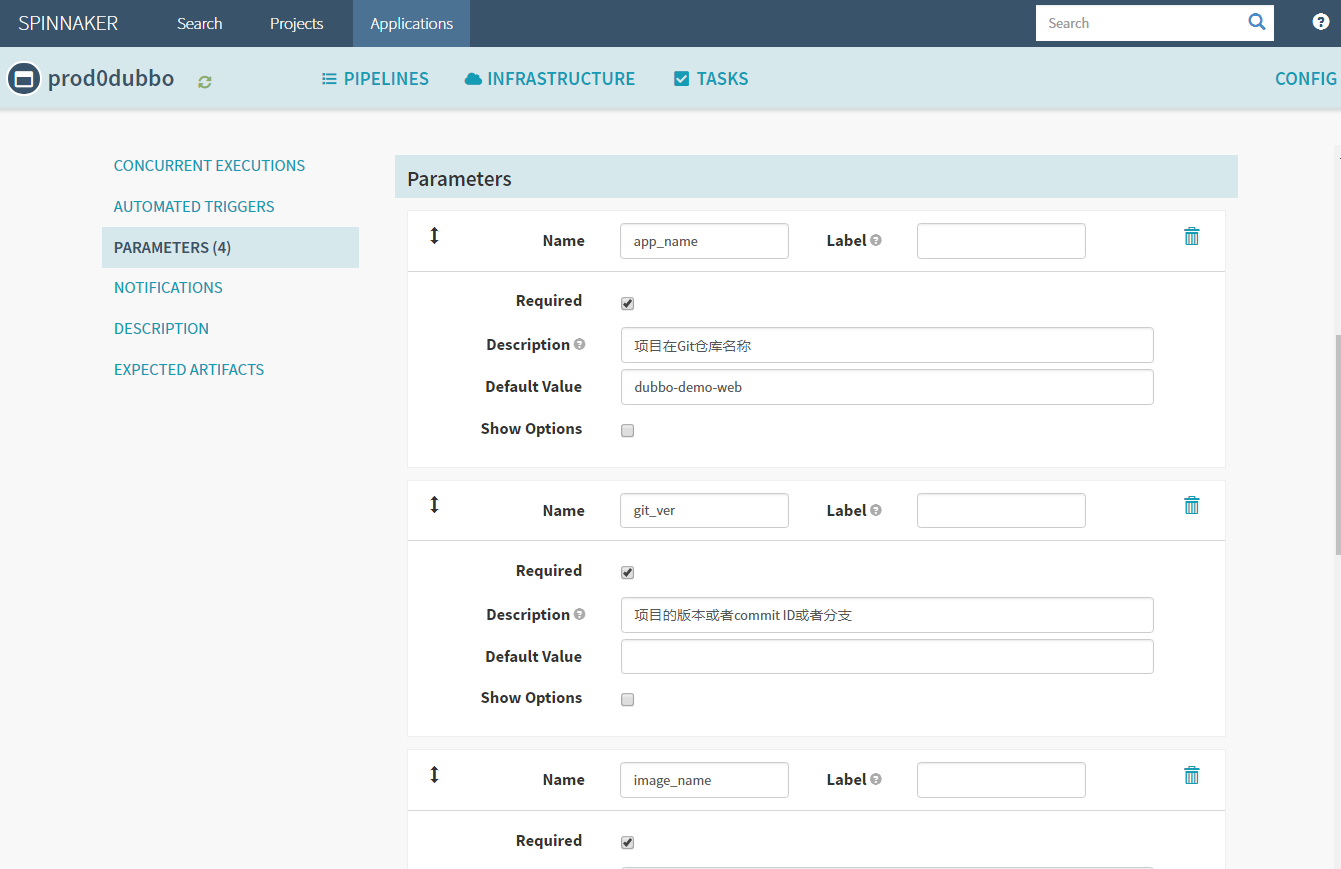

Applications –> dev0dubbo –> Pipelines –> Configure a new pipeline

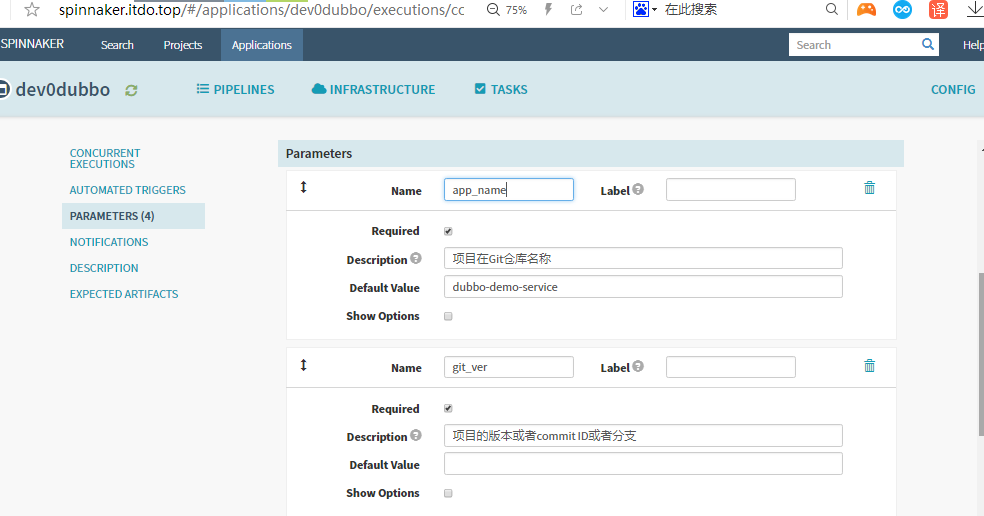

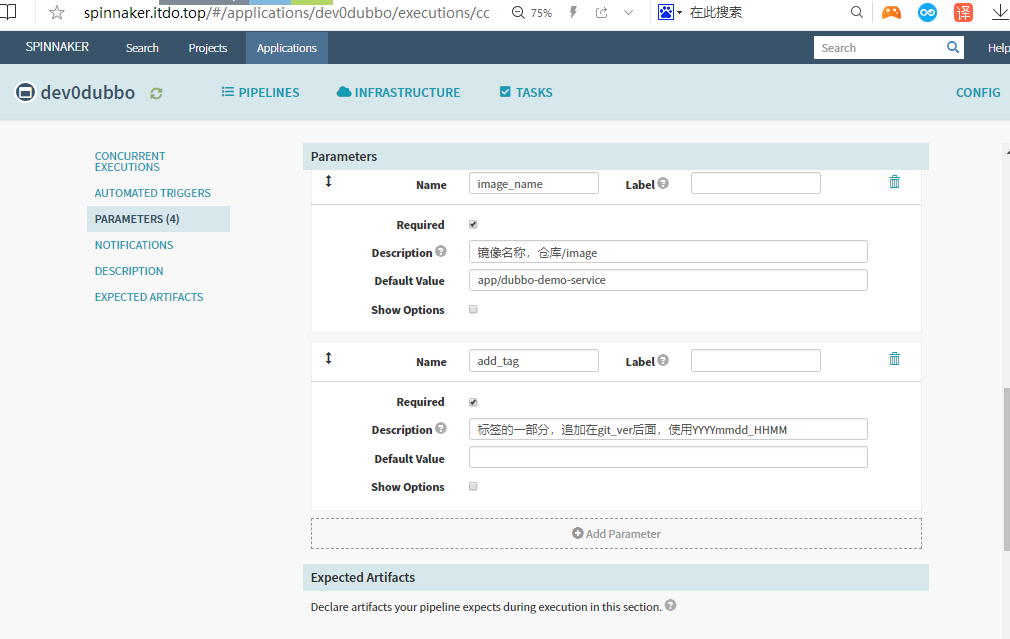

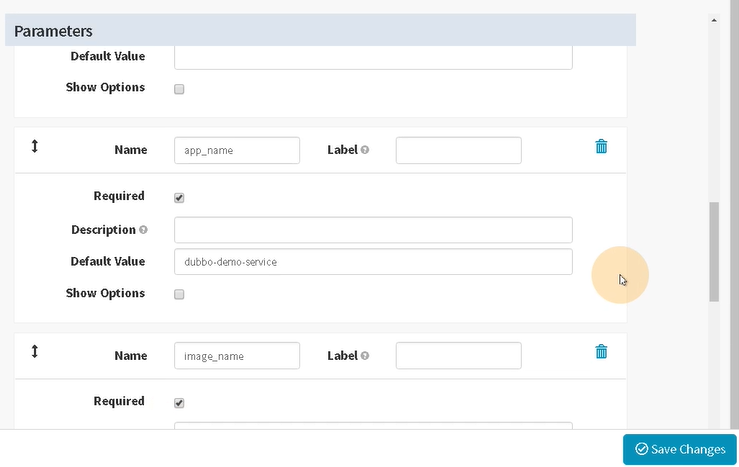

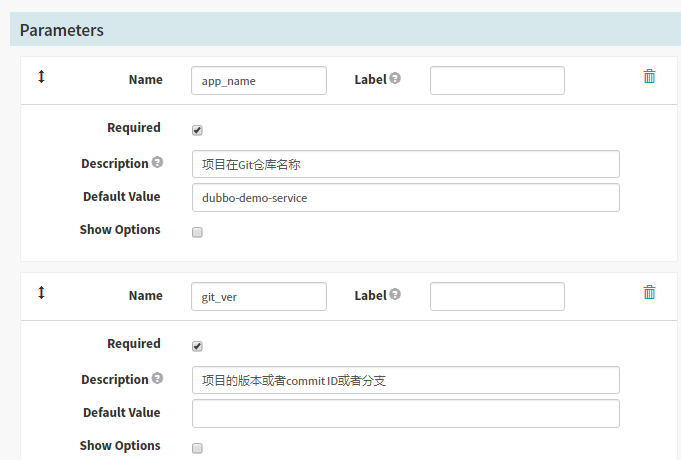

Add Parameter

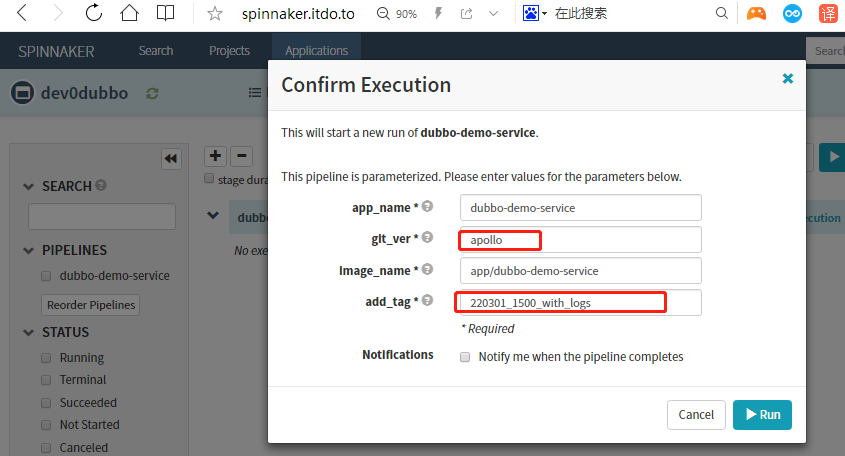

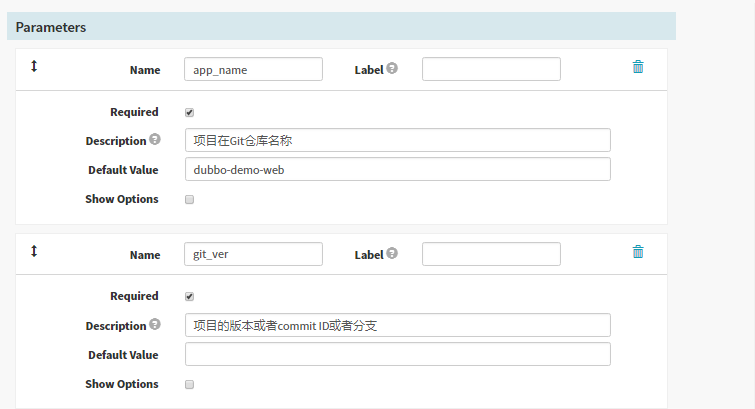

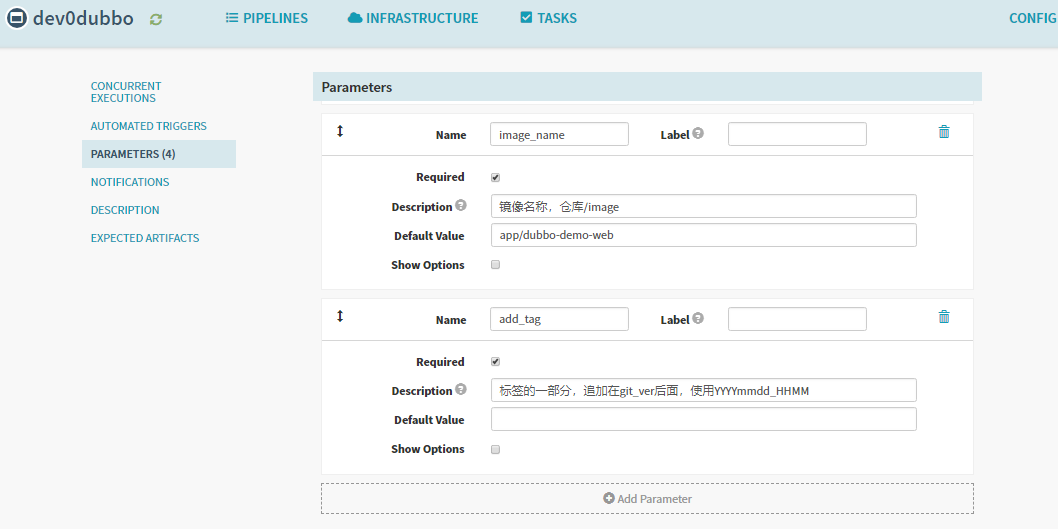

增加以下四个参数

本次编译和发布 dubbo-demo-service,因此默认的项目名称和镜像名称是基本确定的

1. name: app_name

required: true

Default Value : dubbo-demo-service

description: 项目在Git仓库名称

2. name: git_ver

required: true

description: 项目的版本或者commit ID或者分支

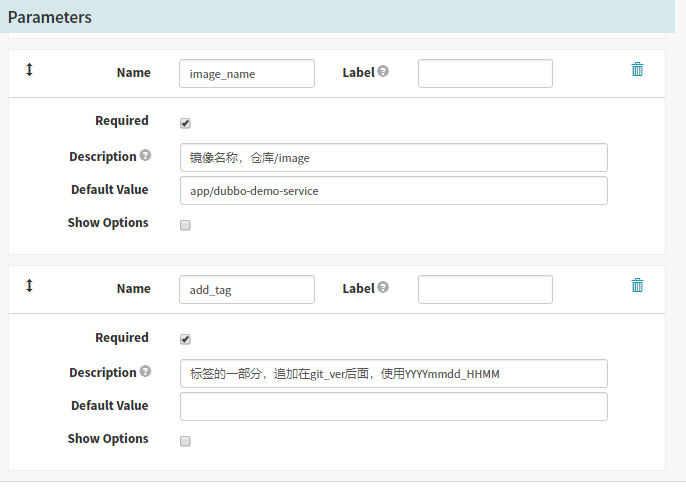

3. image_name

required: true

default: app/dubbo-demo-service

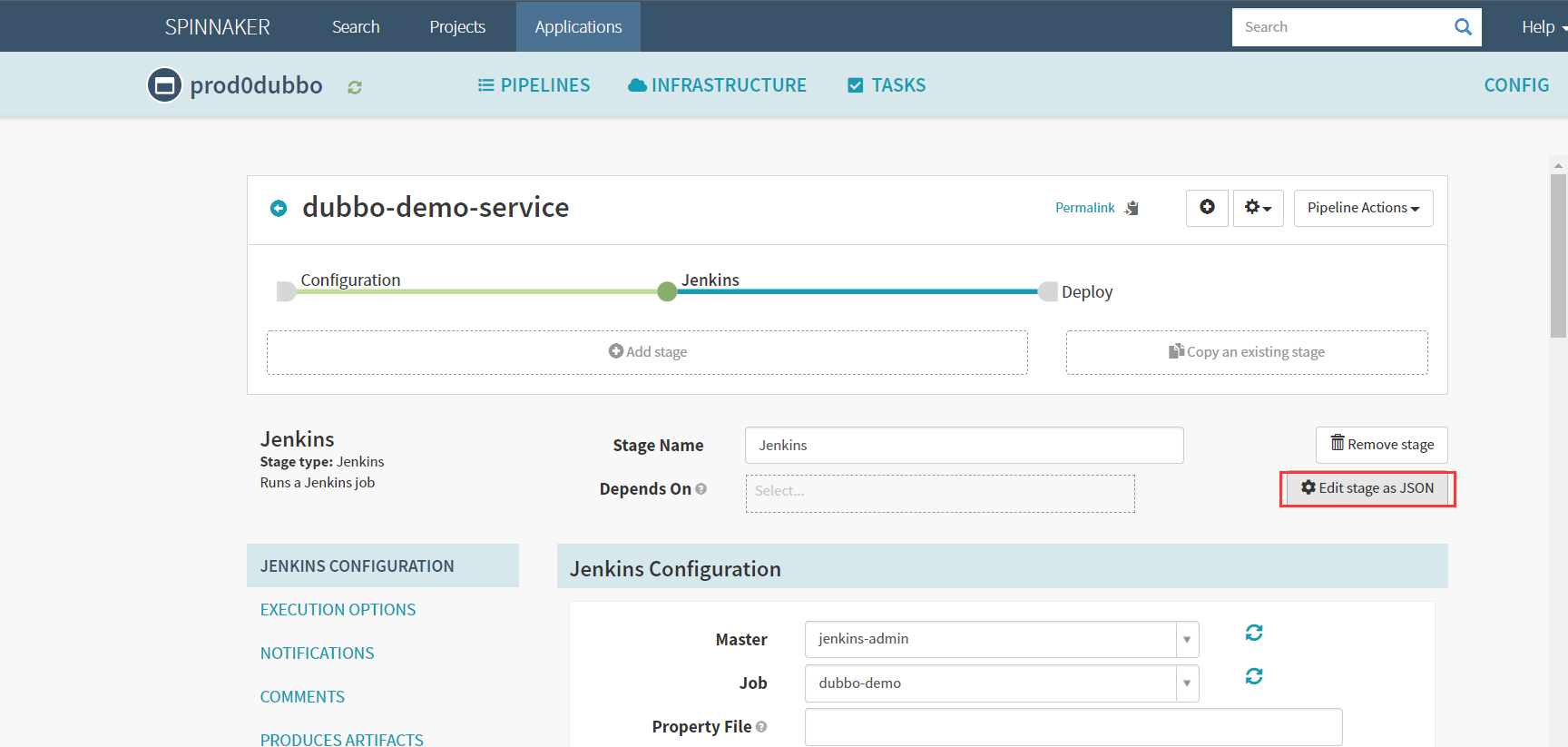

description: 镜像名称,仓库/image

4. name: add_tag

required: true

description: 标签的一部分,追加在git_ver后面,使用YYYYmmdd_HHMM

save保存配置
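The add_tag value is expected in YYYYmmdd_HHMM form. Below is a sketch for generating and sanity-checking it in a shell before starting the pipeline. `make_add_tag` and `valid_add_tag` are hypothetical helpers. The check only validates the digit pattern, not whether the date is real.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the add_tag pipeline parameter.

# Current local time in the YYYYmmdd_HHMM format used for add_tag.
make_add_tag() {
  date +%Y%m%d_%H%M
}

# Succeed if the value has the shape YYYYmmdd_HHMM (8 digits, "_", 4 digits).
valid_add_tag() {
  [[ "$1" =~ ^[0-9]{8}_[0-9]{4}$ ]]
}
```

For example, `valid_add_tag "$(make_add_tag)"` succeeds, while `valid_add_tag 2022-03-01` fails.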

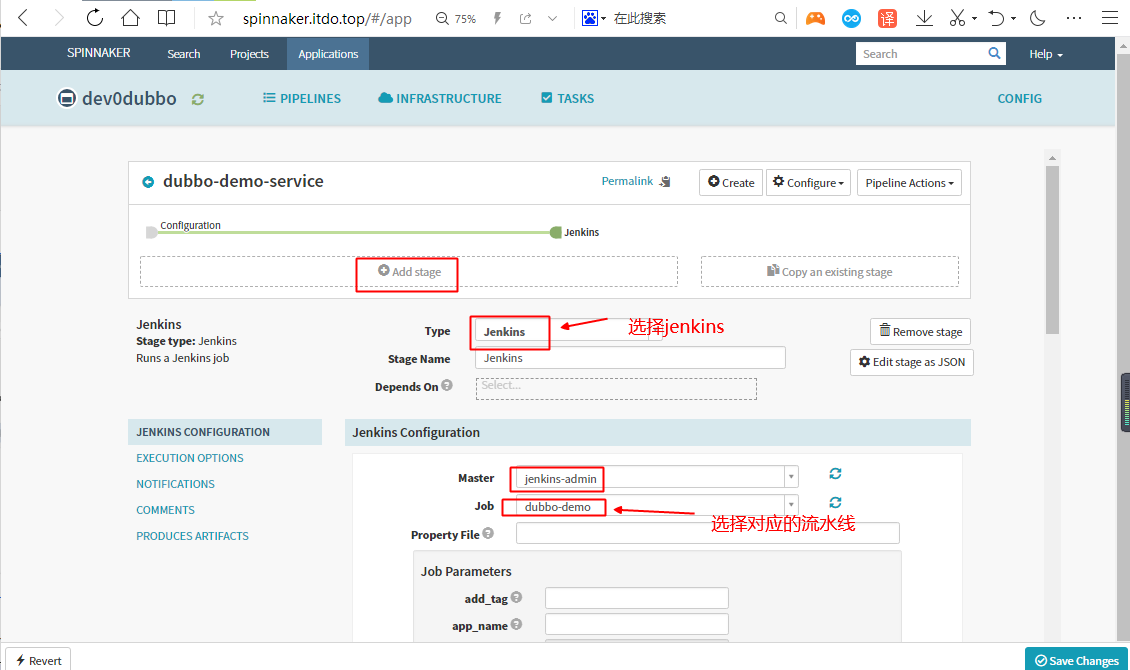

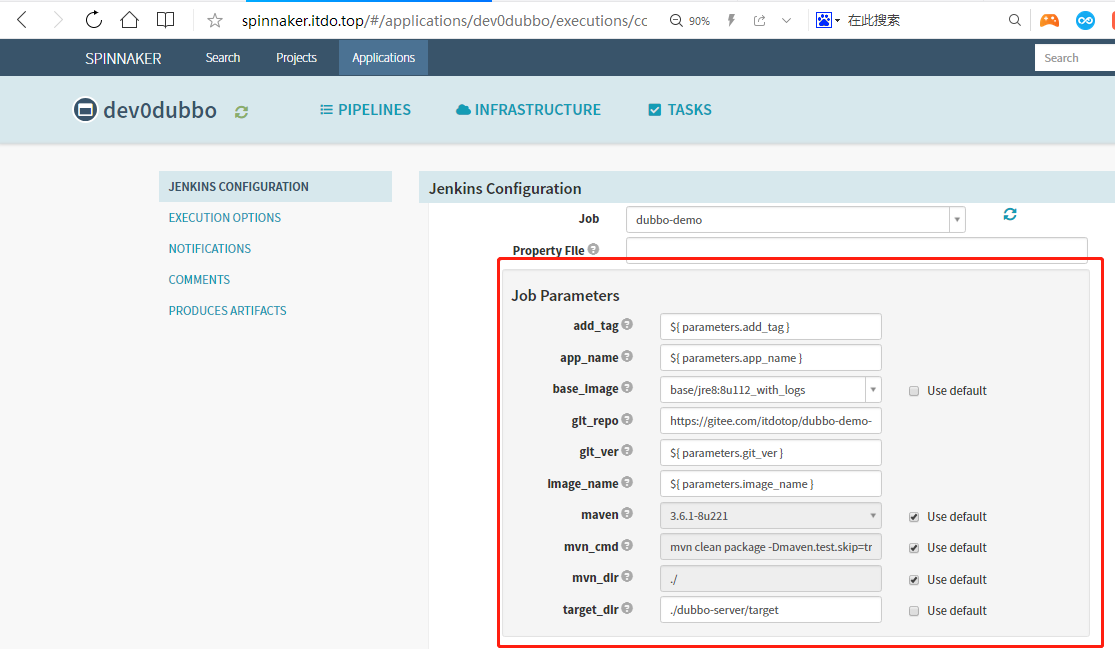

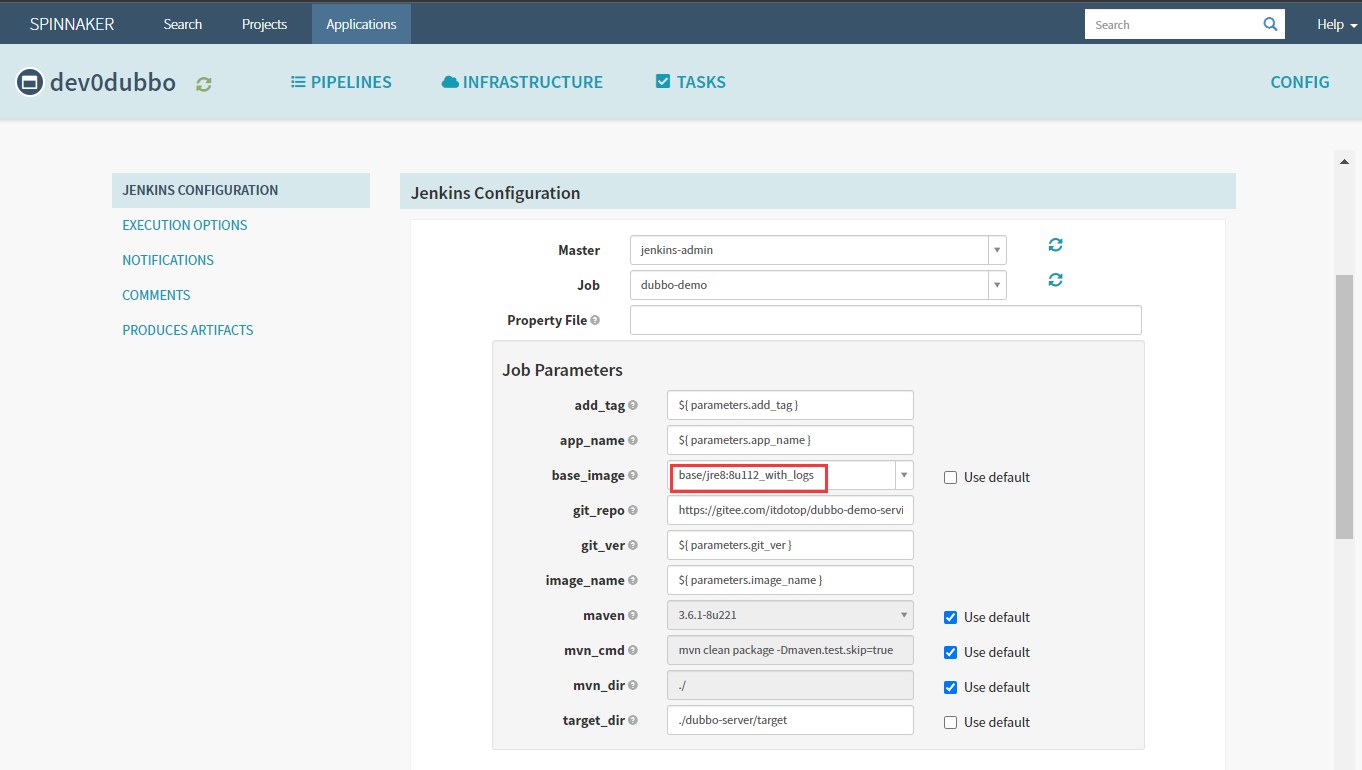

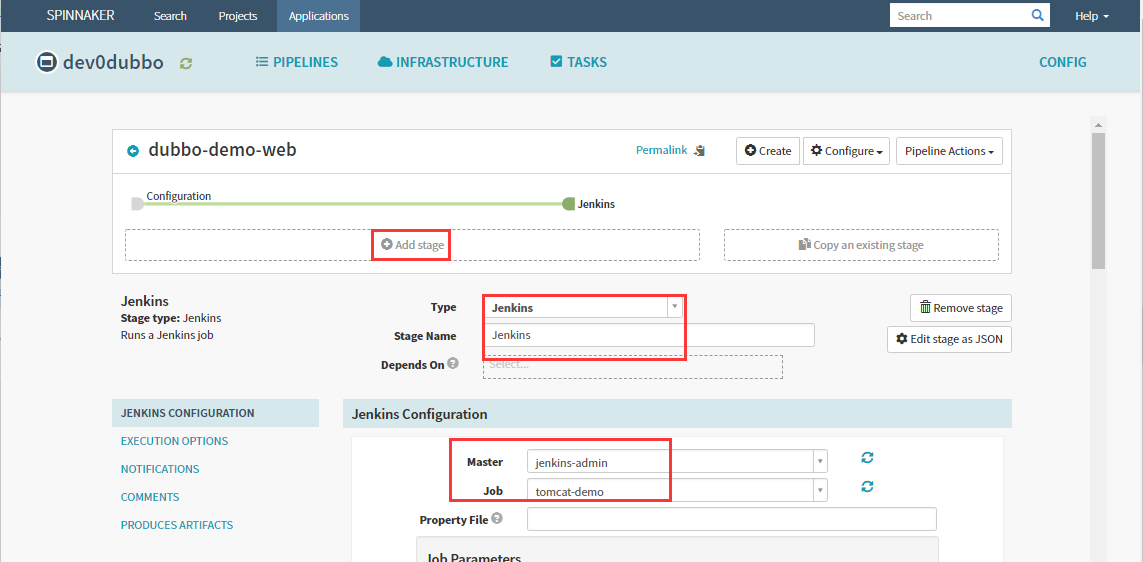

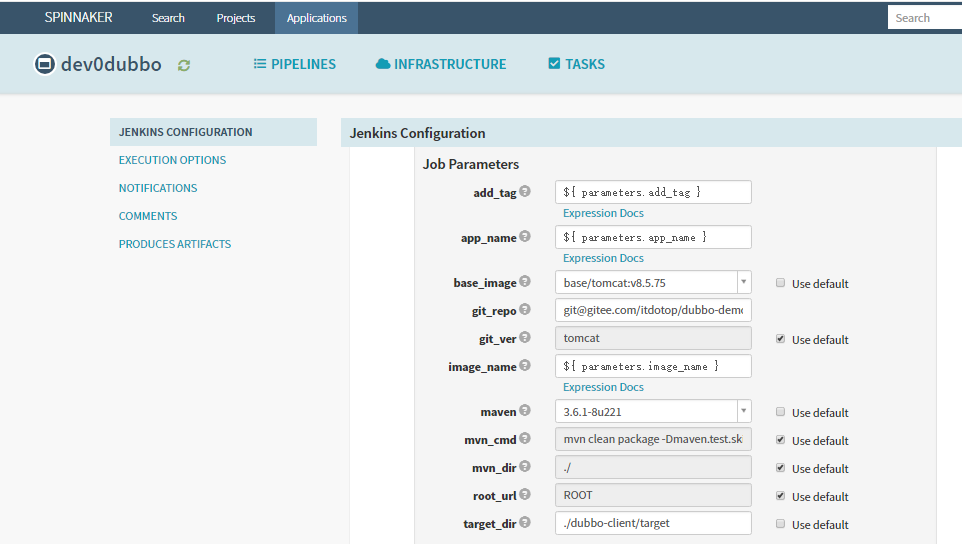

5.3 Add stage: create the Jenkins build

Parameters:

add_tag/app_name/image_name/git_ver take their values from the four parameters configured in the first step:

app_name:${ parameters.app_name }

image_name:${ parameters.image_name }

git_repo:https://gitee.com/itdotop/dubbo-demo-service.git

git_ver:${ parameters.git_ver }

add_tag:${ parameters.add_tag }

mvn_dir:./

target_dir:./dubbo-server/target

mvn_cmd:mvn clean package -Dmaven.test.skip=true

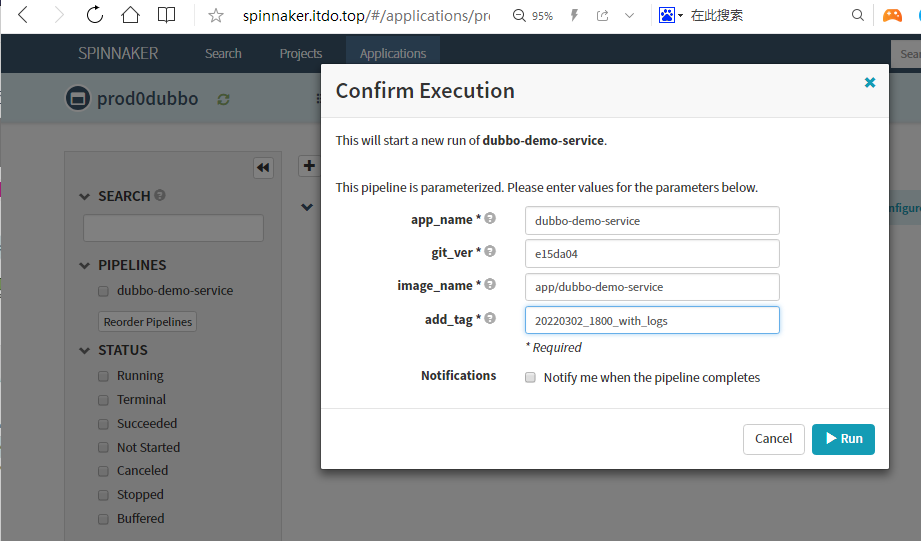

base_image:base/jre8:8u112_with_logs

maven:3.6.1-8u221
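Besides the UI, a pipeline with these parameters can also be started from a script, for example from a Jenkins job. Below is a hedged sketch of doing that through Gate. It makes several assumptions. First, it assumes Gate's `POST /pipelines/{application}/{pipelineNameOrId}` endpoint, which exists in upstream Gate and should be checked against the 1.8.x image used here. Second, it assumes there is no authentication in front of Gate. Third, it assumes Gate is reachable in-cluster as http://armory-gate:8084. The pipeline name is a placeholder. `trigger_payload` and `trigger_pipeline` are hypothetical helpers, and they do no JSON escaping, so the values must be plain strings.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: start a Spinnaker pipeline through Gate's REST API.
GATE_URL="${GATE_URL:-http://armory-gate:8084}"

# JSON body for a manual trigger carrying the four pipeline parameters.
# Usage: trigger_payload APP_NAME IMAGE_NAME GIT_VER ADD_TAG
trigger_payload() {
  printf '{"type":"manual","parameters":{"app_name":"%s","image_name":"%s","git_ver":"%s","add_tag":"%s"}}\n' \
    "$1" "$2" "$3" "$4"
}

# POST the body to /pipelines/APPLICATION/PIPELINE; DRY_RUN=1 prints the curl command.
# Usage: trigger_pipeline APPLICATION PIPELINE JSON_BODY
trigger_pipeline() {
  local app="$1" pipeline="$2" body="$3" run=""
  [ "${DRY_RUN:-0}" = "1" ] && run="echo"
  $run curl -s -X POST -H 'Content-Type: application/json' \
    -d "$body" "$GATE_URL/pipelines/$app/$pipeline"
}
```

A possible call is `trigger_pipeline dev0dubbo <pipeline-name> "$(trigger_payload dubbo-demo-service app/dubbo-demo-service master "$(date +%Y%m%d_%H%M)")"`.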

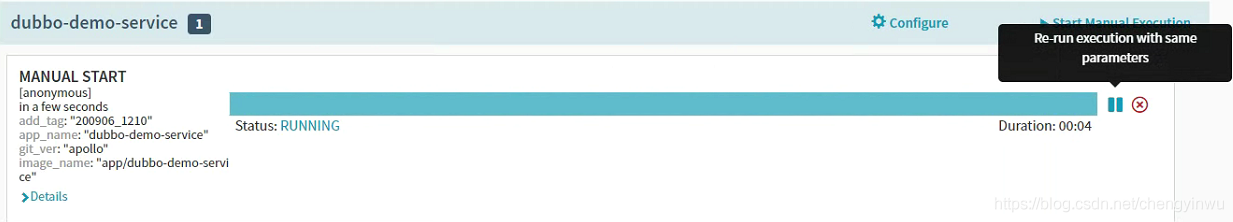

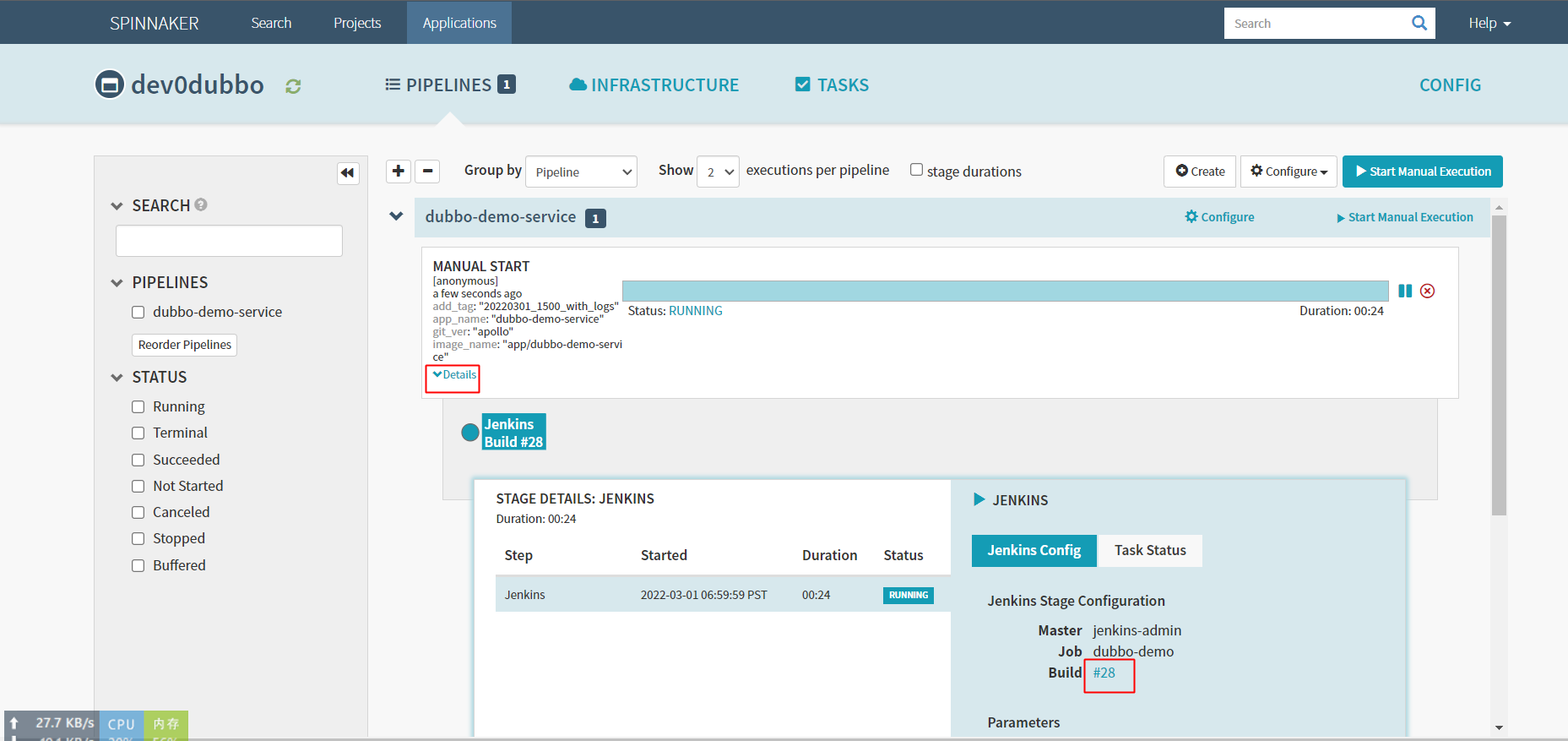

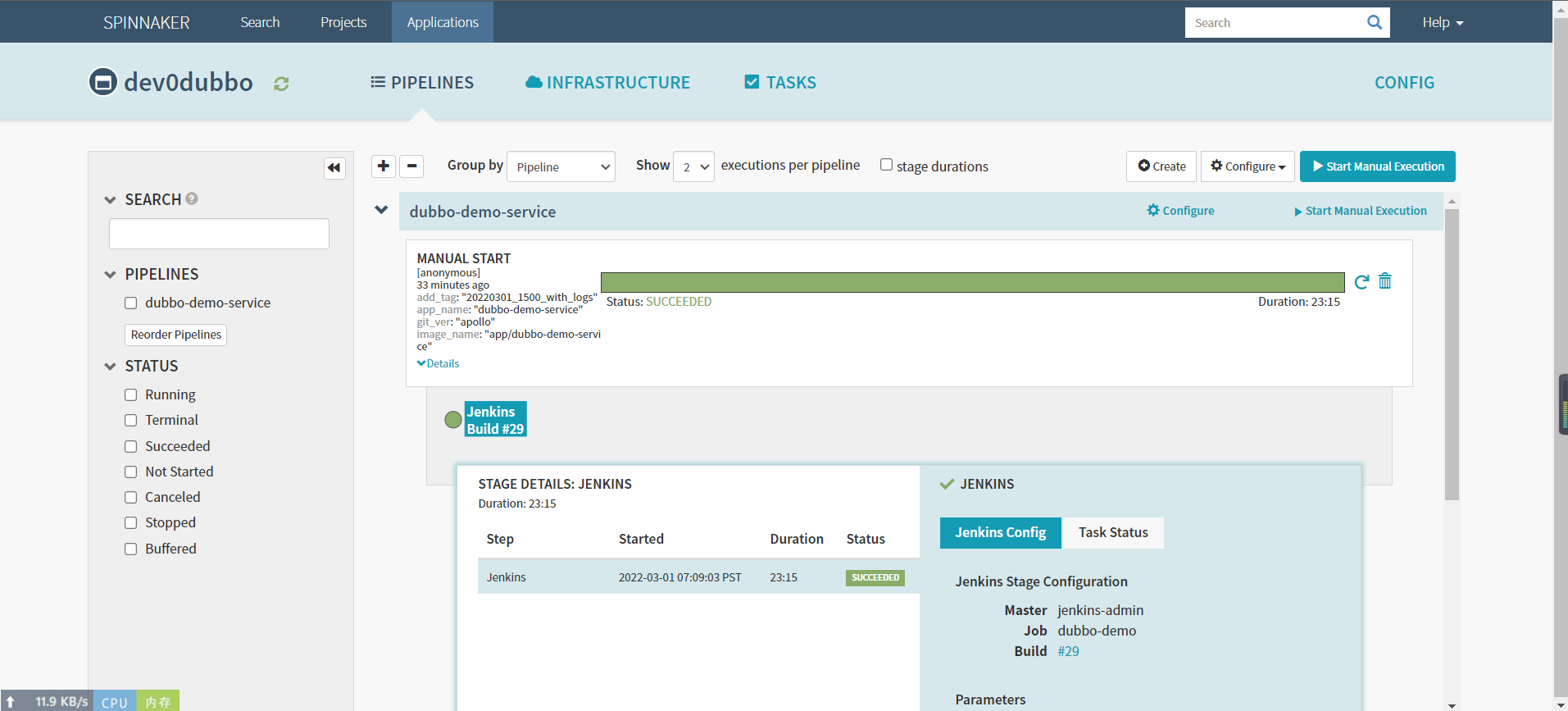

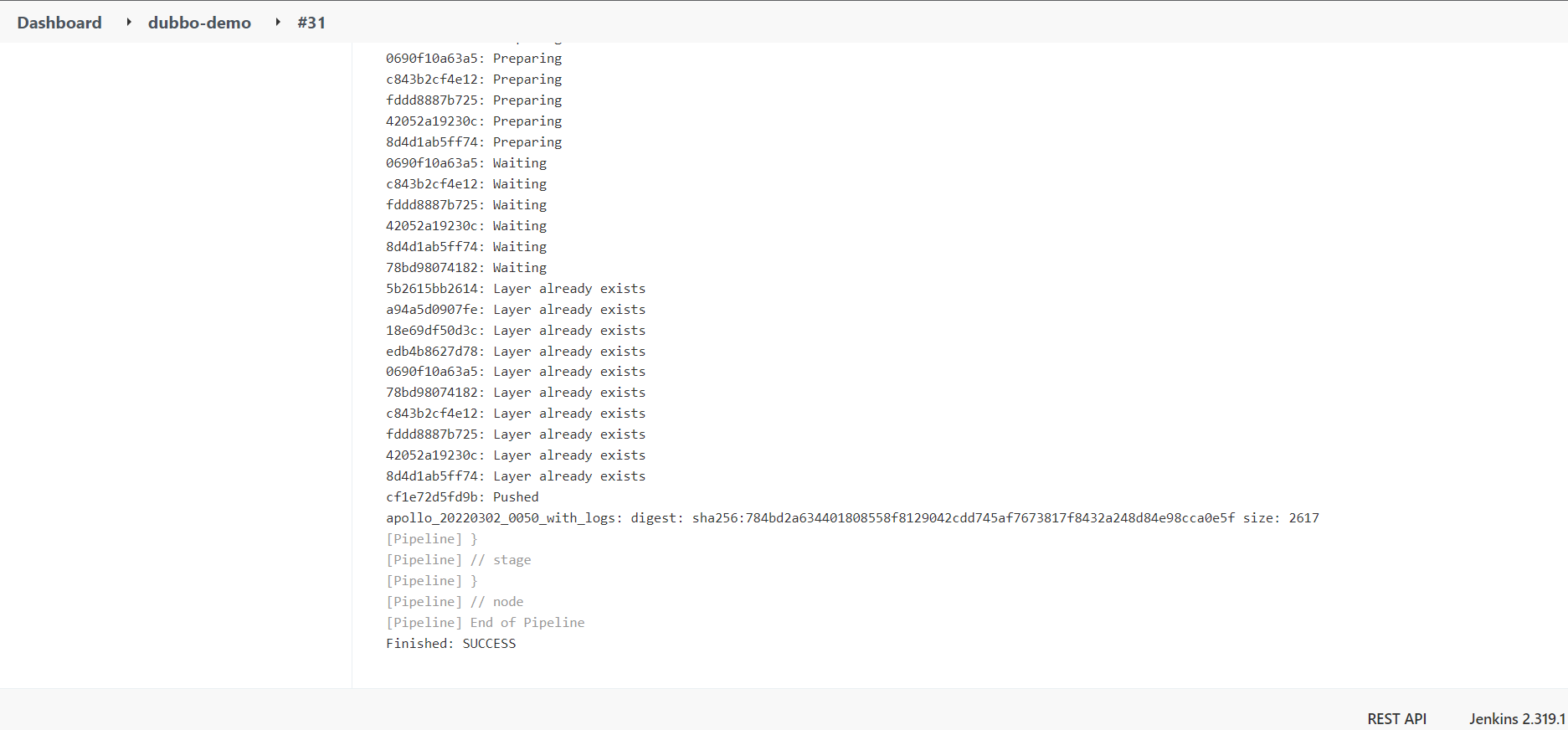

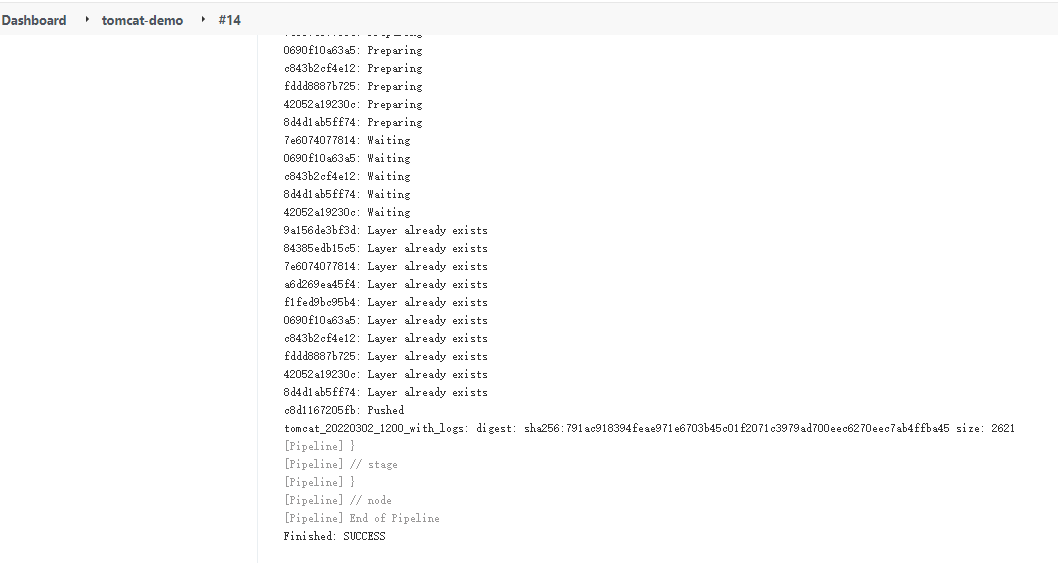

Run the pipeline

Enter git_ver and add_tag, then click Run

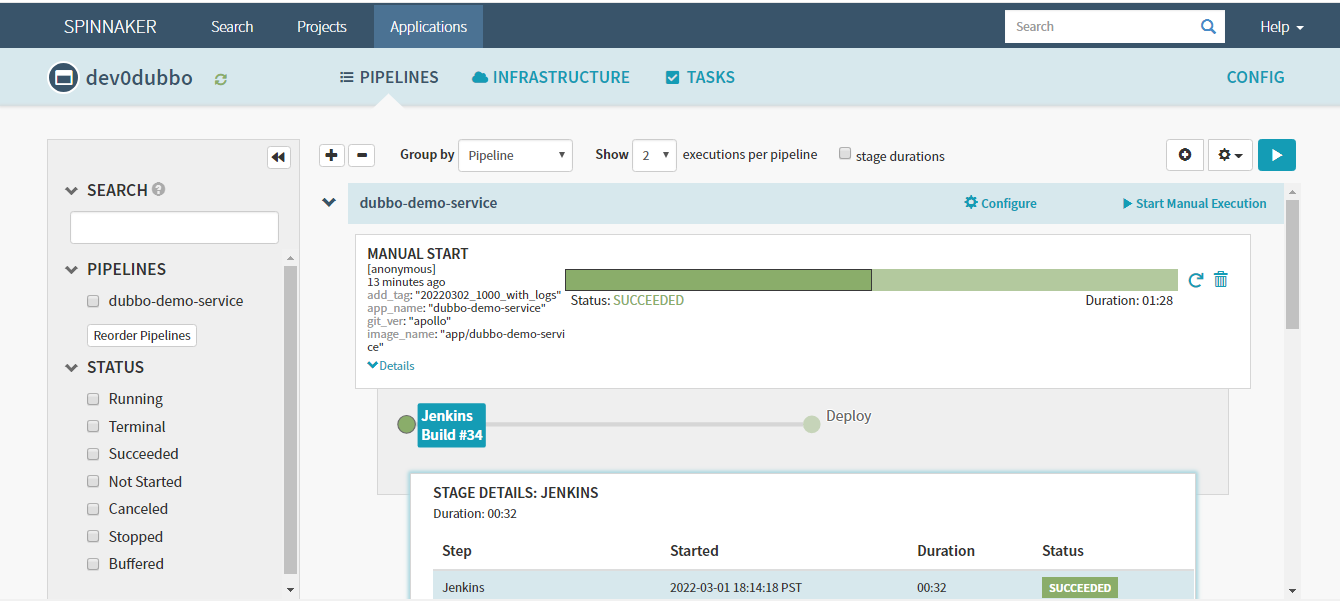

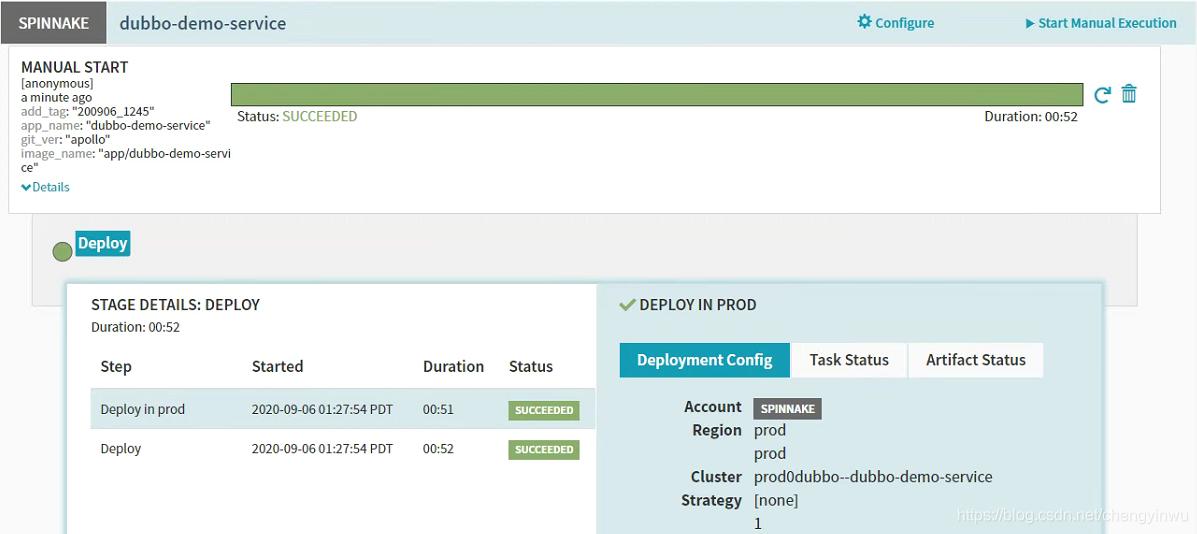

Build in progress

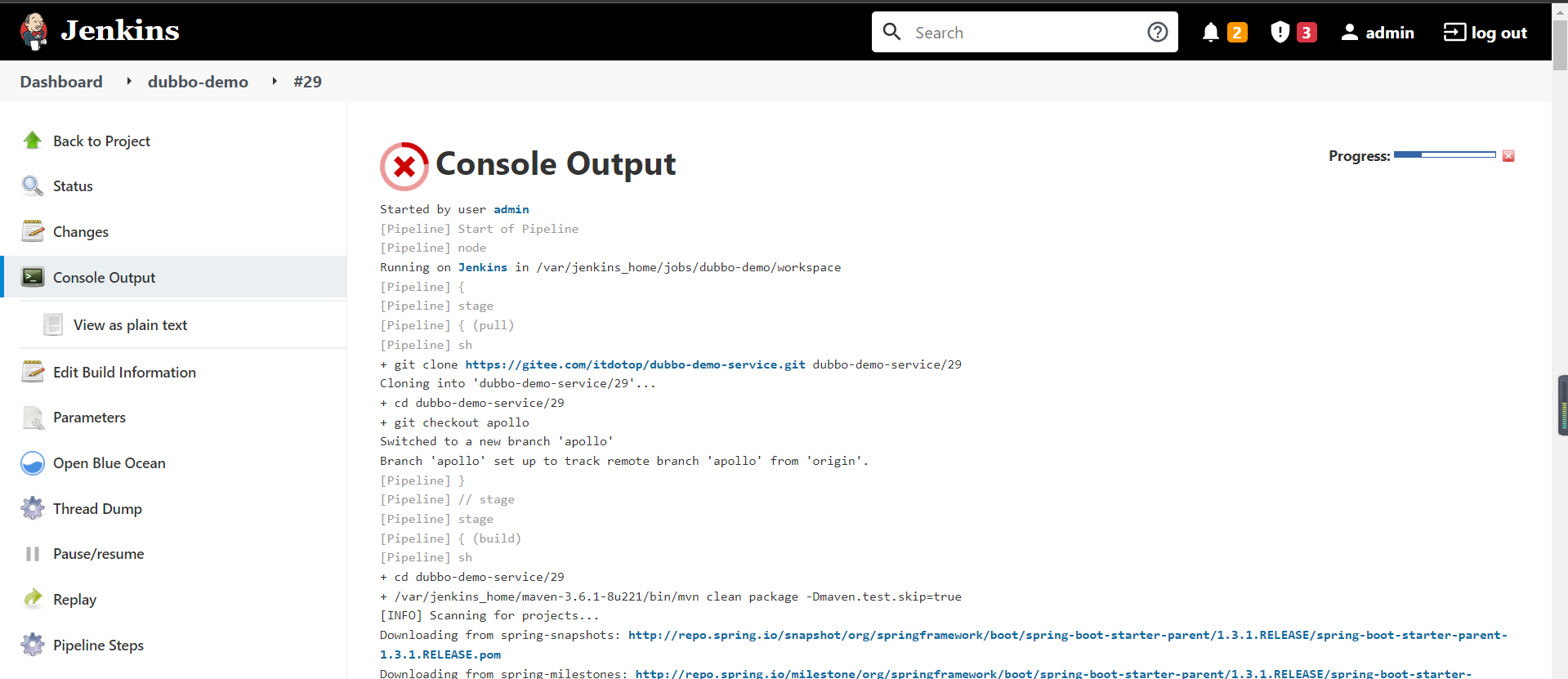

Click the build number (#28 here) to jump directly to the build in Jenkins.

Build complete

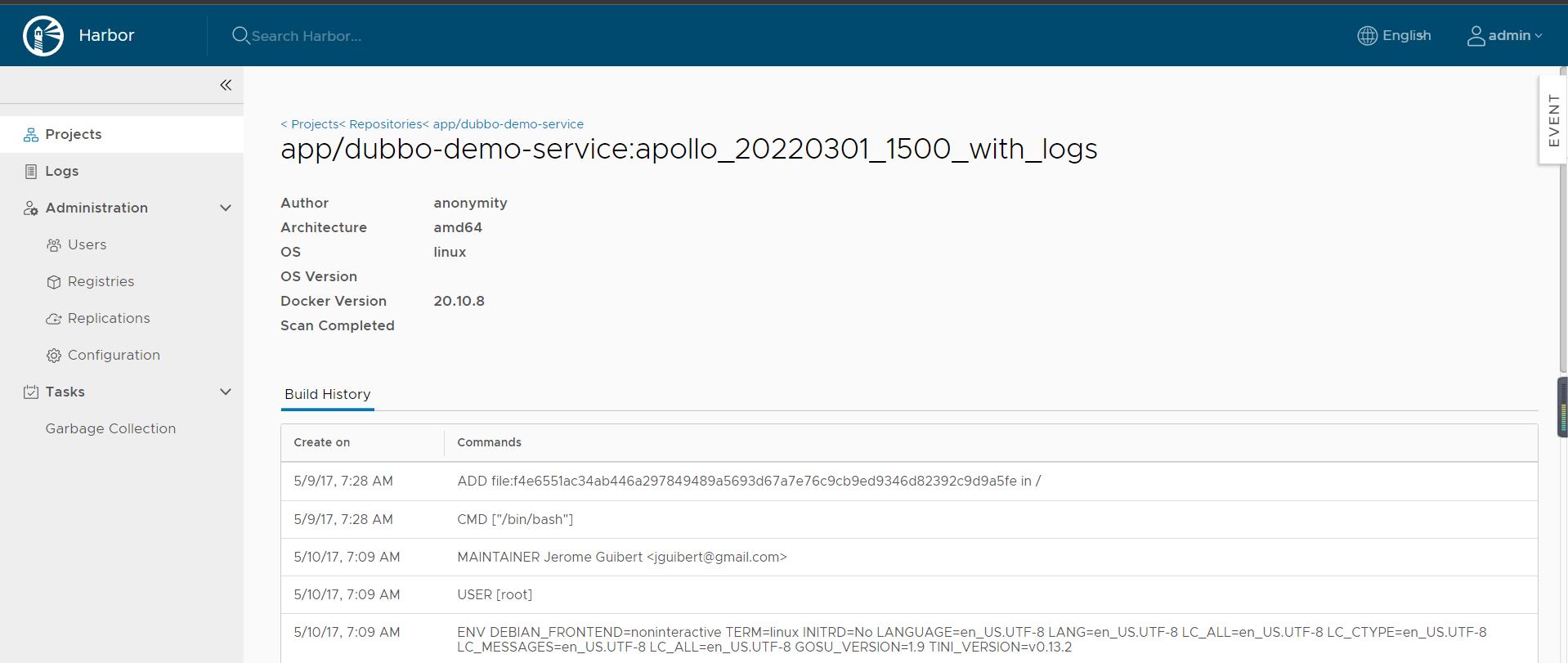

Check the image produced in Harbor

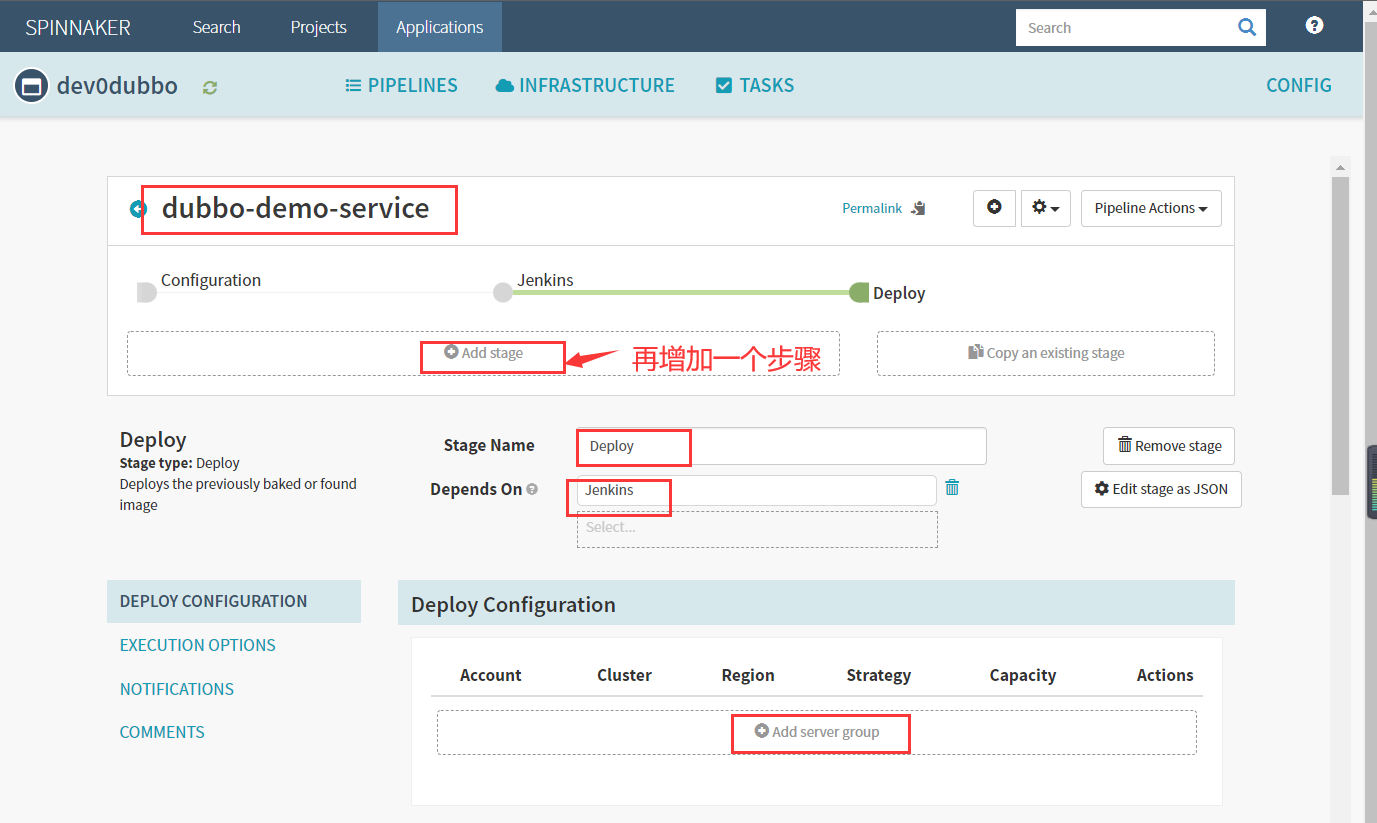

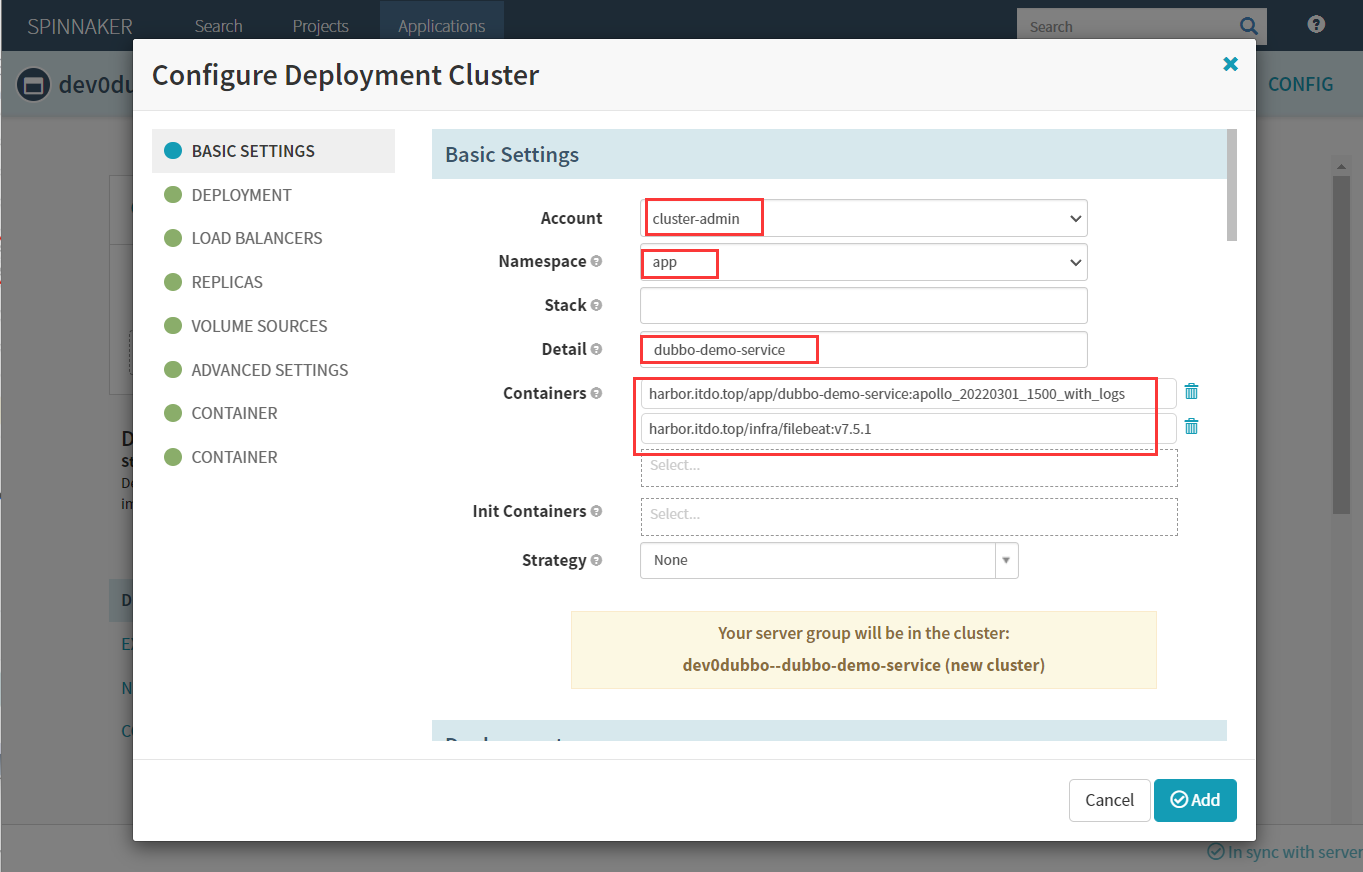

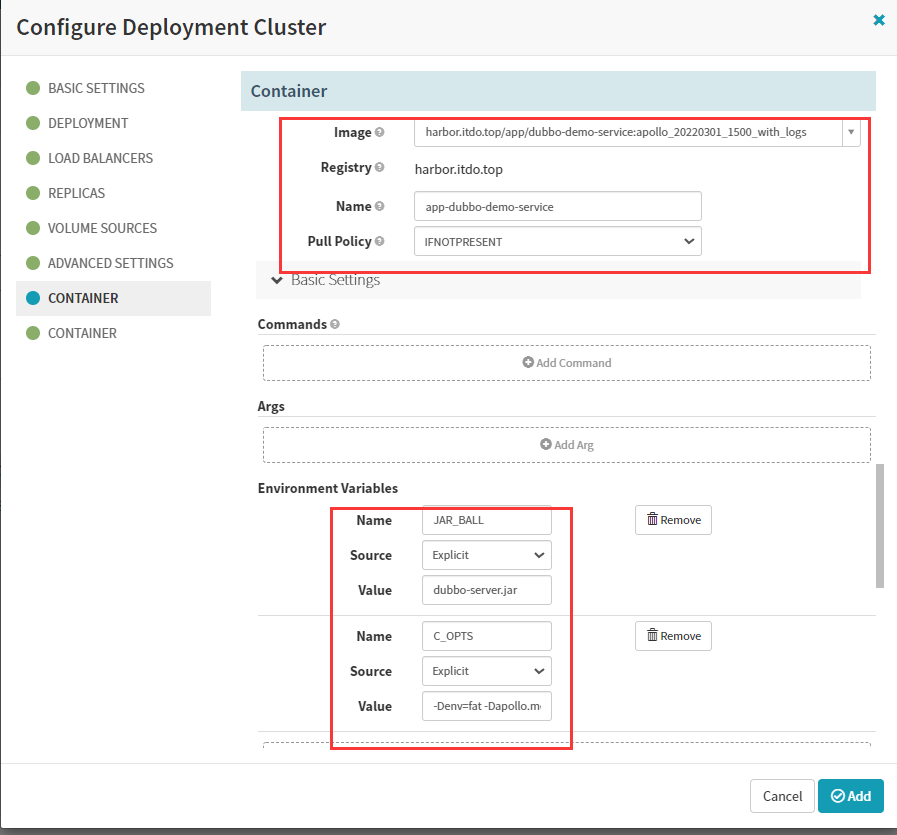

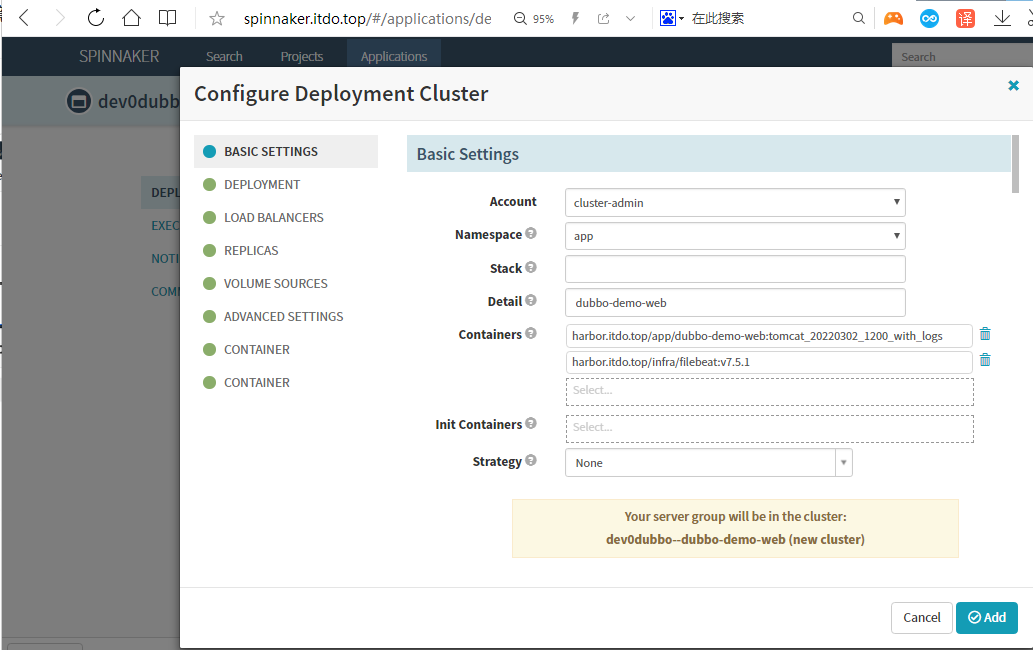

6. Using Spinnaker to deploy the Dubbo service provider to K8S

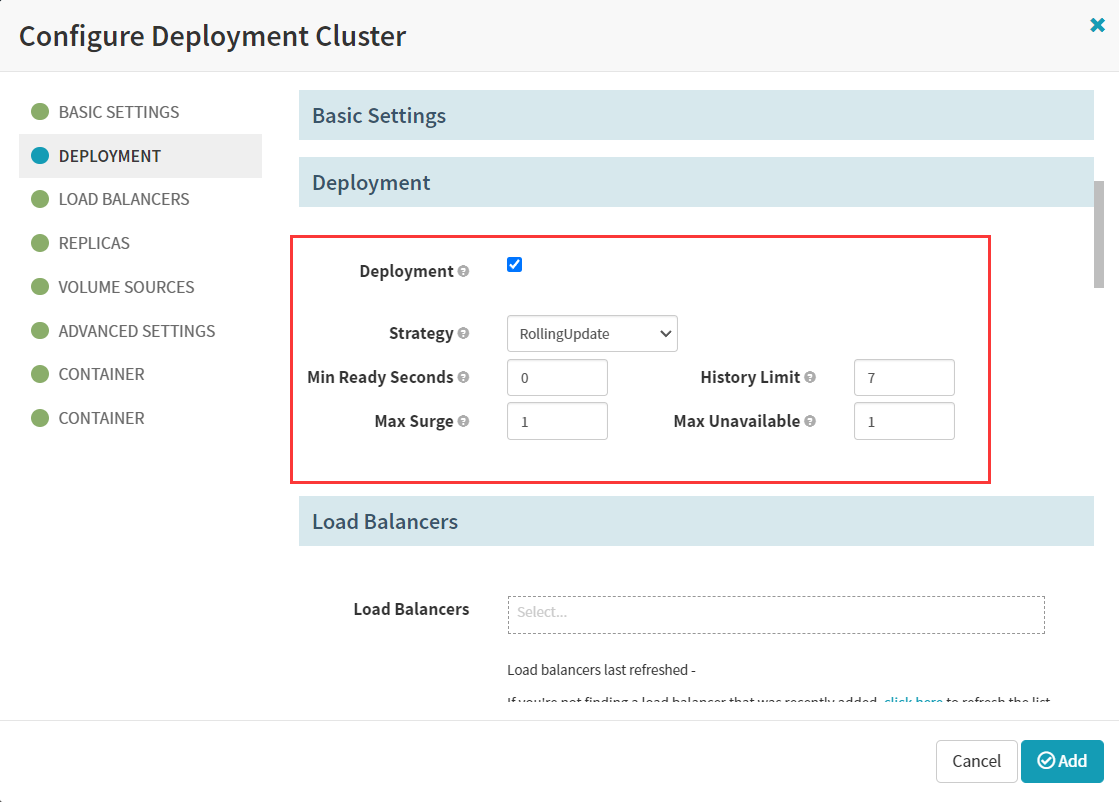

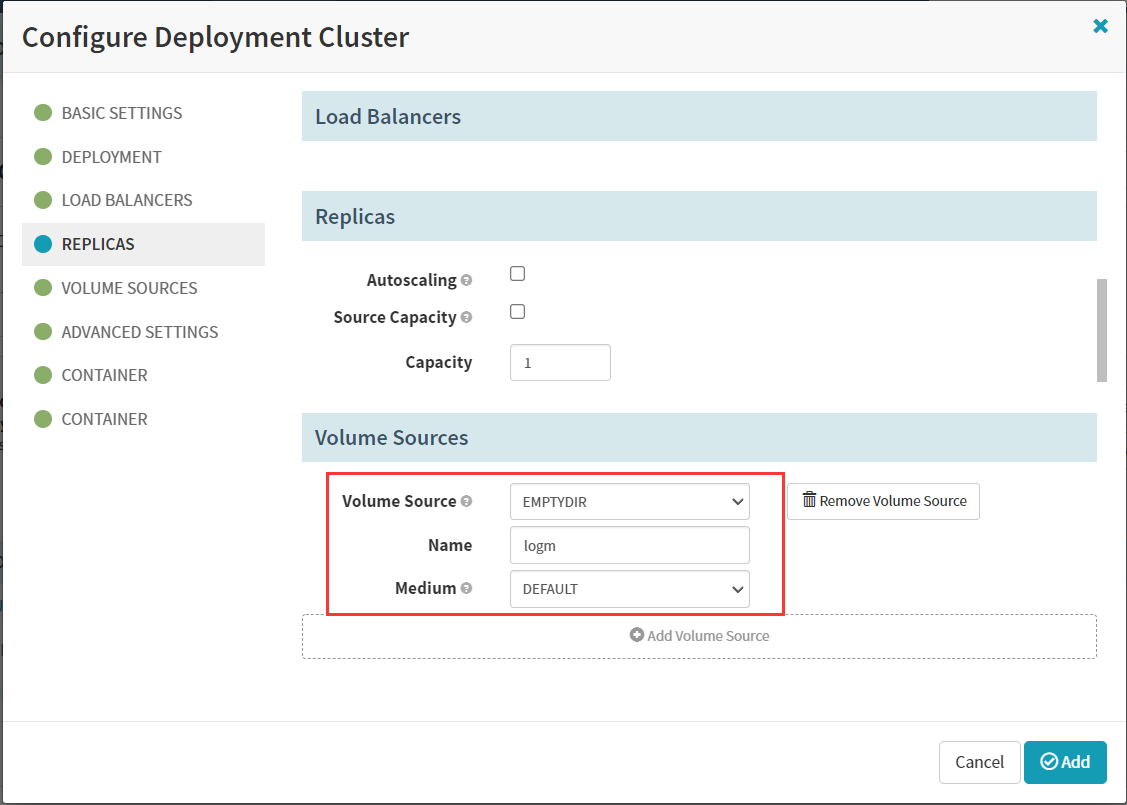

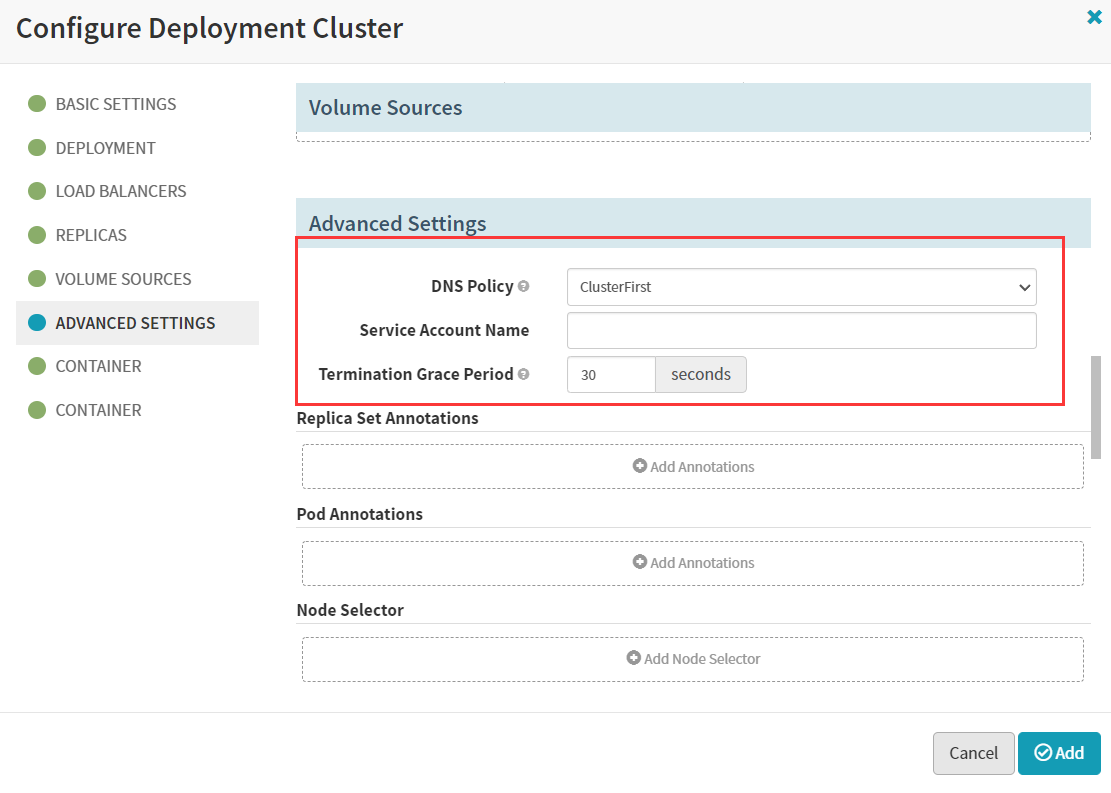

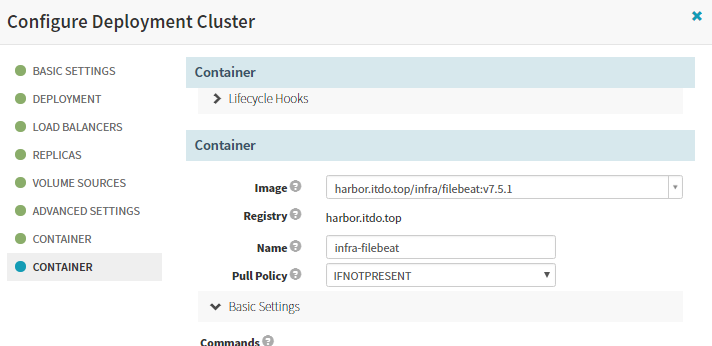

6.1 配置Deployment

Account:cluster-admin #选择前面新建的cluster-admin账号

Namespace:app #这里选择开发环境app

Detail:dubbo-demo-service

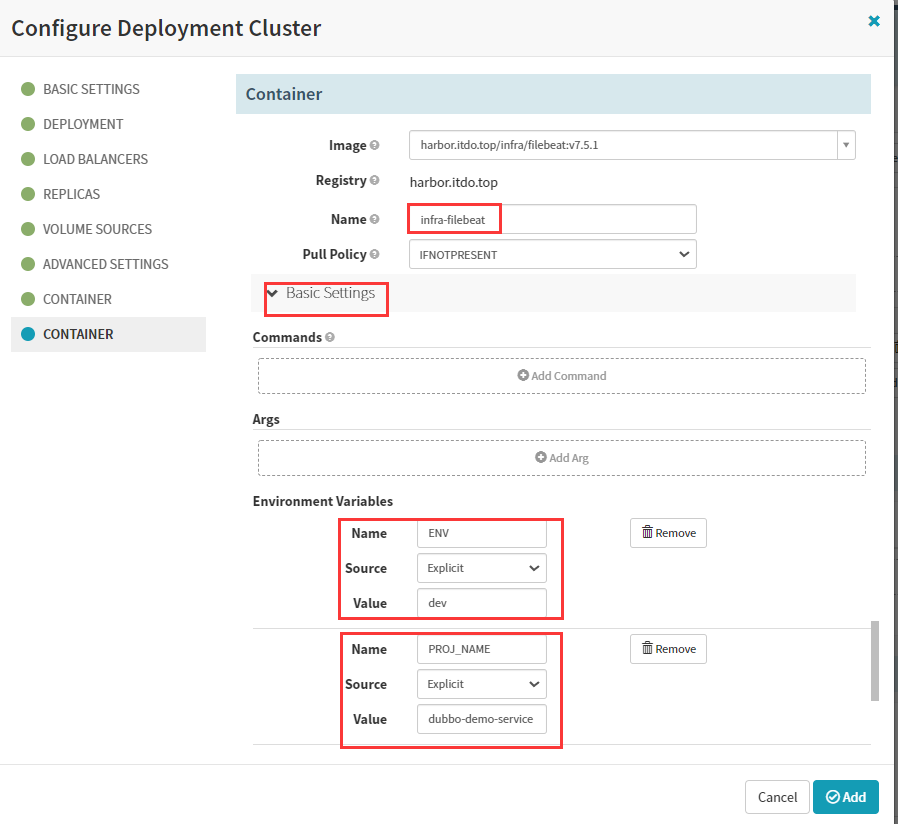

Containers: 这里选择两个镜像,一个主镜像,一个filebeat边车镜像

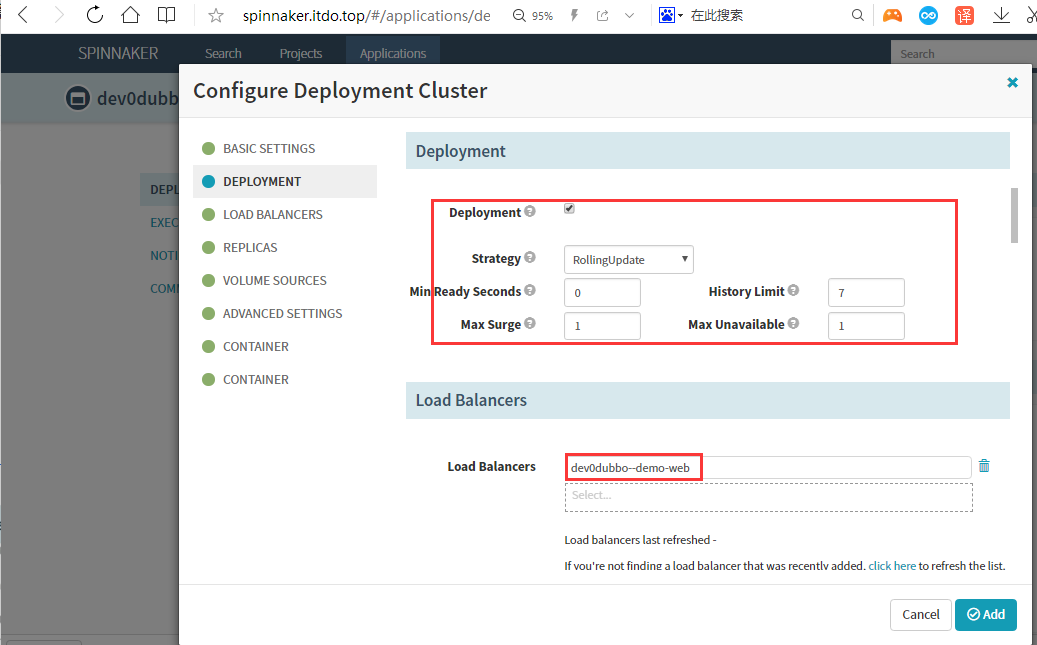

history limit :历史版本数

max surge:最大存活副本数

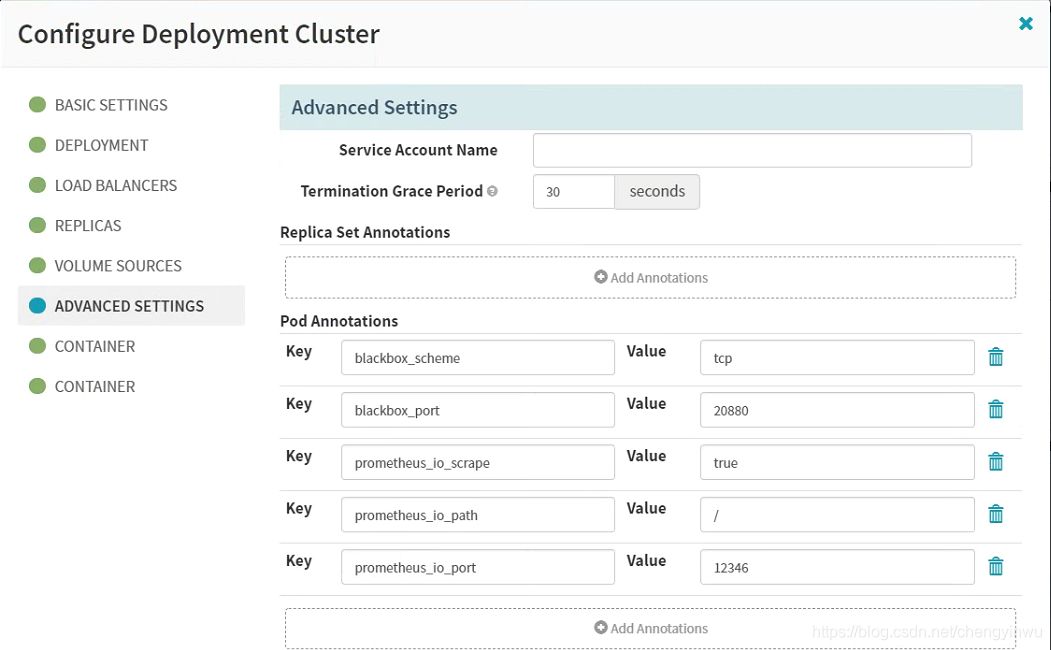

max unavailabel:最大不可达副本数
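Here is a concrete view of max surge and max unavailable. Kubernetes resolves percentages against the replica count, rounding maxSurge up and maxUnavailable down. During an update, at most replicas plus maxSurge Pods exist, and at least replicas minus maxUnavailable stay available. The sketch below works through that arithmetic in bash. `resolve_count` and `rollout_bounds` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch of RollingUpdate bounds: percentages are taken of the replica count,
# maxSurge is rounded up and maxUnavailable is rounded down.

# Resolve an absolute number or a percentage; $3 is "up" or "down" (rounding for %).
resolve_count() {
  local value="$1" replicas="$2" round="$3" pct
  if [[ "$value" == *% ]]; then
    pct="${value%\%}"
    if [ "$round" = up ]; then
      echo $(( (replicas * pct + 99) / 100 ))
    else
      echo $(( replicas * pct / 100 ))
    fi
  else
    echo "$value"
  fi
}

# Print "max_pods min_available" for REPLICAS MAX_SURGE MAX_UNAVAILABLE.
rollout_bounds() {
  local replicas="$1" surge unavail
  surge="$(resolve_count "$2" "$replicas" up)"
  unavail="$(resolve_count "$3" "$replicas" down)"
  echo "$(( replicas + surge )) $(( replicas - unavail ))"
}
```

For example, `rollout_bounds 4 25% 25%` prints `5 3`: at most 5 Pods exist during the update and at least 3 stay available.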

Prometheus monitoring annotations:

- blackbox_port: "20880"
- blackbox_scheme: tcp
- prometheus_io_path: /
- prometheus_io_port: "12346"
- prometheus_io_scrape: "true"

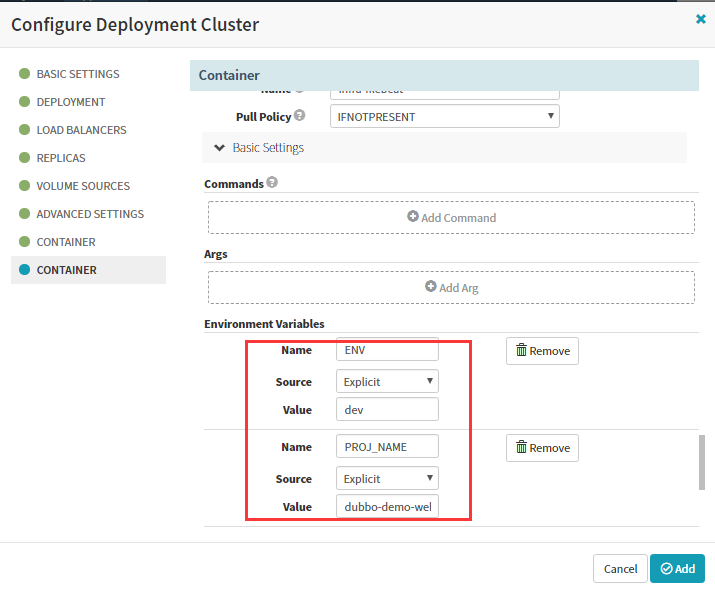

Main container environment variables:

- name: C_OPTS
  value: -Denv=dev -Dapollo.meta=http://config.itdo.top (for the test environment, use -Denv=fat -Dapollo.meta=http://config-test.itdo.top)
- name: JAR_BALL
  value: dubbo-server.jar

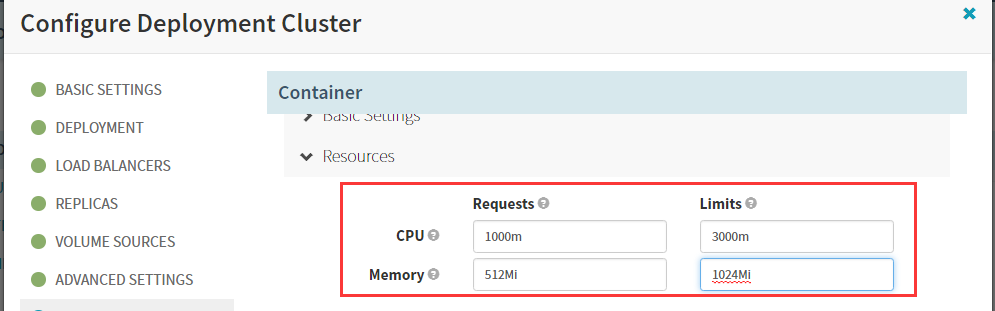

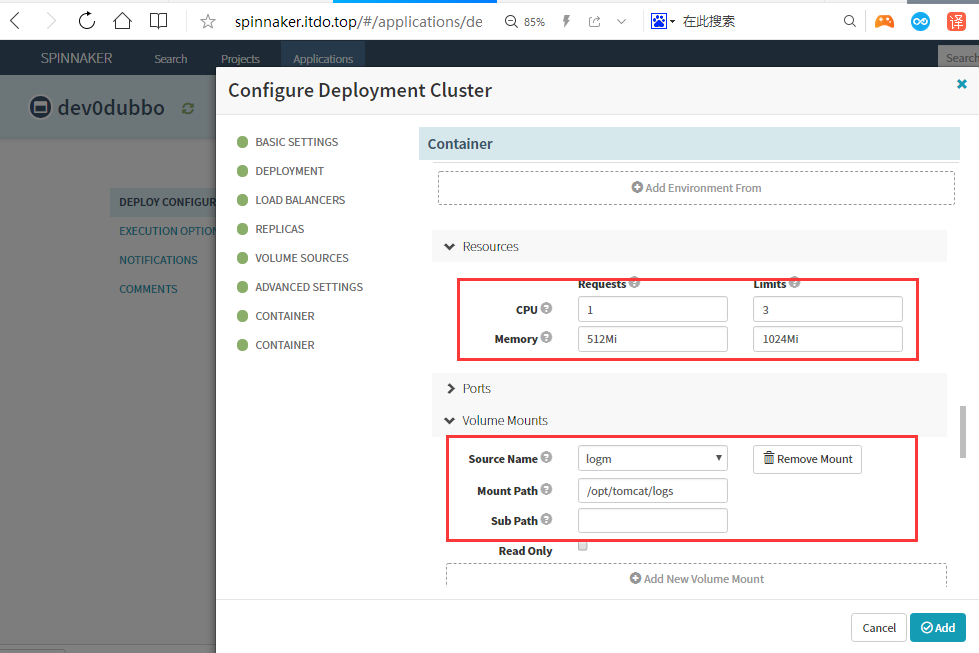

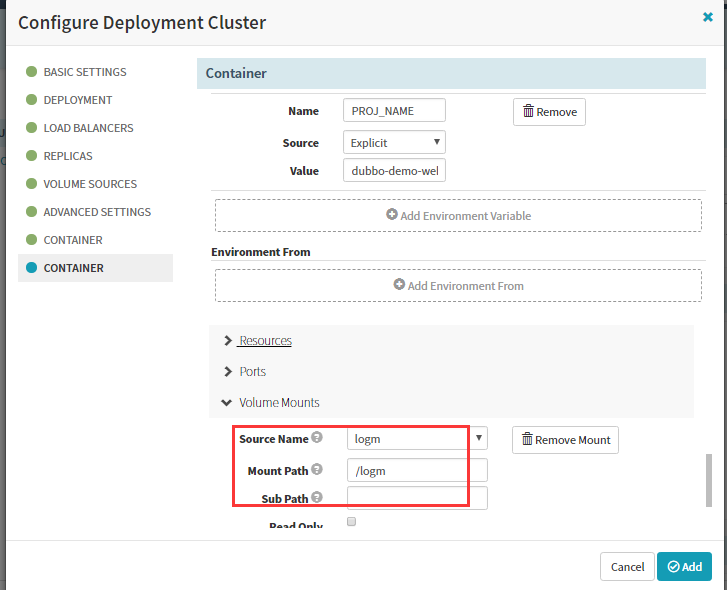

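Resource requests and limits

CPU: 1 core = 1000 millicores (m); 50m is 0.05 core, and the smallest unit is 1m.

Memory: the M suffix is decimal (powers of 1000), so 129M is 129,000,000 bytes; the Mi suffix is binary (powers of 1024), so 1Mi is 1,048,576 bytes.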
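Below is a worked sketch of the unit rules above. It covers only the suffixes mentioned here: m for CPU, and k/M/G and Ki/Mi/Gi for memory, with integer memory values only. `cpu_millicores` and `mem_bytes` are hypothetical helpers and not a full Kubernetes quantity parser.

```shell
#!/usr/bin/env bash
# Sketch: convert a few Kubernetes quantity forms to plain numbers.

# CPU to millicores: "50m" -> 50, "0.05" -> 50, "1" -> 1000.
cpu_millicores() {
  case "$1" in
    *m) echo "${1%m}" ;;
    *)  awk -v c="$1" 'BEGIN { printf "%d\n", c * 1000 + 0.5 }' ;;  # round to nearest
  esac
}

# Memory to bytes: decimal suffixes are powers of 1000, "i" suffixes powers of 1024.
mem_bytes() {
  local q="$1" n
  case "$q" in
    *Ki) n="${q%Ki}"; echo $(( n * 1024 )) ;;
    *Mi) n="${q%Mi}"; echo $(( n * 1024 * 1024 )) ;;
    *Gi) n="${q%Gi}"; echo $(( n * 1024 * 1024 * 1024 )) ;;
    *k)  n="${q%k}";  echo $(( n * 1000 )) ;;
    *M)  n="${q%M}";  echo $(( n * 1000 * 1000 )) ;;
    *G)  n="${q%G}";  echo $(( n * 1000 * 1000 * 1000 )) ;;
    *)   echo "$q" ;;  # plain number of bytes
  esac
}
```

For example, `mem_bytes 129M` gives 129000000, while `mem_bytes 128Mi` gives 134217728.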

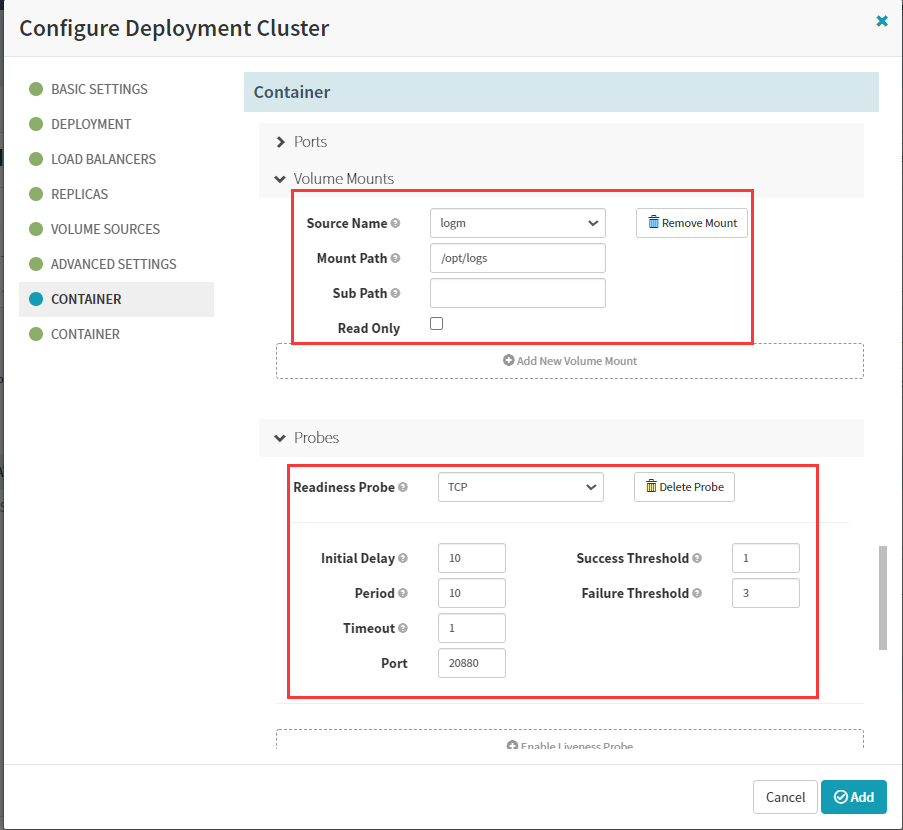

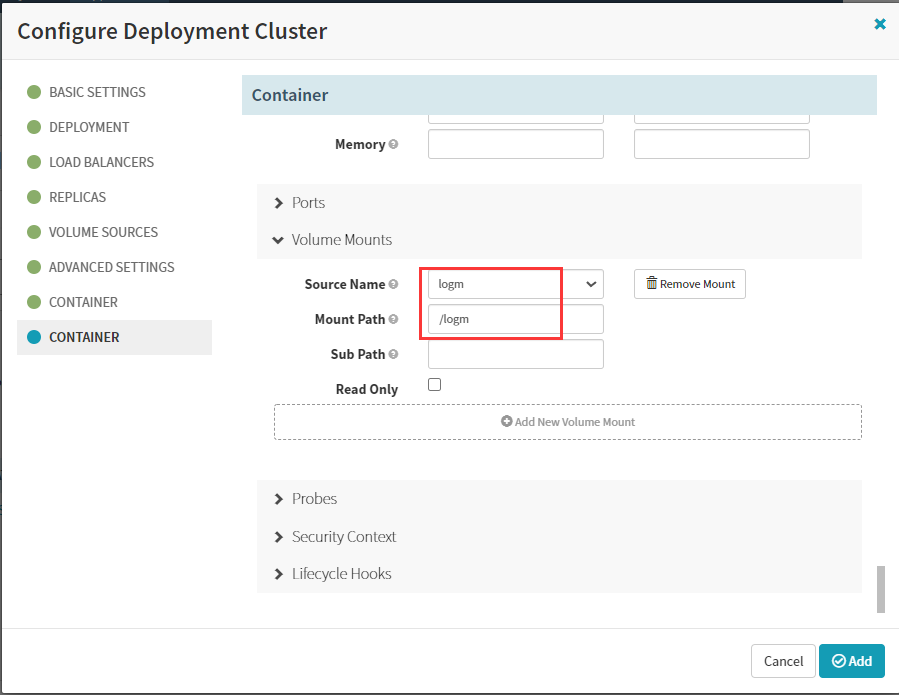

volumeMounts:

- mountPath: /opt/logs

name: logm

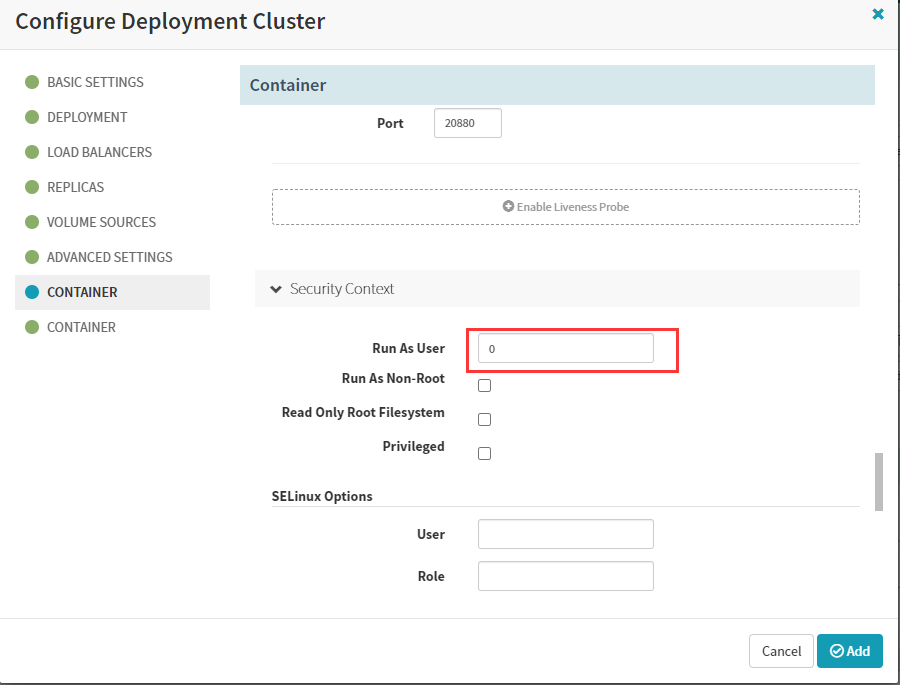

securityContext:

runAsUser: 0

Configure the second container:

env:
- name: ENV
  value: dev (for the test environment, use value: test)
- name: PROJ_NAME
  value: dubbo-demo-service
volumeMounts:
- mountPath: /logm
  name: logm
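The main container and the filebeat sidecar both take environment-specific values. Below is a sketch that keeps the dev and test values from this section in one place. Only those two environments are covered, because no prod values are given here. `c_opts_for`, `sidecar_env_for`, and `render_sidecar_env` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: per-environment values for the provider's two containers (dev and test only).

# C_OPTS for the main container.
c_opts_for() {
  case "$1" in
    dev)  printf '%s\n' "-Denv=dev -Dapollo.meta=http://config.itdo.top" ;;
    test) printf '%s\n' "-Denv=fat -Dapollo.meta=http://config-test.itdo.top" ;;
    *)    echo "unknown environment: $1" >&2; return 1 ;;
  esac
}

# ENV for the filebeat sidecar.
sidecar_env_for() {
  case "$1" in
    dev|test) printf '%s\n' "$1" ;;
    *)        echo "unknown environment: $1" >&2; return 1 ;;
  esac
}

# Print the sidecar env entries in the YAML shape used above.
# Usage: render_sidecar_env ENVIRONMENT PROJ_NAME
render_sidecar_env() {
  local env_value
  env_value="$(sidecar_env_for "$1")" || return 1
  printf -- '- name: ENV\n  value: %s\n- name: PROJ_NAME\n  value: %s\n' "$env_value" "$2"
}
```

For example, `render_sidecar_env test dubbo-demo-service` prints the four env lines for the test environment.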

The containers are now added.

Change the base image in the Jenkins stage to jre8:8u112_with_logs

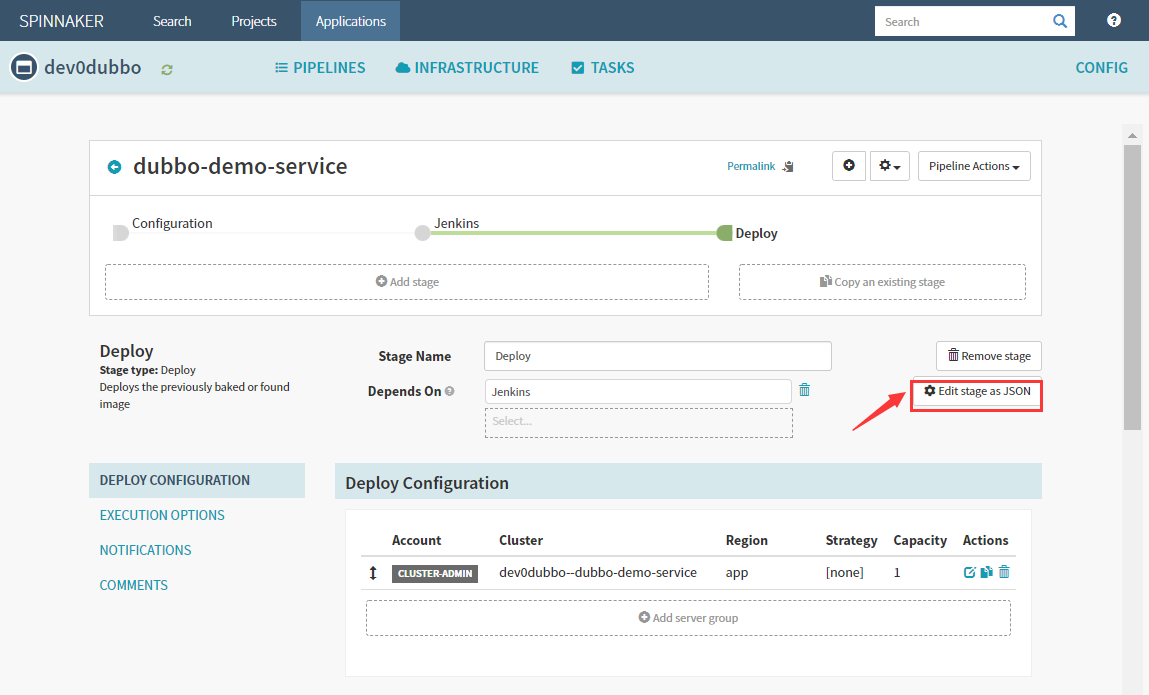

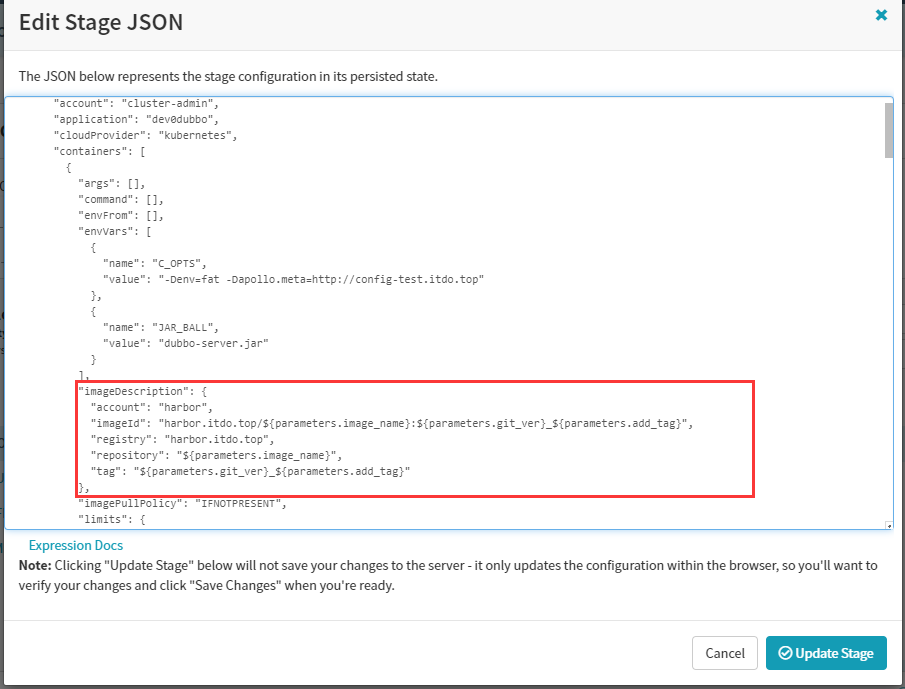

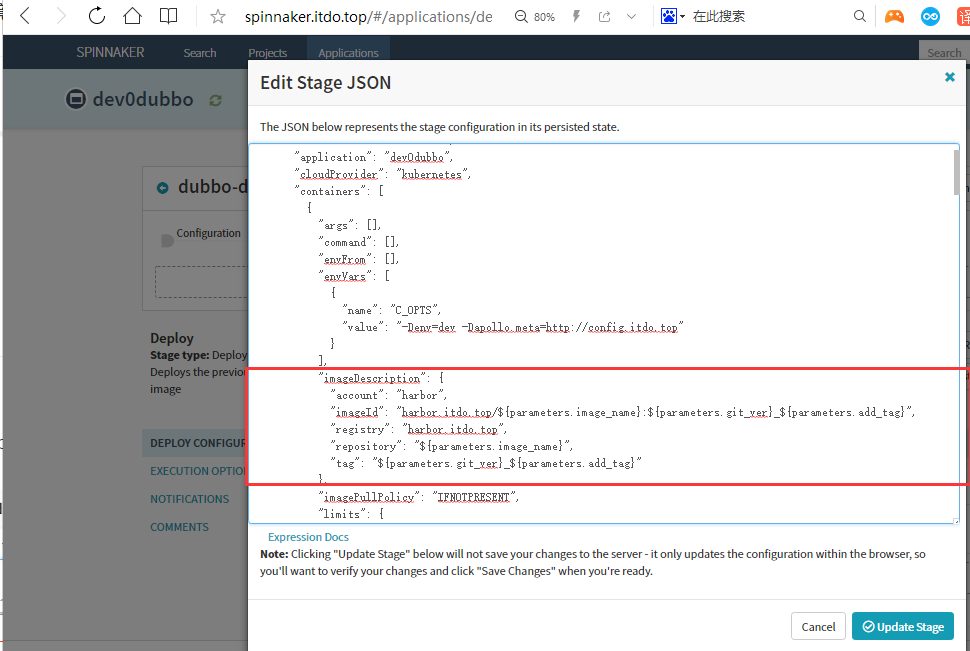

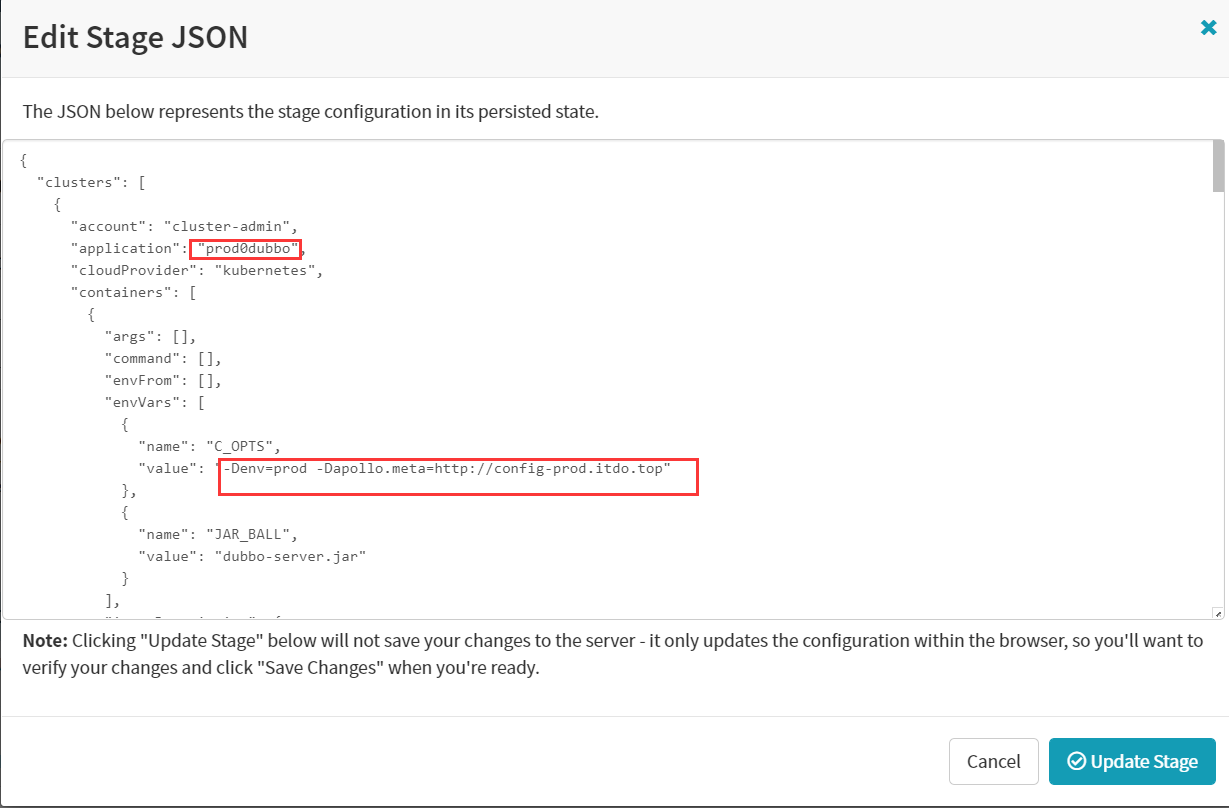

6.3 Edit the stage JSON to use variables

Change the image to use the pipeline variables, then click Update –> Save

"imageDescription": {
  "account": "harbor",
  "imageId": "harbor.itdo.top/${parameters.image_name}:${parameters.git_ver}_${parameters.add_tag}",
  "registry": "harbor.itdo.top",
  "repository": "${parameters.image_name}",
  "tag": "${parameters.git_ver}_${parameters.add_tag}"
},
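At run time, Spinnaker substitutes the pipeline parameters into imageId and tag. The sketch below builds the same string locally, so you can check that the expected image exists in Harbor before the Deploy stage runs. It assumes the harbor.itdo.top registry, and `image_id` and `image_exists` are hypothetical helpers. `docker manifest inspect` needs a prior `docker login`, and older Docker CLIs also need experimental features enabled for it.

```shell
#!/usr/bin/env bash
# Sketch: reproduce what imageId resolves to for given parameter values.
# Spinnaker performs this substitution itself; this is only for checking by hand.
REGISTRY="${REGISTRY:-harbor.itdo.top}"

# Usage: image_id IMAGE_NAME GIT_VER ADD_TAG
image_id() {
  printf '%s/%s:%s_%s\n' "$REGISTRY" "$1" "$2" "$3"
}

# Succeed if the registry has the image (requires docker login to Harbor).
image_exists() {
  docker manifest inspect "$(image_id "$@")" >/dev/null 2>&1
}
```

For example, `image_id app/dubbo-demo-service master 20220301_1530` prints `harbor.itdo.top/app/dubbo-demo-service:master_20220301_1530`.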

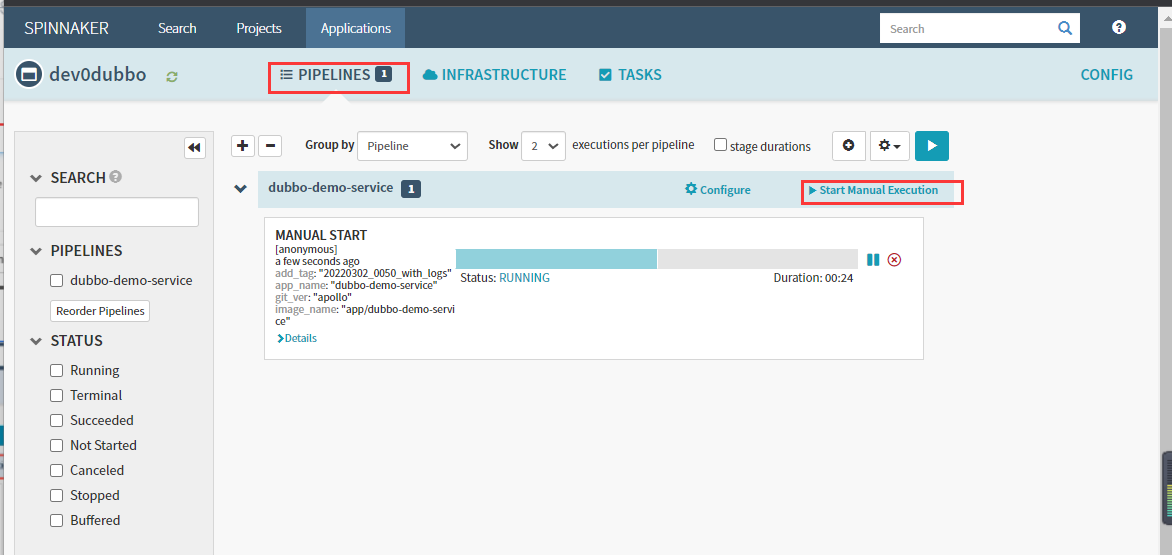

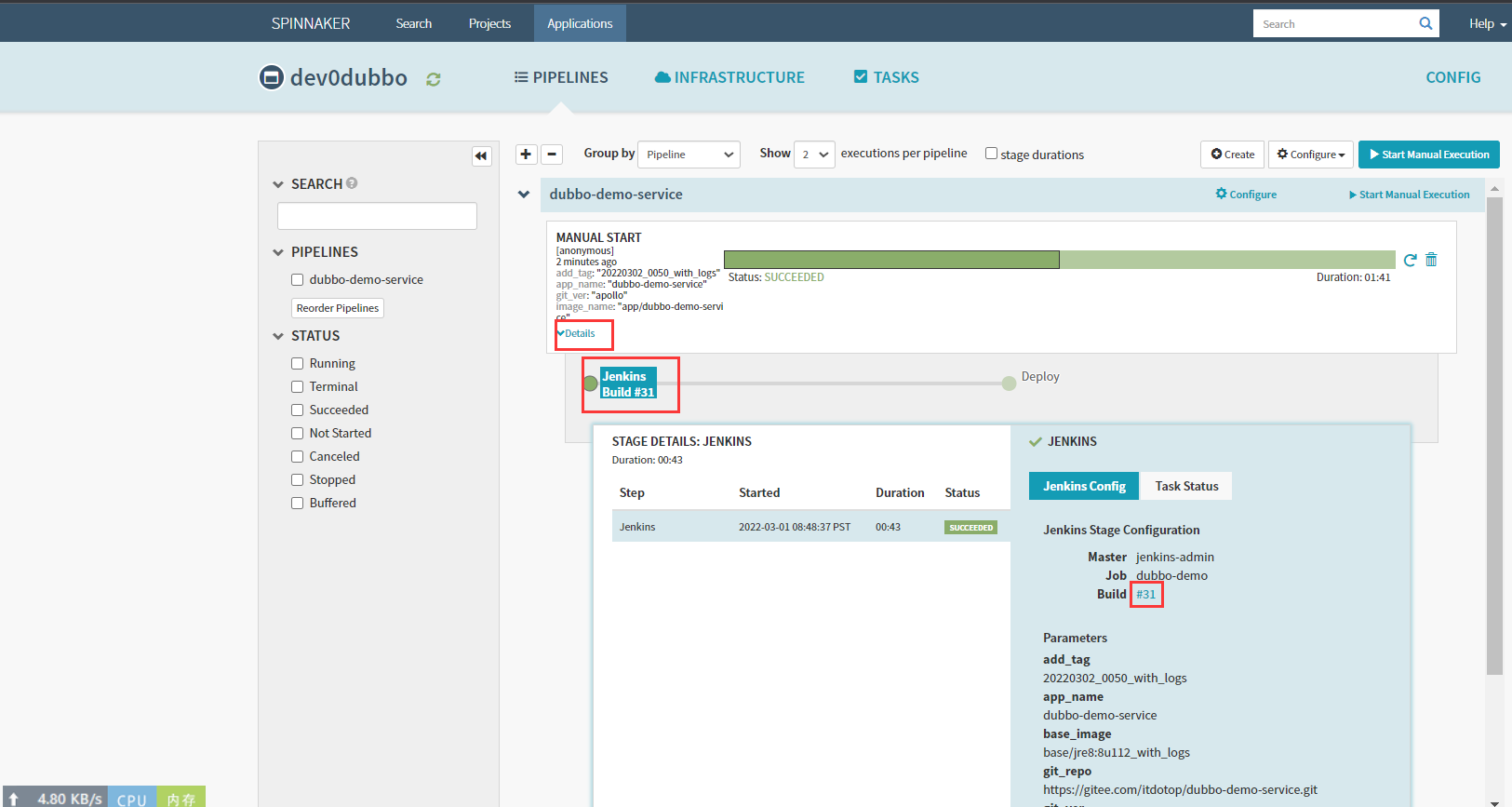

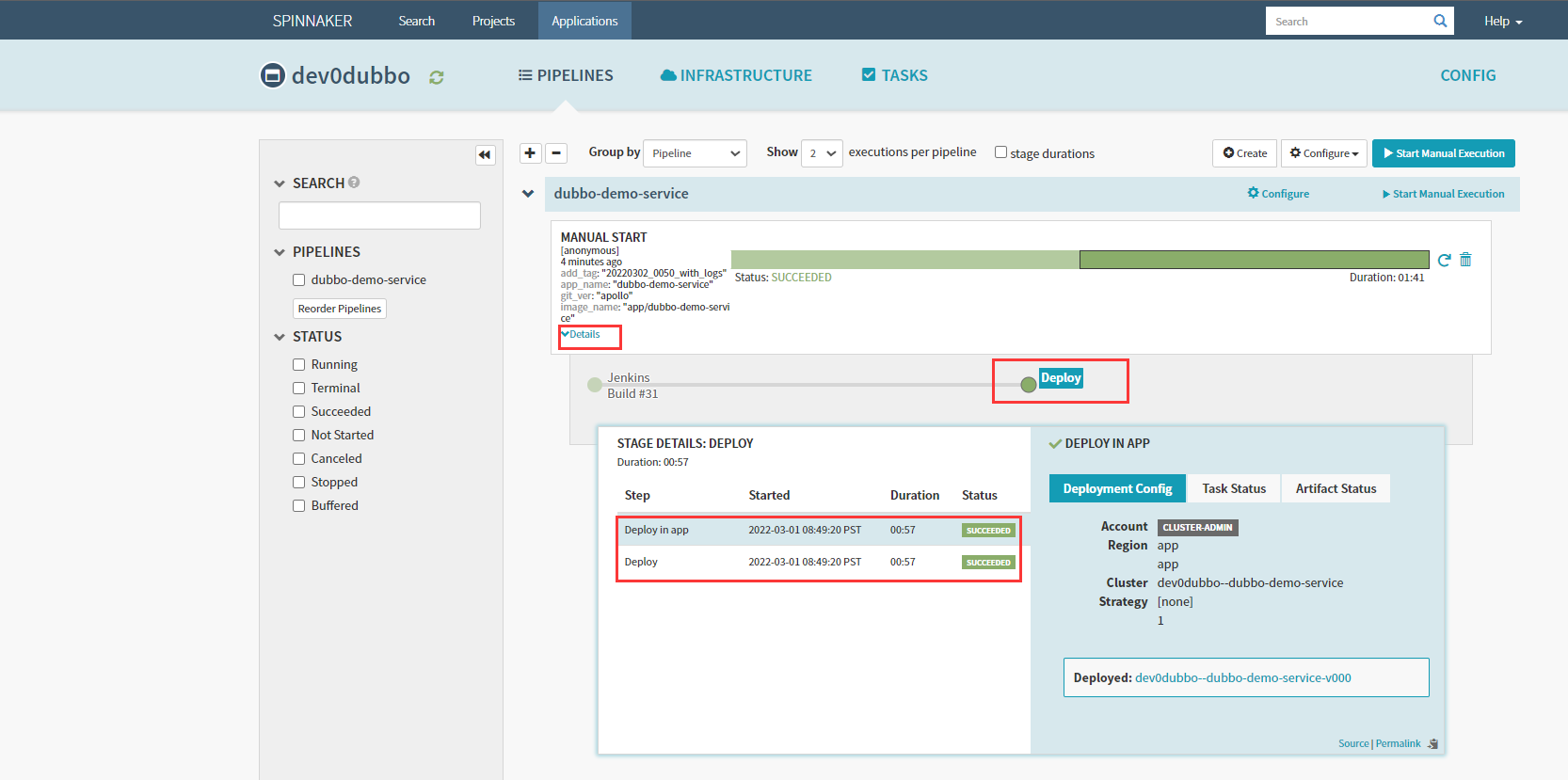

6.4 Run the pipeline

6.5 Jenkins is called automatically

Check the Deploy stage

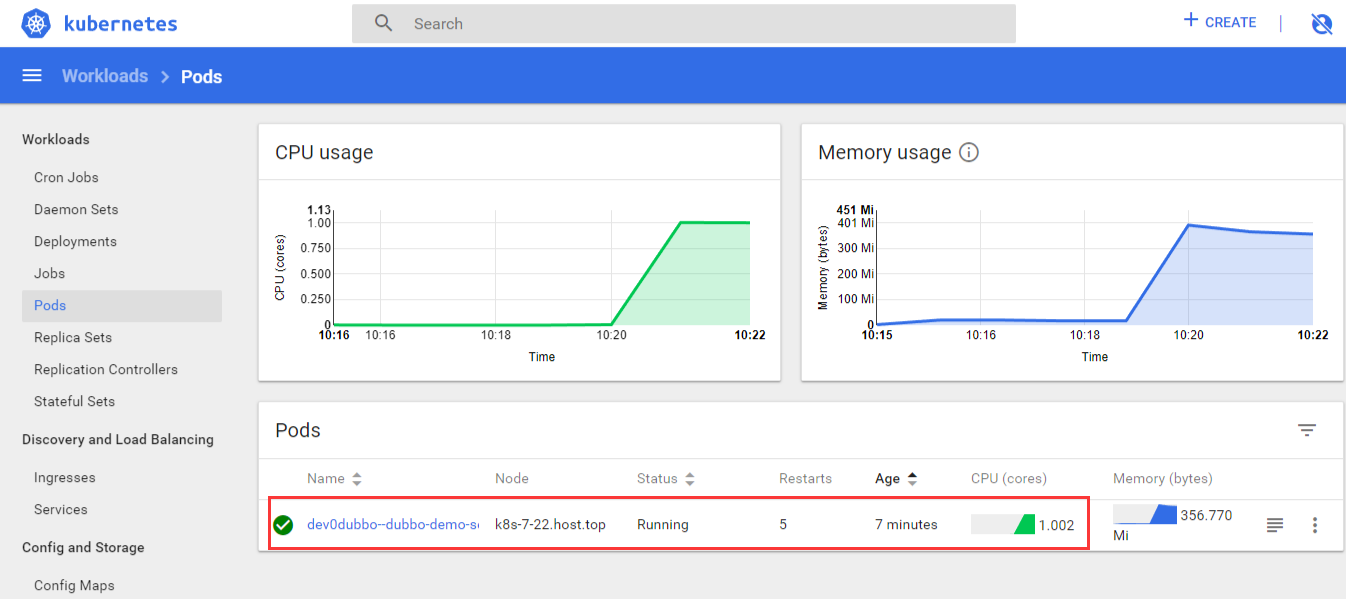

6.6 Check the Pod to verify the Deployment

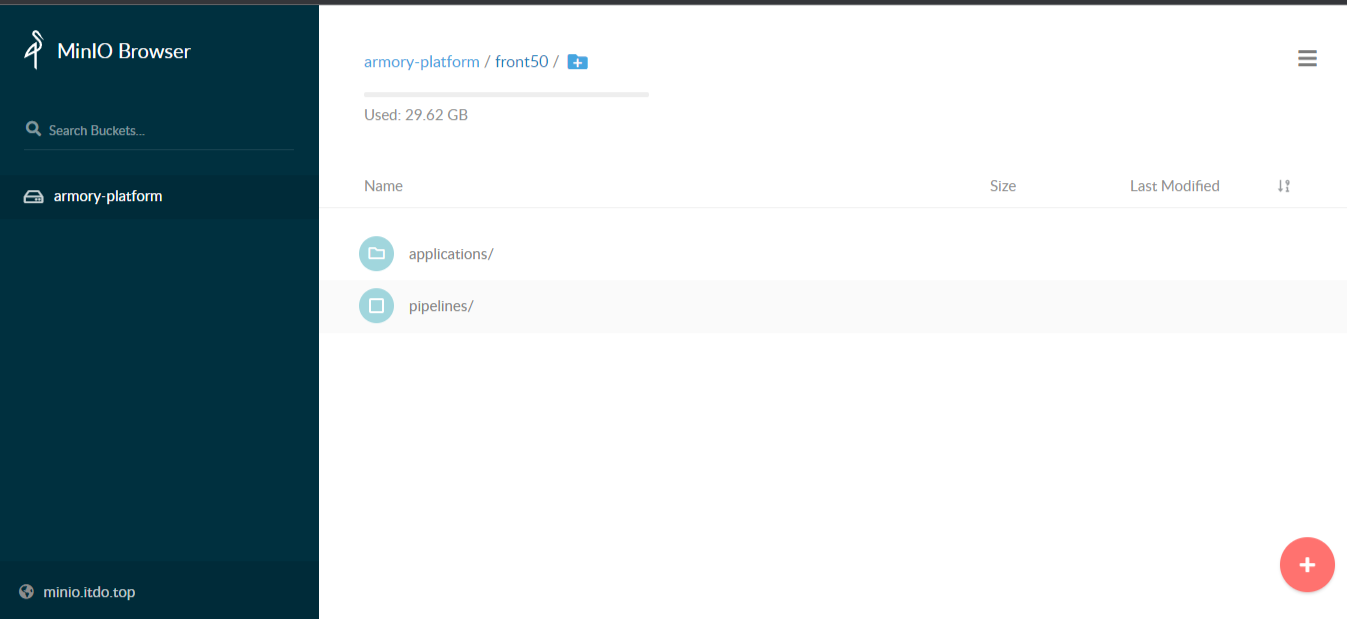

6.7 Check MinIO

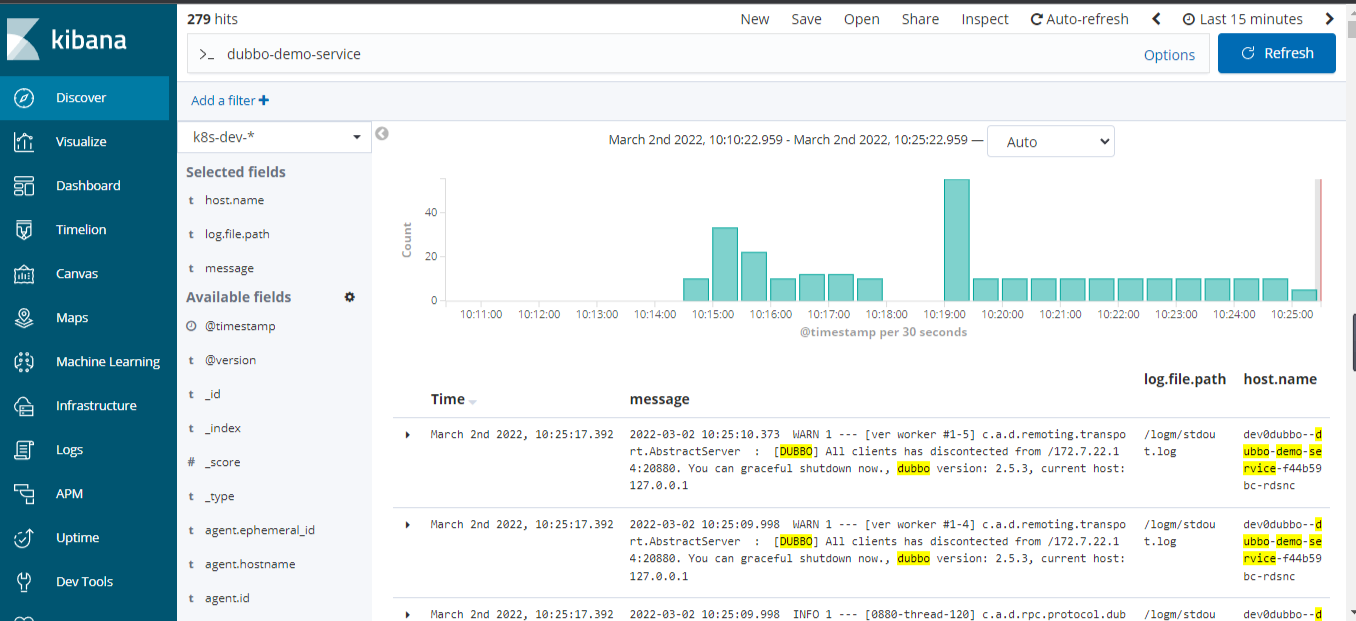

6.8 Check Kibana

6.9 Automated build done

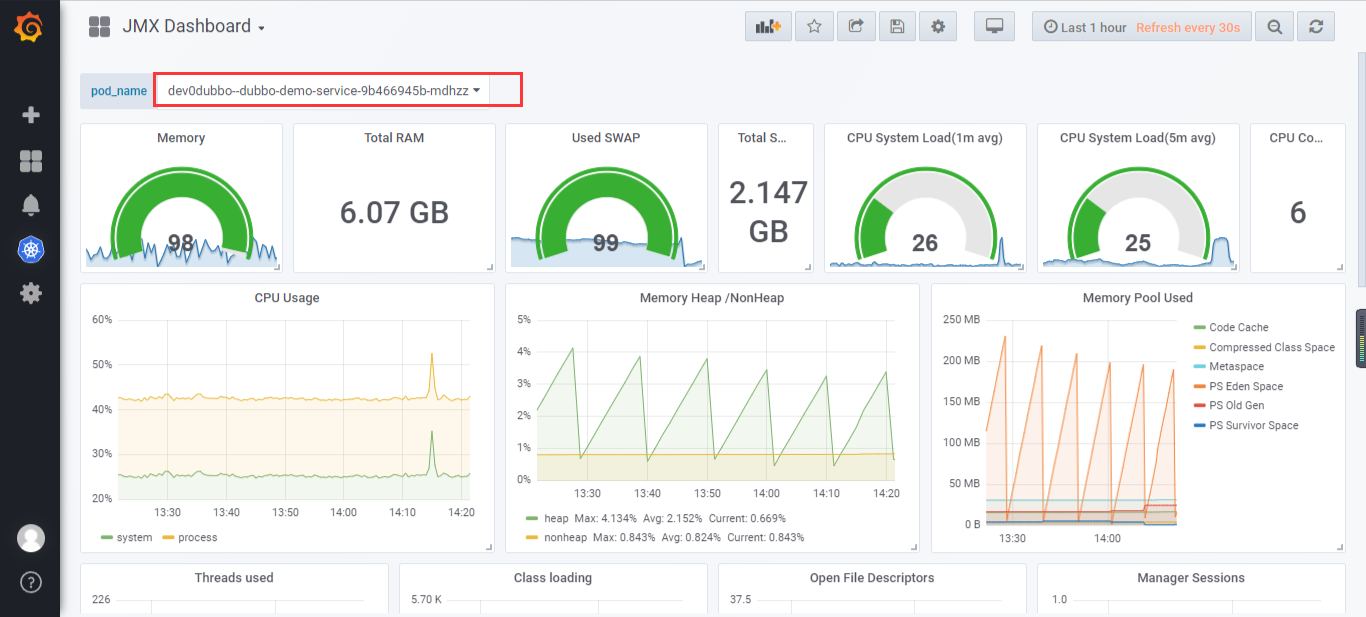

6.10 Check monitoring

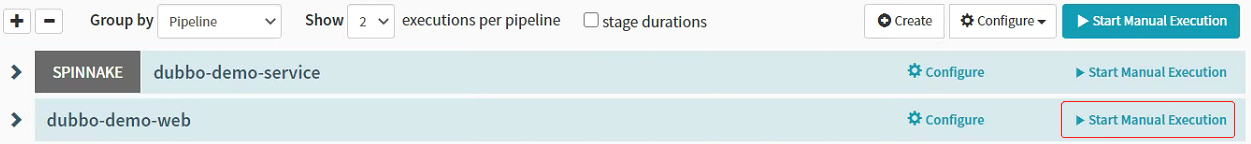

7. Using Spinnaker to deploy the Dubbo service consumer to K8S

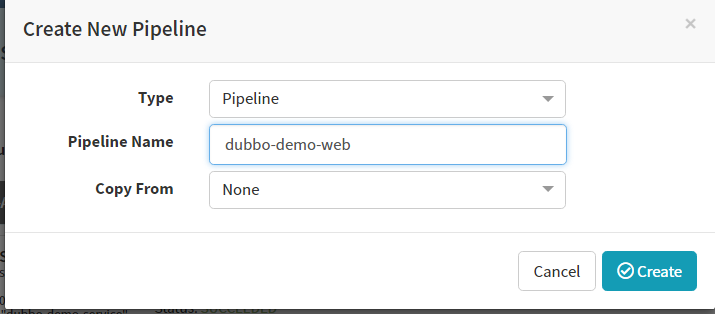

7.1 Create New Pipeline

Applications–>dev0dubbo–>piplines–>configure a new pipeline

Add Parameters

添加4个参数

本次编译和发布 dubbo-demo-web,因此默认的项目名称和镜像名称是基本确定的

1. name: app_name

required: true

Default Value : dubbo-demo-web

description: 项目在Git仓库名称

2. name: git_ver

required: true

description: 项目的版本或者commit ID或者分支

3. image_name

required: true

default: app/dubbo-demo-web

description: 镜像名称,仓库/image

4. name: add_tag

required: true

description: 标签的一部分,追加在git_ver后面,使用YYYYmmdd_HHMM

save保存配置

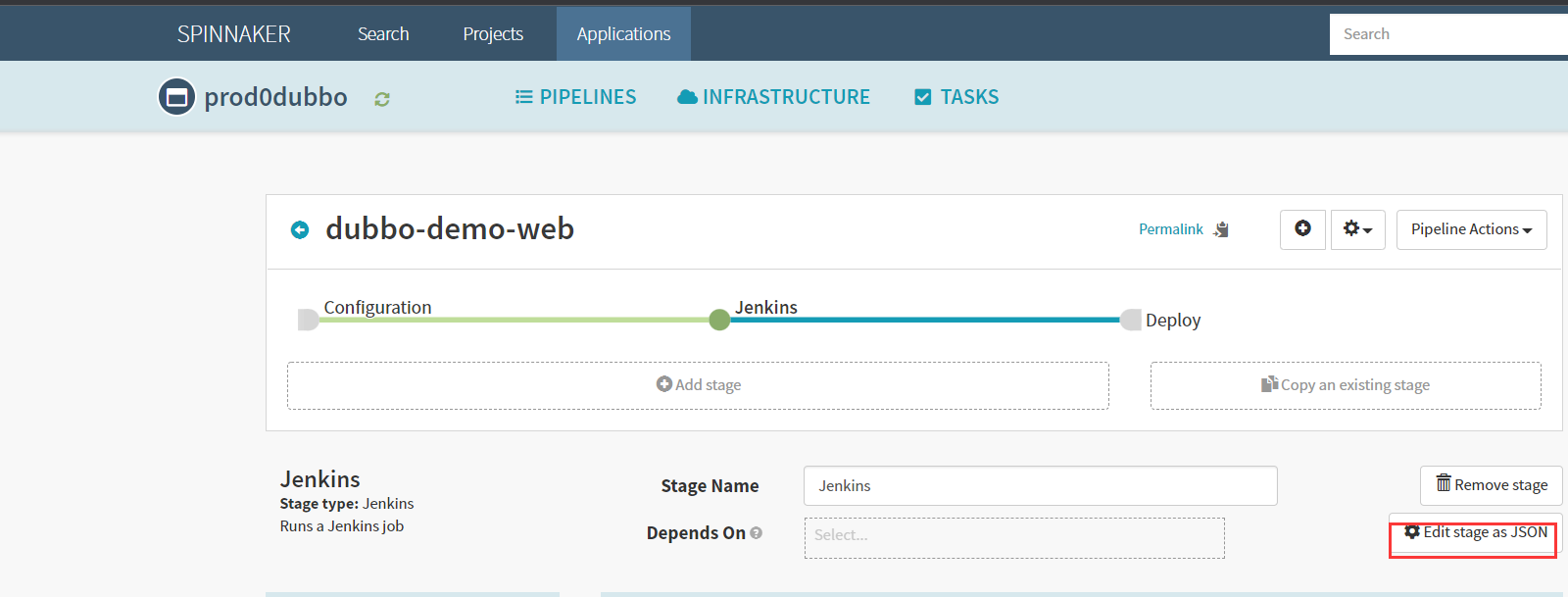

Add stage,创建jenkins

参数说明:

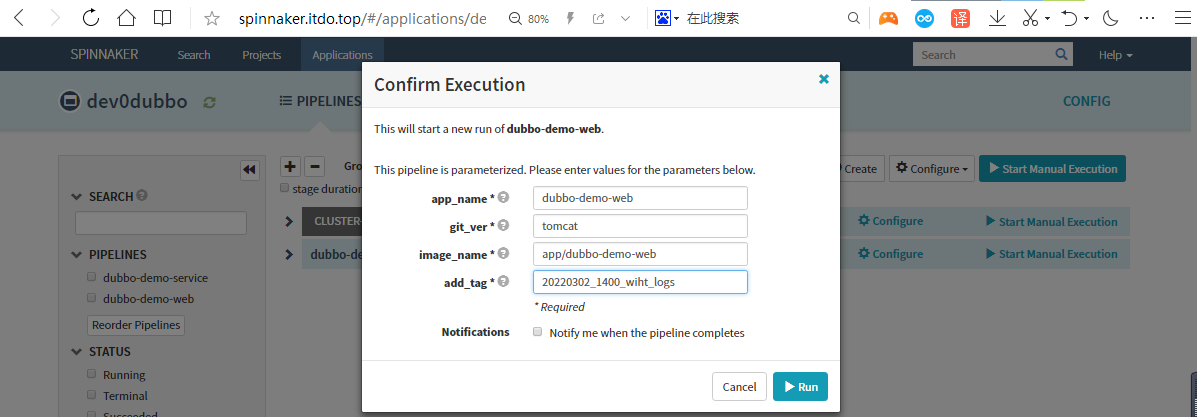

add_tag/app_name/image_name/git_ver,使用变量传入第一步配置的4个参数

app_name:${ parameters.app_name }

image_name:${ parameters.image_name }

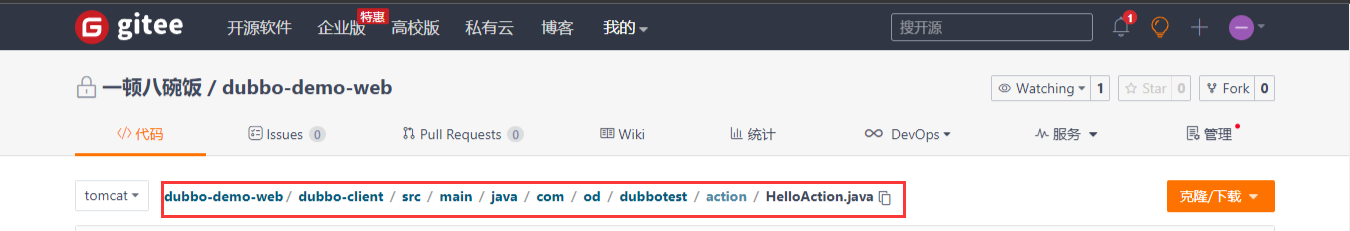

git_repo:git@gitee.com:itdotop/dubbo-demo-web.git

git_ver:${ parameters.git_ver }

add_tag:${ parameters.add_tag }

mvn_dir:./

target_dir:./dubbo-client/target

mvn_cmd:mvn clean package -Dmaven.test.skip=true

base_image:base/tomcat:v8.5.75

maven:3.6.1-8u221

root_url:ROOT

Save

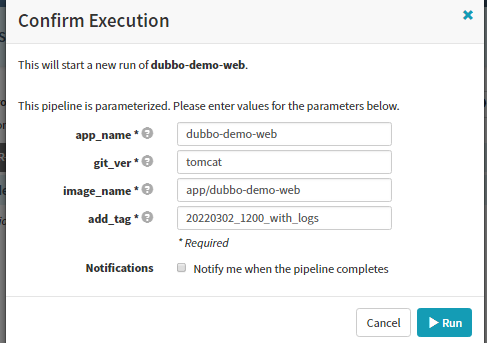

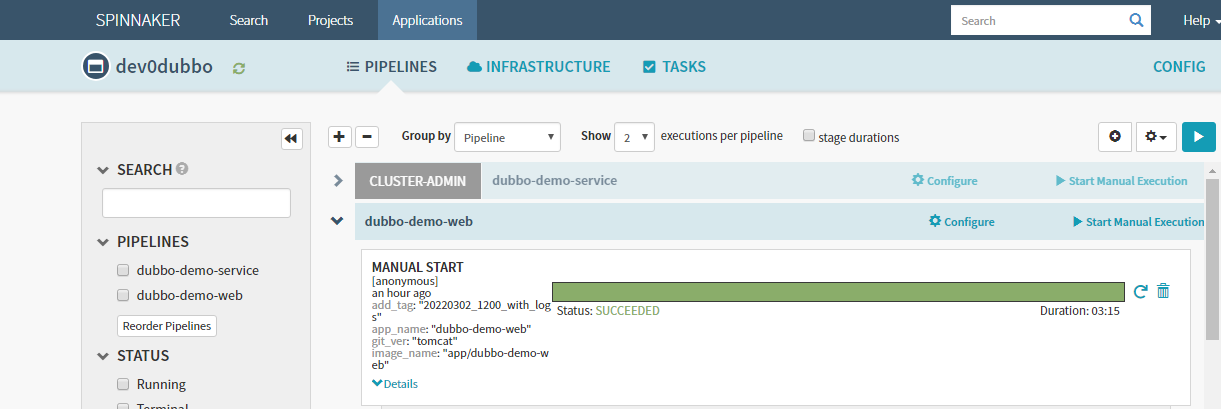

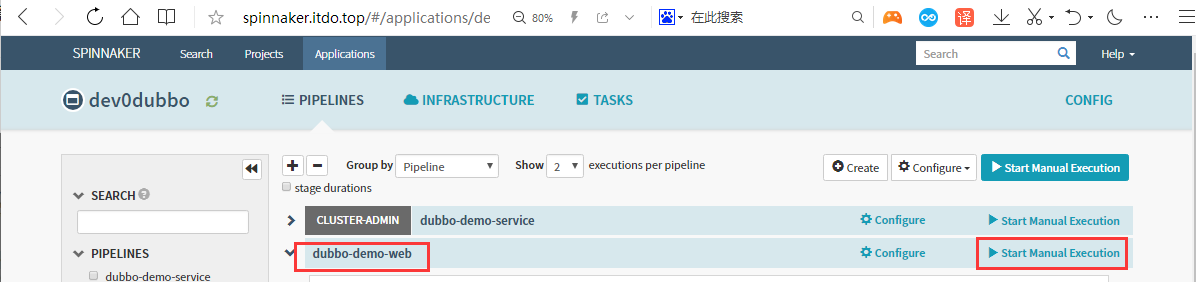

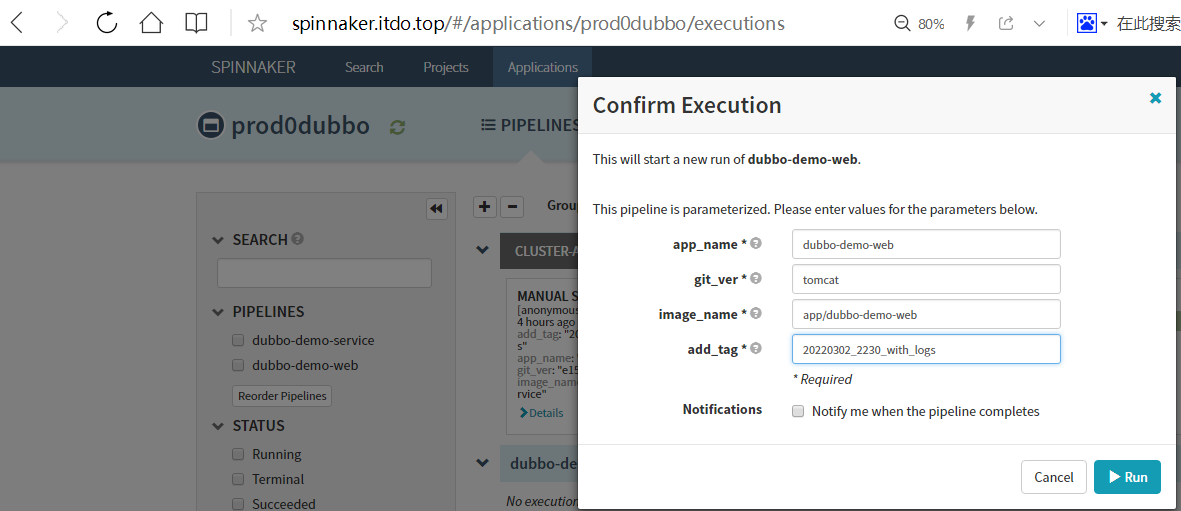

7.2 Start Manual

Run the pipeline

Enter git_ver and add_tag, then click Run

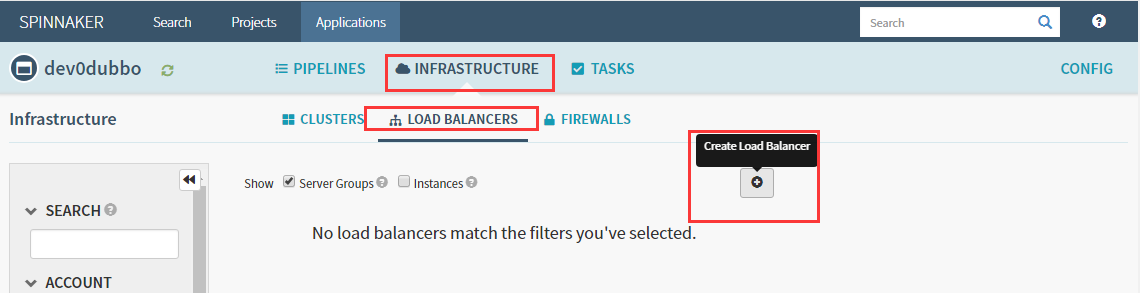

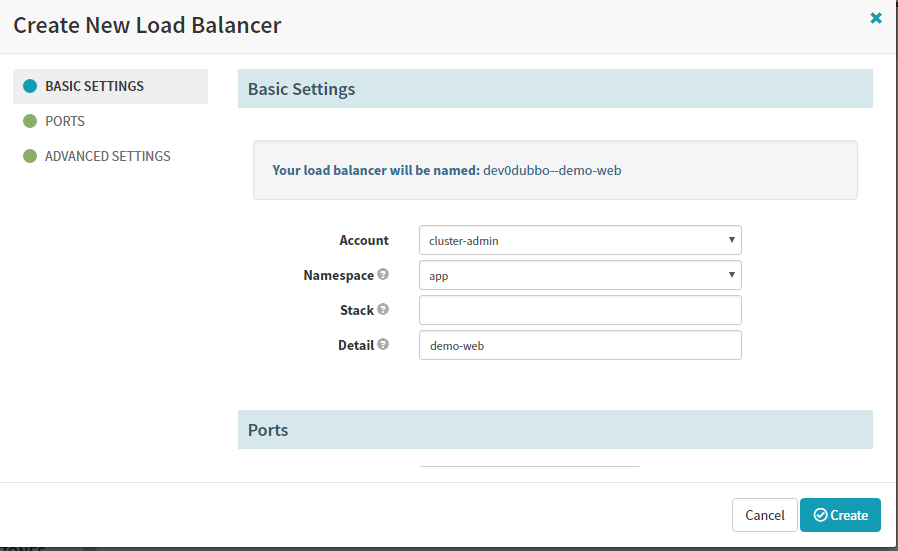

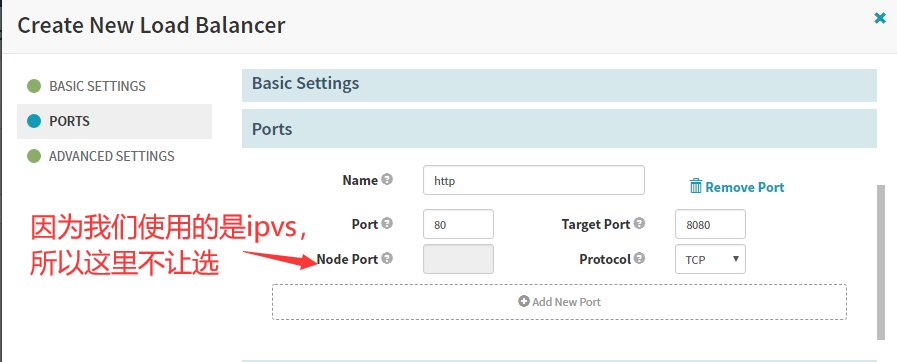

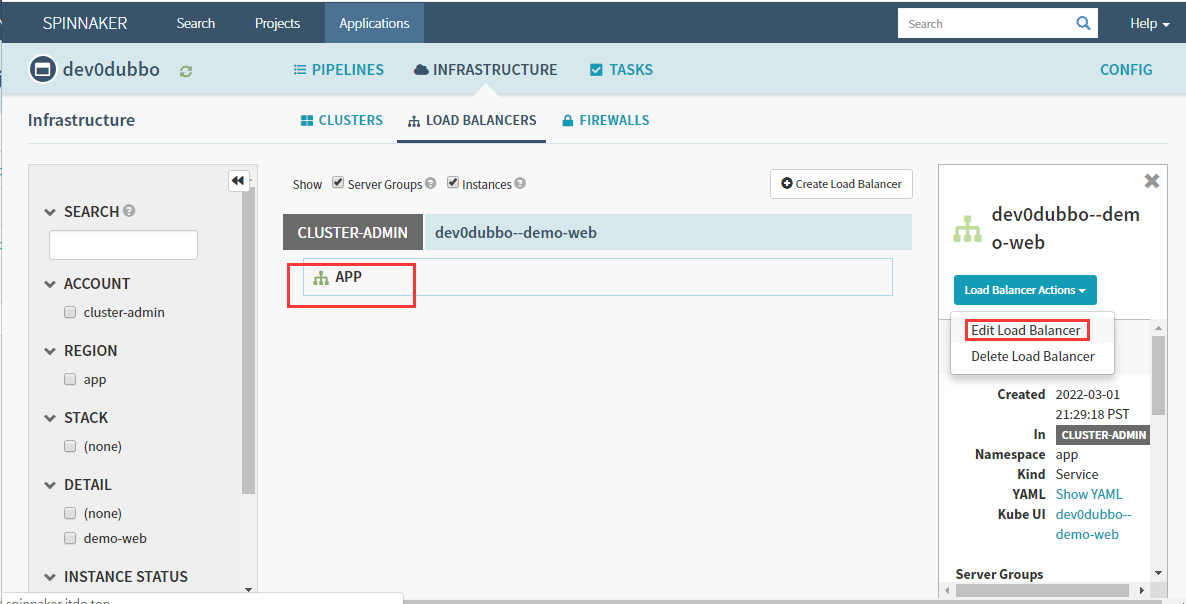

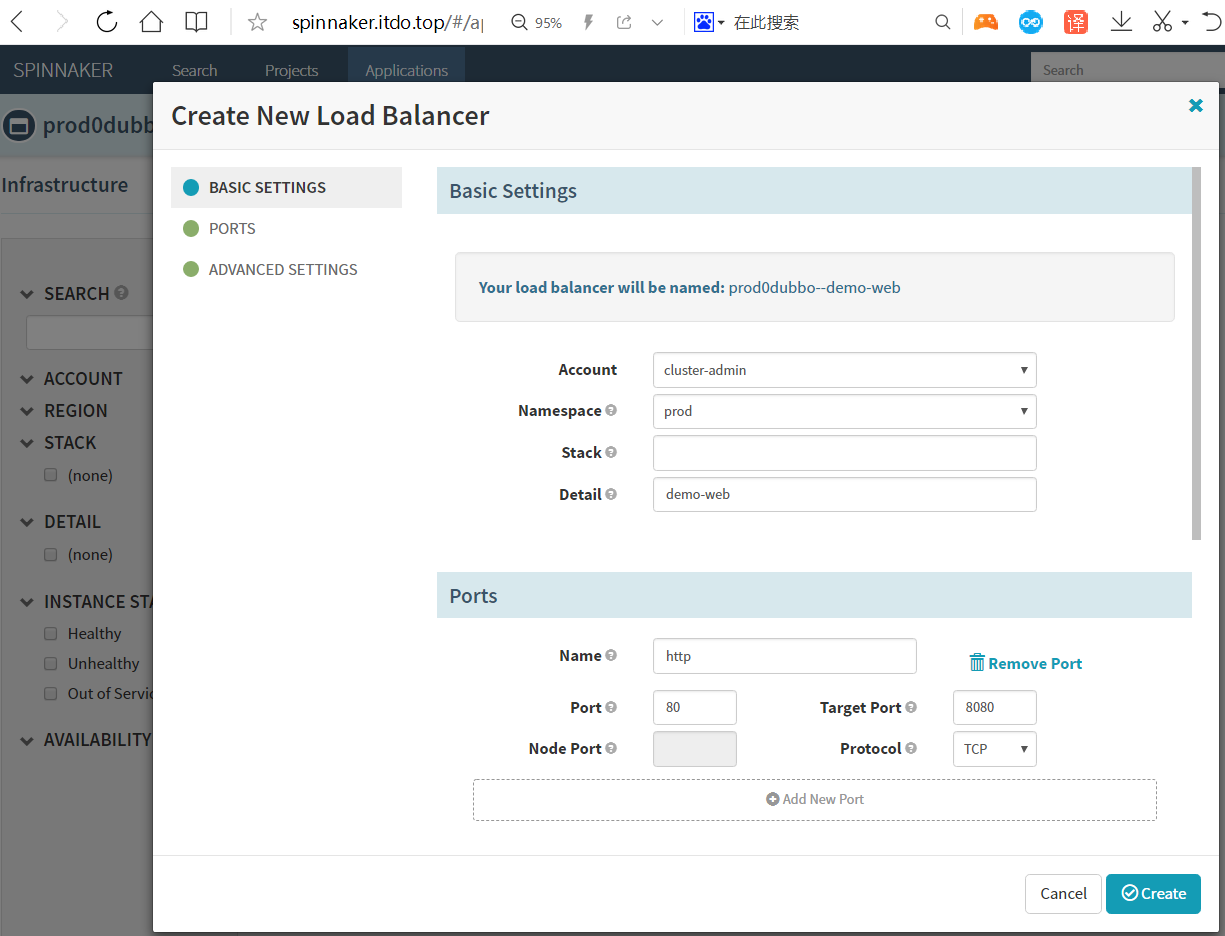

7.3 Create Load Balancer(service)

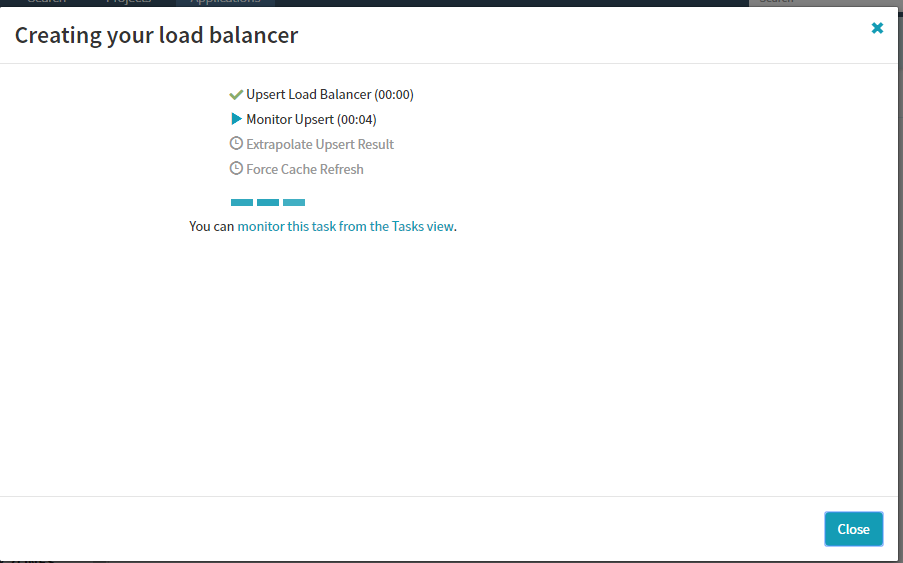

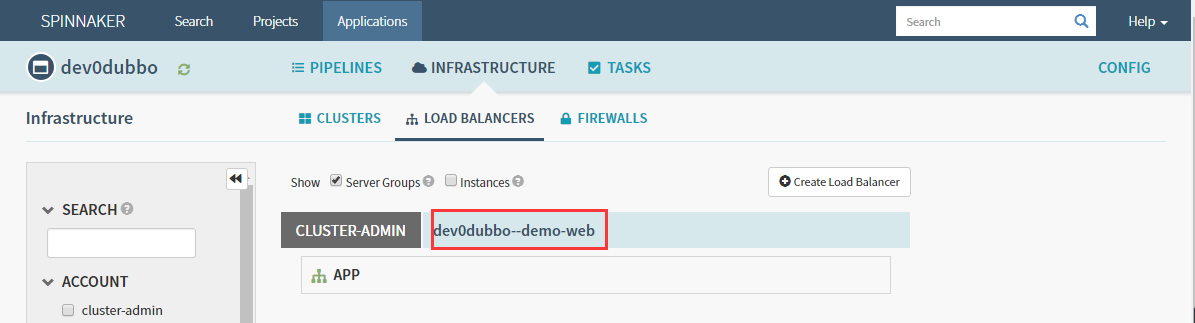

When finished, click Create

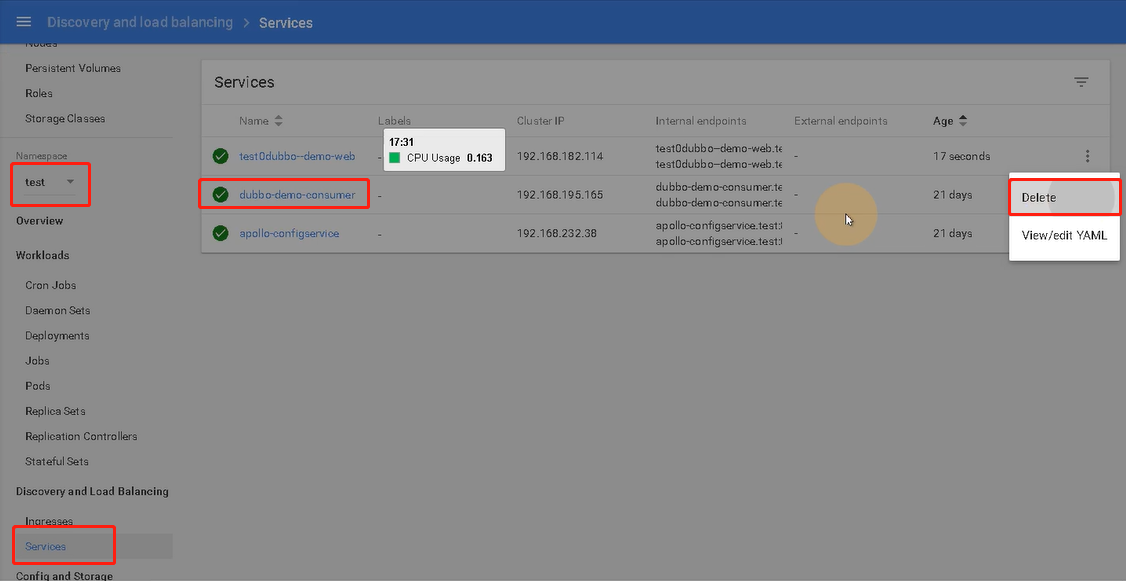

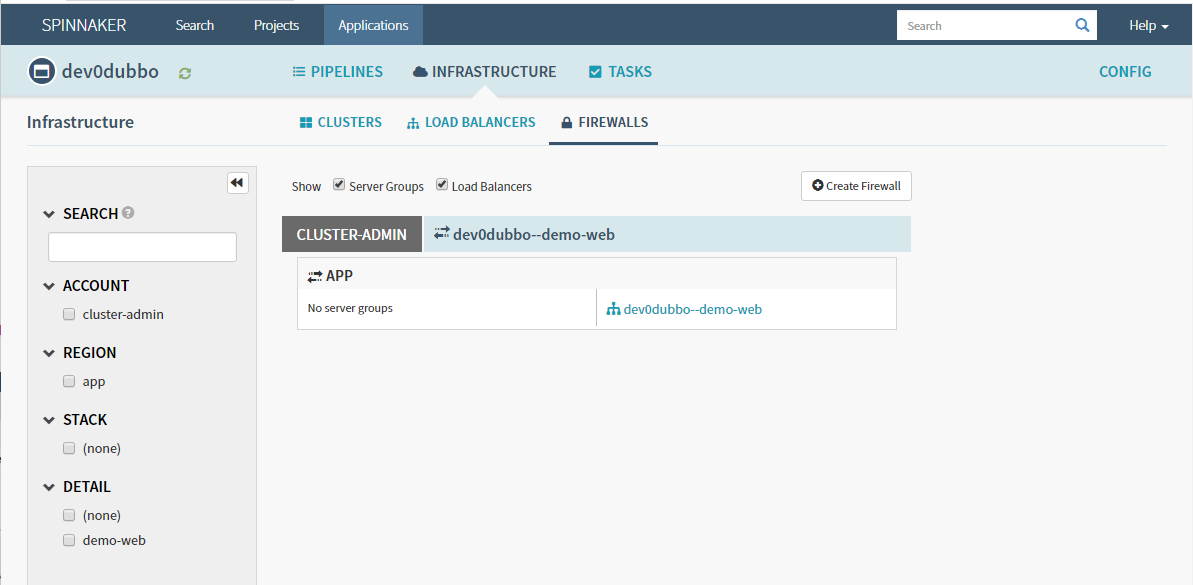

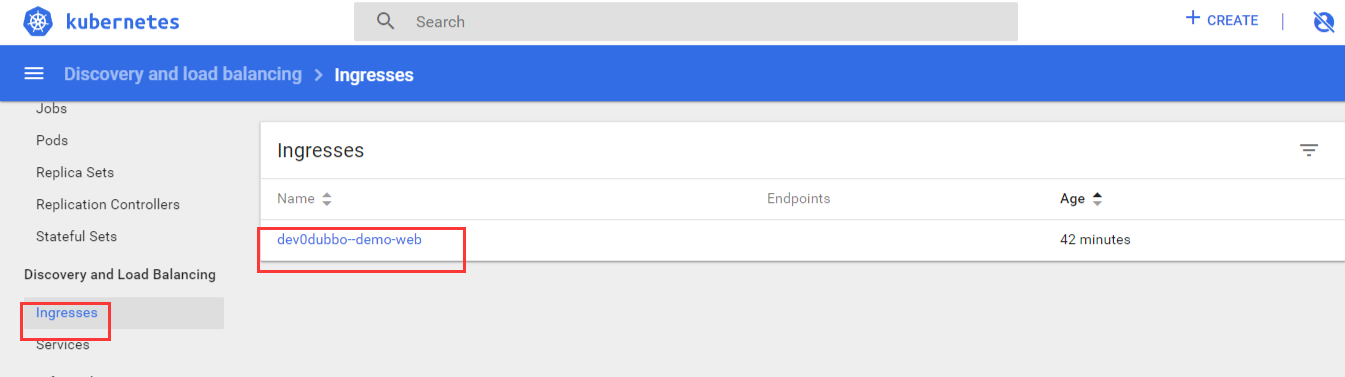

Delete the dubbo-demo-consumer Service and Ingress in the dev, test, and prod environments

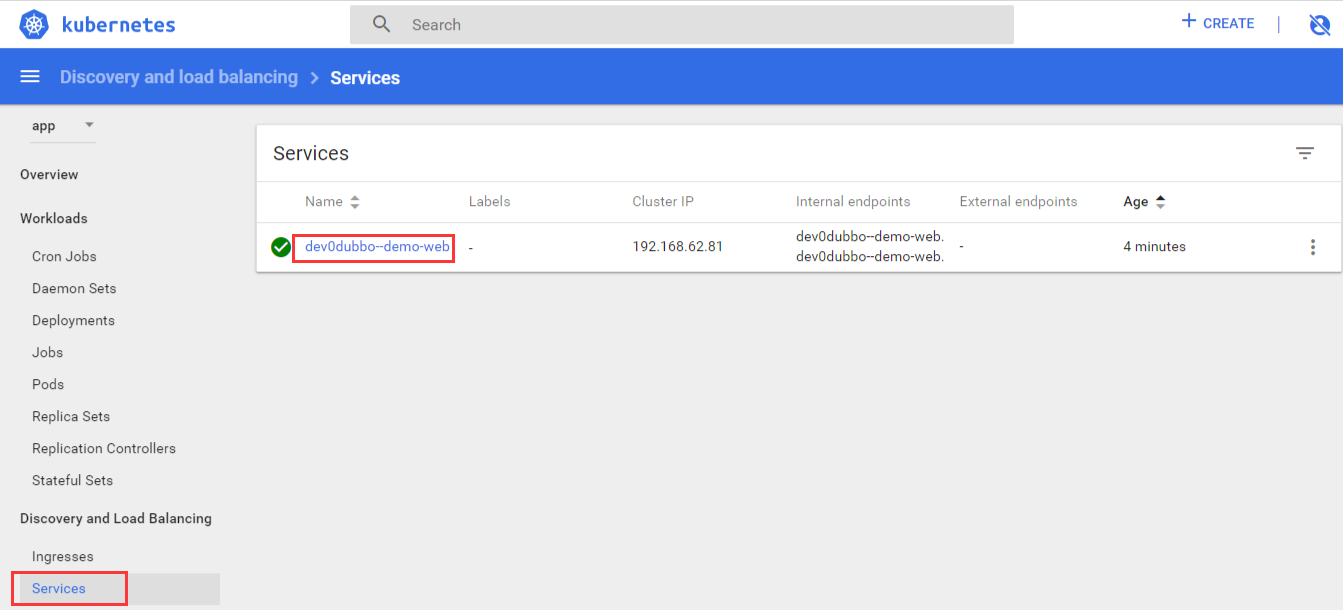
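Removing the old objects in three environments can also be scripted. The sketch below makes some assumptions. It assumes the old objects are a plain Service and Ingress both named dubbo-demo-consumer. It also assumes the test and prod namespaces are called `test` and `prod`, since only `app` is named in this section. If the old ingress was created as a Traefik IngressRoute, delete `ingressroute` instead. `cleanup_consumer` is a hypothetical helper, and with `DRY_RUN=1` it only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: delete the hand-written dubbo-demo-consumer Service and Ingress
# so that the Spinnaker-managed ones take over. "test" and "prod" are assumed names.
NAMESPACES="${NAMESPACES:-app test prod}"

cleanup_consumer() {
  local ns run=""
  [ "${DRY_RUN:-0}" = "1" ] && run="echo"
  for ns in $NAMESPACES; do
    # --ignore-not-found keeps going when an object is already gone.
    $run kubectl -n "$ns" delete service dubbo-demo-consumer --ignore-not-found
    $run kubectl -n "$ns" delete ingress dubbo-demo-consumer --ignore-not-found
  done
}
```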

Only the new Service remains.

To modify it, click here:

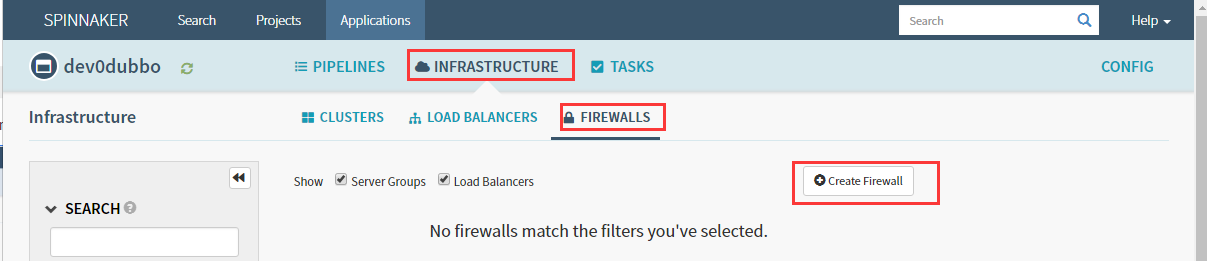

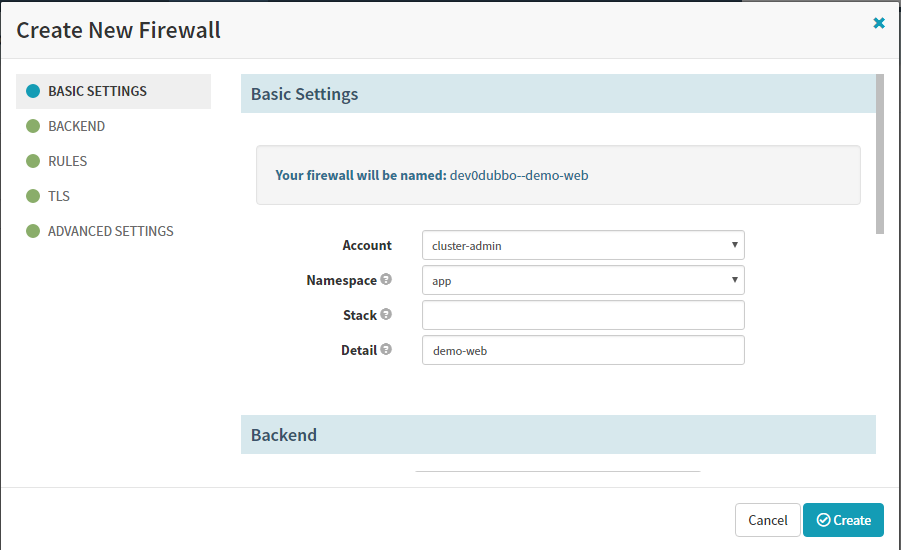

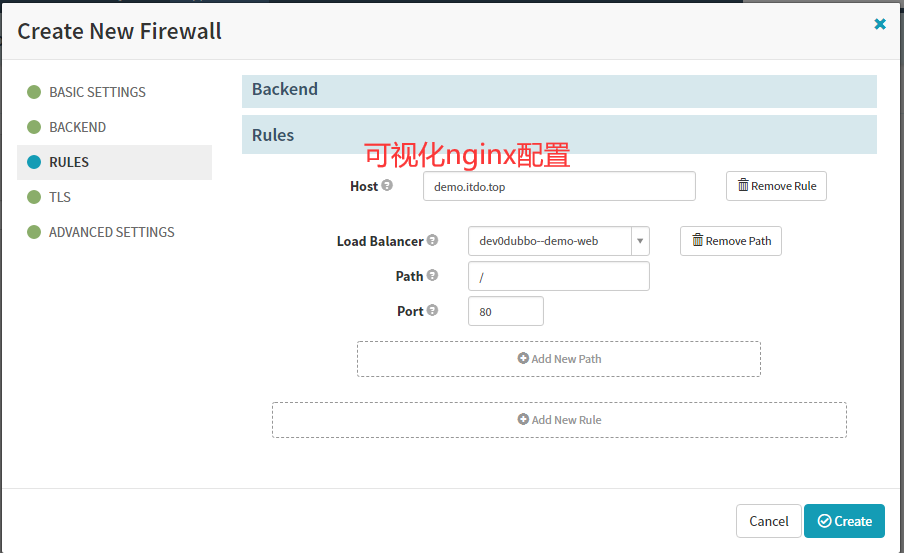

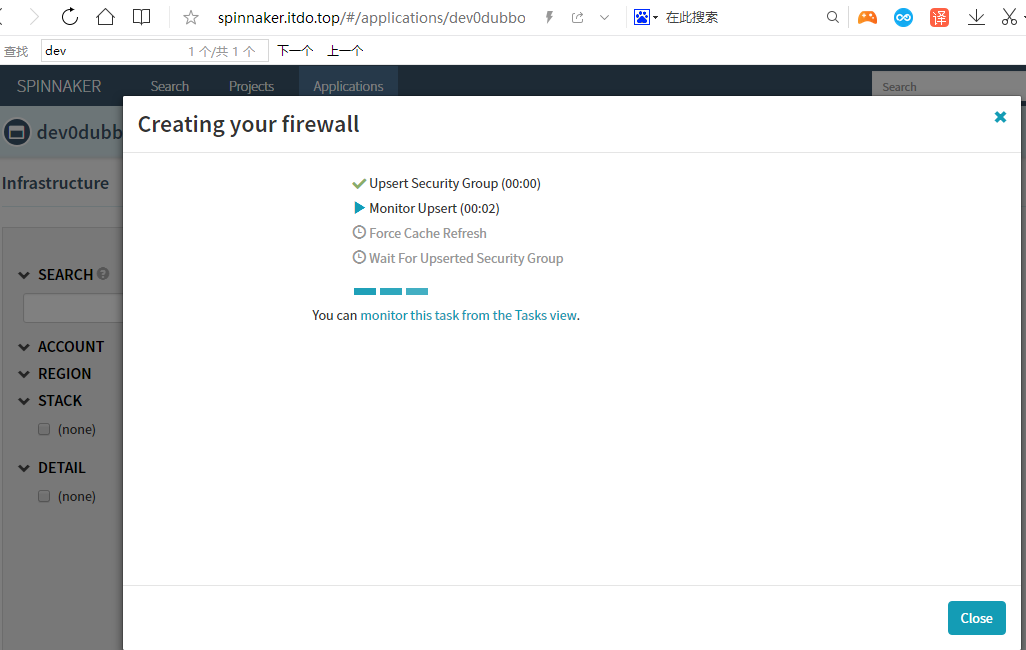

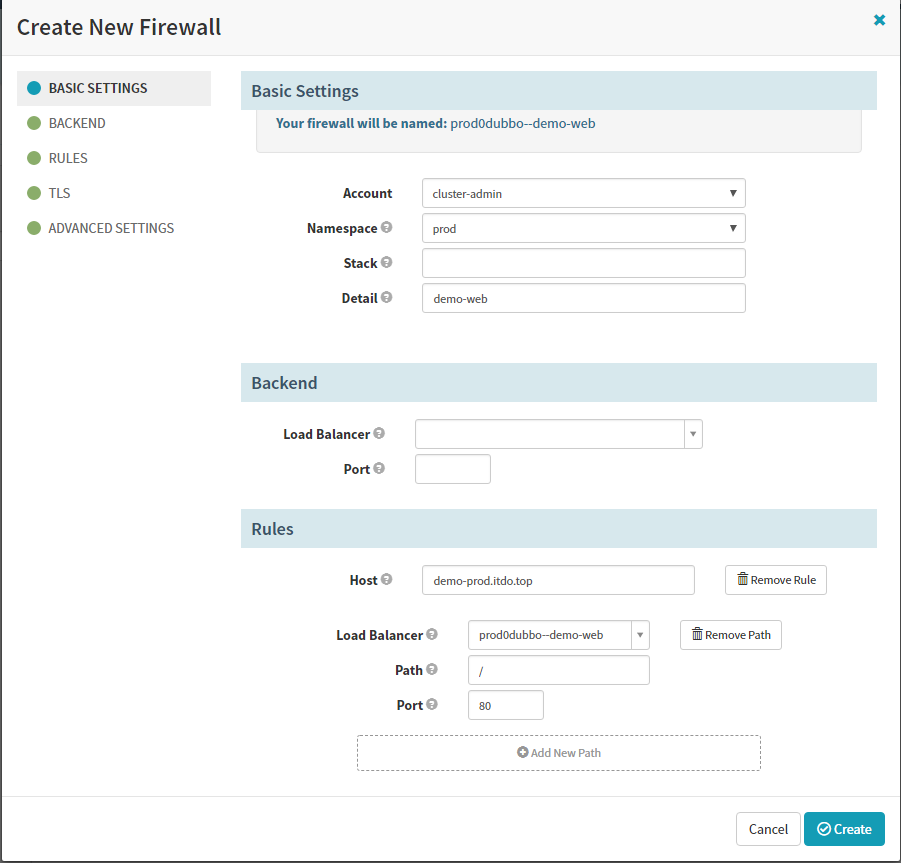

7.4 Create Firewall(ingress)

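Go back to Pipelines and continue with Add stage. The Service and Ingress must be created before the Deployment, because some of the Deployment's settings refer to them.

Account: cluster-admin (select the cluster-admin account created earlier)

Namespace: app (select app, the development environment)

Detail: dubbo-demo-web

Containers: select two images, the main image and a filebeat sidecar image

history limit: number of old revisions to keep

max surge: maximum number of Pods that may be created above the desired replica count during a rolling update

max unavailable: maximum number of Pods that may be unavailable during a rolling update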

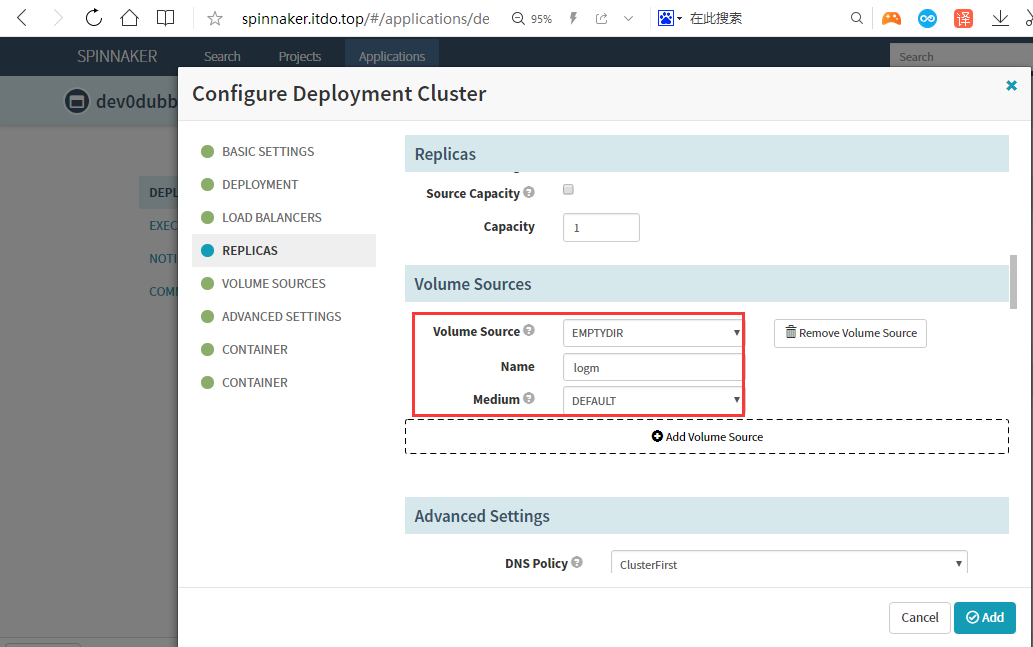

volumes:
- emptyDir: {}
  name: logm

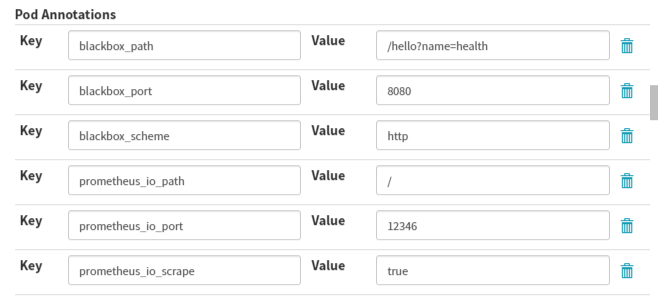

Prometheus monitoring annotations:

annotations:
  blackbox_path: "/hello?name=health"
  blackbox_port: "8080"
  blackbox_scheme: "http"
  prometheus_io_scrape: "true"
  prometheus_io_port: "12346"
  prometheus_io_path: "/"

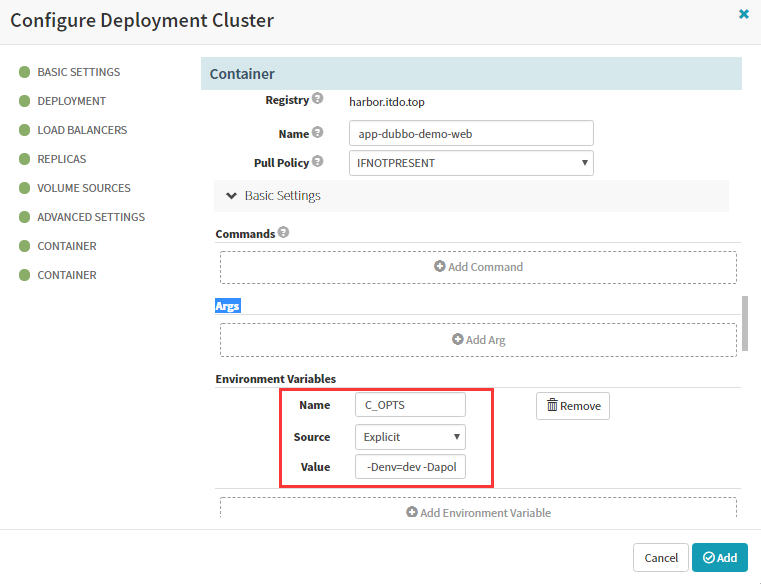

env:
- name: C_OPTS
  value: -Denv=fat -Dapollo.meta=http://config-test.itdo.top
volumeMounts:
- mountPath: /opt/tomcat/logs
  name: logm

Configure the second container:

env:
- name: ENV
  value: dev (for the test environment, use value: test)
- name: PROJ_NAME
  value: dubbo-demo-web
volumeMounts:
- mountPath: /logm
  name: logm

Finally, finish adding the containers.

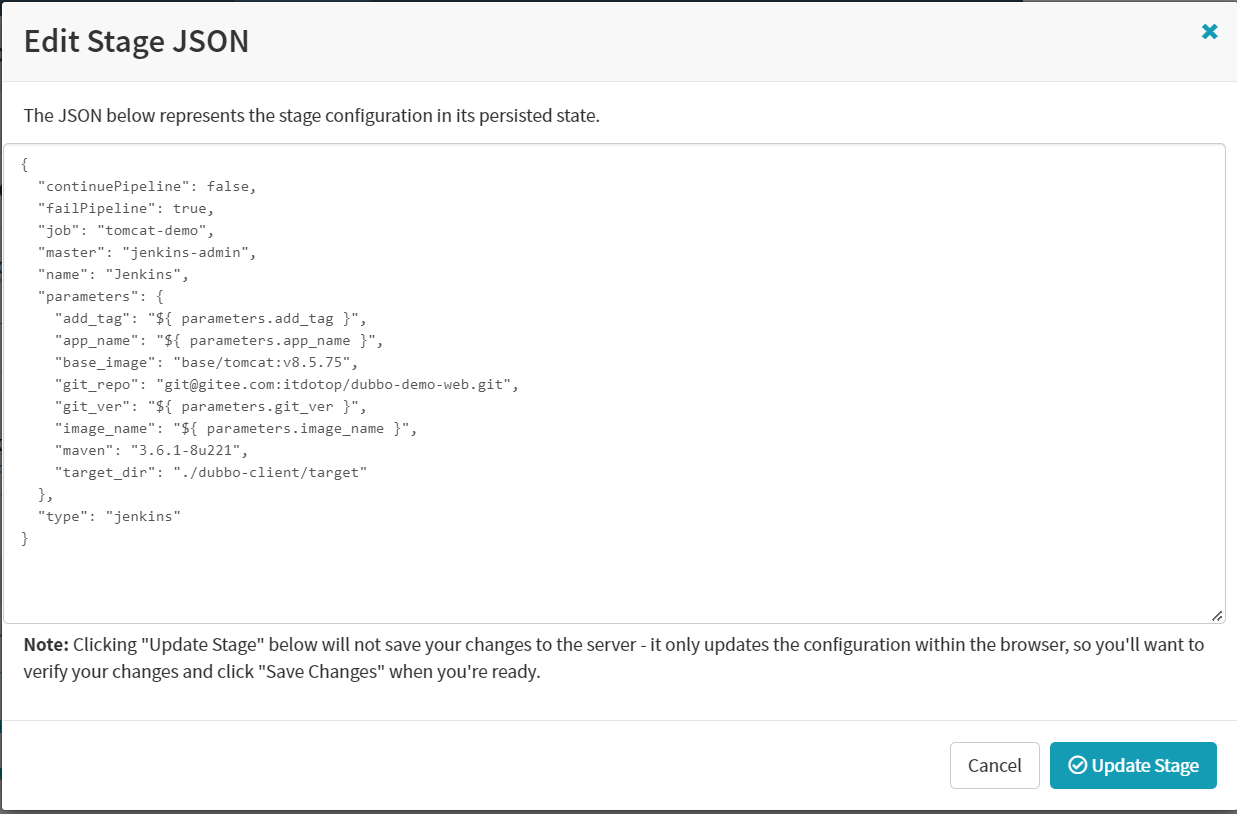

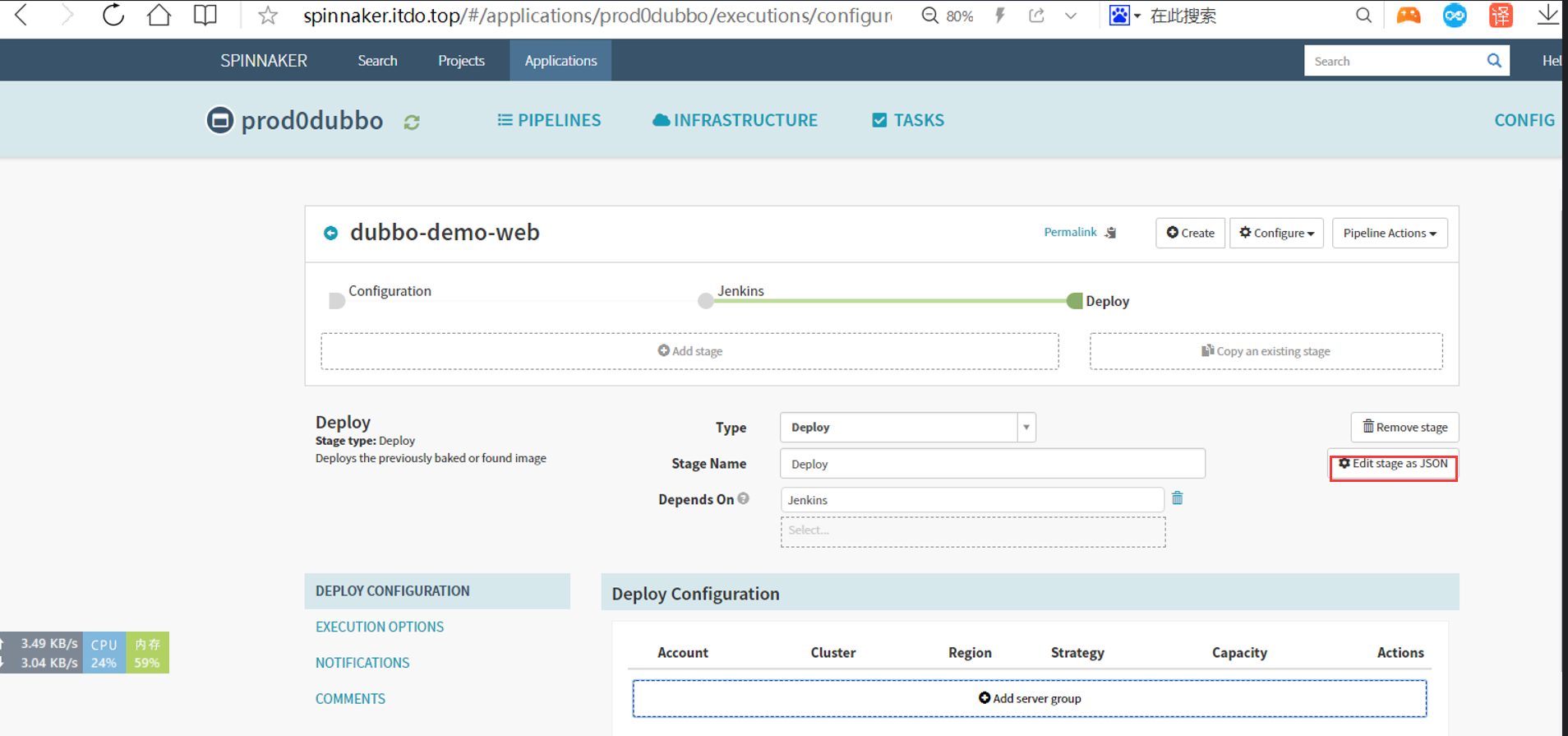

7.5 Edit stage as JSON and change the image to use variables

"imageDescription": {
  "account": "harbor",
  "imageId": "harbor.itdo.top/${parameters.image_name}:${parameters.git_ver}_${parameters.add_tag}",
  "registry": "harbor.itdo.top",
  "repository": "${parameters.image_name}",
  "tag": "${parameters.git_ver}_${parameters.add_tag}"
},

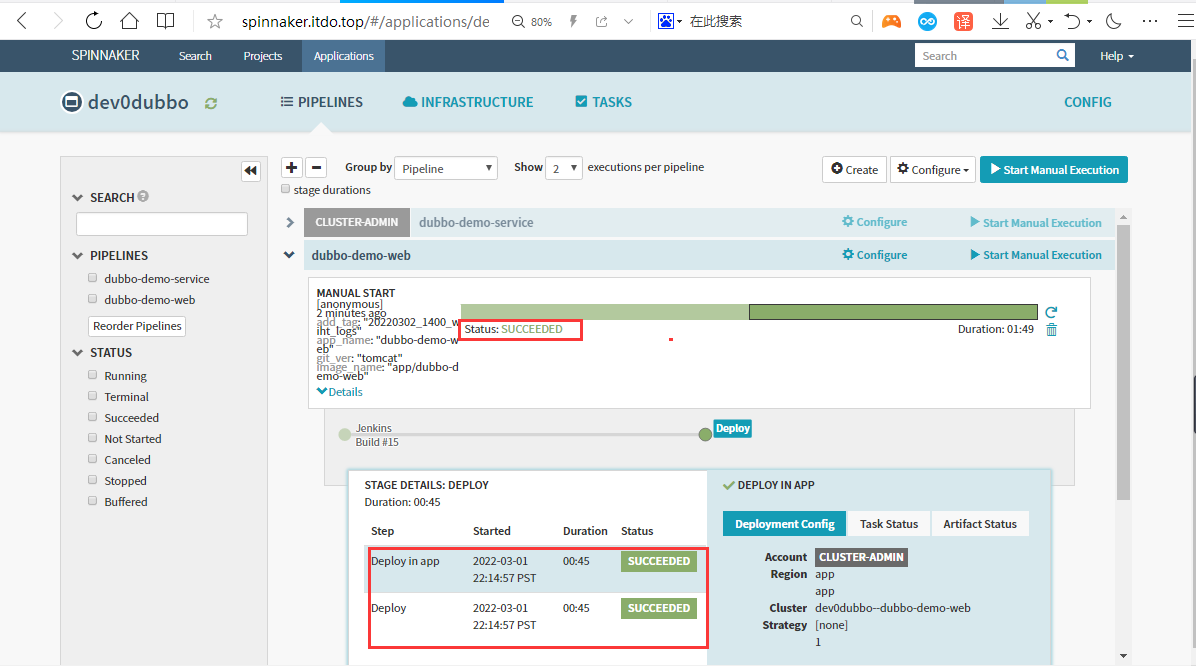

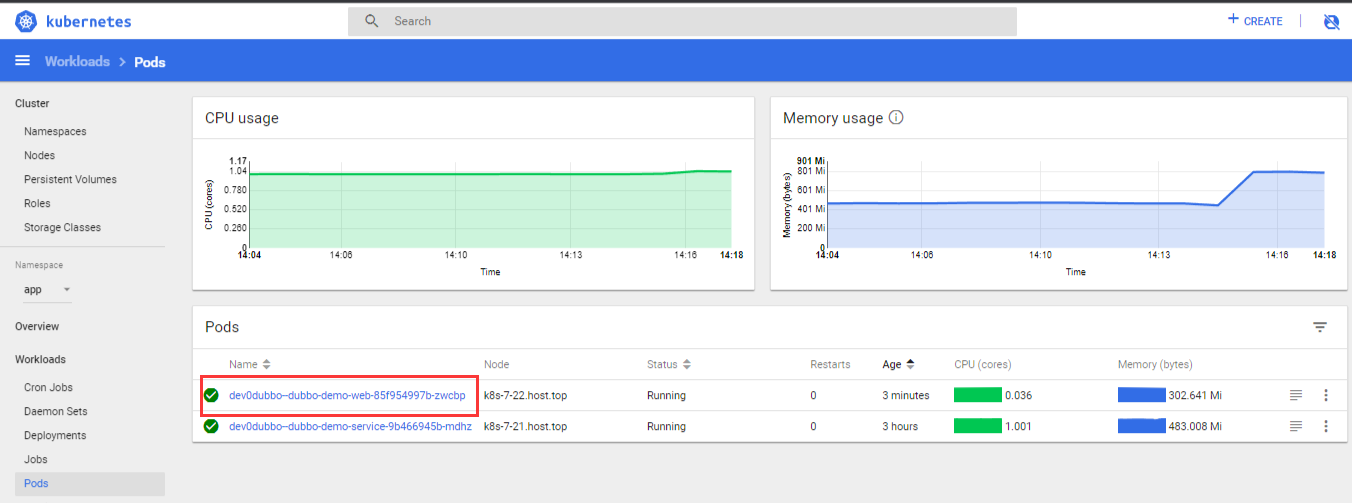

7.6 Automated release

Release succeeded

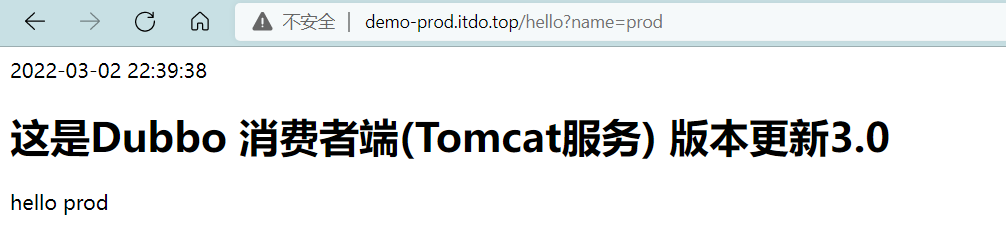

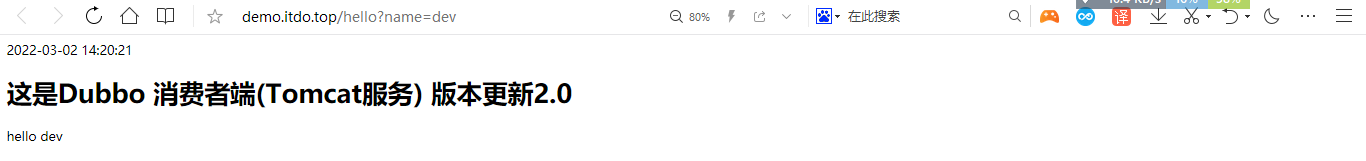

7.7 Verify in a browser

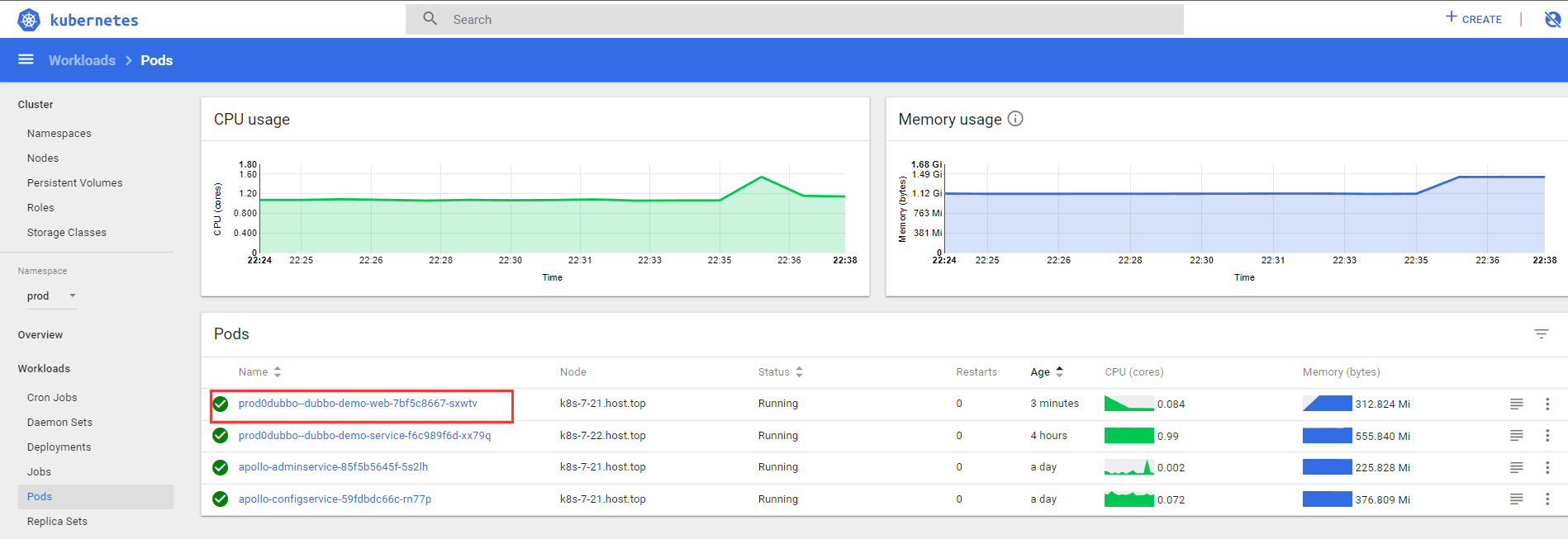

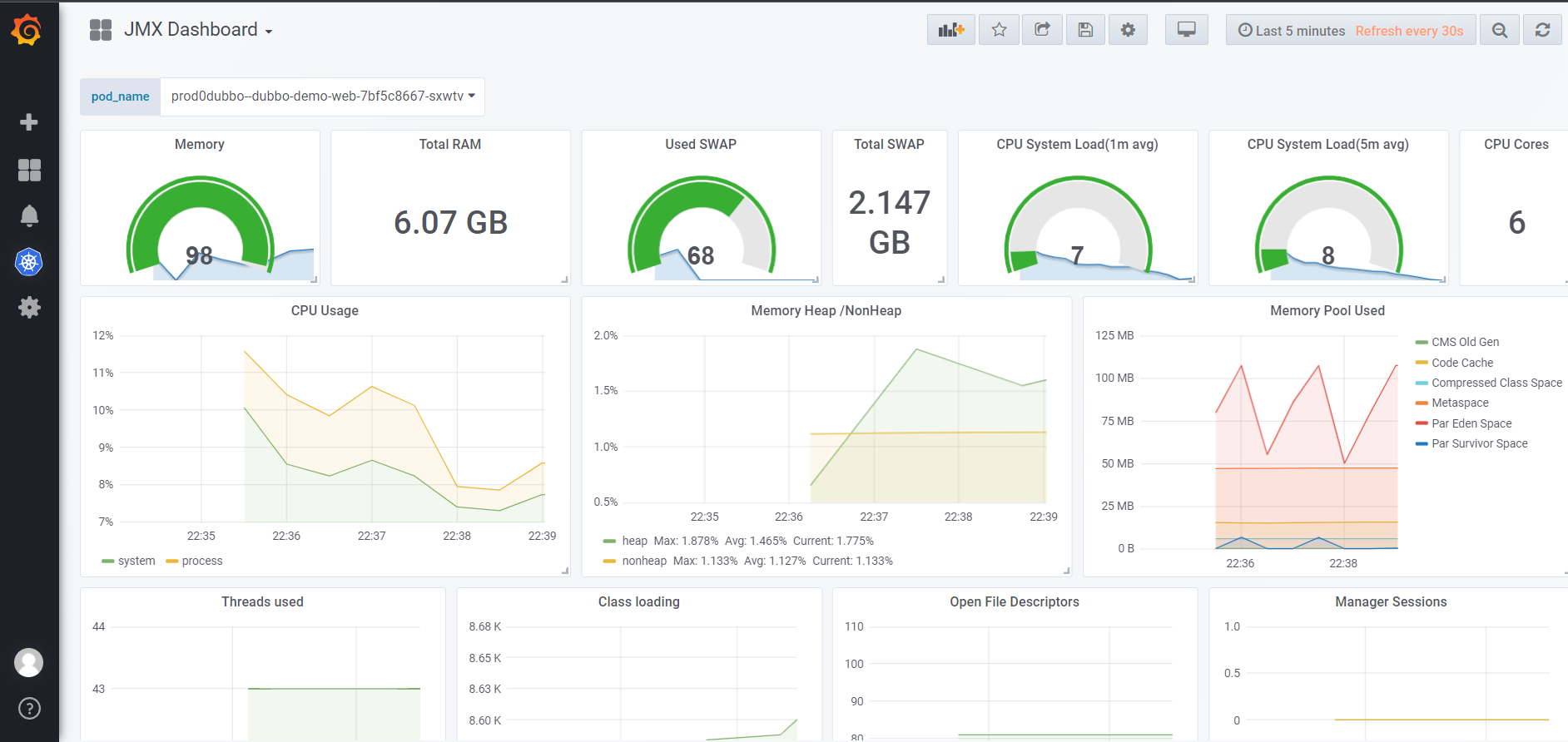

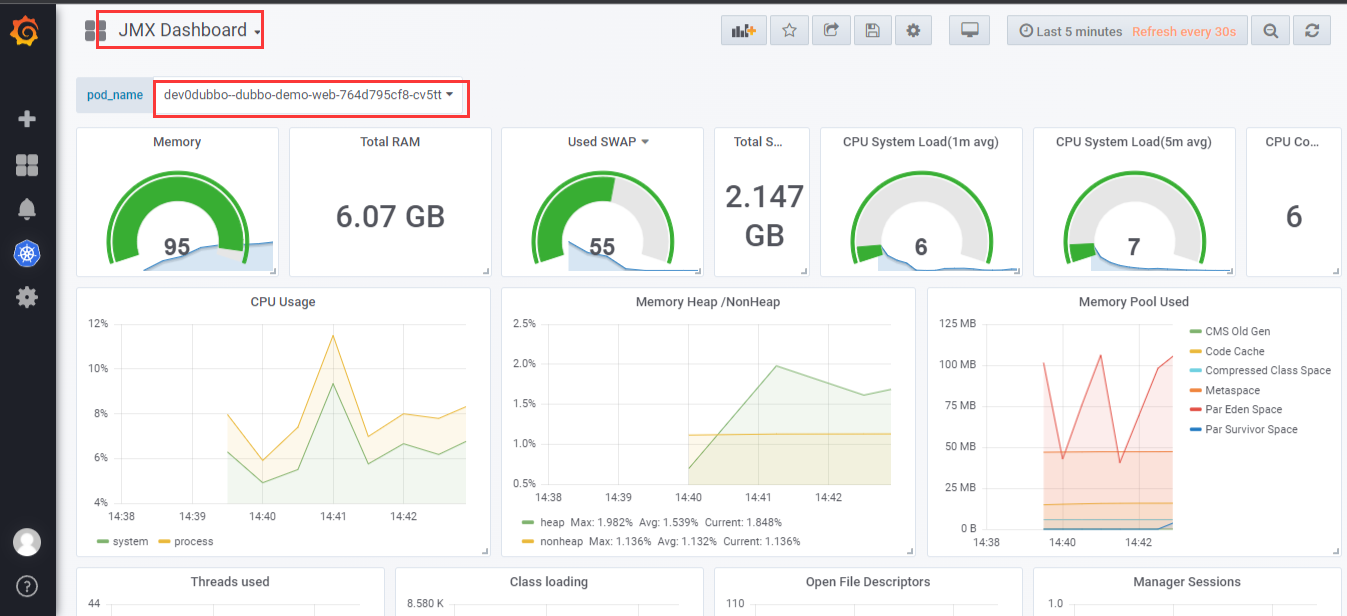

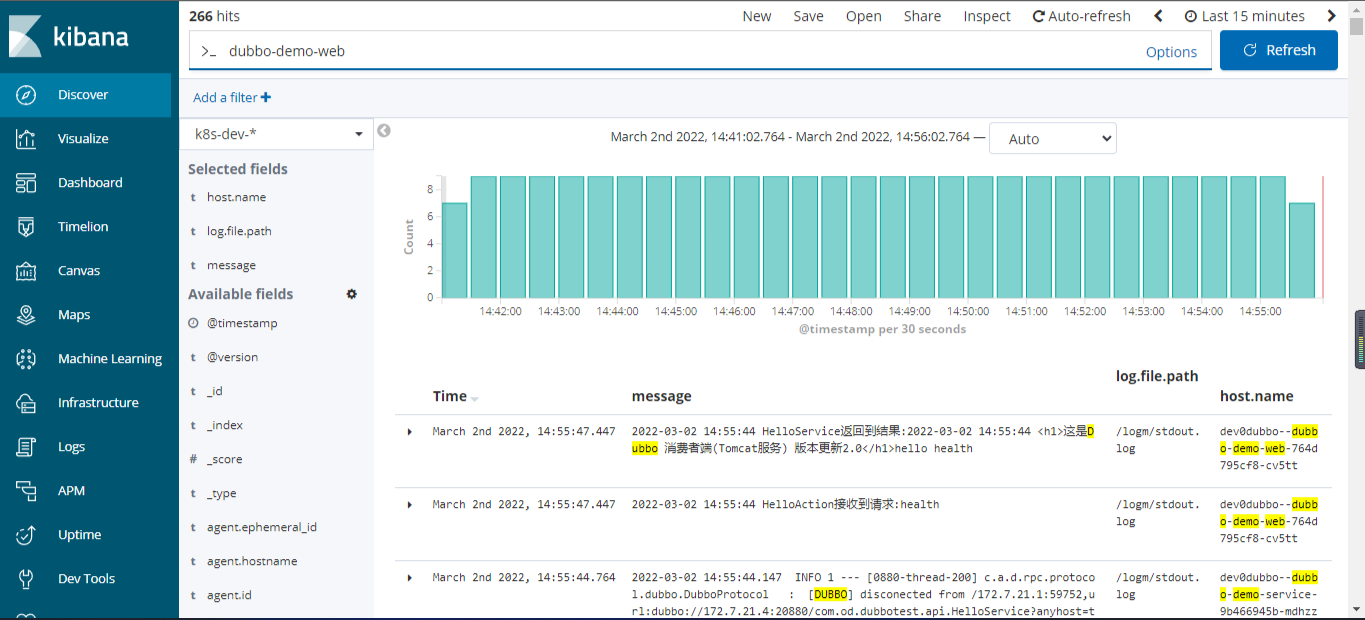

查看监控

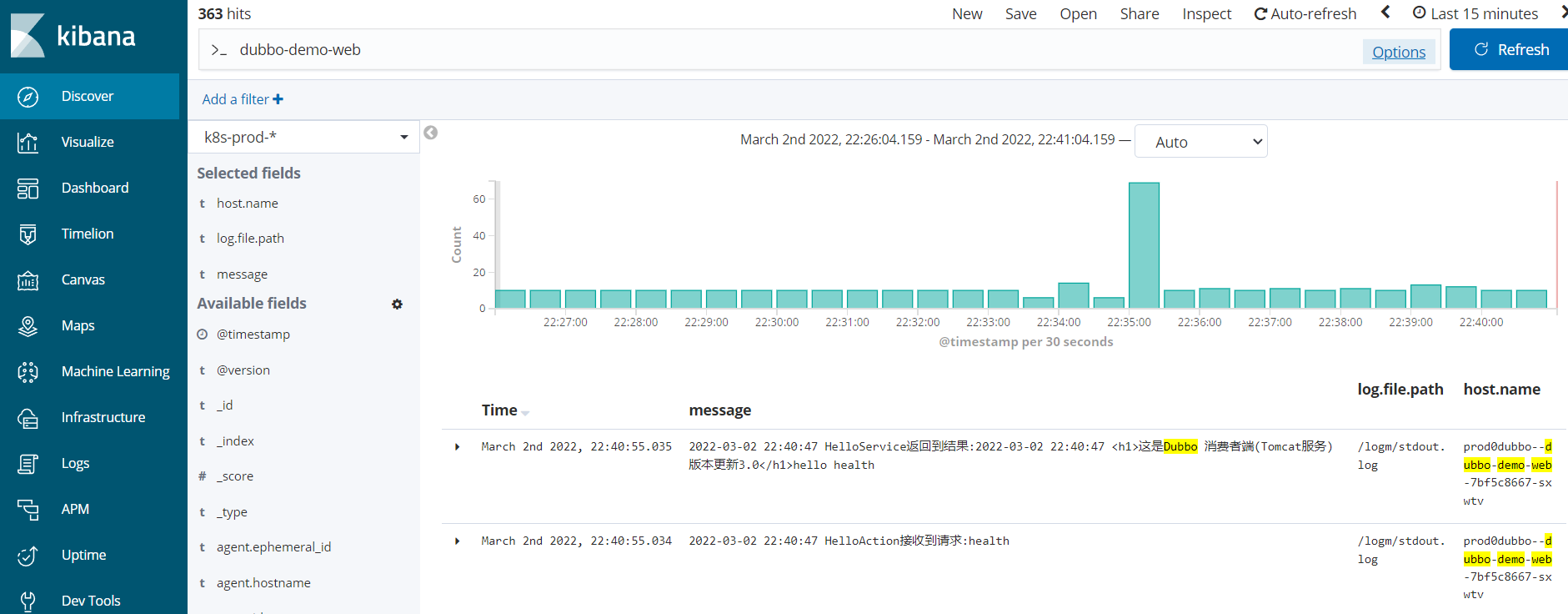

检查日志

8. Releasing with Spinnaker in production

8.1 Log in to Gitee and modify the code

8.2 Developers release a build and testers verify it

8.3 Go live with Spinnaker

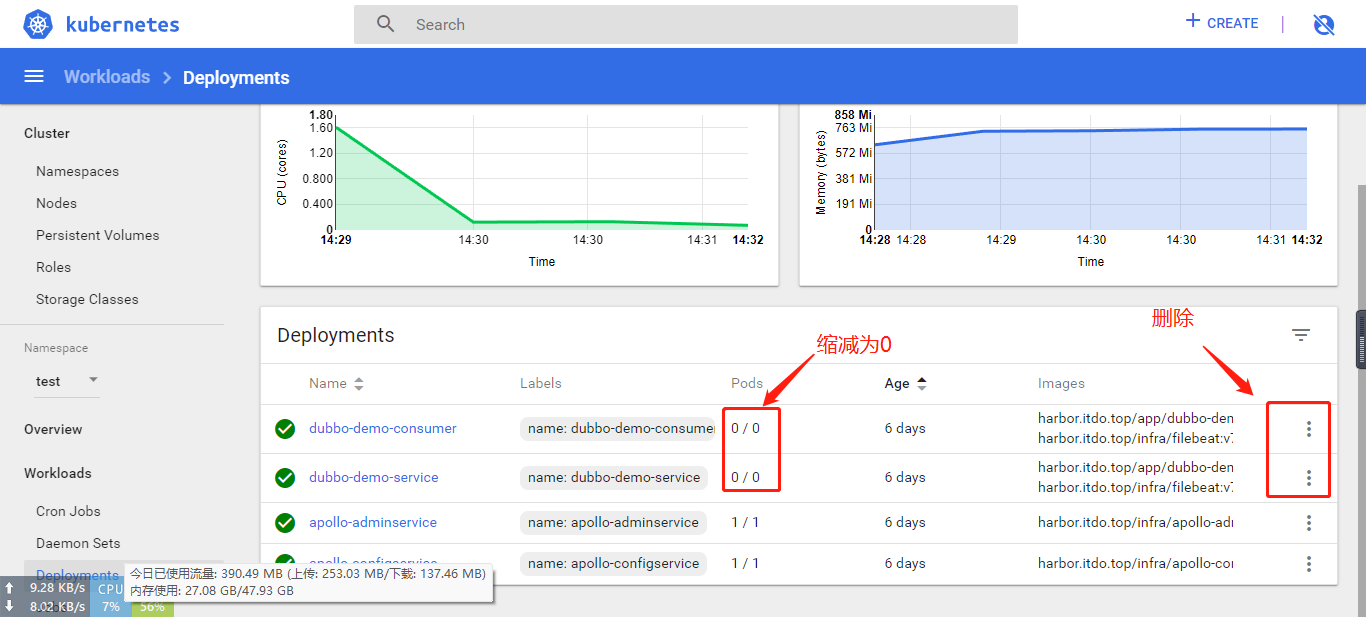

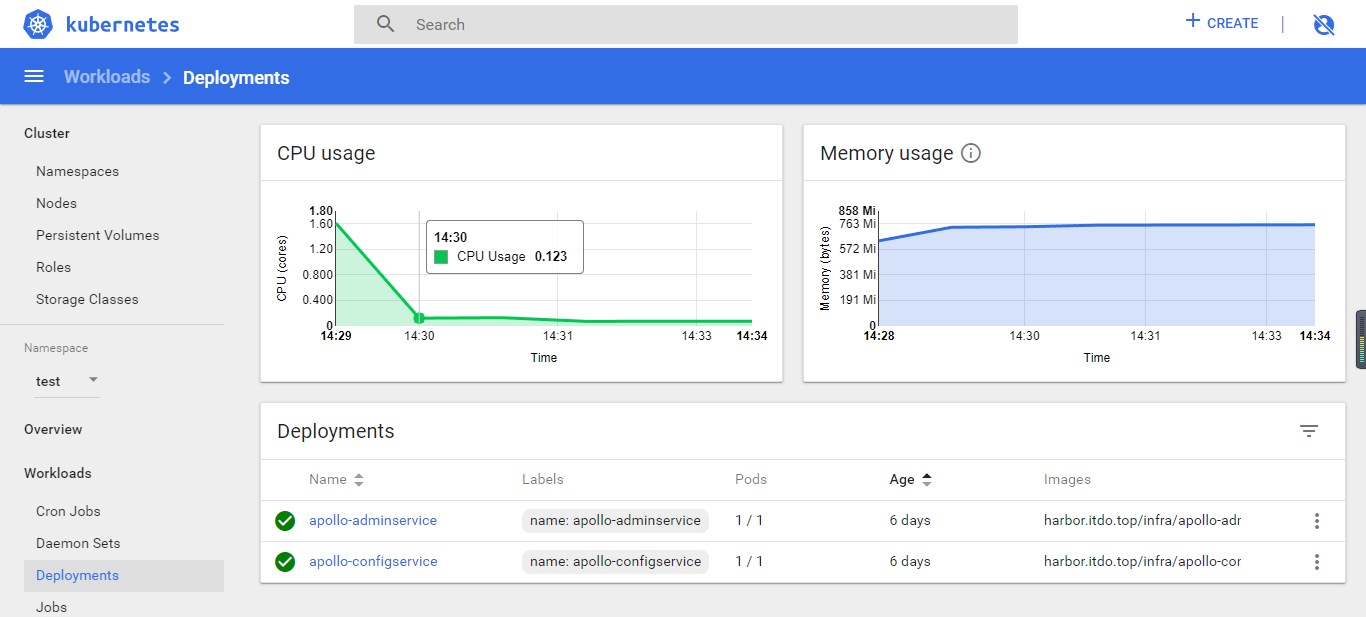

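Delete the resource manifests of the dubbo-demo provider and consumer services in the prod environment, and also delete the prod Service and Ingress.

Create the Prod application

Deploying dubbo-demo-service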

Create new Pipeline

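Create stage – Configuration

Add the following four parameters.

This pipeline builds and releases dubbo-demo-service, so the default project name and image name are essentially fixed.

1. name: app_name
   required: true
   Default Value: dubbo-demo-service
   description: name of the project's Git repository

2. name: git_ver
   required: true
   description: project version, commit ID, or branch

3. name: image_name
   required: true
   Default Value: app/dubbo-demo-service
   description: image name, in the form repository/image

4. name: add_tag
   required: true
   description: part of the image tag, appended after git_ver; use the format YYYYmmdd_HHMM

Click Save to save the configuration.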

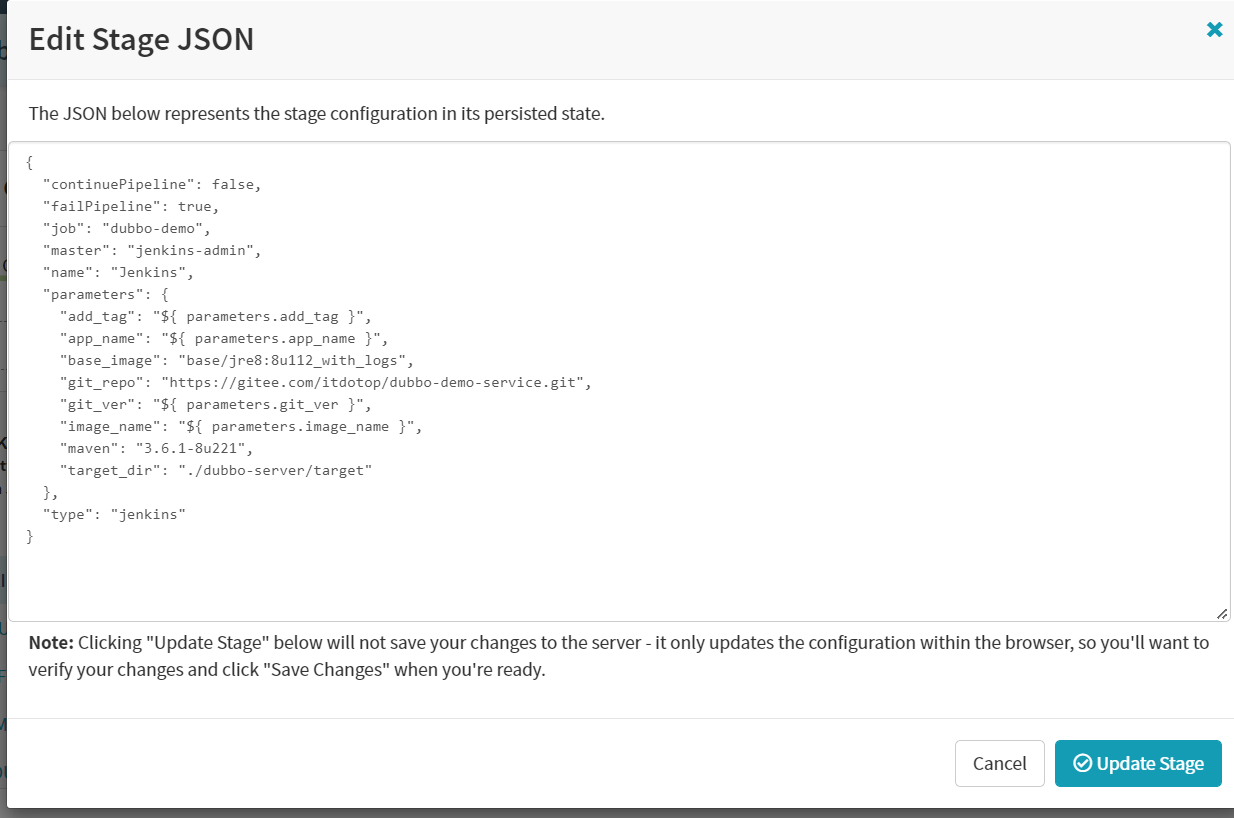

Create stage–Jenkins

For convenience, copy the stage JSON from the test environment, adjust its parameters, and paste it in.

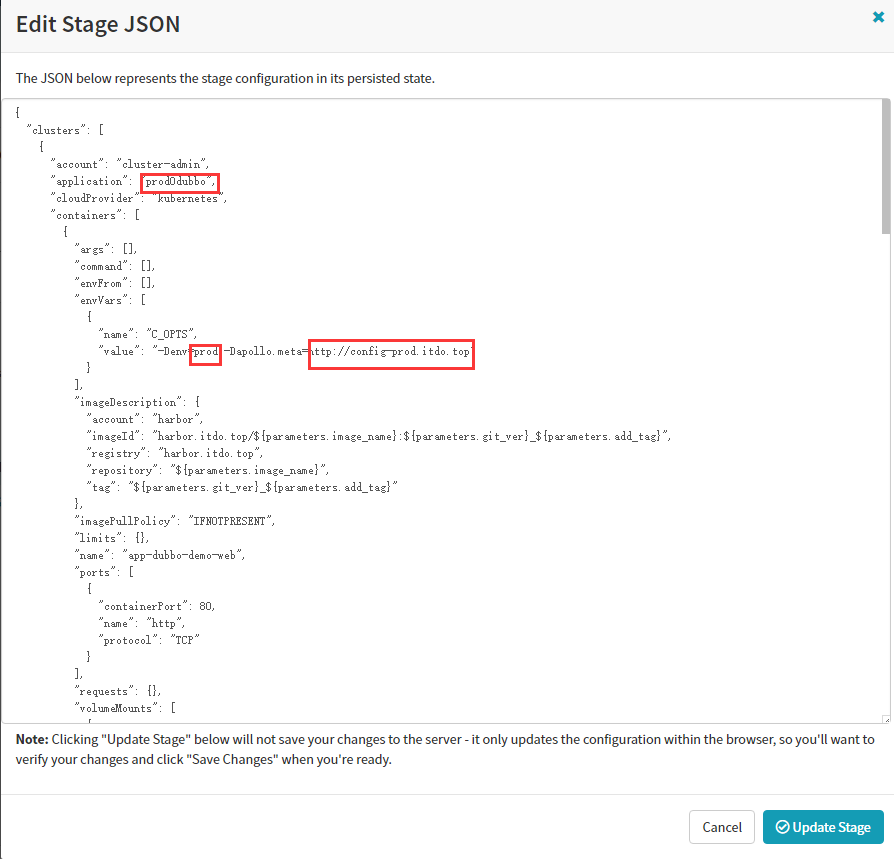

Create stage–deploy

For convenience, copy the stage JSON from the test environment, adjust its parameters, and paste it in.

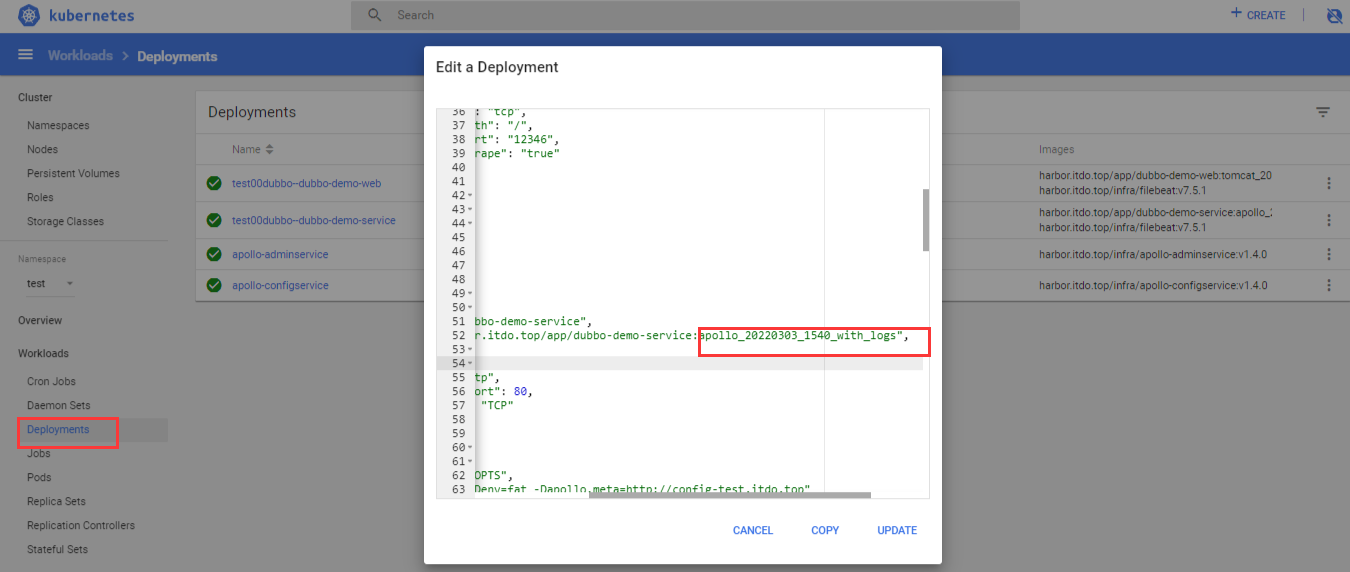
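When you copy the test stage JSON, the namespace is the most obvious field to change. Below is a sketch of that one substitution with sed. It assumes the namespaces are named `test` and `prod`. Every other value that differs between environments, such as C_OPTS and the sidecar ENV value, still has to be edited by hand. `to_prod_namespace` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: rewrite "namespace": "test" to "namespace": "prod" in stage JSON
# read from stdin. Other environment-specific fields are left untouched on purpose.
to_prod_namespace() {
  sed -E 's/"namespace"[[:space:]]*:[[:space:]]*"test"/"namespace": "prod"/g'
}
```

For example, `to_prod_namespace < test-stage.json > prod-stage.json`, where both file names are placeholders.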

Check the test environment to confirm the image version that passed testing
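The tag of an image that passed testing has the form <git_ver>_<add_tag>, so you can recover the two parameters for the prod run from it. The sketch below assumes the add_tag part is YYYYmmdd_HHMM, and it allows git_ver itself to contain underscores. You could read the image reference with something like `kubectl -n test get deploy <deployment> -o jsonpath='{.spec.template.spec.containers[0].image}'`. In that command, the namespace and deployment name are placeholders, and container 0 is assumed to be the main container. `split_image_tag` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: split an image reference into the git_ver and add_tag pipeline parameters.
# Assumes the tag is <git_ver>_<YYYYmmdd>_<HHMM>.
split_image_tag() {
  local ref="$1" tag
  tag="${ref##*:}"                        # text after the last ":" is the tag
  echo "git_ver=${tag%_*_*}"              # drop the trailing _YYYYmmdd_HHMM
  echo "add_tag=${tag#"${tag%_*_*}_"}"    # keep only YYYYmmdd_HHMM
}
```

For example, `split_image_tag harbor.itdo.top/app/dubbo-demo-service:master_20220301_1530` prints `git_ver=master` and `add_tag=20220301_1530`.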

Start Manual

Deploying dubbo-demo-web

Create new Pipeline

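Create stage – Configuration

Add the following four parameters.

This pipeline builds and releases dubbo-demo-web, so the default project name and image name are essentially fixed.

1. name: app_name
   required: true
   Default Value: dubbo-demo-web
   description: name of the project's Git repository

2. name: git_ver
   required: true
   description: project version, commit ID, or branch

3. name: image_name
   required: true
   Default Value: app/dubbo-demo-web
   description: image name, in the form repository/image

4. name: add_tag
   required: true
   description: part of the image tag, appended after git_ver; use the format YYYYmmdd_HHMM

Click Save to save the configuration.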

Create stage–Jenkins

For convenience, copy the stage JSON from the test environment, adjust its parameters, and paste it in.

Create New Load Balancer

First delete the dubbo-demo-consumer Service and Ingress in the prod environment

Create New FIREWALLS

Create stage–deploy

For convenience, copy the stage JSON from the test environment, adjust its parameters, and paste it in.

Start Manual

Verify the release