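Notes:

1. If the data on some nodes of an etcd cluster is corrupted but at least one node is still running normally, the data on that healthy node can be used to restore the failed nodes.

2. If no node can start and every node reports data corruption of the form panic: invalid freelist page, page type is leaf, the only way back is to restore from a backup. If no backup was ever taken, the data has to be discarded and the etcd cluster reinitialized.

This article does not end with a successful data recovery; it only records the troubleshooting process and the reasoning behind it.

Background:

The 3 etcd nodes of a test environment all ran on the same physical host. After a power failure took the host down, none of the etcd nodes could start.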

[root@k8s-7-21 etcd]# supervisorctl status

etcd-server-7-21 FATAL Exited too quickly (process log may have details)

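Current state: none of the 3 nodes can start; each one fails at startup with panic: invalid freelist page, page type is leaf

Troubleshooting process

1. The startup error is as follows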

[root@k8s-7-21 etcd]# ./etcd-server-startup.sh

2022-02-22 09:53:36.753971 I | etcdmain: etcd Version: 3.1.20

2022-02-22 09:53:36.754012 I | etcdmain: Git SHA: 992dbd4d1

2022-02-22 09:53:36.754014 I | etcdmain: Go Version: go1.8.7

2022-02-22 09:53:36.754016 I | etcdmain: Go OS/Arch: linux/amd64

2022-02-22 09:53:36.754018 I | etcdmain: setting maximum number of CPUs to 6, total number of available CPUs is 6

2022-02-22 09:53:36.754054 N | etcdmain: the server is already initialized as member before, starting as etcd member...

2022-02-22 09:53:36.754118 I | embed: peerTLS: cert = ./certs/etcd-peer.pem, key = ./certs/etcd-peer-key.pem, ca = ./certs/ca.pem, trusted-ca = ./certs/ca.pem, client-cert-auth = true

2022-02-22 09:53:36.754630 I | embed: listening for peers on https://10.4.7.21:2380

2022-02-22 09:53:36.754642 W | embed: The scheme of client url http://127.0.0.1:2379 is HTTP while peer key/cert files are presented. Ignored key/cert files.

2022-02-22 09:53:36.754645 W | embed: The scheme of client url http://127.0.0.1:2379 is HTTP while client cert auth (--client-cert-auth) is enabled. Ignored client cert auth for this url.

2022-02-22 09:53:36.754654 I | embed: listening for client requests on 127.0.0.1:2379

2022-02-22 09:53:36.754661 I | embed: listening for client requests on 10.4.7.21:2379

panic: invalid freelist page: 167, page type is leaf

goroutine 106 [running]:

github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt.(*freelist).read(0xc4201ff020, 0x7feb716c6000)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt/freelist.go:237 +0x35b

github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt.(*DB).loadFreelist.func1()

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt/db.go:290 +0x1bf

sync.(*Once).Do(0xc420114330, 0xc420046dc8)

/usr/local/google/home/jpbetz/.gvm/gos/go1.8.7/src/sync/once.go:44 +0xbe

github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt.(*DB).loadFreelist(0xc4201141e0)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt/db.go:293 +0x57

github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt.Open(0xc4201fef00, 0x25, 0x180, 0x1363fa0, 0x0, 0x0, 0x0)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt/db.go:260 +0x3f6

github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/mvcc/backend.newBackend(0xc4201fef00, 0x25, 0x5f5e100, 0x2710, 0xd01060)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/mvcc/backend/backend.go:112 +0x61

github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/mvcc/backend.NewDefaultBackend(0xc4201fef00, 0x25, 0xa72749, 0x432538)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/mvcc/backend/backend.go:108 +0x4d

github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/etcdserver.NewServer.func1(0xc420206800, 0xc4201fef00, 0x25, 0xc42019f800)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/etcdserver/server.go:275 +0x39

created by github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/etcdserver.NewServer

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/etcdserver/server.go:277 +0x4bc

[root@k8s-7-21 etcd]#

Note: all 3 nodes fail with panic: invalid freelist page: 167, page type is leaf, which indicates that the db file is corrupted.
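
Since every later repair attempt works on the damaged db file, it can help to set aside an untouched, checksummed copy first. The following is a minimal sketch, not part of the original procedure; preserve_db is a hypothetical helper name, and the destination directory in the usage note is an assumption.

```shell
#!/usr/bin/env bash
# Sketch: keep a checksummed copy of a damaged db file before any repair attempt.
# preserve_db is a hypothetical helper, not an etcd or bbolt command.
preserve_db() {
    local src="$1"
    local dest_dir="$2"
    if [ ! -f "$src" ]; then
        echo "no such file: $src" >&2
        return 1
    fi
    mkdir -p "$dest_dir" || return 1
    local copy
    copy="$dest_dir/db.$(date +%Y%m%d%H%M%S)"
    # -p keeps the original timestamps and mode on the copy.
    cp -p "$src" "$copy" || return 1
    # Store the checksum next to the copy so it can be verified later
    # with: sha256sum -c <copy>.sha256
    sha256sum "$copy" > "$copy.sha256" || return 1
    echo "$copy"
}
```

A call such as preserve_db /data/etcd/etcd-server/member/snap/db /home/etcd-db-copies prints the path of the saved copy; running it on each node also makes it easy to compare the three checksums.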

2. Notes on etcd data storage

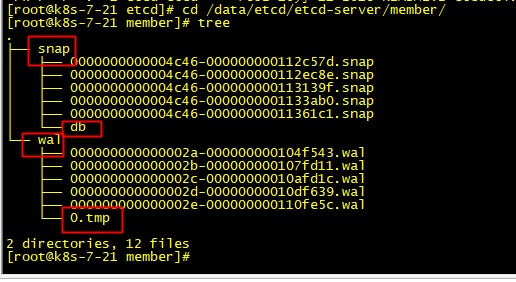

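The figure shows the file layout of the data directory at startup.

The tmp file mainly holds data records that have not been committed yet. The db file is the bbolt backend database, which stores the v3 key-value data together with cluster and member information.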

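3. Attempt a repair from a snapshot

Note: etcd release numbers and API versions are different things. The server in this cluster is release 3.1.20, but its API comes in v2 and v3, and when the community says "v3" or "v2" it usually means the API version. Starting with etcd 2.3, an experimental implementation of the brand-new v3 API was introduced. The v2 and v3 APIs use different storage engines, so the client commands are completely different as well.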

# etcdctl --version

etcdctl version: 3.0.4

API version: 2

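The official documentation states that etcd v2 and v3 data cannot be stored mixed together; see "support backup of v2 and v3 stores".

Important: if v2 data is present when backing up with v3, the restore is not affected.

If v3 data is present when backing up with v2, the restore fails.

- Backup and restore with API 2

Official documentation: https://etcd.io/docs/v2.3/admin_guide/

By default etcd stores its data under the working directory the command was started from. The data directory is split into two subdirectories: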

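snap: holds snapshot data. etcd takes snapshots to keep the number of WAL files from growing without bound; a snapshot stores the etcd data state.

wal: holds the write-ahead log, whose main role is to record the complete history of every data change. In etcd, every modification must be written to the WAL before it is committed.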
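
To make the layout concrete, here is a small sketch that checks whether a data directory contains the expected pieces. It assumes the member/snap and member/wal layout that matches the db path used later in this article (/data/etcd/etcd-server/member/snap/db); describe_data_dir is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: report which parts of an etcd data directory are present.
# describe_data_dir is a hypothetical helper, not an etcd command.
describe_data_dir() {
    local dir="$1"
    local rc=0
    local sub
    for sub in member/snap member/wal; do
        if [ -d "$dir/$sub" ]; then
            echo "ok: $sub"
        else
            echo "missing: $sub"
            rc=1
        fi
    done
    # The backend database file sits inside the snap directory.
    if [ -f "$dir/member/snap/db" ]; then
        echo "ok: member/snap/db"
    else
        echo "missing: member/snap/db"
        rc=1
    fi
    return "$rc"
}
```

For example, describe_data_dir /data/etcd/etcd-server returns a non-zero status if any of the three entries is absent. It only checks for presence and says nothing about whether the files are intact.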

Backup

#etcdctl backup \

--data-dir %data_dir% \

[--wal-dir %wal_dir%] \

--backup-dir %backup_data_dir% \

[--backup-wal-dir %backup_wal_dir%]

Restore

#etcd \

-data-dir=%backup_data_dir% \

[-wal-dir=%backup_wal_dir%] \

--force-new-cluster

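The restore overwrites the snapshot metadata (member ID and cluster ID), so a new cluster has to be started.

- Backup and restore with API 3

Official documentation: https://etcd.io/docs/v3.5/quickstart/

API 3 has to be selected explicitly through the ETCDCTL_API environment variable.

Set it on the command line: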

# export ETCDCTL_API=3

Back up the data:

# etcdctl --endpoints localhost:2379 snapshot save snapshot.db

Because none of our 3 nodes could start, taking a snapshot was impossible:

[root@k8s-7-12 etcd]# /opt/etcd/etcdctl snapshot save /tmp/snapshot.db.20200628 --cacert=./certs/ca.pem --cert=./certs/etcd-peer.pem --key=./certs/etcd-peer-key.pem --endpoints="https://10.4.7.12:2380"

2022-02-22 00:43:42.032921 I | warning: ignoring ServerName for user-provided CA for backwards compatibility is deprecated

Error: grpc: timed out when dialing

Restore:

# etcdctl snapshot restore snapshot.db --name m3 --data-dir=/home/etcd_data

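After the restore, change the ownership of the restored files to etcd:etcd

--name: the member name; optional, defaults to default (which gives the data directory default.etcd)

--data-dir: the data directory to restore into

The recommendation is to leave name unset but set data-dir, pointing it at the data-dir configured for the etcd service

The integrity of the snapshot can optionally be verified at restore time. A snapshot taken with etcdctl snapshot save carries an integrity hash that etcdctl snapshot restore checks. A snapshot copied out of the data directory (the data-dir in the configuration file) has no integrity hash and can only be restored with --skip-hash-check

A restore test using the db file still fails with "panic: invalid freelist page: 160, page type is leaf"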

[root@k8s-7-12 etcd]# cp -rf /data/etcd/etcd-server/member/snap/db /home/

[root@k8s-7-12 etcd]# cp -rf /data/etcd/etcd-server/member/snap/db /data/etcd/etcd-server.bak.20220221

[root@k8s-7-12 etcd]# rm -rf /data/etcd/etcd-server

[root@k8s-7-12 etcd]# ETCDCTL_API=3 /opt/etcd/etcdctl snapshot restore --skip-hash-check /home/db --name=etcd-server-7-12 --data-dir=/data/etcd/etcd-server --initial-cluster=etcd-server-7-12=https://10.4.7.12:2380 --initial-cluster-token=etcd-server-7-12 --initial-advertise-peer-urls=https://10.4.7.12:2380

panic: invalid freelist page: 160, page type is leaf

goroutine 1 [running]:

github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt.(*freelist).read(0xc4201ed080, 0x7f1567858000)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt/freelist.go:237 +0x35b

github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt.(*DB).loadFreelist.func1()

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt/db.go:290 +0x1bf

sync.(*Once).Do(0xc4201fe150, 0xc4201bb6b0)

/usr/local/google/home/jpbetz/.gvm/gos/go1.8.7/src/sync/once.go:44 +0xbe

github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt.(*DB).loadFreelist(0xc4201fe000)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt/db.go:293 +0x57

github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt.Open(0xc4201ecf60, 0x25, 0xc400000180, 0x116bf60, 0x0, 0x0, 0x0)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/bbolt/db.go:260 +0x3f6

github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/mvcc/backend.newBackend(0xc4201ecf60, 0x25, 0x5f5e100, 0x2710, 0x0)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/mvcc/backend/backend.go:112 +0x61

github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/mvcc/backend.NewDefaultBackend(0xc4201ecf60, 0x25, 0x0, 0x1174000)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/mvcc/backend/backend.go:108 +0x4d

github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/etcdctl/ctlv3/command.makeDB(0xc4201ecd20, 0x22, 0x7ffd979c1729, 0x8, 0x1)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/etcdctl/ctlv3/command/snapshot_command.go:375 +0x387

github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/etcdctl/ctlv3/command.snapshotRestoreCommandFunc(0xc4201e3800, 0xc4201ded90, 0x1, 0x7)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/etcdctl/ctlv3/command/snapshot_command.go:196 +0x34e

github.com/coreos/etcd/cmd/vendor/github.com/spf13/cobra.(*Command).execute(0xc4201e3800, 0xc4201ded20, 0x7, 0x7, 0xc4201e3800, 0xc4201ded20)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/spf13/cobra/command.go:572 +0x203

github.com/coreos/etcd/cmd/vendor/github.com/spf13/cobra.(*Command).ExecuteC(0x11a4e60, 0xd4522f, 0xb, 0xc4201bbed8)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/spf13/cobra/command.go:662 +0x394

github.com/coreos/etcd/cmd/vendor/github.com/spf13/cobra.(*Command).Execute(0x11a4e60, 0x0, 0x0)

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/spf13/cobra/command.go:618 +0x2b

github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/etcdctl/ctlv3.Start()

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/vendor/github.com/coreos/etcd/etcdctl/ctlv3/ctl.go:96 +0x8e

main.main()

/tmp/etcd-release-3.1.20/etcd/release/etcd/gopath/src/github.com/coreos/etcd/cmd/etcdctl/main.go:40 +0x1c2

4. Attempt to repair the data file

Install the Go environment

#mkdir /home/go && cd /home/go

#wget https://studygolang.com/dl/golang/go1.14.1.linux-amd64.tar.gz

#tar -xvf go1.14.1.linux-amd64.tar.gz

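#Create the workspace, here under /home/go, with the three conventional directories: bin (compiled executables), pkg (the .a files generated when compiling packages), and src (source code; projects are normally created under src)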

#mkdir -p /home/go/bin /home/go/pkg /home/go/src

#vim /etc/profile #set the environment variables

export GOROOT=/home/go/go

export GOPATH=/home/go

export PATH=$PATH:$GOROOT/bin:$GOPATH/bin

#Apply the configuration

#source /etc/profile

#Go uses proxy.golang.org by default, which is not reachable from mainland China; switch to a proxy that is reachable: https://goproxy.cn

#go env -w GOPROXY=https://goproxy.cn

Install the bolt client

Official: https://github.com/etcd-io/bbolt/tree/master#installing

To start using Bolt, install Go and run go get:

$ go get -u -v go.etcd.io/bbolt/...

This retrieves the library and installs the bolt command-line utility into your $GOBIN path. In our case the path is /home/go/bin/bbolt

[root@k8s-7-12 etcd]# /home/go/bin/bbolt

Bbolt is a tool for inspecting bbolt databases.

Usage:

bbolt command [arguments]

The commands are:

bench run synthetic benchmark against bbolt

buckets print a list of buckets

check verifies integrity of bbolt database

compact copies a bbolt database, compacting it in the process

dump print a hexadecimal dump of a single page

get print the value of a key in a bucket

info print basic info

keys print a list of keys in a bucket

help print this screen

page print one or more pages in human readable format

pages print list of pages with their types

page-item print the key and value of a page item.

stats iterate over all pages and generate usage stats

Use "bbolt [command] -h" for more information about a command.

Checking the db file still fails with "panic: invalid freelist page: 160, page type is leaf"

[root@k8s-7-12 bin]# /home/go/bin/bbolt check /data/etcd/etcd-server/member/snap/db

panic: invalid freelist page: 160, page type is leaf

goroutine 1 [running]:

go.etcd.io/bbolt.(*freelist).read(0x505b07, 0x7f1b50a74000)

/home/go/pkg/mod/go.etcd.io/[email protected]/freelist.go:266 +0x234

go.etcd.io/bbolt.(*DB).loadFreelist.func1()

/home/go/pkg/mod/go.etcd.io/[email protected]/db.go:323 +0xae

sync.(*Once).doSlow(0xc0000861c8, 0x10)

/home/go/go/src/sync/once.go:68 +0xd2

sync.(*Once).Do(...)

/home/go/go/src/sync/once.go:59

go.etcd.io/bbolt.(*DB).loadFreelist(0xc000086000)

/home/go/pkg/mod/go.etcd.io/[email protected]/db.go:316 +0x47

go.etcd.io/bbolt.Open({0x7ffcb9152745, 0x25}, 0x1b6, 0x0)

/home/go/pkg/mod/go.etcd.io/[email protected]/db.go:293 +0x46b

main.(*CheckCommand).Run(0xc000095e58, {0xc0000101a0, 0x1, 0x1})

/home/go/pkg/mod/go.etcd.io/[email protected]/cmd/bbolt/main.go:202 +0x1a5

main.(*Main).Run(0xc000064f40, {0xc000010190, 0x2, 0x2})

/home/go/pkg/mod/go.etcd.io/[email protected]/cmd/bbolt/main.go:112 +0x993

main.main()

/home/go/pkg/mod/go.etcd.io/[email protected]/cmd/bbolt/main.go:70 +0xb4

Trying to compact and clean up the db file fails as well

[root@k8s-7-12 etcd]# /home/go/bin/bbolt compact -o /tmp/fixed.db /data/etcd/etcd-server/member/snap/db

panic: invalid freelist page: 160, page type is leaf

goroutine 1 [running]:

go.etcd.io/bbolt.(*freelist).read(0x505b07, 0x7ff6fe887000)

/home/go/pkg/mod/go.etcd.io/[email protected]/freelist.go:266 +0x234

go.etcd.io/bbolt.(*DB).loadFreelist.func1()

/home/go/pkg/mod/go.etcd.io/[email protected]/db.go:323 +0xae

sync.(*Once).doSlow(0xc0000861c8, 0x10)

/home/go/go/src/sync/once.go:68 +0xd2

sync.(*Once).Do(...)

/home/go/go/src/sync/once.go:59

go.etcd.io/bbolt.(*DB).loadFreelist(0xc000086000)

/home/go/pkg/mod/go.etcd.io/[email protected]/db.go:316 +0x47

go.etcd.io/bbolt.Open({0x7ffd7e05d74a, 0x25}, 0x124, 0x0)

/home/go/pkg/mod/go.etcd.io/[email protected]/db.go:293 +0x46b

main.(*CompactCommand).Run(0xc0000521e0, {0xc0000100c0, 0x3, 0x3})

/home/go/pkg/mod/go.etcd.io/[email protected]/cmd/bbolt/main.go:1982 +0x2e5

main.(*Main).Run(0xc000062f40, {0xc0000100b0, 0x4, 0x4})

/home/go/pkg/mod/go.etcd.io/[email protected]/cmd/bbolt/main.go:114 +0x6f6

main.main()

/home/go/pkg/mod/go.etcd.io/[email protected]/cmd/bbolt/main.go:70 +0xb4

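5. Reinitialize the etcd cluster

etcd holds the most critical data in the cluster: the flannel network data is stored in etcd, and all other cluster data is stored there as well. Cluster components read etcd data through kube-apiserver.

Back up and then delete all etcd data, and reinitialize (do this on every node)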

[root@k8s-7-12 etcd]# cp -rf /data/etcd/etcd-server/member/snap/db /data/etcd/etcd-server.bak.20220221

[root@k8s-7-12 etcd]# rm -rf /data/etcd/etcd-server
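
A more cautious variant of the step above is to move the whole data directory aside instead of copying only the db file and deleting the rest, so the WAL and snapshot files remain available for later analysis. This is a sketch under that assumption, not what was actually run here; archive_data_dir is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: rename an etcd data directory to a timestamped archive name.
# archive_data_dir is a hypothetical helper; stop etcd before calling it.
archive_data_dir() {
    # Strip a trailing slash so the archive name is built correctly.
    local data_dir="${1%/}"
    if [ ! -d "$data_dir" ]; then
        echo "not a directory: $data_dir" >&2
        return 1
    fi
    local target
    target="$data_dir.bak.$(date +%Y%m%d%H%M%S)"
    # Refuse to clobber an archive created in the same second.
    if [ -e "$target" ]; then
        echo "already exists: $target" >&2
        return 1
    fi
    mv "$data_dir" "$target" || return 1
    echo "$target"
}
```

Calling archive_data_dir /data/etcd/etcd-server on each node leaves the original path free for a fresh start. On the same filesystem the move is a rename and needs no extra disk space.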

Start the services

# supervisorctl start etcd-server-7-12

# supervisorctl start etcd-server-7-21

# supervisorctl start etcd-server-7-22

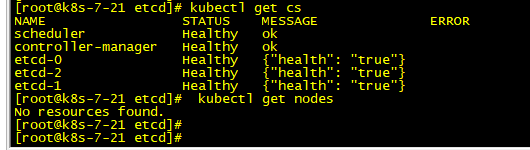

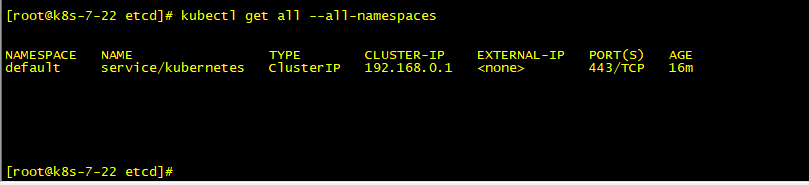

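Once the etcd cluster is healthy again, commands work, but all data is lost

Redeploy services (restore data):

Note: pods, namespaces, services, configmaps, deployments, DaemonSets, ingresses, RBAC, secrets, labels and other data all need to be restored

Grant permissions to the anonymous user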

#kubectl create clusterrolebinding test:anonymous --clusterrole=cluster-admin --user=system:anonymous

Grant permissions and bind roles

Give the k8s-node user the permissions needed to act as a worker node in the cluster

[root@hdss7-21 conf]# kubectl create -f /opt/kubernetes-v1.15.4/server/bin/conf/k8s-node.yaml

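#Add the labels shown under ROLES to set the node role. Both labels can be applied to the same node, and label selectors can use them for scheduling later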

[root@k8s-7-21 cert]# kubectl label node k8s-7-21.host.top node-role.kubernetes.io/master=

[root@k8s-7-21 cert]# kubectl label node k8s-7-21.host.top node-role.kubernetes.io/node=

[root@k8s7-21 cert]# kubectl label node k8s-7-22.host.top node-role.kubernetes.io/master=

[root@k8s7-21 cert]# kubectl label node k8s-7-22.host.top node-role.kubernetes.io/node=

[root@k8s-7-22 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

wmds-7-21.host.top Ready master,node 15h v1.15.2

wmds-7-22.host.top Ready master,node 12m v1.15.2

Write the flannel network config to etcd, using the host-gw backend

On k8s-7-21.host.top:

[root@k8s-7-21 etcd]# /opt/etcd/etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'

Configure harbor registry authentication

[root@hdss7-21 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.itdo.top --docker-username=admin --docker-password=Harbor12345 [email protected] -n kube-system

Deploy coredns

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/coredns/rbac.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/coredns/deployment.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/coredns/svc.yaml

Deploy traefik

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/traefik/rbac.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/traefik/daemonset.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/traefik/svc.yaml

[root@k8s-7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/traefik/ingress.yaml

Deploy dashboard

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dashboard/rbac.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dashboard/secret.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dashboard/configmap.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dashboard/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dashboard/svc.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dashboard/ingress.yaml

Deploy heapster

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dashboard/heapster/rbac.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dashboard/heapster/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.itdo.top/dashboard/heapster/svc.yaml

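Other applications are being redeployed as well o(╥﹏╥)o

Postscript: because no backup of the etcd data had been made, everything that was running before was lost: pods, namespaces, services, configmaps, deployments, DaemonSets, ingresses, RBAC, secrets, labels, taints and all other data.

Resources that had been created from the command line (namespaces, labels, secrets, taints) have to be recreated by rerunning the commands by hand

Resources such as services, configmaps, deployments, DaemonSets, ingresses and RBAC had been deployed from YAML files that I kept archived, so kubectl apply brought those services back quickly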
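
Given that the missing backup was the root cause of the data loss, a periodic snapshot job is the obvious follow-up. The sketch below wraps the etcdctl snapshot save command shown earlier and keeps only the newest few files. The function names, the snapshot file naming, the retention count, and the backup directory are all assumptions for illustration; the etcdctl path and client URL are taken from the transcripts above.

```shell
#!/usr/bin/env bash
# Sketch: take a v3 snapshot and keep only the newest N snapshot files.
# ETCDCTL and ENDPOINT default to values seen in this article; override as needed.
ETCDCTL="${ETCDCTL:-/opt/etcd/etcdctl}"
ENDPOINT="${ENDPOINT:-http://127.0.0.1:2379}"

# Delete the oldest snapshot-*.db files in directory $1 until at most $2 remain.
prune_snapshots() {
    local dir="$1"
    local keep="$2"
    local total=0
    local f
    for f in "$dir"/snapshot-*.db; do
        if [ -f "$f" ]; then total=$((total + 1)); fi
    done
    local excess=$((total - keep))
    # File names embed a sortable timestamp, so the glob lists oldest first.
    for f in "$dir"/snapshot-*.db; do
        [ "$excess" -gt 0 ] || break
        [ -f "$f" ] || continue
        rm -f -- "$f"
        excess=$((excess - 1))
    done
}

# Save a snapshot into directory $1, then prune to $2 files (default 7).
backup_etcd() {
    local dir="$1"
    local keep="${2:-7}"
    mkdir -p "$dir" || return 1
    local file
    file="$dir/snapshot-$(date +%Y%m%d%H%M%S).db"
    ETCDCTL_API=3 "$ETCDCTL" --endpoints "$ENDPOINT" snapshot save "$file" || return 1
    prune_snapshots "$dir" "$keep"
}
```

Run from cron, a call like backup_etcd /data/backup/etcd 7 would keep a week of daily snapshots. Copying the snapshots to a different host matters here, since all three nodes in this incident shared one physical machine.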